This is the multi-page printable view of this section. Click here to print.

2020

STACKIT Kubernetes Engine with Gardener

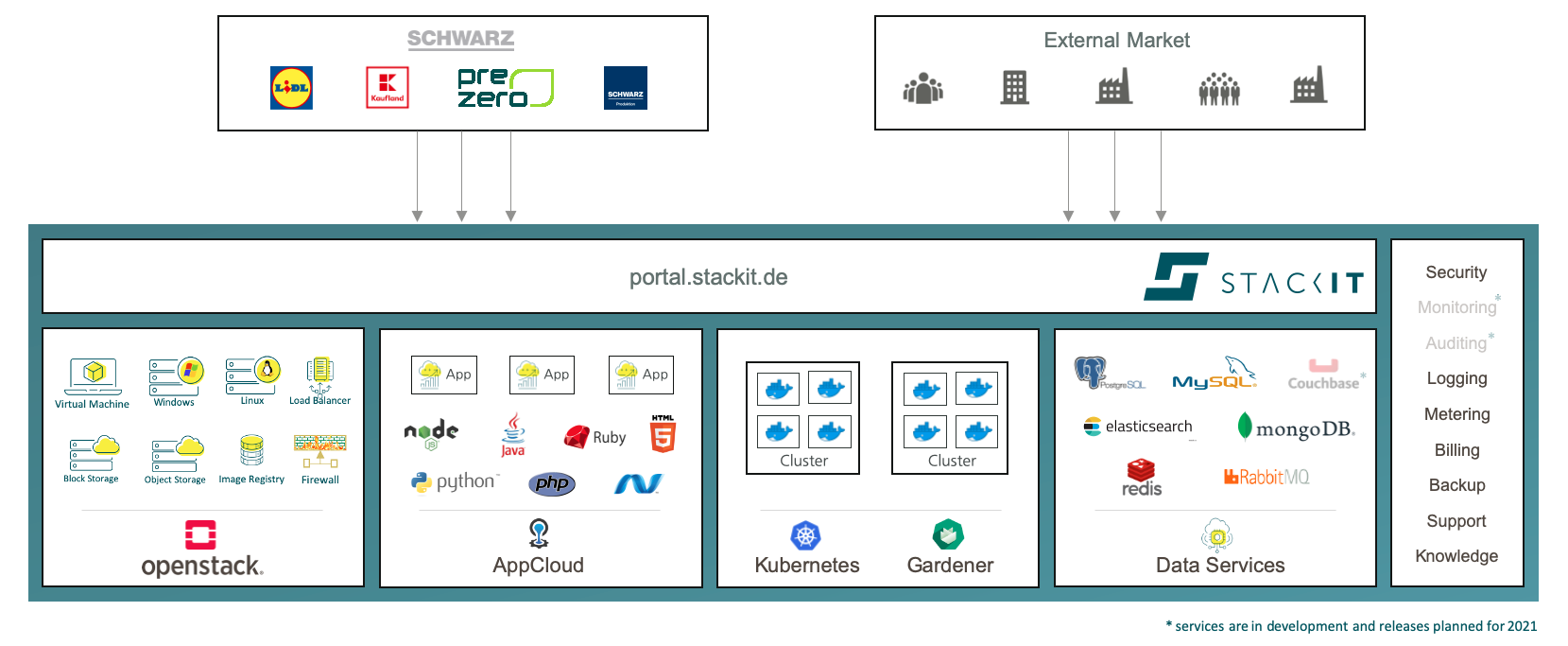

STACKIT is a digital brand of Europe’s biggest retailer, the Schwarz Group, which consists of Lidl, Kaufland, as well as production and recycling companies. Following the industry trend, the Schwarz Group is in the process of a digital transformation. STACKIT enables this transformation by helping to modernize the internal IT of the company branches.

What is STACKIT and the STACKIT Kubernetes Engine (SKE)?

STACKIT started with colocation solutions for internal and external customers in Europe-based data centers, which was then expanded to a full cloud platform stack providing an IaaS layer with VMs, storage and network, as well as a PaaS layer including Cloud Foundry and a growing set of cloud services, like databases, messaging, etc.

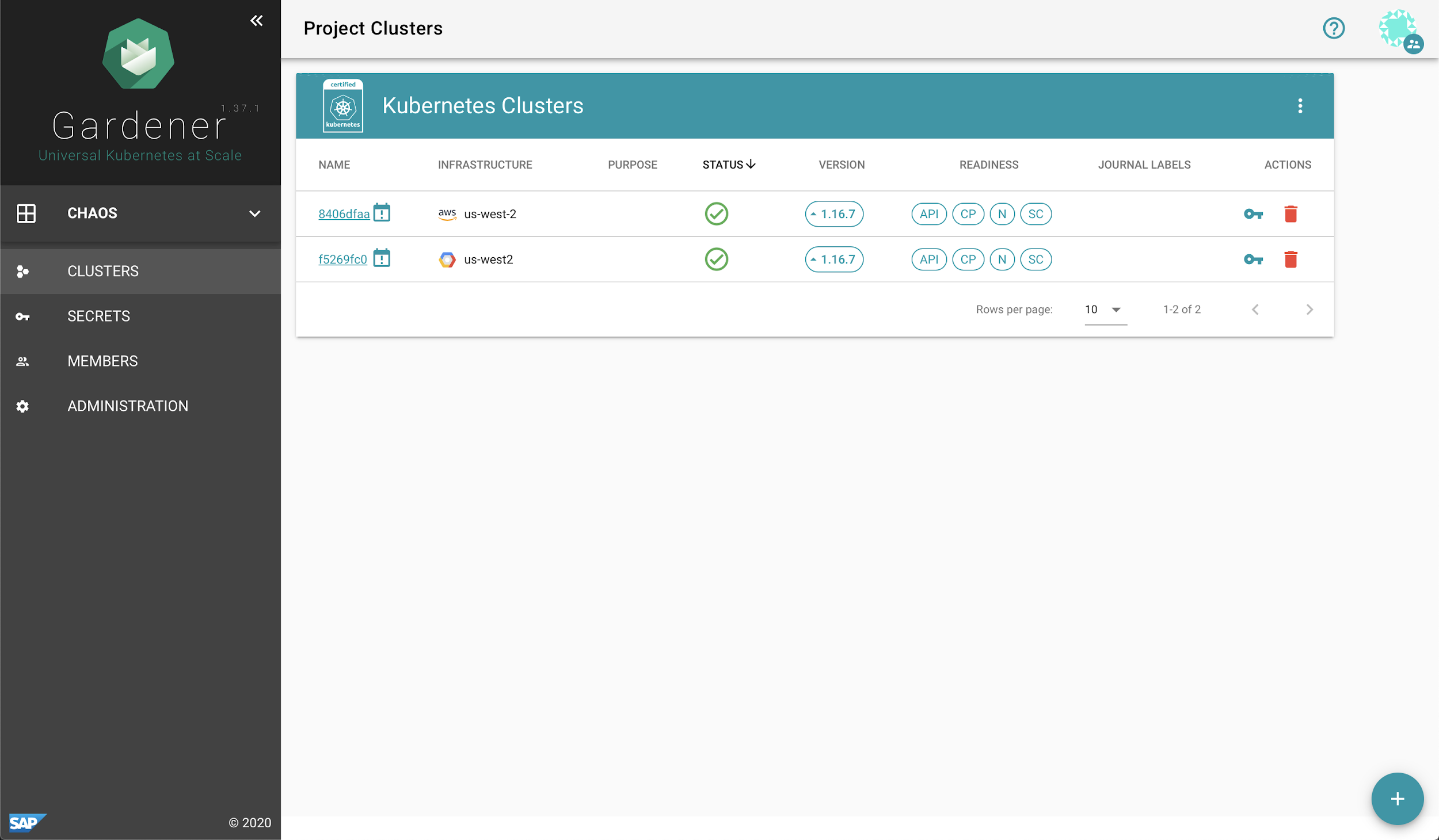

With containers and Kubernetes becoming the lingua franca of the cloud, we are happy to announce the STACKIT Kubernetes Engine (SKE), which has been released as Beta in November this year. We decided to use Gardener as the cluster management engine underneath SKE - for good reasons as you will see – and we would like to share our experiences with Gardener when working on the SKE Beta release, and serve as a testimonial for this technology.

Why We Chose Gardener as a Cluster Management Tool

We started with the Kubernetes endeavor in the beginning of 2020 with a newly formed agile team that consisted of software engineers, highly experienced in IT operations and development. After some exploration and a short conceptual phase, we had a clear-cut opinion on how the cluster management for STACKIT should look like: we were looking for a highly customizable tool that could be adapted to the specific needs of STACKIT and the Schwarz Group, e.g. in terms of network setup or the infrastructure layer it should be running on. Moreover, the tool should be scalable to a high number of managed Kubernetes clusters and should therefore provide a fully automated operation experience. As an open source project, contributing and influencing the tool, as well as collaborating with a larger community were important aspects that motivated us. Furthermore, we aimed to offer cluster management as a self-service in combination with an excellent user experience. Our objective was to have the managed clusters come with enterprise-grade SLAs – i.e. with “batteries included”, as some say.

With this mission, we started our quest through the world of Kubernetes and soon found Gardener to be a hot candidate of cluster management tools that seemed to fulfill our demands. We quickly got in contact and received a warm welcome from the Gardener community. As an interested potential adopter, but in the early days of the COVID-19 lockdown, we managed to organize an online workshop during which we got an introduction and deep dive into Gardener and discussed the STACKIT use cases. We learned that Gardener is extensible in many dimensions, and that contributions are always welcome and encouraged. Once we understood the basic Gardener concepts of Garden, Shoot and Seed clusters, its inception design and how this extends Kubernetes concepts in a natural way, we were eager to evaluate this tool in more detail.

After this evaluation, we were convinced that this tool fulfilled all our requirements - a decision was made and off we went.

How Gardener was Adapted and Extended by SKE

After becoming familiar with Gardener, we started to look into its code base to adapt it to the specific needs of the STACKIT OpenStack environment. Changes and extensions were made in order to get it integrated into the STACKIT environment, and whenever reasonable, we contributed those changes back:

- To run smoothly with the STACKIT OpenStack layer, the Gardener configuration was adapted in different places, e.g. to support CSI driver or to configure the domains of a shoot API server or ingress.

- Gardener was extended to support shoots and shooted seeds in dual stack and dual home setup. This is used in SKE for the communication between shooted seeds and the Garden cluster.

- SKE uses a private image registry for the Gardener installation in order to resolve dependencies to public image registries and to have more control over the used Gardener versions. To install and run Gardener with the private image registry, some new configurations need to be introduced into Gardener.

- Gardener is a first-class API based service what allowed us to smoothly integrate it into the STACKIT User Interface. We were also able to jump-start and utilize the Gardener Dashboard for our Beta release by merely adjusting the look-&-feel, i.e. colors, labels and icons.

Experience with Gardener Operations

As no OpenStack installation is identical to one another, getting Gardener to run stable on the STACKIT IaaS layer revealed some operational challenges. For instance, it was challenging to find the right configuration for Cinder CSI.

To test for its resilience, we tried to break the managed clusters with a Chaos Monkey test, e.g. by deleting services or components needed by Kubernetes and Gardener to work properly. The reconciliation feature of Gardener fixed all those problems automatically, so that damaged Shoot clusters became operational again after a short period of time. Thus, we were not able to break Shoot clusters from an end user perspective permanently, despite our efforts. Which again speaks for Gardener’s first-class cloud native design.

We also participated in a fruitful community support: For several challenges we contacted the community channel and help was provided in a timely manner. A lesson learned was that raising an issue in the community early on, before getting stuck too long on your own with an unresolved problem, is essential and efficient.

Summary

Gardener is used by SKE to provide a managed Kubernetes offering for internal use cases of the Schwarz Group as well as for the public cloud offering of STACKIT. Thanks to Gardener, it was possible to get from zero to a Beta release in only about half a year’s time – this speaks for itself. Within this period, we were able to integrate Gardener into the STACKIT environment, i.e. in its OpenStack IaaS layer, its management tools and its identity provisioning solution.

Gardener has become a vital building block in STACKIT’s cloud native platform offering. For the future, the possibility to manage clusters also on other infrastructures and hyperscalers is seen as another great opportunity for extended use cases. The open co-innovation exchange with the Gardener community member companies has also opened the door to commercial co-operation.

Gardener v1.13 Released

Dear community, we’re happy to announce a new minor release of Gardener, in fact, the 16th in 2020! v1.13 came out just today after a couple of weeks of code improvements and feature implementations. As usual, this blog post provides brief summaries for the most notable changes that we introduce with this version. Behind the scenes (and not explicitly highlighted below) we are progressing on internal code restructurings and refactorings to ease further extensions and to enhance development productivity. Speaking of those: You might be interested in watching the recording of the last Gardener Community Meeting which includes a detailed session for v2 of Terraformer, a complete rewrite in Golang, and improved state handling.

Notable Changes in v1.13

The main themes of Gardener’s v1.13 release are increments for feature gate promotions, scalability and robustness, and cleanups and refactorings. The community plans to continue on those and wants to deliver at least one more release in 2020.

Automatic Quotas for Gardener Resources (gardener/gardener#3072)

Gardener already supports ResourceQuotas since the last release, however, it was still up to operators/administrators to create these objects in project namespaces.

Obviously, in large Gardener installations with thousands of projects, this is a quite challenging task.

With this release, we are shipping an improvement in the Project controller in the gardener-controller-manager that allows operators to automatically create ResourceQuotas based on configuration.

Operators can distinguish via project label selectors which default quotas shall be defined for various projects.

Please find more details at Gardener Controller Manager!

Resource Capacity and Reservations for Seeds (gardener/gardener#3075)

The larger the Gardener landscape, the more seed clusters you require.

Naturally, they have limits of how many shoots they can accommodate (based on constraints of the underlying infrastructure provider and/or seed cluster configuration).

Until this release, there were no means to prevent a seed cluster from becoming overloaded (and potentially die due to this load).

Now you define resource capacity and reservations in the gardenlet’s component configuration, similar to how the kubelet announces allocatable resources for Node objects.

We are defaulting this to 250 shoots, but you might want to adapt this value for your own environment.

Distributed Gardenlet Rollout for Shooted Seeds (gardener/gardener#3135)

With the same motivation, i.e., to improve catering with large landscapes, we allow operators to configure distributed rollouts of gardenlets for shooted seeds.

When a new Gardener version is being deployed in landscapes with a high number of shooted seeds, gardenlets of earlier versions were immediately re-deploying copies of themselves into the shooted seeds they manage.

This leads to a large number of new gardenlet pods that all roughly start at the same time.

Depending on the size of the landscape, this may trouble the gardener-apiservers as all of them are starting to fill their caches and create watches at the same time.

By default, this rollout is now randomized within a 5m time window, i.e., it may take up to 5m until all gardenlets in all seeds have been updated.

Progressing on Beta-Promotion for APIServerSNI Feature Gate (gardener/gardener#3082, gardener/gardener#3143)

The alpha APIServerSNI feature will drastically reduce the costs for load balancers in the seed clusters, thus, it is effectively contributing to Gardener’s “minimal TCO” goal.

In this release we are introducing an important improvement that optimizes the connectivity when pods talk to their control plane by avoiding an extra network hop.

This is realized by a MutatingWebhookConfiguration whose server runs as a sidecar container in the kube-apiserver pod in the seed (only when the APIServerSNI feature gate is enabled).

The webhook injects a KUBERNETES_SERVICE_HOST environment variable into pods in the shoot which prevents the additional network hop to the apiserver-proxy on all worker nodes.

You can read more about it in APIServerSNI environment variable injection.

More Control Plane Configurability (gardener/gardener#3141, gardener/gardener#3139)

A main capability beloved by Gardener users is its openness when it comes to configurability and fine-tuning of the Kubernetes control plane components.

Most managed Kubernetes offerings are not exposing options of the master components, but Gardener’s Shoot API offers a selected set of settings.

With this release we are allowing to change the maximum number of (non-)mutating requests for the kube-apiserver of shoot clusters.

Similarly, the grace period before deleting pods on failed nodes can now be fine-grained for the kube-controller-manager.

Improved Project Resource Handling (gardener/gardener#3137, gardener/gardener#3136, gardener/gardener#3179)

Projects are an important resource in the Gardener ecosystem as they enable collaboration with team members.

A couple of improvements have landed into this release.

Firstly, duplicates in the member list were not validated so far.

With this release, the gardener-apiserver is automatically merging them, and in future releases requests with duplicates will be denied.

Secondly, specific Projects may now be excluded from the stale checks if desired.

Lastly, namespaces for Projects that were adopted (i.e., those that exist before the Project already) will now no longer be deleted when the Project is being deleted.

Please note that this only applies for newly created Projects.

Removal of Deprecated Labels and Annotations (gardener/gardener#3094)

The core.gardener.cloud API group succeeded the old garden.sapcloud.io API group in the beginning of 2020, however, a lot of labels and annotations with the old API group name were still supported.

We have continued with the process of removing those deprecated (but replaced with the new API group name) names.

Concretely, the project labels garden.sapcloud.io/role=project and project.garden.sapcloud.io/name=<project-name> are no longer supported now.

Similarly, the shoot.garden.sapcloud.io/use-as-seed and shoot.garden.sapcloud.io/ignore-alerts annotations got deleted.

We are not finished yet, but we do small increments and plan to progress on the topic until we finally get rid of all artifacts with the old API group name.

NodeLocalDNS Network Policy Rules Adapted (gardener/gardener#3184)

The alpha NodeLocalDNS feature was already introduced and explained with Gardener v1.8 with the motivation to overcome certain bottlenecks with the horizontally auto-scaled CoreDNS in all shoot clusters.

Unfortunately, due to a bug in the network policy rules, it was not working in all environments.

We have fixed this one now, so it should be ready for further tests and investigations.

Come give it a try!

Please bear in mind that this blog post only highlights the most noticeable changes and improvements, but there is a whole bunch more, including a ton of bug fixes in older versions! Come check out the full release notes and share your feedback in Slack!

Case Study: Migrating ETCD Volumes in Production

Note

This is a guest commentary from metal-stack.

metal-stack is a software that provides an API for provisioning and managing physical servers in the data center. To categorize this product, the terms “Metal-as-a-Service” (MaaS) or “bare metal cloud” are commonly used.

One reason that you stumbled upon this blog post could be that you saw errors like the following in your ETCD instances:

etcd-main-0 etcd 2020-09-03 06:00:07.556157 W | etcdserver: read-only range request "key:\"/registry/deployments/shoot--pwhhcd--devcluster2/kube-apiserver\" " with result "range_response_count:1 size:9566" took too long (13.95374909s) to execute

As it turns out, 14 seconds are way too slow for running Kubernetes API servers. It makes them go into a crash loop (leader election fails). Even worse, this whole thing is self-amplifying: The longer a response takes, the more requests queue up, leading to response times increasing further and further. The system is very unlikely to recover. 😞

On Github, you can easily find the reason for this problem. Most probably your disks are too slow (see etcd-io/etcd#10860). So, when you are (like in our case) on GKE and run your ETCD on their default persistent volumes, consider moving from standard disks to SSDs and the error messages should disappear. A guide on how to use SSD volumes on GKE can be found at Using SSD persistent disks.

Case closed? Well. For some people it might be. But when you are seeing this in your Gardener infrastructure, it’s likely that there is something going wrong. The entire ETCD management is fully managed by Gardener, which makes the problem a bit more interesting to look at. This blog post strives to cover topics such as:

- Gardener operating principles

- Gardener architecture and ETCD management

- Pitfalls with multi-cloud environments

- Migrating GCP volumes to a new storage class

We from metal-stack learned quite a lot about the capabilities of Gardener through this problem. We are happy to share this experience with a broader audience. Gardener adopters and operators read on.

How Gardener Manages ETCDs

In our infrastructure, we use Gardener to provision Kubernetes clusters on bare metal machines in our own data centers using metal-stack. Even if the entire stack could be running on-premise, our initial seed cluster and the metal control plane are hosted on GKE. This way, we do not need to manage a single Kubernetes cluster in our entire landscape manually. As soon as we have Gardener deployed on this initial cluster, we can spin up further Seeds in our own data centers through the concept of ManagedSeeds.

To make this easier to understand, let us give you a simplified picture of how our Gardener production setup looks like:

For every shoot cluster, Gardener deploys an individual, standalone ETCD as a stateful set into a shoot namespace. The deployment of the ETCD stateful set is managed by a controller called etcd-druid, which reconciles a special resource of the kind etcds.druid.gardener.cloud. This Etcd resource is getting deployed during the shoot provisioning flow in the gardenlet.

For failure-safety, the etcd-druid deploys the official ETCD container image along with a sidecar project called etcd-backup-restore. The sidecar automatically takes backups of the ETCD and stores them at a cloud provider, e.g. in S3 Buckets, Google Buckets, or similar. In case the ETCD comes up without or with corrupted data, the sidecar looks into the backup buckets and automatically restores the latest backup before ETCD starts up. This entire approach basically takes away the pain for operators to manually have to restore data in the event of data loss.

Note

We found the etcd-backup-restore project very intriguing. It was the inspiration for us to come up with a similar sidecar for the databases we use with metal-stack. This project is called backup-restore-sidecar. We can cope with postgres and rethinkdb database at the moment and more to come. Feel free to check it out when you are interested.

As it’s the nature for multi-cloud applications to act upon a variety of cloud providers, with a single installation of Gardener, it is easily possible to spin up new Kubernetes clusters not only on GCP, but on other supported cloud platforms, too.

When the Gardenlet deploys a resource like the Etcd resource into a shoot namespace, a provider-specific extension-controller has the chance to manipulate it through a mutating webhook. This way, a cloud provider can adjust the generic Gardener resource to fit the provider-specific needs. For every cloud that Gardener supports, there is such an extension-controller. For metal-stack, we also maintain one, called gardener-extension-provider-metal.

Note

A side note for cloud providers: Meanwhile, new cloud providers can be added fully out-of-tree, i.e. without touching any of Gardener’s sources. This works through API extensions and CRDs. Gardener handles generic resources and backpacks provider-specific configuration through raw extensions. When you are a cloud provider on your own, this is really encouraging because you can integrate with Gardener without any burdens. You can find documentation on how to integrate your cloud into Gardener at Adding Cloud Providers and Extensibility Overview.

The Mistake Is in the Deployment

Note

This section contains code examples from Gardener v1.8.

Now that we know how the ETCDs are managed by Gardener, we can come back to the original problem from the beginning of this article. It turned out that the real problem was a misconfiguration in our deployment. Gardener actually does use SSD-backed storage on GCP for ETCDs by default. During reconciliation, the gardener-extension-controller-gcp deploys a storage class called gardener.cloud-fast that enables accessing SSDs on GCP.

But for some reason, in our cluster we did not find such a storage class. And even more interesting, we did not use the gardener-extension-provider-gcp for any shoot reconciliation, only for ETCD backup purposes. And that was the big mistake we made: We reconciled the shoot control plane completely with gardener-extension-provider-metal even though our initial Seed actually runs on GKE and specific parts of the shoot control plane should be reconciled by the GCP extension-controller instead!

This is how the initial Seed resource looked like:

apiVersion: core.gardener.cloud/v1beta1

kind: Seed

metadata:

name: initial-seed

spec:

...

provider:

region: gke

type: metal

...

...

Surprisingly, this configuration was working pretty well for a long time. The initial seed properly produced the Kubernetes control planes of our managed seeds that looked like this:

$ kubectl get controlplanes.extensions.gardener.cloud

NAME TYPE PURPOSE STATUS AGE

fra-equ01 metal Succeeded 85d

fra-equ01-exposure metal exposure Succeeded 85d

And this is another interesting observation: There are two ControlPlane resources. One regular resource and one with an exposure purpose. Gardener distinguishes between two types for this exact reason: Environments where the shoot control plane runs on a different cloud provider than the Kubernetes worker nodes. The regular ControlPlane resource gets reconciled by the provider configured in the Shoot resource, and the exposure type ControlPlane by the provider configured in the Seed resource.

With the existing configuration the gardener-extension-provider-gcp does not kick in and hence, it neither deploys the gardener.cloud-fast storage class nor does it mutate the Etcd resource to point to it. And in the end, we are left with ETCD volumes using the default storage class (which is what we do for ETCD stateful sets in the metal-stack seeds, because our default storage class uses csi-lvm that writes into logical volumes on the SSD disks in our physical servers).

The correction we had to make was a one-liner: Setting the provider type of the initial Seed resource to gcp.

$ kubectl get seed initial-seed -o yaml

apiVersion: core.gardener.cloud/v1beta1

kind: Seed

metadata:

name: initial-seed

spec:

...

provider:

region: gke

type: gcp # <-- here

...

...

This change moved over the control plane exposure reconciliation to the gardener-extension-provider-gcp:

$ kubectl get -n <shoot-namespace> controlplanes.extensions.gardener.cloud

NAME TYPE PURPOSE STATUS AGE

fra-equ01 metal Succeeded 85d

fra-equ01-exposure gcp exposure Succeeded 85d

And boom, after some time of waiting for all sorts of magic reconciliations taking place in the background, the missing storage class suddenly appeared:

$ kubectl get sc

NAME PROVISIONER

gardener.cloud-fast kubernetes.io/gce-pd

standard (default) kubernetes.io/gce-pd

Also, the Etcd resource was now configured properly to point to the new storage class:

$ kubectl get -n <shoot-namespace> etcd etcd-main -o yaml

apiVersion: druid.gardener.cloud/v1alpha1

kind: Etcd

metadata:

...

name: etcd-main

spec:

...

storageClass: gardener.cloud-fast # <-- was pointing to default storage class before!

volumeClaimTemplate: main-etcd

...

Note

Only the

etcd-mainstorage class gets changed togardener.cloud-fast. Theetcd-eventsconfiguration will still point to standard disk storage because this ETCD is much less occupied as compared to theetcd-mainstateful set.

The Migration

Now that the deployment was in place such that this mistake would not repeat in the future, we still had the ETCDs running on the default storage class. The reconciliation does not delete the existing persistent volumes (PVs) on its own.

To bring production back up quickly, we temporarily moved the ETCD pods to other nodes in the GKE cluster. These were nodes which were less occupied, such that the disk throughput was a little higher than before. But surely that was not a final solution.

For a proper solution we had to move the ETCD data out of the standard disk PV into a SSD-based PV.

Even though we had the etcd-backup-restore sidecar, we did not want to fully rely on the restore mechanism to do the migration. The backup should only be there for emergency situations when something goes wrong. Thus, we came up with another approach to introduce the SSD volume: GCP disk snapshots. This is how we did the migration:

- Scale down etcd-druid to zero in order to prevent it from disturbing your migration

- Scale down the kube-apiservers deployment to zero, then wait for the ETCD stateful to take another clean snapshot

- Scale down the ETCD stateful set to zero as well

- (in order to prevent Gardener from trying to bring up the downscaled resources, we used small shell constructs like

while true; do kubectl scale deploy etcd-druid --replicas 0 -n garden; sleep 1; done) - Take a drive snapshot in GCP from the volume that is referenced by the ETCD PVC

- Create a new disk in GCP from the snapshot on a SSD disk

- Delete the existing PVC and PV of the ETCD (oops, data is now gone!)

- Manually deploy a PV into your Kubernetes cluster that references this new SSD disk

- Manually deploy a PVC with the name of the original PVC and let it reference the PV that you have just created

- Scale up the ETCD stateful set and check that ETCD is running properly

- (if something went terribly wrong, you still have the backup from the etcd-backup-restore sidecar, delete the PVC and PV again and let the sidecar bring up ETCD instead)

- Scale up the kube-apiserver deployment again

- Scale up etcd-druid again

- (stop your shell hacks ;D)

This approach worked very well for us and we were able to fix our production deployment issue. And what happened: We have never seen any crashing kube-apiservers again. 🎉

Conclusion

As bad as problems in production are, they are the best way for learning from your mistakes. For new users of Gardener it can be pretty overwhelming to understand the rich configuration possibilities that Gardener brings. However, once you get a hang of how Gardener works, the application offers an exceptional versatility that makes it very much suitable for production use-cases like ours.

This example has shown how Gardener:

- Can handle arbitrary layers of infrastructure hosted by different cloud providers.

- Allows provider-specific tweaks to gain ideal performance for every cloud you want to support.

- Leverages Kubernetes core principles across the entire project architecture, making it vastly extensible and resilient.

- Brings useful disaster recovery mechanisms to your infrastructure (e.g. with etcd-backup-restore).

We hope that you could take away something new through this blog post. With this article we also want to thank the SAP Gardener team for helping us to integrate Gardener with metal-stack. It’s been a great experience so far. 😄 😍

Gardener v1.11 and v1.12 Released

Two months after our last Gardener release update, we are happy again to present release v1.11 and v1.12 in this blog post. Control plane migration, load balancer consolidation, and new security features are just a few topics we progressed with. As always, a detailed list of features, improvements, and bug fixes can be found in the release notes of each release. If you are going to update from a previous Gardener version, please take the time to go through the action items in the release notes.

Notable Changes in v1.12

Release v1.12, fresh from the oven, is shipped with plenty of improvements, features, and some API changes we want to pick up in the next sections.

Drop Functionless DNS Providers (gardener/gardener#3036)

This release drops the support for the so-called functionless DNS providers. Those are providers in a shoot’s specification (.spec.dns.providers) which don’t serve the shoot’s domain (.spec.dns.domain), but are created by Gardener in the seed cluster to serve DNS requests coming from the shoot cluster. If such providers don’t specify a type or secretName, the creation or update request for the corresponding shoot is denied.

Seed Taints (gardener/gardener#2955)

In an earlier release, we reserved a dedicated section in seed.spec.settings as a replacement for disable-capacity-reservation, disable-dns, invisible taints. These already deprecated taints were still considered and synced, which gave operators enough time to switch their integration to the new settings field. As of version v1.12, support for them has been discontinued and they are automatically removed from seed objects. You may use the actual taint names in a future release of Gardener again.

Load Balancer Events During Shoot Reconciliation (gardener/gardener#3028)

As Gardener is capable of managing thousands of clusters, it is crucial to keep operation efforts at a minimum. This release demonstrates this endeavor by further improving error reporting to the end user. During a shoot’s reconciliation, Gardener creates Services of type LoadBalancer in the shoot cluster, e.g. for VPN or Nginx-Ingress addon, and waits for a successful creation. However, in the past we experienced that occurring issues caused by the party creating the load balancer (typically Cloud-Controller-Manager) are only exposed in the logs or as events. Gardener now fetches these event messages and propagates them to the shoot status in case of a failure. Users can then often fix the problem themselves, if for example the failure discloses an exhausted quota on the cloud provider.

KonnectivityTunnel Feature per Shoot(gardener/gardener#3007)

Since release v1.6, Gardener has been capable of reversing the tunnel direction from the seed to the shoot via the KonnectivityTunnel feature gate. With this release we make it possible to control the feature per shoot. We recommend to selectively enable the KonnectivityTunnel, as it is still in alpha state.

Reference Protection (gardener/gardener#2771, gardener/gardener 1708419)

Shoot clusters may refer to external objects, like Secrets for specified DNS providers or they have a reference to an audit policy ConfigMap. Deleting those objects while any shoot still references them causes server errors, often only recoverable by an immense amount of manual operations effort. To prevent such scenarios, Gardener now adds a new finalizer gardener.cloud/reference-protection to these objects and removes it as soon as the object itself becomes releasable. Due to compatibility reasons, we decided that the handling for the audit policy ConfigMaps is delivered as an opt-in feature first, so please familiarize yourself with the necessary settings in the Gardener Controller Manager component config if you already plan to enable it.

Support for Resource Quotas (gardener/gardener#2627)

After the Kubernetes upstream change (kubernetes/kubernetes#93537) for externalizing the backing admission plugin has been accepted, we are happy to announce the support of ResourceQuotas for Gardener offered resource kinds. ResourceQuotas allow you to specify a maximum number of objects per namespace, especially for end-user objects like Shoots or SecretBindings in a project namespace. Even though the admission plugin is enabled by default in the Gardener API Server, make sure the Kube Controller Manager runs the resourcequota controller as well.

Watch Out Developers, Terraformer v2 is Coming! (gardener/gardener#3034)

Although not related only to Gardener core, the preparation towards Terraformer v2 in the extensions library is still an important milestone to mention. With Terraformer v2, Gardener extensions using Terraform scripts will benefit from great consistency improvements. Please check out PR #3034, which demonstrates necessary steps to transition to Terraformer v2 as soon as it’s released.

Notable Changes in v1.11

The Gardener community worked eagerly to deliver plenty of improvements with version v1.11. Those help us to further progress with topics like control plane migration, which is actively being worked on, or to harden our load balancer consolidation (APIServerSNI) feature. Besides improvements and fixes (full list available in release notes), this release contains major features as well, and we don’t want to miss a chance to walk you through them.

Gardener Admission Controller (gardener/gardener#2832), (gardener/gardener#2781)

In this release, all admission related HTTP handlers moved from the Gardener Controller Manager (GCM) to the new component Gardener Admission Controller. The admission controller is rather a small component as opposed to GCM with regards to memory footprint and CPU consumption, and thus allows you to run multiple replicas of it much cheaper than it was before. We certainly recommend specifying the admission controller deployment with more than one replica, since it reduces the odds of a system-wide outage and increases the performance of your Gardener service.

Besides the already known Namespace and Kubeconfig Secret validation, a new admission handler Resource-Size-Validator was added to the admission controller. It allows operators to restrict the size for all kinds of Kubernetes objects, especially sent by end-users to the Kubernetes or Gardener API Server. We address a security concern with this feature to prevent denial of service attacks in which an attacker artificially increases the size of objects to exhaust your object store, API server caches, or to let Gardener and Kubernetes controllers run out-of-memory. The documentation reveals an approach of finding the right resource size for your setup and why you should create exceptions for technical users and operators.

Deferring Shoot Progress Reporting (gardener/gardener#2909)

Shoot progress reporting is the continuous update process of a shoot’s .status.lastOperation field while the shoot is being reconciled by Gardener. Many steps are involved during reconciliation and depending on the size of your setup, the updates might become an issue for the Gardener API Server, which will refrain from processing further requests for a certain period.

With .controllers.shoot.progressReportPeriod in Gardenlet’s component configuration, you can now delay these updates for the specified period.

New Policy for Controller Registrations (gardener/gardener#2896)

A while ago, we added support for different policies in ControllerRegistrations which determine under which circumstances the deployments of registration controllers happen in affected seed clusters. If you specify the new policy AlwaysExceptNoShoots, the respective extension controller will be deployed to all seed cluster hosting at least one shoot cluster. After all shoot clusters from a seed are gone, the extension deployment will be deleted again.

A full list of supported policies can be found at Registering Extension Controllers.

Gardener Integrates with KubeVirt

The Gardener team is happy to announce that Gardener now offers support for an additional, often requested, infrastructure/virtualization technology, namely KubeVirt! Gardener can now provide Kubernetes-conformant clusters using KubeVirt managed Virtual Machines in the environment of your choice. This integration has been tested and works with any qualified Kubernetes (provider) cluster that is compatibly configured to host the required KubeVirt components, in particular for example Red Hat OpenShift Virtualization.

Gardener enables Kubernetes consumers to centralize and operate efficiently homogenous Kubernetes clusters across different IaaS providers and even private environments. This way the same cloud-based application version can be hosted and operated by its vendor or consumer on a variety of infrastructures. When a new customer or your development team demands for a new infrastructure provider, Gardener helps you to quickly and easily on-board your workload. Furthermore, on this new infrastructure, Gardener keeps the seamless Kubernetes management experience for your Kubernetes operators, while upholding the consistency of the CI/CD pipeline of your software development team.

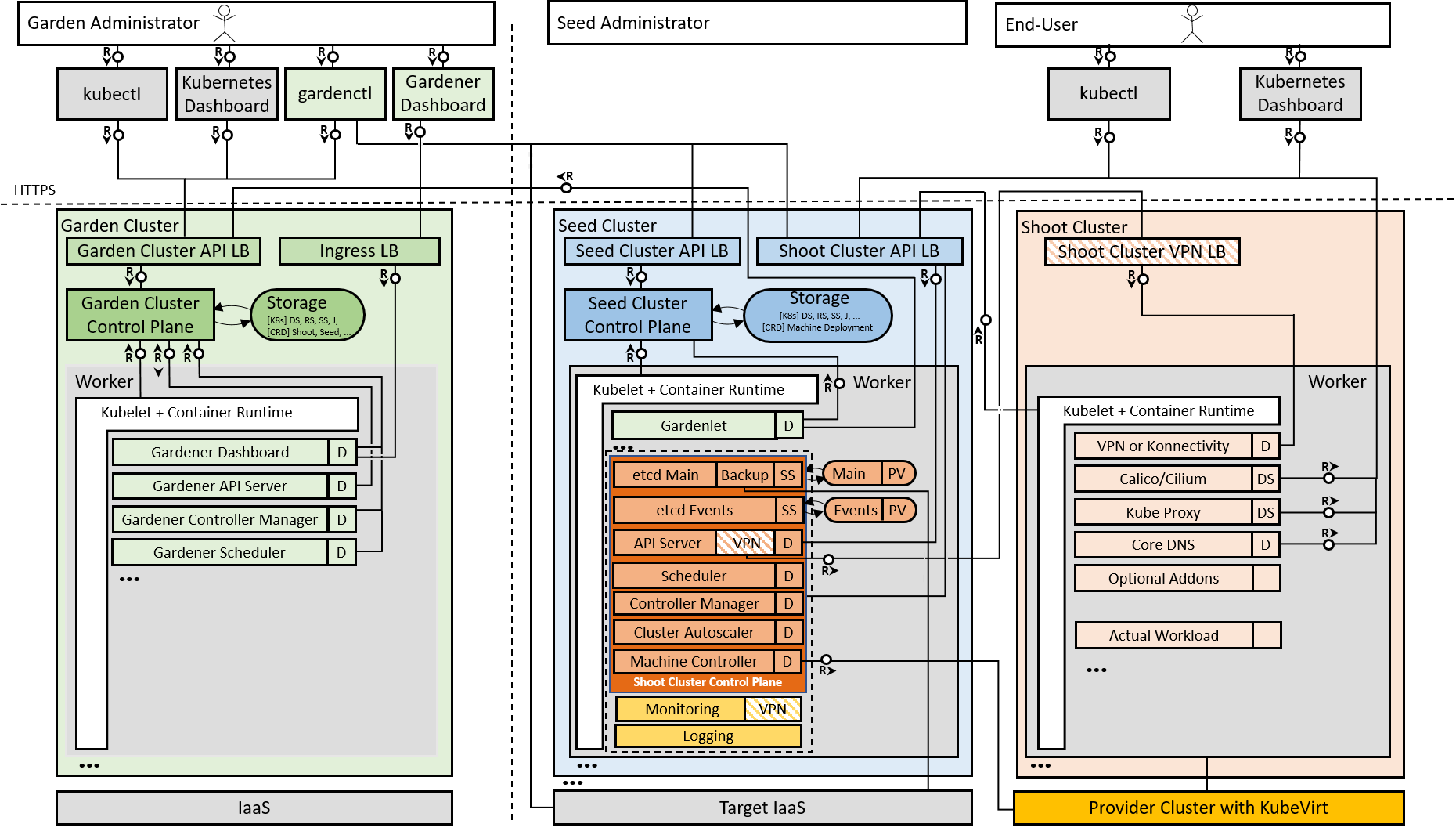

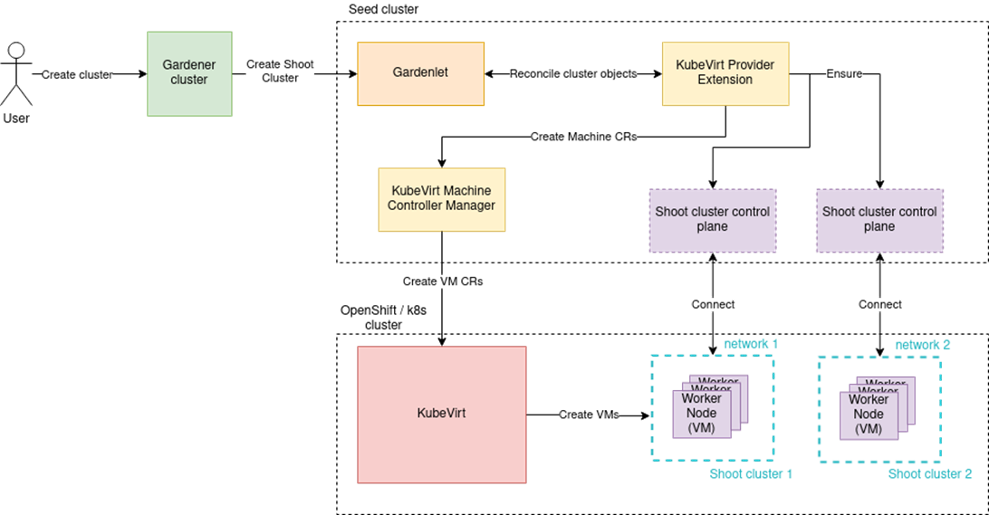

Architecture and Workflow

Gardener is based on the idea of three types of clusters – Garden cluster, Seed cluster and Shoot cluster (see Figure 1). The Garden cluster is used to control the entire Kubernetes environment centrally in a highly scalable design. The highly available seed clusters are used to host the end users (shoot) clusters’ control planes. Finally, the shoot clusters consist only of worker nodes to host the cloud native applications.

An integration of the Gardener open source project with a new cloud provider follows a standard Gardener extensibility approach. The integration requires two new components: a provider extension and a Machine Controller Manager (MCM) extension. Both components together enable Gardener to instruct the new cloud provider. They run in the Gardener seed clusters that host the control planes of the shoots based on that cloud provider. The role of the provider extension is to manage the provider-specific aspects of the shoot clusters’ lifecycle, including infrastructure, control plane, worker nodes, and others. It works in cooperation with the MCM extension, which in particular is responsible to handle machines that are provisioned as worker nodes for the shoot clusters. To get this job done, the MCM extension leverages the VM management/API capabilities available with the respective cloud provider.

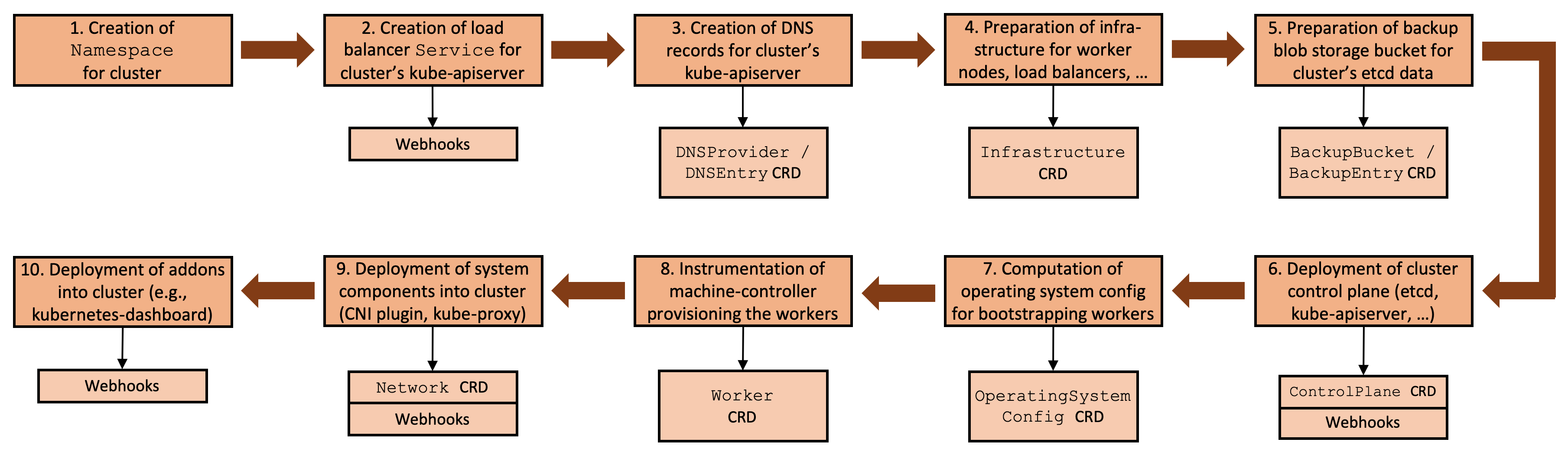

Setting up a Kubernetes cluster always involves a flow of interdependent steps (see Figure 2), beginning with the generation of certificates and preparation of the infrastructure, continuing with the provisioning of the control plane and the worker nodes, and ending with the deployment of system components. Gardener can be configured to utilize the KubeVirt extensions in its generic workflow at the right extension points, and deliver the desired outcome of a KubeVirt backed cluster.

Gardener Integration with KubeVirt in Detail

Integration with KubeVirt follows the Gardener extensibility concept and introduces the two new components mentioned above: the KubeVirt Provider Extension and the KubeVirt Machine Controller Manager (MCM) Extension.

The KubeVirt Provider Extension consists of three separate controllers that handle respectively the infrastructure, the control plane, and the worker nodes of the shoot cluster.

The Infrastructure Controller configures the network communication between the shoot worker nodes. By default, shoot worker nodes only use the provider cluster’s pod network. To achieve higher level of network isolation and better performance, it is possible to add more networks and replace the default pod network with a different network using container network interface (CNI) plugins available in the provider cluster. This is currently based on Multus CNI and NetworkAttachmentDefinitions.

Example infrastructure configuration in a shoot definition:

provider:

type: kubevirt

infrastructureConfig:

apiVersion: kubevirt.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

tenantNetworks:

- name: network-1

config: |

{

"cniVersion": "0.4.0",

"name": "bridge-firewall",

"plugins": [

{

"type": "bridge",

"isGateway": true,

"isDefaultGateway": true,

"ipMasq": true,

"ipam": {

"type": "host-local",

"subnet": "10.100.0.0/16"

}

},

{

"type": "firewall"

}

]

}

default: true

The Control Plane Controller deploys a Cloud Controller Manager (CCM). This is a Kubernetes control plane component that embeds cloud-specific control logic. As any other CCM, it runs the Node controller that is responsible for initializing Node objects, annotating and labeling them with cloud-specific information, obtaining the node’s hostname and IP addresses, and verifying the node’s health. It also runs the Service controller that is responsible for setting up load balancers and other infrastructure components for Service resources that require them.

Finally, the Worker Controller is responsible for managing the worker nodes of the Gardener shoot clusters.

Example worker configuration in a shoot definition:

provider:

type: kubevirt

workers:

- name: cpu-worker

minimum: 1

maximum: 2

machine:

type: standard-1

image:

name: ubuntu

version: "18.04"

volume:

type: default

size: 20Gi

zones:

- europe-west1-c

For more information about configuring the KubeVirt Provider Extension as an end-user, see Using the KubeVirt provider extension with Gardener as end-user.

Enabling Your Gardener Setup to Leverage a KubeVirt Compatible Environment

The very first step required is to define the machine types (VM types) for VMs that will be available. This is achieved via the CloudProfile custom resource. The machine types configuration includes details such as CPU, GPU, memory, OS image, and more.

Example CloudProfile custom resource:

apiVersion: core.gardener.cloud/v1beta1

kind: CloudProfile

metadata:

name: kubevirt

spec:

type: kubevirt

providerConfig:

apiVersion: kubevirt.provider.extensions.gardener.cloud/v1alpha1

kind: CloudProfileConfig

machineImages:

- name: ubuntu

versions:

- version: "18.04"

sourceURL: "https://cloud-images.ubuntu.com/bionic/current/bionic-server-cloudimg-amd64.img"

kubernetes:

versions:

- version: "1.18.5"

machineImages:

- name: ubuntu

versions:

- version: "18.04"

machineTypes:

- name: standard-1

cpu: "1"

gpu: "0"

memory: 4Gi

volumeTypes:

- name: default

class: default

regions:

- name: europe-west1

zones:

- name: europe-west1-b

- name: europe-west1-c

- name: europe-west1-d

Once a machine type is defined, it can be referenced in shoot definitions. This information is used by the KubeVirt Provider Extension to generate MachineDeployment and MachineClass custom resources required by the KubeVirt MCM extension for managing the worker nodes of the shoot clusters during the reconciliation process.

For more information about configuring the KubeVirt Provider Extension as an operator, see Using the KubeVirt provider extension with Gardener as operator.

KubeVirt Machine Controller Manager (MCM) Extension

The KubeVirt MCM Extension is responsible for managing the VMs that are used as worker nodes of the Gardener shoot clusters using the virtualization capabilities of KubeVirt. This extension handles all necessary lifecycle management activities, such as machines creation, fetching, updating, listing, and deletion.

The KubeVirt MCM Extension implements the Gardener’s common driver interface for managing VMs in different cloud providers. As already mentioned, the KubeVirt MCM Extension is using the MachineDeployments and MachineClasses – an abstraction layer that follows the Kubernetes native declarative approach - to get instructions from the KubeVirt Provider Extension about the required machines for the shoot worker nodes. Also, the cluster austoscaler integrates with the scale subresource of the MachineDeployment resource. This way, Gardener offers a homogeneous autoscaling experience across all supported providers.

When a new shoot cluster is created or when a new worker node is needed for an existing shoot cluster, a new Machine will be created, and at that time, the KubeVirt MCM extension will create a new KubeVirt VirtualMachine in the provider cluster. This VirtualMachine will be created based on a set of configurations in the MachineClass that follows the specification of the KubeVirt provider.

The KubeVirt MCM Extension has two main components. The MachinePlugin is responsible for handling the machine objects, and the PluginSPI is in charge of making calls to the cloud provider interface, to manage its resources.

As shown in Figure 4, the MachinePlugin receives a machine request from the MCM and starts its processing by decoding the request, doing partial validation, extracting the relevant information, and sending it to the PluginSPI.

The PluginSPI then creates, gets, or deletes VirtualMachines depending on the method called by the MachinePlugin. It extracts the kubeconfig of the provider cluster and handles all other required KubeVirt resources such as the secret that holds the cloud-init configurations, and DataVolumes that are mounted as disks to the VMs.

Supported Environments

The Gardener KubeVirt support is currently qualified on:

- KubeVirt v0.32.0 (and later)

- Red Hat OpenShift Container Platform 4.4 (and later)

There are also plans for further improvements and new features, for example integration with CSI drivers for storage management. Details about the implementation progress can be found in the Gardener project on GitHub.

You can find further resources about the open source project Gardener at https://gardener.cloud.

Shoot Reconciliation Details

Do you want to understand how Gardener creates and updates Kubernetes clusters (Shoots)? Well, it’s complicated, but if you are not afraid of large diagrams and are a visual learner like me, this might be useful to you.

Introduction

In this blog post I will share a technical diagram which attempts to tie together the various components involved when Gardener creates a Kubernetes cluster. I have created and curated the diagram, which visualizes the Shoot reconciliation flow since I started developing on Gardener. Aside from serving as a memory aid for myself, I created it in hopes that it may potentially help contributors to understand a core piece of the complex Gardener machinery. Please be advised that the diagram and components involved are large. Although it can be easily divided into multiple diagrams, I want to show all the components and connections in a single diagram to create an overview of the reconciliation flow.

The goal is to visualize the interactions of the components involved in the Shoot creation. It is not intended to serve as a documentation of every component involved.

Background

Taking a step back, the Gardener README states:

In essence, Gardener is an extension API server that comes along with a bundle of custom controllers. It introduces new API objects in an existing Kubernetes cluster (which is called a garden cluster) in order to use them for the management of end-user Kubernetes clusters (which are called shoot clusters). These shoot clusters are described via declarative cluster specifications which are observed by the controllers. They will bring up the clusters, reconcile their state, perform automated updates and make sure they are always up and running.

This means that Gardener, just like any Kubernetes controller, creates Kubernetes clusters (Shoots) using a reconciliation loop.

The Gardenlet contains the controller and reconciliation loop responsible for the creation, update, deletion, and migration of Shoot clusters (there are more, but we spare them in this article). In addition, the Gardener Controller Manager also reconciles Shoot resources, but only for seed-independent functionality such as Shoot hibernation, Shoot maintenance or quota control.

This blog post is about the reconciliation loop in the Gardenlet responsible for creating and updating Shoot clusters. The code can be found in the gardener/gardener repository. The reconciliation loops of the extension controllers can be found in their individual repositories.

Shoot Reconciliation Flow Diagram

When Gardner creates a Shoot cluster, there are three conceptual layers involved: the Garden cluster, the Seed cluster and the Shoot cluster. Each layer represents a top-level section in the diagram (similar to a lane in a BPMN diagram).

It might seem confusing that the Shoot cluster itself is a layer, because the whole flow in the first place is about creating the Shoot cluster. I decided to introduce this separate layer to make a clear distinction between which resources exist in the Seed API server (managed by Gardener) and which in the Shoot API server (accessible by the Shoot owner).

Each section contains several components. Components are mostly Kubernetes resources in a Gardener installation (e.g. the gardenlet deployment in the Seed cluster).

This is the list of components:

(Virtual) Garden Cluster

- Gardener Extension API server

- Validating Provider Webhooks

- Project Namespace

Seed Cluster

- Gardenlet

- Seed API server

- every Shoot Control Plane has a dedicated namespace in the Seed.

- Cloud Provider (owned by Stakeholder)

- Arguably part of the Shoot cluster but used by components in the Seed cluster to create the infrastructure for the Shoot.

- Gardener DNS extension

- Provider Extension (such as gardener-extension-provider-aws)

- Gardener Extension ETCD Druid

- Gardener Resource Manager

- Operating System Extension (such as gardener-extension-os-gardenlinux)

- Networking Extension (such as gardener-extension-networking-cilium)

- Machine Controller Manager

- ContainerRuntime extension (such as gardener-extension-runtime-gvisor)

- Shoot API server (in the Shoot Namespace in the Seed cluster)

Shoot Cluster

- Cloud Provider Compute API (owned by Stakeholder) - for VM/Node creation.

- VM / Bare metal node hosted by Cloud Provider (in Stakeholder owned account).

How to Use the Diagram

The diagram:

- should be read from top to bottom - starting in the top left corner with the creation of the Shoot resource via the Gardener Extension API server.

- should not require an encompassing documentation / description. More detailed documentation on the components itself can usually be found in the respective repository.

- does not show which activities execute in parallel (many) and also does not describe the exact dependencies between the steps. This can be found out by looking at the source code. It however tries to put the activities in a logical order of execution during the reconciliation flow.

Occasionally, there is an info box with additional information next to parts in the diagram that in my point of view require further explanation. Large example resource for the Gardener CRDs (e.g Worker CRD, Infrastructure CRD) are placed on the left side and are referenced by a dotted line (—–).

Be aware that Gardener is an evolving project, so the diagram will most likely be already outdated by the time you are reading this. Nevertheless, it should give a solid starting point for further explorations into the details of Gardener.

Flow Diagram

The diagram can be found below and on GitHub. There are multiple formats available (svg, vsdx, draw.io, html).

Please open an issue or open a PR in the repository if information is missing or is incorrect. Thanks!

Gardener v1.9 and v1.10 Released

Summer holidays aren’t over yet, still, the Gardener community was able to release two new minor versions in the past weeks. Despite being limited in capacity these days, we were able to reach some major milestones, like adding Kubernetes v1.19 support and the long-delayed automated gardenlet certificate rotation. Whilst we continue to work on topics related to scalability, robustness, and better observability, we agreed to adjust our focus a little more into the areas of development productivity, code quality and unit/integration testing for the upcoming releases.

Notable Changes in v1.10

Gardener v1.10 was a comparatively small release (measured by the number of changes) but it comes with some major features!

Kubernetes 1.19 Support (gardener/gardener#2799)

The newest minor release of Kubernetes is now supported by Gardener (and all the maintained provider extensions)! Predominantly, we have enabled CSI migration for OpenStack now that it got promoted to beta, i.e. 1.19 shoots will no longer use the in-tree Cinder volume provisioner. The CSI migration enablement for Azure got postponed (to at least 1.20) due to some issues that the Kubernetes community is trying to fix in the 1.20 release cycle. As usual, the 1.19 release notes should be considered before upgrading your shoot clusters.

Automated Certificate Rotation for gardenlet (gardener/gardener#2542)

Similar to the kubelet, the gardenlet supports TLS bootstrapping when deployed into a new seed cluster.

It will request a client certificate for the garden cluster using the CertificateSigningRequest API of Kubernetes and store the generated results in a Secret object in the garden namespace of its seed.

These certificates are usually valid for one year.

We have now added support for automatic renewals if the expiration dates are approaching.

Improved Monitoring Alerts (gardener/gardener#2776)

We have worked on a larger refactoring to improve reliability and accuracy of our monitoring alerts for both shoot control planes in the seed, as well as shoot system components running on worker nodes. The improvements are primarily for operators and should result in less false positive alerts. Also, the alerts should fire less frequently and are better grouped in order to reduce to overall amount of alerts.

Seed Deletion Protection (gardener/gardener#2732)

Our validation to improve robustness and countermeasures against accidental mistakes has been improved.

Earlier, it was possible to remove the use-as-seed annotation for shooted seeds or directly set the deletionTimestamp on Seed objects, despite of the fact that they might still run shoot control planes.

Seed deletion would not start in these cases, although, it would disrupt the system unnecessarily, and result in some unexpected behaviour.

The Gardener API server is now forbidding such requests if the seeds are not completely empty yet.

Logging Improvements for Loki (multiple PRs)

After we released our large logging stack refactoring (from EFK to Loki) with Gardener v1.8, we have continued to work on reliability, quality and user feedback in general.

We aren’t done yet, though, Gardener v1.10 includes a bunch of improvements which will help to graduate the Logging feature gate to beta and GA, eventually.

Notable Changes in v1.9

The v1.9 release contained tons of small improvements and adjustments in various areas of the code base and a little less new major features. However, we don’t want to miss the opportunity to highlight a few of them.

CRI Validation in CloudProfiles (gardener/gardener#2137)

A couple of releases back we have introduced support for containerd and the ContainerRuntime extension API.

The supported container runtimes are operating system specific, and until now it wasn’t possible for end-users to easily figure out whether they can enable containerd or other ContainerRuntime extensions for their shoots.

With this change, Gardener administrators/operators can now provide that information in the .spec.machineImages section in the CloudProfile resource.

This also allows for enhanced validation and prevents misconfigurations.

New Shoot Event Controller (gardener/gardener#2649)

The shoot controllers in both the gardener-controller-manager and gardenlet fire several Events for some important operations (e.g., automated hibernation/wake-up due to hibernation schedule, automated Kubernetes/machine image version update during maintenance, etc.).

Earlier, the only way to prolong the lifetime of these events was to modify the --event-ttl command line parameter of the garden cluster’s kube-apiserver.

This came with the disadvantage that all events were kept for a longer time (not only those related to Shoots that an operator is usually interested in and ideally wants to store for a couple of days).

The new shoot event controller allows to achieve this by deleting non-shoot events.

This helps operators and end-users to better understand which changes were applied to their shoots by Gardener.

Early Deployment of the Logging Stack for New Shoots (gardener/gardener#2750)

Since the first introduction of the Logging feature gate two years back, the logging stack was only deployed at the very end of the shoot creation.

This had the disadvantage that control plane pod logs were not kept in case the shoot creation flow is interrupted before the logging stack could be deployed.

In some situations, this was preventing fetching relevant information about why a certain control plane component crashed.

We now deploy the logging stack very early in the shoot creation flow to always have access to such information.

Gardener v1.8.0 Released

Even if we are in the midst of the summer holidays, a new Gardener release came out yesterday: v1.8.0! It’s main themes are the large change of our logging stack to Loki (which was already explained in detail on a blog post on grafana.com), more configuration options to optimize the utilization of a shoot, node-local DNS, new project roles, and significant improvements for the Kubernetes client that Gardener uses to interact with the many different clusters.

Notable Changes

Logging 2.0: EFK Stack Replaced by Loki (gardener/gardener#2515)

Since two years or so, Gardener could optionally provision a dedicated logging stack per seed and per shoot which was based on fluent-bit, fluentd, ElasticSearch and Kibana. This feature was still hidden behind an alpha-level feature gate and never got promoted to beta so far. Due to various limitations of this solution, we decided to replace the EFK stack with Loki. As we already have Prometheus and Grafana deployments for both users and operators by default for all clusters, the choice was just natural. Please find out more on this topic at this dedicated blog post.

Cluster Identities and DNSOwner Objects (gardener/gardener#2471, gardener/gardener#2576)

The shoot control plane migration topic is ongoing since a few months already, and we are very much progressing with it. A first alpha version will probably make it out soon. As part of these endeavors, we introduced cluster identities and the usage of DNSOwner objects in this release. Both are needed to gracefully migrate the DNSEntry extension objects from the old seed to the new seed as part of the control plane migration process.

Please find out more on this topic at this blog post.

New uam Role for Project Members to Limit User Access Management Privileges (gardener/gardener#2611)

In order to allow external user access management system to integrate with Gardener and to fulfil certain compliance aspects, we have introduced a new role called uam for Project members (next to admin and viewer). Only if a user has this role, then he/she is allowed to add/remove other human users to the respective Project. By default, all newly created Projects assign this role only to the owner while, for backwards-compatibility reasons, it will be assigned for all members for existing projects. Project owners can steadily revoke this access as desired.

Interestingly, the uam role is backed by a custom RBAC verb called manage-members, i.e., the Gardener API server is only admitting changes to the human Project members if the respective user is bound to this RBAC verb.

New Node-Local DNS Feature for Shoots (gardener/gardener#2528)

By default, we are using CoreDNS as DNS plugin in shoot clusters which we auto-scale horizontally using HPA. However, in some situations we are discovering certain bottlenecks with it, e.g., unreliable UDP connections, unnecessary node hopping, inefficient load balancing, etc.

To further optimize the DNS performance for shoot clusters, it is now possible to enable a new alpha-level feature gate in the gardenlet’s componentconfig: NodeLocalDNS. If enabled, all shoots will get a new DaemonSet to run a DNS server on each node.

More kubelet and API Server Configurability (gardener/gardener#2574, gardener/gardener#2668)

One large benefit of Gardener is that it allows you to optimize the usage of your control plane as well as worker nodes by exposing relevant configuration parameters in the Shoot API.

In this version, we are adding support to configure kubelet’s values for systemReserved and kubeReserved resources as well as the kube-apiserver’s watch cache sizes.

This allows end-users to get to better node utilization and/or performance for their shoot clusters.

Configurable Timeout Settings for machine-controller-manager (gardener/gardener#2563)

One very central component in Project Gardener is the machine-controller-manager for managing the worker nodes of shoot clusters. It has extensive qualities with respect to node lifecycle management and rolling updates. As such, it uses certain timeout values, e.g. when creating or draining nodes, or when checking their health.

Earlier, those were not customizable by end-users, but we are adding this possibility now. You can fine-grain these settings per worker pool in the Shoot API such that you can optimize the lifecycle management of your worker nodes even more!

Improved Usage of Cached Client to Reduce Network I/O (gardener/gardener#2635, gardener/gardener#2637)

In the last Gardener release v1.7 we have introduced a huge refactoring the clients that we use to interact with the many different Kubernetes clusters. This is to further optimize the network I/O performed by leveraging watches and caches as good as possible. It’s still an alpha-level feature that must be explicitly enabled in the Gardenlet’s component configuration, though, with this release we have improved certain things in order to pave the way for beta promotion. For example, we were initially also using a cached client when interacting with shoots. However, as the gardenlet runs in the seed as well (and thus can communicate cluster-internally with the kube-apiservers of the respective shoots) this cache is not necessary and just memory overhead. We have removed it again and saw the memory usage getting lower again. More to come!

AWS EBS Volume Encryption by Default (gardener/gardener-extension-provider-aws#147)

The Shoot API already exposed the possibility to encrypt the root disks of worker nodes since quite a while, but it was disabled by default (for backwards-compatibility reasons). With this release we have change this default, so new shoot worker nodes will be provisioned with encrypted root disks out-of-the-box. However, the g4dn instance types of AWS don’t support this encryption, so when you use them you have to explicitly disable the encryption in the worker pool configuration.

Liveness Probe for Gardener API Server Deployment (gardener/gardener#2647)

A small, but very valuable improvement is the introduction of a liveness probe for our Gardener API server. As it’s built with the same library like the Kubernetes API server, it exposes two endpoints at /livez and /readyz which were created exactly for the purpose of live- and readiness probes.

With Gardener v1.8, the Helm chart contains a liveness probe configuration by default, and we are awaiting an upstream fix (kubernetes/kubernetes#93599) to also enable the readiness probe. This will help in a smoother rolling update of the Gardener API server pods, i.e., preventing clients from talking to a not yet initialized or already terminating API server instance.

Webhook Ports Changed to Enable OpenShift (gardener/gardener#2660)

In order to make it possible to run Gardener on OpenShift clusters as well, we had to make a change in the port configuration for the webhooks we are using in both Gardener and the extension controllers. Earlier, all the webhook servers directly exposed port 443, i.e., a system port which is a security concern and disallowed in OpenShift. We have changed this port now across all places and also adapted our network policies accordingly. This is most likely not the last necessary change to enable this scenario, however, it’s a great improvement to push the project forward.

If you’re interested in more details and even more improvements, you can read all the release notes for Gardener v1.8.0.

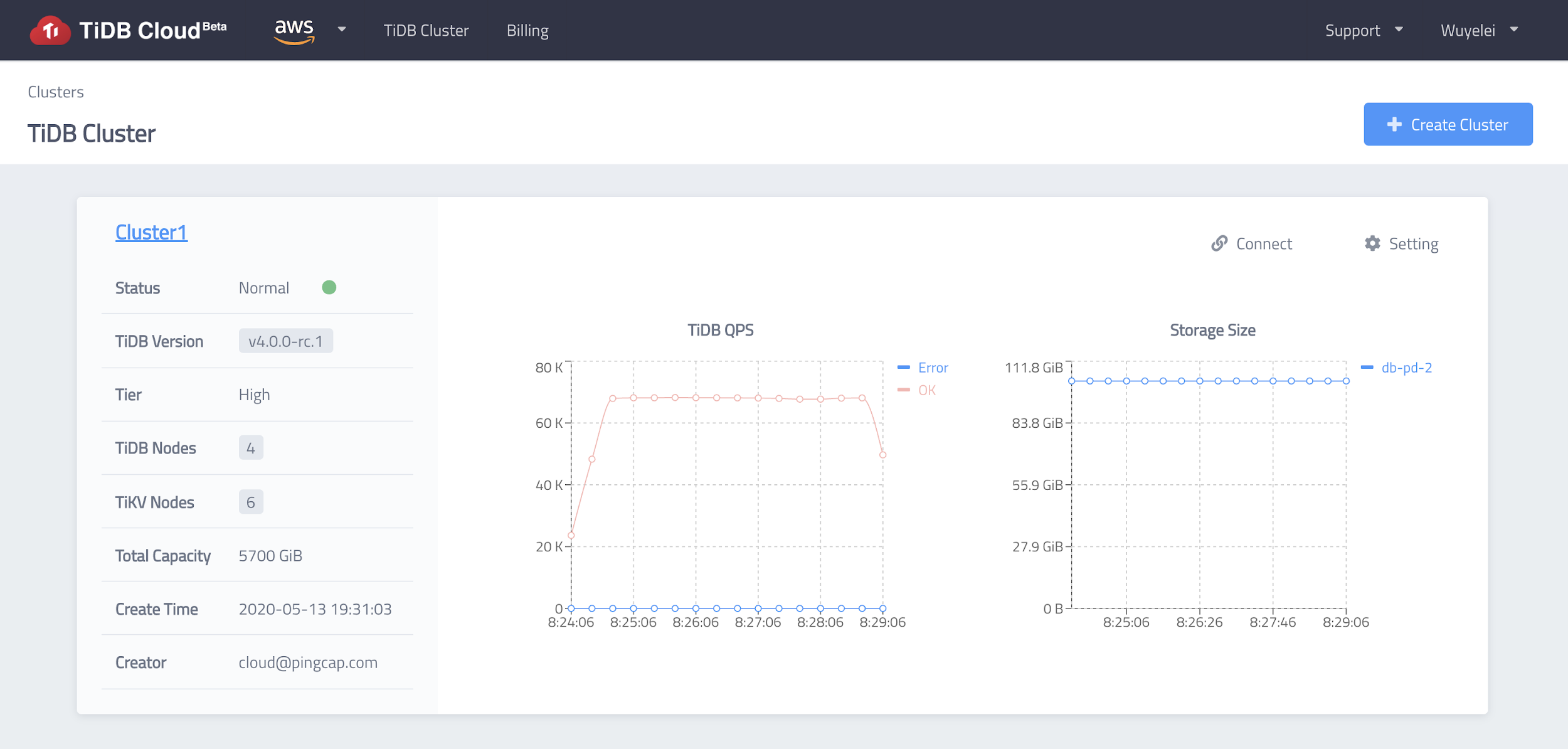

PingCAP’s Experience in Implementing Their Managed TiDB Service with Gardener

Gardener is showing successful collaboration with its growing community of contributors and adopters. With this come some success stories, including PingCAP using Gardener to implement its managed service.

About PingCAP and Its TiDB Cloud

PingCAP started in 2015, when three seasoned infrastructure engineers working at leading Internet companies got sick and tired of the way databases were managed, scaled and maintained. Seeing no good solution on the market, they decided to build their own - the open-source way. With the help of a first-class team and hundreds of contributors from around the globe, PingCAP is building a distributed NewSQL, hybrid transactional and analytical processing (HTAP) database.

Its flagship project, TiDB, is a cloud-native distributed SQL database with MySQL compatibility, and one of the most popular open-source database projects - with 23.5K+ stars and 400+ contributors. Its sister project TiKV is a Cloud Native Interactive Landscape project.

PingCAP envisioned their managed TiDB service, known as TiDB Cloud, to be multi-tenant, secure, cost-efficient, and to be compatible with different cloud providers. As a result, the company turned to Gardener to build their managed TiDB cloud service offering.

Limitations with Other Public Managed Kubernetes Services

Previously, PingCAP encountered issues while using other public managed K8s cluster services, to develop the first version of its TiDB Cloud. Their worst pain point was that they felt helpless when encountering certain malfunctions. PingCAP wasn’t able to do much to resolve these issues, except waiting for the providers’ help. More specifically, they experienced problems due to cloud-provider specific Kubernetes system upgrades, delays in the support response (which could be avoided in exchange of a costly support fee), and no control over when things got fixed.

There was also a lot of cloud-specific integration work needed to follow a multi-cloud strategy, which proved to be expensive both to produce and maintain. With one of these managed K8s services, you would have to integrate the instance API, as opposed to a solution like Gardener, which provides a unified API for all clouds. Such a unified API eliminates the need to worry about cloud specific-integration work altogether.

Why PingCAP Chose Gardener to Build TiDB Cloud

“Gardener has similar concepts to Kubernetes. Each Kubernetes cluster is just like a Kubernetes pod, so the similar concepts apply, and the controller pattern makes Gardener easy to manage. It was also easy to extend, as the team was already very familiar with Kubernetes, so it wasn’t hard for us to extend Gardener. We also saw that Gardener has a very active community, which is always a plus!”

- Aylei Wu, (Cloud Engineer) at PingCAP

At first glance, PingCAP had initial reservations about using Gardener - mainly due to its adoption level (still at the beginning) and an apparent complexity of use. However, these were soon eliminated as they learned more about the solution. As Aylei Wu mentioned during the last Gardener community meeting, “a good product speaks for itself”, and once the company got familiar with Gardener, they quickly noticed that the concepts were very similar to Kubernetes, which they were already familiar with.

They recognized that Gardener would be their best option, as it is highly extensible and provides a unified abstraction API layer. In essence, the machines can be managed via a machine controller manager for different cloud providers - without having to worry about the individual cloud APIs.

They agreed that Gardener’s solution, although complex, was definitely worth it. Even though it is a relatively new solution, meaning they didn’t have access to other user testimonials, they decided to go with the service since it checked all the boxes (and as SAP was running it productively with a huge fleet). PingCAP also came to the conclusion that building a managed Kubernetes service themselves would not be easy. Even if they were to build a managed K8s service, they would have to heavily invest in development and would still end up with an even more complex platform than Gardener’s. For all these reasons combined, PingCAP decided to go with Gardener to build its TiDB Cloud.

Here are certain features of Gardener that PingCAP found appealing:

- Cloud-agnostic: Gardener’s abstractions for cloud-specific integrations dramatically reduce the investment in supporting more than one cloud infrastructure. Once the integration with Amazon Web Services was done, moving on to Google Cloud Platform proved to be relatively easy. (At the moment, TiDB Cloud has subscription plans available for both GCP and AWS, and they are planning to support Alibaba Cloud in the future.)

- Familiar concepts: Gardener is K8s native; its concepts are easily related to core Kubernetes concepts. As such, it was easy to onboard for a K8s experienced team like PingCAP’s SRE team.

- Easy to manage and extend: Gardener’s API and extensibility are easy to implement, which has a positive impact on the implementation, maintenance costs and time-to-market.

- Active community: Prompt and quality responses on Slack from the Gardener team tremendously helped to quickly onboard and produce an efficient solution.

How PingCAP Built TiDB Cloud with Gardener

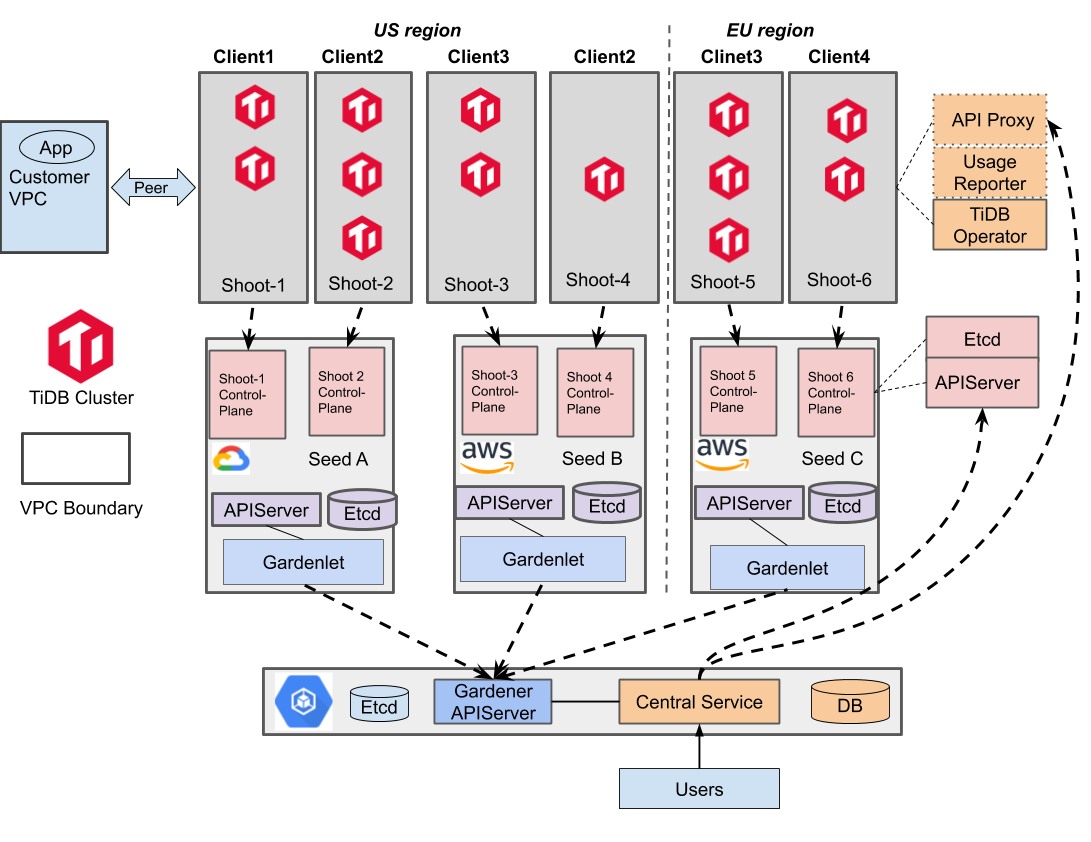

On a technical level, PingCAP’s set-up overview includes the following:

- A Base Cluster globally, which is the top-level control plane of TiDB Cloud

- A Seed Cluster per cloud provider per region, which makes up the fundamental data plane of TiDB Cloud

- A Shoot Cluster is dynamically provisioned per tenant per cloud provider per region when requested

- A tenant may create one or more TiDB clusters in a Shoot Cluster

As a real world example, PingCAP sets up the Base Cluster and Seed Clusters in advance. When a tenant creates its first TiDB cluster under the us-west-2 region of AWS, a Shoot Cluster will be dynamically provisioned in this region, and will host all the TiDB clusters of this tenant under us-west-2. Nevertheless, if another tenant requests a TiDB cluster in the same region, a new Shoot Cluster will be provisioned. Since different Shoot Clusters are located in different VPCs and can even be hosted under different AWS accounts, TiDB Cloud is able to achieve hard isolation between tenants and meet the critical security requirements for our customers.

To automate these processes, PingCAP creates a service in the Base Cluster, known as the TiDB Cloud “Central” service. The Central is responsible for managing shoots and the TiDB clusters in the Shoot Clusters. As shown in the following diagram, user operations go to the Central, being authenticated, authorized, validated, stored and then applied asynchronously in a controller manner. The Central will talk to the Gardener API Server to create and scale Shoot clusters. The Central will also access the Shoot API Service to deploy and reconcile components in the Shoot cluster, including control components (TiDB Operator, API Proxy, Usage Reporter for billing, etc.) and the TiDB clusters.

What’s Next for PingCAP and Gardener

With the initial success of using the project to build TiDB Cloud, PingCAP is now working heavily on the stability and day-to-day operations of TiDB Cloud on Gardener. This includes writing Infrastructure-as-Code scripts/controllers with it to achieve GitOps, building tools to help diagnose problems across regions and clusters, as well as running chaos tests to identify and eliminate potential risks. After benefiting greatly from the community, PingCAP will continue to contribute back to Gardener.

In the future, PingCAP also plans to support more cloud providers like AliCloud and Azure. Moreover, PingCAP may explore the opportunity of running TiDB Cloud in on-premise data centers with the constantly expanding support this project provides. Engineers at PingCAP enjoy the ease of learning from Gardener’s Kubernetes-like concepts and being able to apply them everywhere. Gone are the days of heavy integrations with different clouds and worrying about vendor stability. With this project, PingCAP now sees broader opportunities to land TiDB Cloud on various infrastructures to meet the needs of their global user group.

Stay tuned, more blog posts to come on how Gardener is collaborating with its contributors and adopters to bring fully-managed clusters at scale everywhere! If you want to join in on the fun, connect with our community.

New Website, Same Green Flower

The Gardener project website just received a serious facelift. Here are some of the highlights:

- A completely new landing page, emphasizing both on Gardener’s value proposition and the open community behind it.

- The Community page was reconstructed for quick access to the various community channels and will soon merge the Adopters page. It will provide a better insight into success stories from the community.

- Improved blogs layout. One-click sharing options are available starting with simple URL copy link and twitter button and others will closely follow up. While we are at it, give it a try. Spread the word.

Website builds also got to a new level with:

- Containerization. The whole build environment is containerized now, eliminating differences between local and CI/CD setup and reducing content developers focus only to the

/documentationrepository. Running a local server for live preview of changes as you make them when developing content for the website, is now as easy as runingmake servein your local/documentationclone. - Numerous improvements to the buld scripts. More configuration options, authenticated requests, fault tolerance and performance.

- Good news for Windows WSL users who will now enjoy a significantly support. See the updated README for details on that.

- A number of improvements in layouts styles, site assets and hugo site-building techniques.

But hey, THAT’S NOT ALL!

Stay tuned for more improvements around the corner. The biggest ones are aligning the documentation with the new theme and restructuring it along, more emphasis on community success stories all around, more sharing options and more than a handful of shortcodes for content development and … let’s cut the spoilers here.

I hope you will like it. Let us know what you think about it. Feel free to leave comments and discuss on

Go ahead and help us spread the word: https://gardener.cloud