
2021

Navigating Cloud-Native Security - Lessons from a Recent Container Service Vulnerability

The cloud-native landscape is constantly evolving, bringing immense benefits in agility and scale. However, with this evolution comes a complex and ever-changing threat landscape. Recently, a significant vulnerability was reported by Unit 42 concerning Azure Container Instances (ACI), a service designed to run containers in a multi-tenant environment. This incident offers valuable lessons for the entire community, and we at Gardener believe in sharing insights that can help strengthen collective security.

This particular vulnerability underscores the critical importance of vigilance, timely patching, and defense-in-depth, principles we have long championed within the Gardener project.

Understanding the ACI Vulnerability

As detailed in the Unit 42 report, the attack vector on ACI involved several stages, leveraging a combination of outdated software and architectural choices:

- Outdated runc: The initial entry point exploited a version of runc from October 2016. This version was susceptible to CVE-2019-5736, a critical vulnerability allowing host takeover. This vulnerability was widely publicized in early 2019.

- Lateral Movement via Kubelet Impersonation: After gaining node access, the next step involved attempting to impersonate the Kubelet to interact with the Kubernetes API server. The ACI clusters were reportedly running Kubernetes versions (v1.8.x - v1.10.x) from 2017/2018, which were, in principle, vulnerable to such an attack.

- Exploiting a Custom Bridge Component: When the direct Kubelet impersonation didn’t work as initially expected, the researchers turned to a custom bridge component, designed to abstract the underlying Kubernetes, as the next target. This proprietary component inadvertently held a service account token with cluster-admin privileges. By capturing this token, attackers could gain full control over the Kubernetes cluster.

- Control Plane Access: The report also noted that the API server appeared to be self-hosted, potentially allowing easier movement from the data plane to the control plane environment once cluster-admin privileges were obtained.

- Alternative Attack Vector: A second distinct attack vector, also leveraging the custom bridge component, was identified, pointing to another area where security hardening could have prevented compromise.
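The outdated runc entry point comes down to version hygiene. The following Go sketch flags upstream runc version strings at or below 1.0.0-rc6, which public advisories for CVE-2019-5736 list as the last affected release. It is an illustration only. Distributions backported the fix to older version numbers, so a match means "investigate", not "exploitable". The parser also assumes a plain MAJOR.MINOR.PATCH[-rcN] format.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// runcVersion is a simplified view of an upstream runc version string such as
// "1.0.0-rc2" or "1.1.4". Only "-rcN" pre-releases are understood (assumption).
type runcVersion struct {
	major, minor, patch int
	rc                  int // 0 means a final release without an -rc suffix
}

// parseRuncVersion parses "[v]MAJOR.MINOR.PATCH[-rcN][+build]".
func parseRuncVersion(s string) (runcVersion, error) {
	var v runcVersion
	s = strings.TrimPrefix(s, "v")
	if i := strings.Index(s, "+"); i >= 0 {
		s = s[:i] // drop build metadata
	}
	core, pre, hasPre := strings.Cut(s, "-")
	parts := strings.Split(core, ".")
	if len(parts) != 3 {
		return v, fmt.Errorf("unexpected version %q", s)
	}
	var nums [3]int
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil {
			return v, fmt.Errorf("bad number in %q: %w", s, err)
		}
		nums[i] = n
	}
	v.major, v.minor, v.patch = nums[0], nums[1], nums[2]
	if hasPre {
		n, err := strconv.Atoi(strings.TrimPrefix(pre, "rc"))
		if err != nil || !strings.HasPrefix(pre, "rc") || n < 1 {
			return v, fmt.Errorf("unsupported pre-release %q", pre)
		}
		v.rc = n
	}
	return v, nil
}

// less reports whether a sorts before b; an rc sorts before the final release.
func less(a, b runcVersion) bool {
	if a.major != b.major {
		return a.major < b.major
	}
	if a.minor != b.minor {
		return a.minor < b.minor
	}
	if a.patch != b.patch {
		return a.patch < b.patch
	}
	switch {
	case a.rc == b.rc, a.rc == 0: // equal, or a is final while b is an rc
		return false
	case b.rc == 0: // a is an rc, b is the final release
		return true
	default:
		return a.rc < b.rc
	}
}

// lastAffected is 1.0.0-rc6, the last release listed as affected by CVE-2019-5736.
var lastAffected = runcVersion{major: 1, minor: 0, patch: 0, rc: 6}

// likelyVulnerable reports whether the upstream version is <= 1.0.0-rc6.
func likelyVulnerable(version string) (bool, error) {
	v, err := parseRuncVersion(version)
	if err != nil {
		return false, err
	}
	return !less(lastAffected, v), nil
}

func main() {
	for _, s := range []string{"1.0.0-rc2", "1.0.0-rc6", "1.0.0-rc7", "1.1.4"} {
		vuln, err := likelyVulnerable(s)
		if err != nil {
			fmt.Println(s, "error:", err)
			continue
		}
		fmt.Printf("%-10s likely vulnerable: %v\n", s, vuln)
	}
}
```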

Gardener’s Proactive Security Posture

The ACI incident highlights several threat vectors that the Gardener team has actively worked to mitigate over the years, often well in advance of them becoming widely exploited.

- Timely Patching of Critical Vulnerabilities (e.g., runc CVE-2019-5736): When CVE-2019-5736 was pre-disclosed, the Gardener team treated it with utmost seriousness. We had announcements and patches prepared and rolled them out on the day of public disclosure. This rapid response is crucial for minimizing exposure to known high-severity vulnerabilities.

- Hardening Against Exploits: The Kubelet impersonation vector mentioned in the ACI report is particularly relevant to Gardener. The underlying Kubernetes vulnerability (CVE-2018-1002102, tracked as Kubernetes issue #85867), which could allow a compromised node/Kubelet to redirect API server traffic (like kubectl exec), was discovered and reported by Alban Crequy from Kinvolk. This discovery was made during a penetration test commissioned by the Gardener project, which specifically asked the testers to find loopholes in our seed clusters. We were able to implement mitigations in Gardener even before the upstream Kubernetes fix was available, further securing our seed cluster architecture. The second distinct attack vector was also discovered during such a penetration test, after which Gardener further hardened its network policies.

- Principle of Least Privilege and Secure Component Design: The ACI bridge component’s cluster-admin token is a stark reminder of the dangers of overly privileged components, especially those interacting with user workloads. Within Gardener, we’ve invested heavily in mechanisms like the Gardener Seed Authorizer (as discussed in Gardener issue #1723). It goes beyond standard RBAC to strictly limit the capabilities of components and prevent lateral movement, ensuring that even if one part is compromised, the blast radius is contained. We also meticulously review and restrict permissions for all components.

- Strict Separation of Concerns: A core architectural principle in Gardener is the strict separation between the control plane and the data plane, at all levels. Being an administrator in a shoot cluster does not grant any access to the underlying seed cluster’s control plane execution environment or to the higher levels of the hierarchy (the runtime and garden clusters), a critical defense against escalation.
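The least-privilege idea behind the Seed Authorizer is that a Seed’s components may touch only the objects that belong to that Seed. This is similar in spirit to how the Kubernetes Node authorizer scopes kubelets. The idea can be illustrated with a deny-by-default check. This is a hypothetical, heavily simplified Go model. The request type, the shoot-to-seed map, and the allowed verbs are all assumptions, and Gardener’s actual authorizer is considerably more involved.

```go
package main

import "fmt"

// request is a hypothetical, heavily simplified API request issued by a
// seed-side component acting on behalf of one Seed.
type request struct {
	Seed      string // identity of the calling Seed component
	Verb      string
	Resource  string
	Namespace string
	Name      string
}

// seedScopedAuthorizer denies by default and only allows access to Shoots
// that are scheduled onto the calling Seed.
type seedScopedAuthorizer struct {
	shootToSeed map[string]string // "<namespace>/<name>" -> Seed name
}

// allowedVerbs is an illustrative choice, not Gardener's actual policy.
var allowedVerbs = map[string]bool{"get": true, "watch": true, "update": true, "patch": true}

func (a seedScopedAuthorizer) authorize(r request) (bool, string) {
	if r.Resource != "shoots" {
		return false, "resource not covered by this sketch"
	}
	if !allowedVerbs[r.Verb] {
		return false, "verb not allowed for Seed components"
	}
	seed, ok := a.shootToSeed[r.Namespace+"/"+r.Name]
	switch {
	case !ok:
		return false, "unknown Shoot"
	case seed != r.Seed:
		return false, fmt.Sprintf("Shoot belongs to Seed %q, not %q", seed, r.Seed)
	default:
		return true, "Shoot is scheduled onto the calling Seed"
	}
}

func main() {
	authz := seedScopedAuthorizer{shootToSeed: map[string]string{
		"garden-dev/app1": "seed-eu",
		"garden-ops/db":   "seed-us",
	}}
	for _, r := range []request{
		{Seed: "seed-eu", Verb: "get", Resource: "shoots", Namespace: "garden-dev", Name: "app1"},
		{Seed: "seed-eu", Verb: "get", Resource: "shoots", Namespace: "garden-ops", Name: "db"},
		{Seed: "seed-eu", Verb: "delete", Resource: "shoots", Namespace: "garden-dev", Name: "app1"},
	} {
		ok, why := authz.authorize(r)
		fmt.Printf("%s %s/%s by %s: allowed=%v (%s)\n", r.Verb, r.Namespace, r.Name, r.Seed, ok, why)
	}
}
```

Even if a component of seed-eu were compromised, this model would only let it act on Shoots scheduled onto seed-eu. That is the kind of blast-radius limit the text describes.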

Learning and Moving Forward

The ACI vulnerability is a powerful reminder that security is not a one-time task but a continuous process of vigilance, proactive hardening, and learning from every incident, whether our own or others’. No system is impenetrable, and the assumption that any single entity, regardless of size, has perfected security can lead to complacency.

At Gardener, we remain committed to:

- Staying current: Diligently updating dependencies and core components.

- Defense-in-depth: Implementing multiple layers of security controls.

- Proactive discovery: Continuously testing and seeking out potential weaknesses.

- Community collaboration: Sharing knowledge and contributing to upstream security efforts.

We believe that by fostering a culture of security awareness and investing in robust, layered defenses, we can build more resilient cloud-native systems for everyone. This recent industry event, while unfortunate for those affected, provides crucial learning points that reinforce our commitment to the security principles embedded in Gardener. We will continue to evolve Gardener’s security posture, always striving to stay ahead of emerging threats.

Happy Anniversary, Gardener! Three Years of Open Source Kubernetes Management

Happy New Year Gardeners! As we greet 2021, we also celebrate Gardener’s third anniversary. Gardener was born with its first open source commit on January 10, 2018 (its inception within SAP was, of course, some 9 months earlier):

```
commit d9619d01845db8c7105d27596fdb7563158effe1
Author: Gardener Development Community <gardener.opensource@sap.com>
Date: Wed Jan 10 13:07:09 2018 +0100

Initial version of gardener

This is the initial contribution to the Open Source Gardener project.
...
```

Three years ago, the project initiators were working towards a special goal: publishing Gardener as an open source project on GitHub.com. Join us as we look back at how it all began, the challenges Gardener aims to solve, and why open source and the community were, and still are, the project’s key enablers.

Gardener Kick-Off: “We opted to BUILD ourselves”

In early 2017, SAP put together a small, jelled team of experts with a clear mission: work out how SAP could serve Kubernetes-based environments (as a service) to all teams within the company. Later that same year, SAP also joined the CNCF as a platinum member.

We first deliberated intensively on the BUY options (including acquisitions, due to the size and estimated volume needed at SAP). There were some early products from commercial vendors and startups that were not tied exclusively to one of the hyperscalers, but these products did not cover many of our crucial and immediate requirements for a multi-cloud environment.

Ultimately, we opted to BUILD ourselves. This decision was not made lightly, because right from the start, we knew that we would have to cover thousands of clusters across the globe, on all kinds of infrastructures. We would have to be able to create them at scale as well as manage them 24x7. Thus, we anticipated the need to invest in automating all aspects, to keep the service TCO at a minimum and to offer an enterprise-worthy SLA early on. This endeavor grew into the Gardener project, launched first internally and ultimately, once all checks were passed, externally as open source. Its mission statement, in a nutshell, is “Universal Kubernetes at scale”. Now, that’s quite bold. But we also had a nifty innovation that helped us tremendously along the way, and we can openly reveal the secret here: Gardener was built not only for creating Kubernetes at scale, but also (recursively) in Kubernetes itself.

What Do You Get with Gardener?

Gardener offers managed and homogeneous Kubernetes clusters on IaaS providers like AWS, Azure, GCP, AliCloud, Open Telekom Cloud, SCS, OVH and more, but also covers versatile infrastructures like OpenStack, VMware or bare metal. Day-1 and Day-2 operations are an integral part of a cluster’s feature set. This means that Gardener is not only capable of provisioning or de-provisioning thousands of clusters, but also of monitoring your cluster’s health state, upgrading components in a rolling fashion, or scaling the control plane as well as worker nodes up and down depending on the current resource demand.

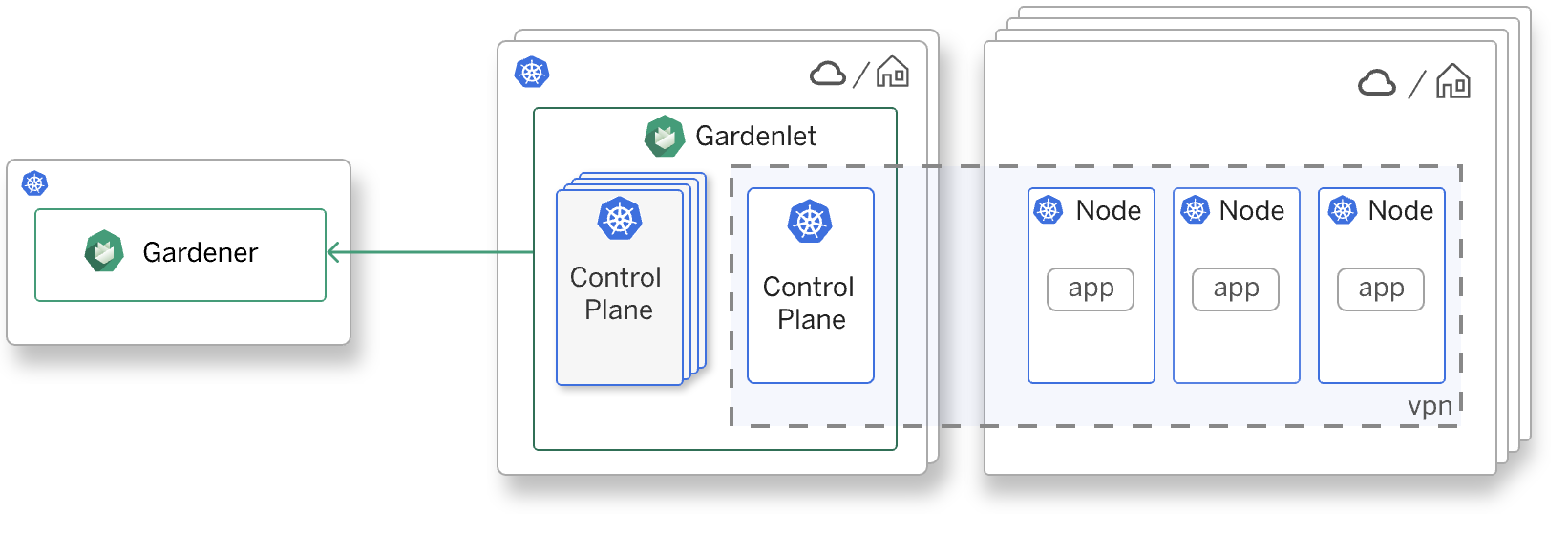

Some features mentioned above might sound familiar to you, simply because they’re squarely derived from Kubernetes. Concretely, if you explore a Gardener-managed end-user cluster, you’ll never see the so-called “control plane components” (Kube-Apiserver, Kube-Controller-Manager, Kube-Scheduler, etc.). The reason is that they run as Pods inside another, hosting/seeding Kubernetes cluster. Speaking in Gardener terms, the latter is called a Seed cluster, and the end-user cluster is called a Shoot cluster; and thus the botanical naming scheme for Gardener was born. Further assets like infrastructure components or worker machines are modelled as managed Kubernetes objects too. This allows Gardener to leverage all the great and production-proven features of Kubernetes for managing Kubernetes clusters. Our blog post on Kubernetes.io reveals more details about the architectural refinements.
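To make the Seed/Shoot split concrete: each Shoot’s control plane lives in its own namespace in the Seed. By convention, Gardener names such namespaces following the pattern shoot--<project>--<shoot>. The minimal Go sketch below derives that namespace and lists a few control plane components that run there as Pods. The Shoot type and the component list are illustrative assumptions, not Gardener’s API.

```go
package main

import "fmt"

// Shoot is a hypothetical, minimal view of an end-user (Shoot) cluster.
type Shoot struct {
	Project string
	Name    string
	Seed    string // the Seed cluster hosting this Shoot's control plane
}

// controlPlaneNamespace returns the Seed namespace that hosts the Shoot's
// control plane, following the "shoot--<project>--<name>" convention.
func controlPlaneNamespace(s Shoot) string {
	return fmt.Sprintf("shoot--%s--%s", s.Project, s.Name)
}

// controlPlaneComponents run as Pods in the Seed, so they never show up as
// machines in the Shoot itself. Illustrative, not exhaustive.
var controlPlaneComponents = []string{"kube-apiserver", "kube-controller-manager", "kube-scheduler", "etcd"}

func main() {
	s := Shoot{Project: "dev", Name: "my-cluster", Seed: "aws-eu1"}
	ns := controlPlaneNamespace(s)
	for _, c := range controlPlaneComponents {
		fmt.Printf("seed %s, namespace %s: runs %s\n", s.Seed, ns, c)
	}
}
```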

End-users directly benefit from Gardener’s recursive architecture. Many of the requirements that we identified for the Gardener service turned out to be highly convenient for shoot owners. For instance, Seed clusters are usually equipped with DNS and x509 services. These service offerings can be extended to requests coming from the Shoot clusters, i.e., end-users get domain names and certificates for their applications out of the box.

Recognizing the Power of Open Source

The Gardener team immediately profited from open source: from Kubernetes obviously, and all its ecosystem projects. That all facilitated our project’s very fast and robust development. But it does not answer:

“Why would SAP open source a tool that clearly solves a monetizable enterprise requirement?”

Short spoiler alert: it initially involved a leap of faith. If we just look at our own decision path, it is undeniable that developers, and with them entire industries, gravitate towards open source. We chose Linux, Containers, and Kubernetes exactly because they are open, and we could bet on network effects, especially around skills. The same decision process is currently replicated in thousands of companies, with the same results. Why? Because all companies are digitally transforming. They are becoming software companies as well to a certain extent. Many of them are also our customers and in many discussions, we recognized that they have the same challenges that we are solving with Gardener. This, in essence, was a key eye opener. We were confident that if we developed Gardener as open source, we’d not only seize the opportunity to shape a Kubernetes management tool that finds broad interest and adoption outside of our use case at SAP, but we could solve common challenges faster with the help of a community, and that in consequence would sustain continuous feature development.

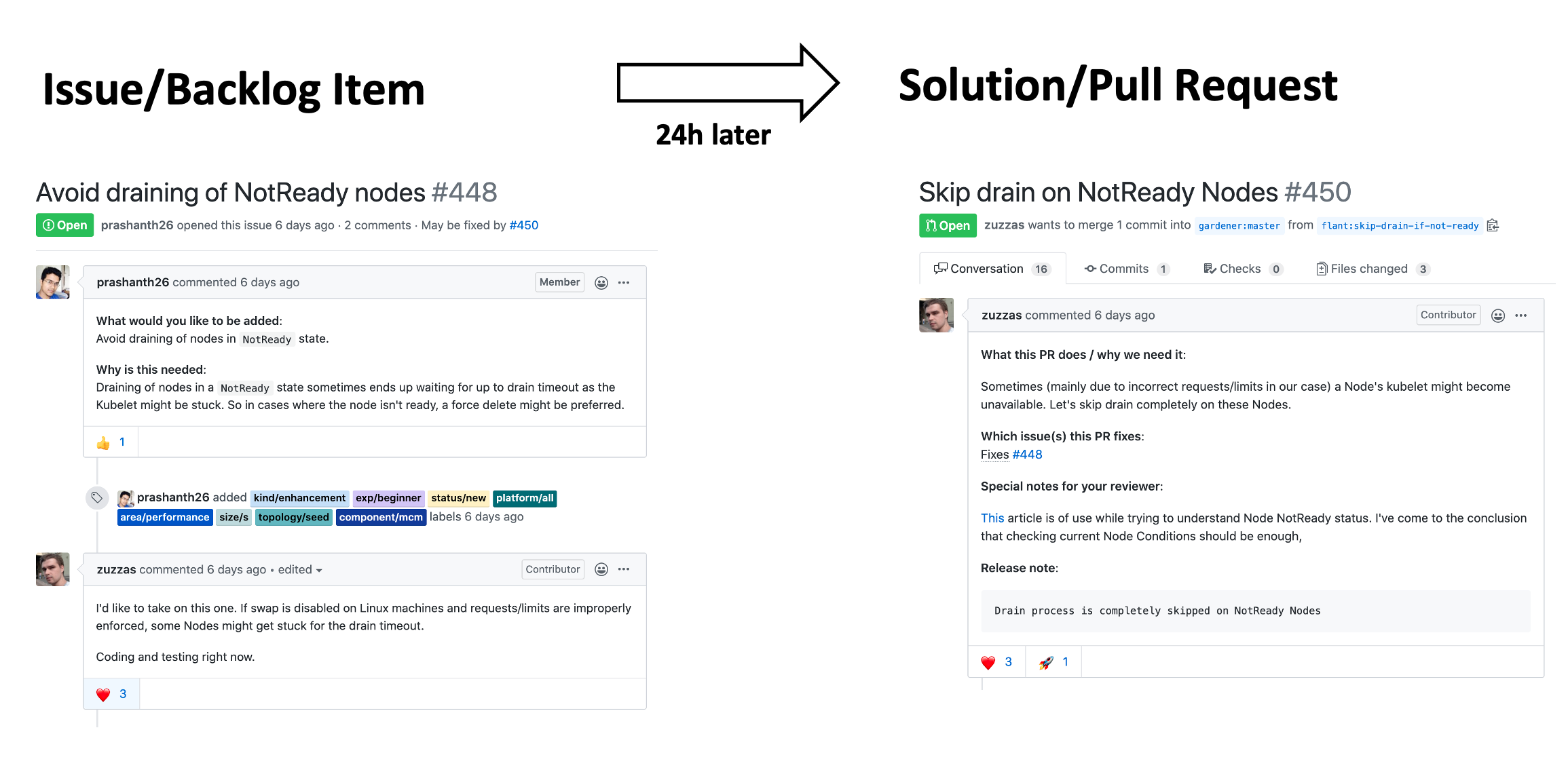

Coincidentally, that was also when the SAP Open Source Program Office (OSPO) was launched. It supported us in making the case to develop Gardener completely as open source. Today, we can see that this strategy has paid off. It opened the gates not only for adoption, but also for co-innovation, investment security, and user feedback directly in code. Below you can see an example of how the Gardener project benefits from this external community power, as contributions are submitted right away.

Differentiating Gardener from Other Kubernetes Management Solutions

Imagine that you have created a modern, solid, cloud native app or service, fully scalable, in containers. And the business case requires you to run the service on multiple clouds, like AWS, AliCloud, Azure, … maybe even on-premises on OpenStack or VMware. Your development team has done everything to ensure that the workload is highly portable. But they would need to qualify each provider’s managed Kubernetes offering and its custom Bill-of-Material (BoM), versions, deprecation plan, roadmap, etc. Your TCD would explode, and this is exactly what teams at SAP experienced. Now, with Gardener you can instead roll out homogeneous clusters and stay in control of your versions and a single roadmap, across all supported providers!

Also, teams that have serious, or say, more demanding workloads running on Kubernetes will come to the same conclusion: they require full management control of the Kubernetes underlay. Not only that, they need access, visibility, and all the tuning options for the control plane to safeguard their service. This is a conclusion not only of teams at SAP, but also of our community members, like PingCap, who use Gardener to run their TiDB Cloud service. Whenever you need to get serious and need more than one or two clusters, Gardener is your friend.

Who Is Using Gardener?

Well, there is SAP itself of course, but also the number of Gardener adopters and companies interested in Gardener is growing (~1700 GitHub stars), as more are challenged by multi-cluster and multi-cloud requirements.

Flant, PingCap, STACKIT, T-Systems, Sky, and b’nerd are among these companies, to name a few. They use Gardener to run products they sell on top of it, to offer managed Kubernetes clusters directly to their clients, or even to reuse only selected Gardener components.

An interesting journey in the open source space started with Finanz Informatik Technologie Service (FI-TS), a European Central Bank-regulated and certified hoster for banks. They operate in very restricted environments, as you can imagine, and as such, they redesigned their datacenter for cloud native workloads from scratch, that is, from cabling, racking, and stacking to an API that serves bare metal servers. For Kubernetes-as-a-Service, they evaluated and chose Gardener because it was open and a perfect candidate. With Gardener’s extension capabilities, it was possible to bring managed Kubernetes clusters to their very own bare metal stack, metal-stack.io. Of course, this meant implementation effort. But by reusing the Gardener project, FI-TS was able to leverage our standard with minimal adjustments for their special use case. Subsequently, with their contributions, SAP was able to make Gardener more open for the community.

Full Speed Ahead with the Community in 2021

Some of the current and most active topics are the installer (Landscaper), control plane migration, automated seed management, and documentation. Once you are into Kubernetes and then Gardener, all the complexity falls into place and you can make the semantic connections yourself. But beginners who join the community without much prior knowledge should experience a gentler ramp-up, and that is currently a pain point. Experts, too, ask directly about documentation that is not up-to-date or clear enough. We prioritized the functionality of what you get with Gardener at the outset and need to catch up. But here is the good part: now that we are tackling the installation topic, we will later have a much broader picture of what we need to install and maintain Gardener, and how we will build it.

In a community call last summer, we gave an overview of what we are building: The Landscaper. With this tool, we will be able to not only install a full Gardener landscape, but we will also streamline patches, updates and upgrades with the Landscaper. Gardener adopters can then attach to a release train from the project and deploy Gardener into a dev, canary and multiple production environments sequentially. Like we do at SAP.

Key Takeaways in Three Years of Gardener

#1 Open Source is Strategic

Open Source is no longer just about using freely available libraries, components, or tools to optimize your own software production. It is strategic, it pays off for projects like Gardener, and this insight has meanwhile also reached the boardroom.

#2 Solving Concrete Challenges by Co-Innovation

Users of a particular product or service increasingly vote/decide for open source variants, such as project Gardener, because that allows them to freely innovate and solve concrete challenges by developing exactly what they require (see FI-TS example). This user-centric process has tremendous advantages. It cuts out the middleman and other vested interests. You have access to the full code. And lastly, if others start using and contributing to your innovation, it allows enterprises to secure their investments for the long term. And that reinforces point #1 for enterprises that have yet to create a strategic Open Source Program Office.

#3 Cloud Native Skills

Gardener solves problems by applying Kubernetes and Kubernetes principles itself. Developers and operators who are familiar with Kubernetes will immediately notice and appreciate our concept and can contribute intuitively. The Gardener maintainers feel responsible for supporting community members and contributors. Barriers will be further reduced by our ongoing Landscaper and documentation efforts. This is why we are so confident about Gardener’s adoption.

The Gardener team gladly welcomes new community members, especially regarding adoption and contribution. Feel invited to try out your very own Gardener installation, join our Slack workspace, or join our community calls. We’re looking forward to seeing you there!

Machine Controller Manager

Kubernetes is a cloud-native enabler built around the principles of a resilient, manageable, observable, highly automated, loosely coupled system. We know that Kubernetes is infrastructure-agnostic with the help of a provider-specific Cloud Controller Manager. But Kubernetes has explicitly externalized the management of the nodes. Once they appear, correctly configured, in the cluster, Kubernetes can use them. If nodes fail, Kubernetes can’t do anything about it; external tooling is required. But every tool, every provider is different. So, why not elevate node management to a first-class Kubernetes citizen? Why not create a Kubernetes-native resource that manages machines just like pods? Such an approach is brought to you by the Machine Controller Manager (aka MCM), which, of course, is an open source project. MCM gives you the following benefits:

- seamlessly manage machines/nodes with a declarative API (of course, across different cloud providers)

- integrate generically with the cluster autoscaler

- plug in tools such as the node-problem-detector

- transport the immutability design principle to machine/nodes

- implement e.g. rolling upgrades of machines/nodes

Machine Controller Manager aka MCM

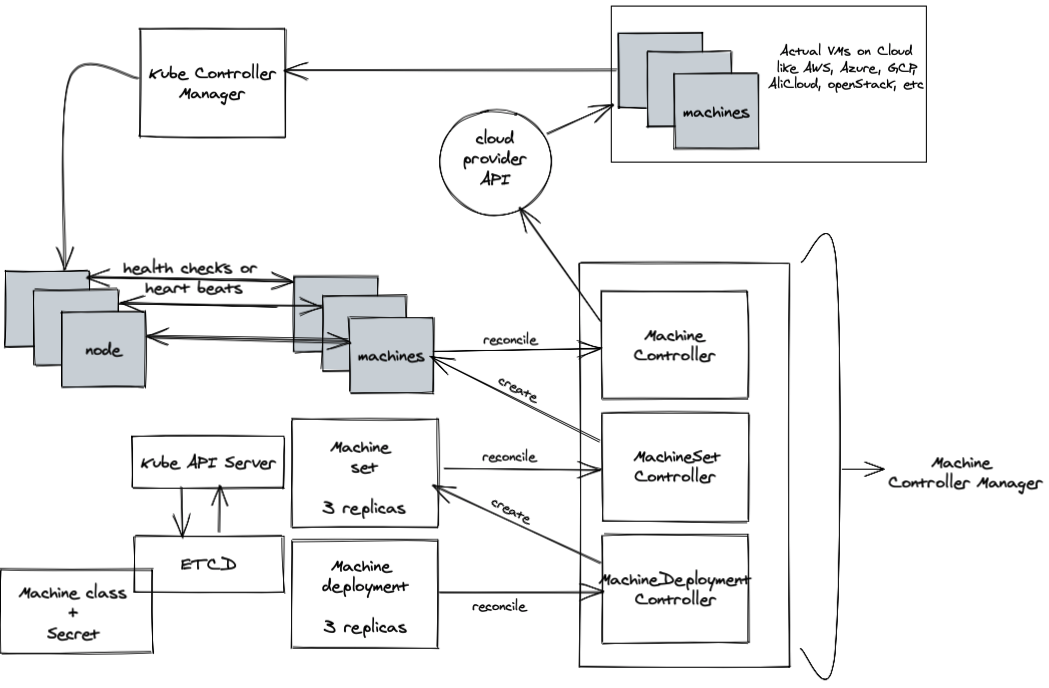

Machine Controller Manager is a group of cooperative controllers that manage the lifecycle of the worker machines. It is inspired by the design of Kube Controller Manager in which various sub controllers manage their respective Kubernetes Clients.

Machine Controller Manager reconciles a set of Custom Resources namely MachineDeployment, MachineSet and Machines which are managed & monitored by their controllers MachineDeployment Controller, MachineSet Controller, Machine Controller respectively along with another cooperative controller called the Safety Controller.

Understanding the sub-controllers and Custom Resources of MCM

The Custom Resources MachineDeployment, MachineSet and Machines are very much analogous to the native K8s resources of Deployment, ReplicaSet and Pods respectively. So, in the context of MCM:

- MachineDeployment provides a declarative update for MachineSet and Machines. The MachineDeployment Controller reconciles the MachineDeployment objects and manages the lifecycle of MachineSet objects. MachineDeployment consumes a provider-specific MachineClass in its spec.template.spec, which is the template of the VM spec that would be spawned on the cloud by MCM.

- MachineSet ensures that the specified number of Machine replicas are running at a given point in time. The MachineSet Controller reconciles the MachineSet objects and manages the lifecycle of Machine objects.

- Machines are the actual VMs running on the cloud platform provided by one of the supported cloud providers. The Machine Controller is the controller that actually communicates with the cloud provider to create/update/delete machines on the cloud.

- There is a Safety Controller responsible for handling unidentified or unknown behaviours from the cloud providers.

- Along with the above Custom Controllers and Resources, MCM requires the MachineClass to use a K8s Secret that stores cloudconfig (initialization scripts used to create VMs) and cloud-specific credentials.
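The ownership chain MachineDeployment → MachineSet → Machine mirrors Deployment → ReplicaSet → Pod. It can be modelled with a few plain Go structs. These are simplified, hypothetical mirrors written for illustration. MCM’s real API types carry many more fields, and the naming scheme used here for derived objects is made up.

```go
package main

import "fmt"

// ClassRef points to a provider-specific MachineClass, which in turn
// references a Secret with cloudconfig and credentials. Hypothetical mirror.
type ClassRef struct {
	Kind string
	Name string
}

// MachineDeployment -> MachineSet -> Machine, like Deployment -> ReplicaSet -> Pod.
type MachineDeployment struct {
	Name     string
	Replicas int
	Class    ClassRef // stands in for the class in spec.template.spec
}

type MachineSet struct {
	Name     string
	Owner    string // name of the owning MachineDeployment
	Replicas int
	Class    ClassRef
}

type Machine struct {
	Name  string
	Owner string // name of the owning MachineSet
	Class ClassRef
}

// machineSetFor derives the MachineSet a MachineDeployment would own.
// The "-rev1" suffix is a placeholder for a template hash.
func machineSetFor(md MachineDeployment) MachineSet {
	return MachineSet{Name: md.Name + "-rev1", Owner: md.Name, Replicas: md.Replicas, Class: md.Class}
}

// machinesFor derives the Machine objects a MachineSet would own.
func machinesFor(ms MachineSet) []Machine {
	out := make([]Machine, 0, ms.Replicas)
	for i := 0; i < ms.Replicas; i++ {
		out = append(out, Machine{Name: fmt.Sprintf("%s-%d", ms.Name, i), Owner: ms.Name, Class: ms.Class})
	}
	return out
}

func main() {
	md := MachineDeployment{Name: "worker-a", Replicas: 3, Class: ClassRef{Kind: "MachineClass", Name: "aws-m5-large"}}
	ms := machineSetFor(md)
	for _, m := range machinesFor(ms) {
		fmt.Printf("%s (owner %s, class %s/%s)\n", m.Name, m.Owner, m.Class.Kind, m.Class.Name)
	}
}
```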

Workings of MCM

In MCM, two K8s clusters are in scope: a Control Cluster and a Target Cluster. The Control Cluster is the K8s cluster where MCM is installed to manage the machine lifecycle of the Target Cluster. In other words, the Control Cluster is the one where the machine-* objects are stored. The Target Cluster is where all the node objects are registered. These can be two distinct clusters or the same cluster, whichever fits.

When a MachineDeployment object is created, the MachineDeployment Controller creates the corresponding MachineSet object. The MachineSet Controller in turn creates the Machine objects. The Machine Controller then talks to the cloud provider API and actually creates the VMs on the cloud.
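The reconciliation step compares the desired number of replicas with the existing Machines and creates or deletes VMs to close the gap. It can be sketched in Go against an in-memory fake cloud. The CloudProvider interface and the fakeCloud type are hypothetical. For brevity, one function plays the roles that MCM splits between the MachineSet Controller and the Machine Controller.

```go
package main

import "fmt"

// CloudProvider is a hypothetical stand-in for a provider API.
type CloudProvider interface {
	CreateVM(name string) (providerID string, err error)
	DeleteVM(providerID string) error
}

// fakeCloud keeps "VMs" in memory so the sketch runs without any cloud account.
type fakeCloud struct {
	vms  map[string]bool
	next int
}

func (f *fakeCloud) CreateVM(name string) (string, error) {
	f.next++
	id := fmt.Sprintf("fake:///%s-%d", name, f.next)
	f.vms[id] = true
	return id, nil
}

func (f *fakeCloud) DeleteVM(id string) error {
	delete(f.vms, id)
	return nil
}

// Machine links a Machine object to its VM through ProviderID.
type Machine struct {
	Name       string
	ProviderID string
}

// reconcileMachineSet creates or deletes machines until their number matches
// replicas. It decides how many Machines should exist (MachineSet Controller's
// job) and calls the cloud API (Machine Controller's job).
func reconcileMachineSet(set string, replicas int, machines []Machine, cloud CloudProvider) ([]Machine, error) {
	for len(machines) < replicas {
		name := fmt.Sprintf("%s-%d", set, len(machines))
		id, err := cloud.CreateVM(name)
		if err != nil {
			return machines, err
		}
		machines = append(machines, Machine{Name: name, ProviderID: id})
	}
	for len(machines) > replicas {
		last := machines[len(machines)-1]
		if err := cloud.DeleteVM(last.ProviderID); err != nil {
			return machines, err
		}
		machines = machines[:len(machines)-1]
	}
	return machines, nil
}

func main() {
	cloud := &fakeCloud{vms: map[string]bool{}}
	machines, _ := reconcileMachineSet("worker-a", 3, nil, cloud)
	fmt.Println("after scale-up:", len(machines), "machines,", len(cloud.vms), "VMs")
	machines, _ = reconcileMachineSet("worker-a", 1, machines, cloud)
	fmt.Println("after scale-down:", len(machines), "machines,", len(cloud.vms), "VMs")
}
```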

The cloud initialization script, which is introduced into the VMs via the K8s Secret consumed by the MachineClasses, bootstraps the kubelet, which in turn registers the node objects with the Target Cluster. Once the nodes have registered, MCM picks up their health signals and reflects them on the corresponding machine objects. That is when MCM updates the status of the machine object from Pending to Running.
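The Pending to Running transition can be expressed as a tiny state function. In this hypothetical sketch, a machine becomes Running once its node has registered in the Target Cluster and reports Ready. MCM’s real phase model has more states and timeouts, which are omitted here.

```go
package main

import "fmt"

// Phase is a subset of machine phases; the real set is larger.
type Phase string

const (
	Pending Phase = "Pending"
	Running Phase = "Running"
)

// NodeStatus is a hypothetical summary of what the Target Cluster reports
// about the node that belongs to a machine.
type NodeStatus struct {
	Registered bool // a Node object for this machine exists in the Target Cluster
	Ready      bool // the Node's Ready condition is True
}

// nextPhase moves a machine from Pending to Running once its node has
// registered and reports Ready; any other combination leaves the phase as is.
func nextPhase(current Phase, node NodeStatus) Phase {
	if current == Pending && node.Registered && node.Ready {
		return Running
	}
	return current
}

func main() {
	phase := Pending
	for _, ns := range []NodeStatus{{}, {Registered: true}, {Registered: true, Ready: true}} {
		phase = nextPhase(phase, ns)
		fmt.Printf("registered=%v ready=%v -> %s\n", ns.Registered, ns.Ready, phase)
	}
}
```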

More on Safety Controller

Safety Controller contains the following functions:

Orphan VM Handling

- It lists all the VMs in the cloud matching the tag of the given cluster name and maps the VMs to the Machine objects using the ProviderID field. VMs without any backing Machine objects are logged and deleted after confirmation.

- This handler runs every 30 minutes and is configurable via the --machine-safety-orphan-vms-period flag.

Freeze Mechanism

- The Safety Controller freezes the MachineDeployment and MachineSet controllers if the number of Machine objects goes beyond a certain threshold on top of Spec.Replicas. This can be configured with the flags --safety-up or --safety-down, and also --machine-safety-overshooting-period.

- The Safety Controller freezes the functionality of MCM if either the target-apiserver or the control-apiserver is not reachable.

- The Safety Controller unfreezes MCM automatically once the situation is back to normal. A freeze label is applied on the MachineDeployment/MachineSet to enforce the freeze condition.
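The freeze logic can be modelled as a small function with hysteresis. The exact meaning of --safety-up and --safety-down is an assumption here. This sketch treats replicas + safety-up as the upper limit that triggers a freeze. It treats that limit minus safety-down as the level the machine count must drop below before an overshoot freeze is lifted. Check the MCM flag documentation before relying on these semantics. The check period (--machine-safety-overshooting-period) is not modelled.

```go
package main

import "fmt"

// safetyLimits is a hypothetical model of the --safety-up and --safety-down flags.
type safetyLimits struct {
	SafetyUp   int // extra machines tolerated above spec.replicas
	SafetyDown int // how far below the upper limit the count must fall to unfreeze
}

// freezeState records whether reconciliation is frozen and whether an
// overshoot of machines is (part of) the reason.
type freezeState struct {
	Frozen    bool
	Overshoot bool
}

// evaluate computes the next freeze state under the assumed semantics:
//
//	upper = replicas + SafetyUp   (freeze when machines > upper)
//	lower = upper - SafetyDown    (an overshoot freeze holds while machines >= lower)
//
// An unreachable control or target API server always freezes.
func evaluate(cur freezeState, lim safetyLimits, replicas, machines int, controlOK, targetOK bool) freezeState {
	upper := replicas + lim.SafetyUp
	lower := upper - lim.SafetyDown
	overshoot := machines > upper || (cur.Overshoot && machines >= lower)
	if !controlOK || !targetOK {
		return freezeState{Frozen: true, Overshoot: overshoot}
	}
	return freezeState{Frozen: overshoot, Overshoot: overshoot}
}

func main() {
	lim := safetyLimits{SafetyUp: 2, SafetyDown: 1} // with 3 replicas: upper=5, lower=4
	var st freezeState
	for _, machines := range []int{5, 6, 4, 3} {
		st = evaluate(st, lim, 3, machines, true, true)
		fmt.Printf("machines=%d frozen=%v\n", machines, st.Frozen)
	}
	st = evaluate(st, lim, 3, 3, true, false)
	fmt.Println("target API down, frozen:", st.Frozen)
}
```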

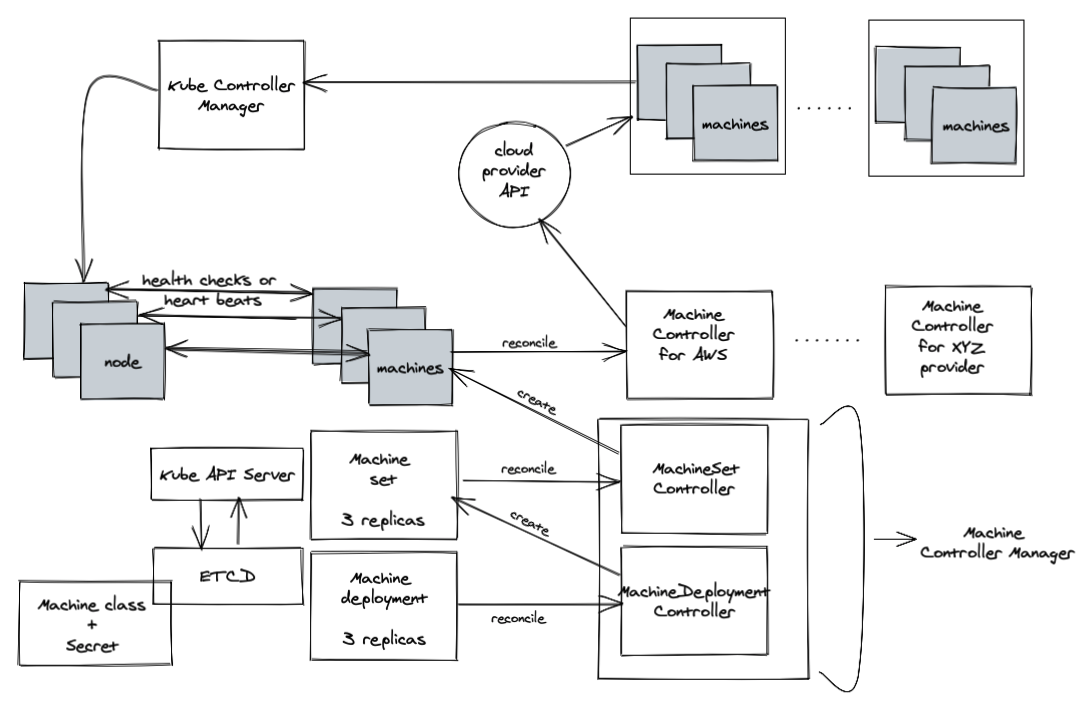

Evolution of MCM from In-Tree to Out-of-Tree (OOT)

MCM supports declarative management of machines in a K8s Cluster on various cloud providers like AWS, Azure, GCP, AliCloud, OpenStack, Metal-stack, Packet, KubeVirt, VMware, Yandex. It can, of course, be easily extended to support other cloud providers.

Going forward, maintaining implementations for so many cloud providers inside the Machine Controller Manager would mean too much upkeep, from both a development and a maintenance point of view. This is why the Machine Controller component of MCM has been moved to an Out-of-Tree design, where the Machine Controller for each cloud provider runs as an independent executable, even though it is typically packaged in the same deployment.

This OOT Machine Controller will implement a common interface to manage the VMs on the respective cloud provider. Now, while the Machine Controller deals with the Machine objects, the Machine Controller Manager (MCM) deals with higher level objects such as the MachineSet and MachineDeployment objects.

A lot of contributions are already being made towards an OOT Machine Controller Manager for various cloud providers. Below are the links to the repositories:

- Out of Tree Machine Controller Manager for AliCloud

- Out of Tree Machine Controller Manager for AWS

- Out of Tree Machine Controller Manager for Azure

- Out of Tree Machine Controller Manager for GCP

- Out of Tree Machine Controller Manager for KubeVirt

- Out of Tree Machine Controller Manager for Metal

- Out of Tree Machine Controller Manager for vSphere

- Out of Tree Machine Controller Manager for Yandex

Watch the Out of Tree Machine Controller Manager video on our Gardener Project YouTube channel to understand more about OOT MCM.

Who Uses MCM?

MCM was originally developed for, and is employed by, a K8s Control Plane as a Service called Gardener. However, MCM’s design is elegant enough to be employed when managing the machines of any independent K8s cluster, without necessarily having to associate it with Gardener.

Metal-stack is a set of microservices that implements Metal as a Service (MaaS). It enables you to turn your hardware into elastic cloud infrastructure. Metal-stack uses an adapted Machine Controller Manager for their Metal API. Check out an introduction to it in metal-stack - kubernetes on bare metal.

Sky UK Limited (a broadcaster) migrated their Kubernetes node management from Ansible to Machine Controller Manager. Check out the How Sky is using Machine Controller Manager (MCM) and autoscaler video on our Gardener Project YouTube channel.

Also, other interesting use cases with MCM are implemented by Kubernetes enthusiasts, who for example adjusted the Machine Controller Manager to provision machines in the cloud to extend a local Raspberry-Pi K3s cluster. This topic is covered in detail in the 2020-07-03 Gardener Community Meeting on our Gardener Project YouTube channel.

Conclusion

Machine Controller Manager is the leading automation tool for machine management for, and in, Kubernetes. And the best part is that it is open source. It is freely (and easily) usable and extensible, and the community more than welcomes contributions.

If you want to know more about Machine Controller Manager or find out about a similar scope for your solutions, feel free to visit the GitHub page machine-controller-manager. We are so excited to see what you achieve with Machine Controller Manager.