
2024

Unleashing Potential: Highlights from the 6th Gardener Community Hackathon

The 6th Gardener Community Hackathon, hosted at Schlosshof Freizeitheim in Schelklingen, Germany in December 2024, was a hub of creativity and collaboration. Developers from various companies joined forces to explore new frontiers of the Gardener project. Here’s a rundown of the key outcomes:

- 🌐 IPv6 Support on IronCore: The team successfully created dual-stack shoot clusters on IronCore, although LoadBalancer services for IPv6 traffic still need some work.

- 🔁 Version Classification Lifecycle in CloudProfile: A Gardener Enhancement Proposal (GEP) was developed to predefine the timestamps for Kubernetes or machine image version classifications in CloudProfiles.

- 💡 Gardener SLIs: Shoot Cluster Creation/Deletion Times: Metrics for shoot cluster creation and deletion times were exposed, improving observability in end-to-end testing.

- 🛡️ Enhanced SeedAuthorizer With Label/Field Selectors: The SeedAuthorizer was upgraded to enforce label/field selectors, restricting gardenlet access to specific Shoot resources.

- 🔑 Bring Your Own ETCD Encryption Key via Key Management Systems: Users can now manage the encryption key for ETCD of shoot clusters using external key management systems like Vault or AWS KMS.

- ⚖️ Load Balancing for Calls to kube-apiserver: Scalability and load balancing of requests to kube-apiserver were improved by leveraging Istio.

- 🪴 Validate PoC For In-Place Node Updates Of Shoot Clusters: A proof-of-concept for in-place updates of Kubernetes minor versions and machine image versions in shoot clusters was validated.

- 🚀 Prevent Pod Scheduling Issues Due To Overscaling: The issue of the Vertical Pod Autoscaler recommending resource requirements beyond the allocatable resources of the largest nodes was addressed.

- 💪🏻 Prevent Multiple systemd Unit Restarts On Reconciliation Errors: The reconciliation process of gardener-node-agent was improved to prevent multiple restarts of systemd units.

- 🤹♂️ Trigger Nodes Rollout Individually per Worker Pool During Credentials Rotation: More control over the rollout of worker nodes during shoot cluster credentials rotation was introduced.

- ⛓️💥 E2E Test Skeleton For Autonomous Shoot Clusters: The e2e test infrastructure for managing autonomous shoot clusters was established.

- ⬆️ Deploy Prow Via Flux: Prow, Gardener’s CI and automation system, was deployed using Flux, a cloud-native solution for continuous delivery based on GitOps.

- 🚏 Replace TopologyAwareHints With ServiceTrafficDistribution: TopologyAwareHints were replaced with ServiceTrafficDistribution, eliminating custom code in Gardener.

- 🪪 Support More Use-Cases For TokenRequestor: The injection of the current CA bundle into access secrets was enabled, supporting more use cases.

- 🫄 cluster-autoscaler’s ProvisioningRequest API: The ProvisioningRequest API in cluster-autoscaler was introduced, allowing users to provision new nodes or check if a pod would fit in the existing cluster without scaling up.

- 👀 Watch ManagedResources In Shoot Care Controller: A watch for ManagedResources in the Shoot care controller was introduced, re-evaluating health checks immediately when relevant conditions change.

- 🐢 Cluster API Provider For Gardener: Support for the Cluster API was added to Gardener, allowing shoot clusters to be deployed and deleted via the Cluster API.

- 👨🏼💻 Make cluster-autoscaler Work In Local Setup: The cluster-autoscaler was made to work in the local setup by setting the nodeTemplate in the MachineClass, which lets the cluster-autoscaler get the resource capacity of the nodes.

- 🧹 Use Structured Authorization In Local KinD Cluster: Structured Authorization was used to enable the SeedAuthorizer in the local KinD clusters, speeding up cluster creation.

- 🧹 Drop Internal Versions From Component Configuration APIs: The internal version of component configurations was removed, reducing maintenance effort during development.

- 🐛 Fix Non-Functional Shoot Node Logging In Local Setup: The shoot node logging in the local development setup was fixed.

- 🧹 No Longer Generate Empty Secret For reconcile OperatingSystemConfigs: The generation of an empty Secret for reconcile OperatingSystemConfigs was stopped.

- 🖥️ Generic Monitoring Extension: The requirements for externalizing the monitoring aspect of Gardener were discussed.

These outcomes reflect the ongoing progress and collaborative spirit of the Gardener community. We’re eager to see what the next Hackathon will bring. Keep an eye out for more updates on the Gardener project!

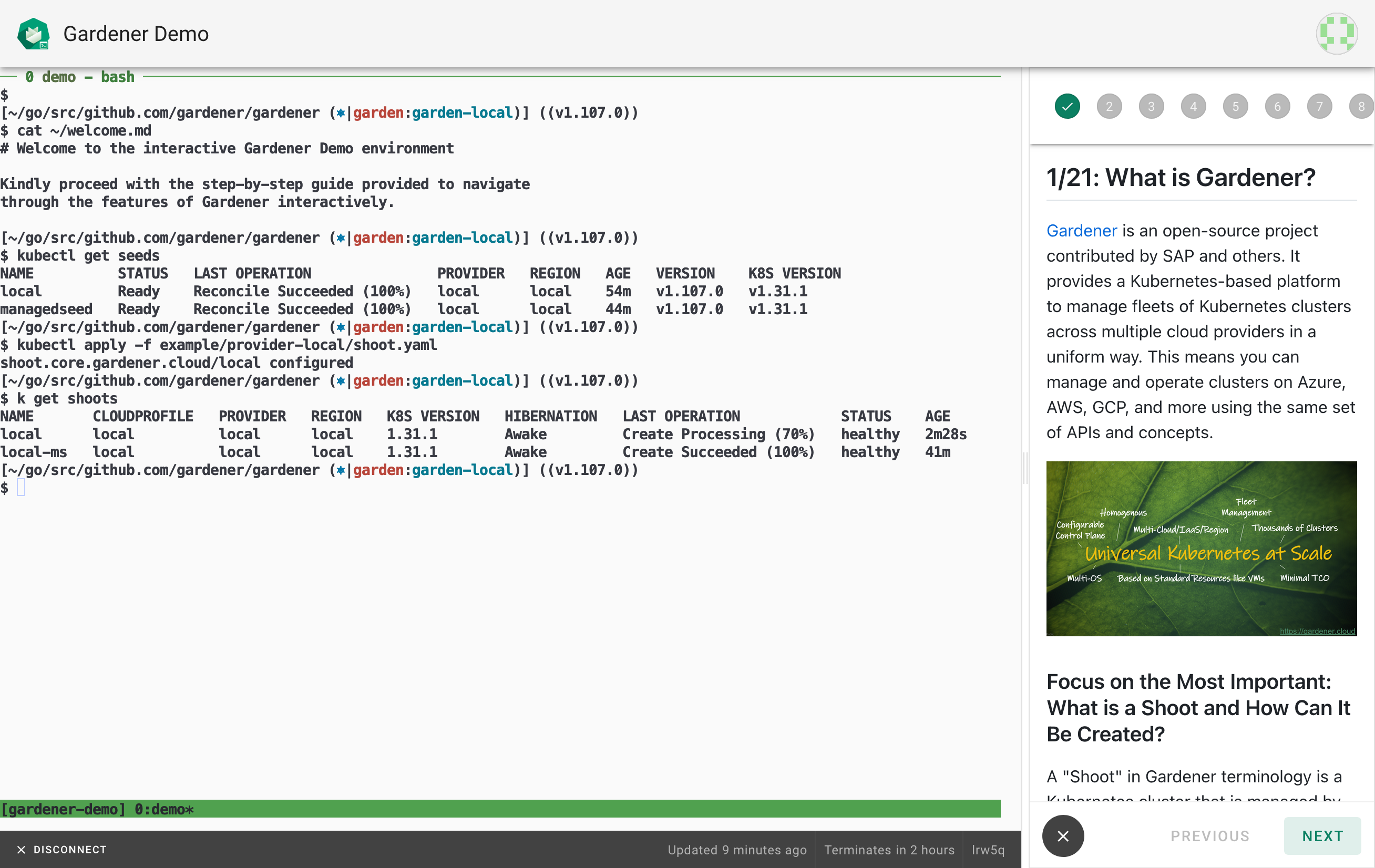

Introducing the New Gardener Demo Environment: Your Hands-On Playground for Kubernetes Management

We’re thrilled to announce the launch of our new Gardener demo environment! This interactive playground is designed to provide you with a hands-on experience of Gardener, our open-source project that offers a Kubernetes-based solution for managing Kubernetes clusters across various cloud providers uniformly.

Why a Demo Environment?

We understand that the best way to learn is by doing. That’s why we’ve created this demo environment. It’s a space where you can experiment with Gardener, explore its features, and see firsthand how it can simplify your Kubernetes operations - all without having to install anything on your local machine.

Easy Access, Quick Learning

Getting started is as simple as logging in with your GitHub account. Once you’re in, you’ll have access to a terminal session running a pre-configured Gardener system (based on the local setup which you can also install on your machine if preferred). This means you can dive right in and start creating and managing Kubernetes clusters. Whether you’re a seasoned Kubernetes user or just starting your Kubernetes journey, this demo environment is a great way to learn about Gardener. It’s designed to be intuitive and interactive, helping you learn at your own pace.

Discover the Power of Gardener

Gardener is all about making Kubernetes management easier and more efficient. With its ability to manage Kubernetes clusters homogeneously across different cloud providers, it’s a powerful tool that can optimize your resources, improve performance, and enhance the resilience of your Kubernetes workloads. But don’t just take our word for it - try it out for yourself! The Gardener demo environment is your playground to discover the power of Gardener.

Start Your Gardener Journey Today

We’re excited to see what you’ll create with Gardener. Log in with your GitHub account and start your hands-on Gardener journey today. Happy exploring!

PromCon EU 2024 Highlights

Overview

Many innovative observability and application performance management (APM) products and services were released over the last few years. They often adopt or enhance concepts that Prometheus invented more than a decade ago. However, Prometheus, as an open-source project, has never lost its importance in this fast-moving industry and is the core of Gardener’s monitoring stack.

On September 11th and 12th, Prometheus developers and users met for PromCon EU 2024 in Berlin. The single-track conference provided a good mix of talks about the latest Prometheus development and governance by many core contributors, as well as users who shared their experiences with monitoring in a cloud-native environment. The overarching topic of the event was the upcoming Prometheus 3.0 release. Many of the presented innovations will also be interesting for the future of Gardener’s observability stack. We will take a closer look at the changes in the Prometheus and Perses projects in the remainder of this article.

Prometheus 3.0 - OpenTelemetry Everywhere

A first beta version of Prometheus 3.0 was released during the first day of the conference. The main focus of the new major release is compatibility with OpenTelemetry and full support for metric ingestion using the OpenTelemetry protocol (OTLP). While OpenTelemetry and Prometheus metrics have many things in common, a lot of incompatibilities still need to be sorted out.

One of the differences can be found in the naming conventions for metrics and label or attribute names. Instead of simply replacing dots with underscores, the Prometheus developers decided to introduce full UTF-8 support to achieve the best possible compatibility. This plan brought up interesting syntactical challenges for PromQL queries, as well as a demand for conversion when interacting with systems that still have the restrictions of previous Prometheus releases.
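To make the conversion concern concrete, here is a minimal sketch of mapping a dotted, OpenTelemetry-style name onto the character set that earlier Prometheus releases accept for metric names (`[a-zA-Z_:][a-zA-Z0-9_:]*`). The helper name `legacy_metric_name` and the sample names are hypothetical, and this is not the translation code that Prometheus itself ships. It only illustrates the kind of lossy rewriting that systems without UTF-8 support still require.

```shell
# Hypothetical helper: rewrite a metric name so that it only contains
# characters allowed by the legacy pattern [a-zA-Z_:][a-zA-Z0-9_:]*.
legacy_metric_name() {
  # 1) replace every disallowed character with an underscore
  # 2) prefix an underscore if the name would start with a digit
  printf '%s\n' "$1" | sed -E 's/[^a-zA-Z0-9_:]/_/g; s/^([0-9])/_\1/'
}

legacy_metric_name "http.server.request.duration"  # prints http_server_request_duration
legacy_metric_name "5xx.count"                     # prints _5xx_count
```

Note that the mapping is not reversible: `a.b` and `a_b` collapse to the same legacy name, which is one reason why keeping the original UTF-8 name is attractive.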

The development of native histograms in Prometheus has been ongoing for a while. The more efficient way to represent histograms also contributes to OpenTelemetry compatibility.

Prometheus traditionally uses labels to enrich a time series with metadata, whereas OpenTelemetry distinguishes between metric attributes and resource attributes. The new release contains a new info() PromQL function to ease the enrichment with resource attributes, and the development of a new metadata store has been initiated to improve the experience further in the long term.

A new UI makes the creation of ad-hoc queries easier and provides better feedback about the structure of a query and possible mistakes to the user.

The Prometheus project has reached a high level of maturity after more than 12 years of development by an active community of contributors. To ensure that the community can continue growing, a new governance model was proposed, including clearly defined roles and a new steering committee.

Perses - The New Kid in The Sandbox

Perses was of particular interest to us working on monitoring at Gardener. As you may know, Gardener is currently using Plutono, a fork of Grafana’s most recent Apache-licensed version. This was introduced in g/g#7318 over a year ago and intended as a stop-gap solution. Since then, we have been interested in finding a more sustainable solution, as we’re cut off from the enhancements to Grafana. If you’ve ever made a change to a dashboard in Gardener, you’ll know that there are some pain points in working with Plutono.

Therefore, we were especially looking forward to a talk on Perses, an open-source dashboard solution that recently joined the CNCF as a sandbox project. Driven by development from Amadeus, Chronosphere, and Red Hat OpenShift, Perses already offers a variety of dashboard panels, supports dashboards-as-code workflows, and has data-source plugins for metrics and distributed traces. It also brings tooling to convert Grafana dashboards to Perses, which also works for Plutono!

Adjacent to the Perses project itself is a Perses operator, which enables deployment of Perses on Kubernetes using Custom Resource Definitions (CRDs). This is not a new concept: we already use the Prometheus operator in Gardener and would like to take the same approach for Perses.

One of the areas in which Perses is still lacking is the ability to visualize logs, a key feature of Plutono. With work on the plugin architecture continuing, we hope to be able to work on this feature in the not-too-distant future. We are excited to see how Perses will develop and are looking forward to contributing to the project.

Conclusion

PromCon EU 2024 brought together open-source developers, commercial vendors, and users of observability tools. The event provided excellent learning opportunities during many high-quality talks and the chance to network with peers from the community while enjoying a BBQ after the first conference day. The large and healthy Prometheus community shows that open-source observability tools can coexist with commercial solutions.

Gardener at KubeCon + CloudNativeCon North America 2024

KubeCon + CloudNativeCon NA is just around the corner, taking place this year amidst the stunning backdrop of the Rocky Mountains in Salt Lake City, Utah.

This year, we’re thrilled to announce that the Gardener open-source project will have its own booth at the event. If you’re passionate about multi-cloud Kubernetes clusters that are optimized for low TCO and built with enterprise-grade features, then Gardener should be on your must-visit list at KubeCon.

Why You Should Visit the Gardener Booth

The Gardener team will be presenting several features and enhancements that demonstrate the project’s continuous progress. Here are some highlights you’ll be able to experience first-hand:

Extensible and Automated Kubernetes Cluster Management: Learn how Gardener simplifies and automates Kubernetes cluster management across various infrastructures, allowing for consistent and reliable deployments.

Hosted Control Planes with Enhanced Security: Get insights into Gardener’s security features for hosted control planes. Discover how Gardener ensures robust isolation, scalability, and performance while keeping security at the forefront.

Multi-Cloud Strategy: Gardener has been built with multi-cloud in mind. Whether you’re deploying clusters across AWS, Azure, Google Cloud, or on-prem, Gardener has the flexibility to manage them all seamlessly.

etcd Cluster Operator: Explore our advancements with our in-house operator etcd-druid, a key component responsible for managing etcd instances of Gardener’s hosted control planes, ensuring the stability and performance of Kubernetes clusters.

Latest Project Developments and Roadmap: Stay ahead of the curve by getting a sneak peek at the latest updates, ongoing enhancements, and future plans for Gardener.

Software Lifecycle Management with Open Component Model (OCM): Learn how OCM supports compliant and secure software lifecycle management, and how it can help streamline processes across various projects and environments.

Meet the Gardener Team

One of the best parts of KubeCon is the opportunity to meet the community behind the projects. At the Gardener booth, you’ll have the chance to connect directly with the maintainers who are driving innovation in Kubernetes cluster management. Whether you’re new to the project or already using Gardener in production, our team will be there to answer questions, provide demos, and discuss best practices.

See You in Salt Lake City!

Don’t miss the chance to explore how Gardener can streamline your Kubernetes management operations. Visit us at KubeCon + CloudNativeCon North America 2024. Our booth will be located at R39. We look forward to seeing you there!

Innovation Unleashed: A Deep Dive into the 5th Gardener Community Hackathon

The Gardener community recently concluded its 5th Hackathon, a week-long event that brought together multiple companies to collaborate on common topics of interest. The Hackathon, held at Schlosshof Freizeitheim in Schelklingen, Germany, was a testament to the power of collective effort and open-source, producing a tremendous number of results in a short time and moving the Gardener project forward with innovative solutions.

A Week of Collaboration and Innovation

The Hackathon addressed a wide range of topics, from improving the maturity of the Gardener API to harmonizing development setups and automating additional preparation tasks for Gardener installations. The event also saw the introduction of new resources and configurations, the rewriting of VPN components from Bash to Golang, and the exploration of a Tailscale-based VPN to secure shoot clusters.

Key Achievements

- 🗃️ OCI Helm Release Reference for ControllerDeployment: The Hackathon introduced the core.gardener.cloud/v1 API, which supports OCI repository-based Helm chart references. This innovation reduces operational complexity and enables reusability for other scenarios.

- 👨🏼💻 Local gardener-operator Development Setup with gardenlet: A new Skaffold configuration was created to harmonize the development setups for Gardener. This configuration deploys gardener-operator and its Garden CRD together with a deployment of gardenlet to register a seed cluster, allowing for a full-fledged Gardener setup.

- 👨🏻🌾 Extensions for Garden Cluster via gardener-operator: The Hackathon focused on automating additional preparation tasks for Gardener installations. The Garden controller was augmented to deploy extensions as part of its reconciliation flow, reducing operational complexity.

- 🪄 Gardenlet Self-Upgrades for Unmanaged Seeds: A new Gardenlet resource was introduced, allowing for the specification of deployment values and component configurations. A new controller within gardenlet watches these resources and updates the gardenlet’s Helm chart and configuration accordingly, effectively implementing self-upgrades.

- 🦺 Type-Safe Configurability in OperatingSystemConfig: The Hackathon improved the configurability of the OperatingSystemConfig for containerd, DNS, NTP, etc. The OperatingSystemConfig API was augmented to support containerd-config related use-cases.

- 👮 Expose Shoot API Server in Tailscale VPN: The Hackathon explored the use of a Tailscale-based VPN to secure shoot clusters. A document was compiled explaining how shoot owners can expose their API server within a Tailscale VPN.

- ⌨️ Rewrite gardener/vpn2 from Bash to Golang: The Hackathon improved the VPN components by rewriting them in Golang. All functionality was successfully rewritten, and the pull requests have been opened for gardener/vpn2 and the integration into gardener/gardener.

- 🕳️ Pure IPv6-Based VPN Tunnel: The Hackathon addressed the restriction of the VPN network CIDR by switching the VPN tunnel to a pure IPv6-based network (follow-up of gardener/gardener#9597). This allows for more flexibility in network design.

- 👐 Harmonize Local VPN Setup with Real-World Scenario: The Hackathon aimed to align the local VPN setup with real-world scenarios regarding the VPN connection. provider-local was augmented to dynamically create Calico’s IPPool resources to emulate the real-world networking situation.

- 🐝 Support Cilium v1.15+ for HA Shoots: The Hackathon addressed the issue of Cilium v1.15+ not considering StatefulSet labels in NetworkPolicy resources. A prototype was developed to make the Service resources for vpn-seed-server headless.

- 🍞 Compression for ManagedResource Secrets: The Hackathon focused on reducing the size of the Secrets related to ManagedResources by leveraging the Brotli compression algorithm. This reduces network I/O and related costs, improving scalability and reducing load on the ETCD cluster.

- 🚛 Making Shoot Flux Extension Production-Ready: The Hackathon aimed to promote the Flux extension to “production-ready” status. Features such as reconciliation sync mode and the option to provide additional Secret resources were added.

- 🧹 Move machine-controller-manager-provider-local Repository into gardener/gardener: The Hackathon focused on moving the machine-controller-manager-provider-local repository content into the gardener/gardener repository. This simplifies maintenance and development tasks.

- 🗄️ Stop Vendoring Third-Party Code in OS Extensions: The Hackathon aimed to avoid vendoring third-party code in the OS extensions. Two out of the four OS extensions have been adapted.

- 📦 Consider Embedded Files for Local Image Builds: The Hackathon addressed the issue that changes to embedded files don’t lead to automatic rebuilds of the Gardener images by Skaffold for local development. The related hack script was augmented to detect embedded files and make them part of the list of dependencies.

Note that a significant portion of the above topics built on the achievements of previous Hackathons. This continuity, with each Hackathon building on the results of the last, is a testament to the power of sustained collaborative effort.

Looking Ahead

As we look towards the future, the Gardener community is already gearing up for the next Hackathon slated for the end of 2024. The anticipation is palpable, as these events have consistently proven to be a hotbed of creativity, innovation, and collaboration. The 5th Gardener Community Hackathon has once again demonstrated the remarkable outcomes that can be achieved when diverse minds unite to work on shared interests. The event has not only yielded an impressive array of results in a short span but has also sparked innovations that promise to propel the Gardener project to new heights. The community eagerly awaits the next Hackathon, ready to tackle new challenges and continue the journey of innovation and growth.

Gardener's Registry Cache Extension: Another Cost Saving Win and More

Use Cases

On every Kubernetes Node, the container runtime pulls the container images referenced in the specifications of the Pods running on that Node. Although these container images are cached on the Node’s file system after the initial pull operation, there are imperfections with this setup.

New Nodes are often created due to events such as auto-scaling (scale up), rolling updates, or replacements of unhealthy Nodes. A new Node would need to pull the images running on it from the container registry because the Node’s cache is initially empty. Pulling an image from a registry incurs network traffic and registry costs.

To reduce network traffic and registry costs for your Shoot cluster, it is recommended to enable Gardener’s Registry Cache extension, which runs a registry as a pull-through cache in the Shoot cluster.

The benefits of a pull-through cache are not limited to cost savings. A pull-through cache also makes the Kubernetes cluster more resilient to failures of the upstream registry, such as outages and rate limiting.

Solution

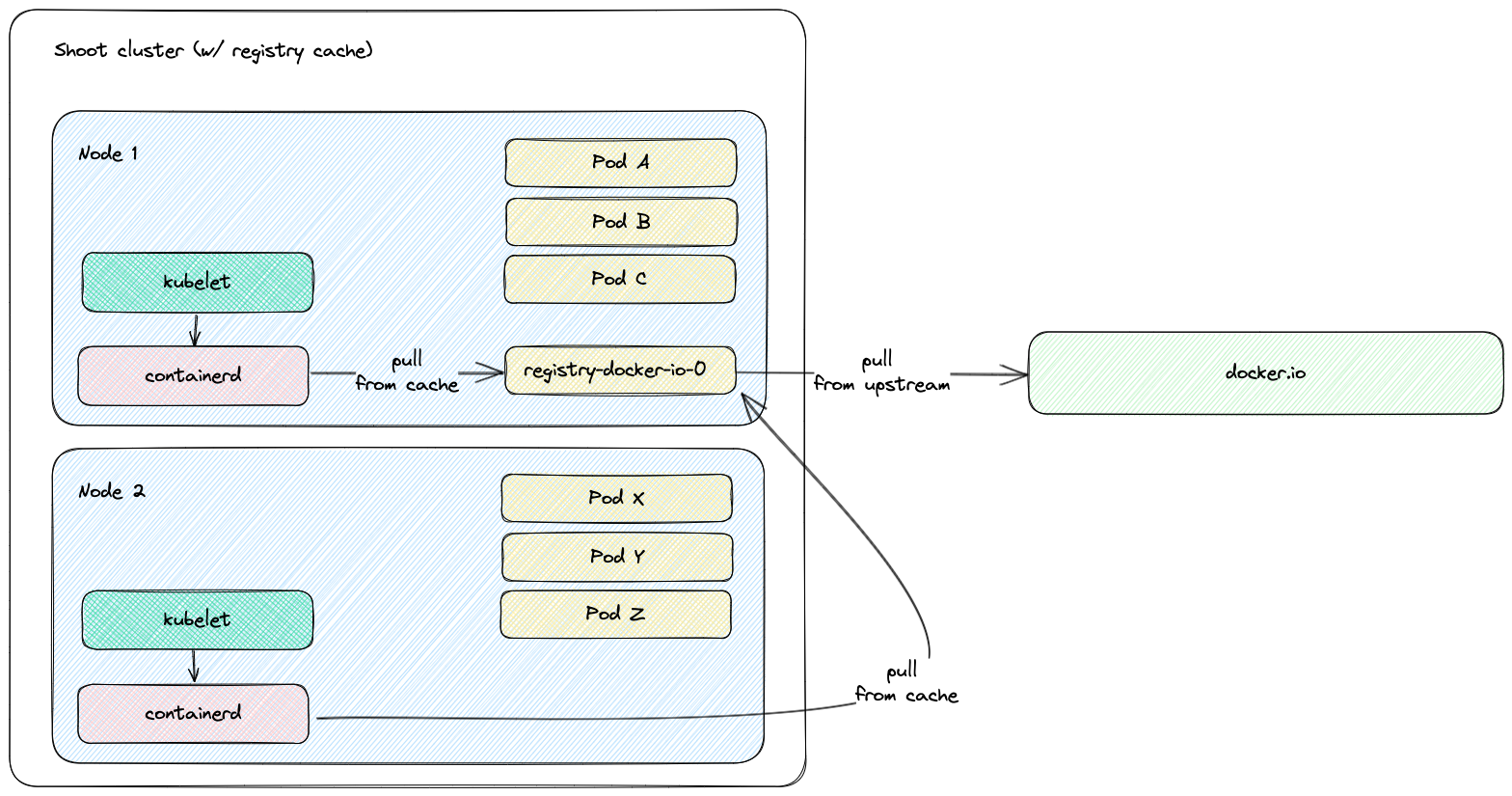

Gardener’s Registry Cache extension deploys and manages a pull-through cache registry in the Shoot cluster.

A pull-through cache registry is a registry that caches container images in its storage. The first time when an image is requested from the pull-through cache, it pulls the image from the upstream registry, returns it to the client, and stores it in its local storage. On subsequent requests for the same image, the pull-through cache serves the image from its storage, avoiding network traffic to the upstream registry.

Imagine that you have a DaemonSet in your Kubernetes cluster. In a cluster without a pull-through cache, every Node must pull the same container image from the upstream registry. In a cluster with a pull-through cache, the image is pulled once from the upstream registry and served later for all Nodes.

A Shoot cluster setup with a registry cache for Docker Hub (docker.io).
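The DaemonSet scenario can be sketched as a toy simulation. The script below does not contact any registry: a temporary directory stands in for the cache’s storage, and the function name `pull_through`, the node names, and the image reference are all illustrative assumptions. It only demonstrates the counting argument, namely that several Nodes requesting the same image cause a single upstream pull.

```shell
# Toy simulation of a pull-through cache; no real registry is involved.
cache_dir="$(mktemp -d)"          # stands in for the cache's local storage
trap 'rm -rf "$cache_dir"' EXIT   # clean up the temporary directory on exit
upstream_pulls=0                  # counts simulated pulls from the upstream registry

pull_through() {
  # Turn the image reference into a flat file name (replace "/" and ":").
  image_key="$(printf '%s' "$1" | tr '/:' '__')"
  if [ ! -e "$cache_dir/$image_key" ]; then
    upstream_pulls=$((upstream_pulls + 1))   # cache miss: fetch from upstream once
    : > "$cache_dir/$image_key"              # remember the image in the cache
  fi
  # Cache hit or freshly stored: the image is served from the cache.
}

# Three Nodes running the same DaemonSet Pod request the same image.
for node in node-1 node-2 node-3; do
  pull_through "docker.io/library/nginx:1.25"
done

echo "upstream pulls with cache: $upstream_pulls"   # prints 1; without a cache it would be 3
```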

Cost Considerations

An image pull represents ingress traffic for a virtual machine (data enters the system from outside) and egress traffic for the upstream registry (data leaves the system).

Ingress traffic from the internet to a virtual machine is free of charge on AWS, GCP, and Azure. However, the cloud providers charge NAT gateway costs for inbound and outbound data processed by the NAT gateway based on the processed data volume (per GB). The container registry offerings on the cloud providers charge for egress traffic - again, based on the data volume (per GB).

Having all of this in mind, the Registry Cache extension reduces NAT gateway costs for the Shoot cluster and container registry costs.
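As a rough back-of-the-envelope illustration, the calculation below compares the data volume charged with and without a cache. Every number in it is a made-up placeholder rather than a real cloud price, the helper name `monthly_cost` is hypothetical, and the model is deliberately simplified: it assumes one image, a cache that never evicts it, and a single combined per-GB price for NAT gateway processing and registry egress.

```shell
# All values are illustrative placeholders, not real prices or measurements.
image_size_gb=1           # size of the container image
new_nodes_per_month=200   # Nodes created by scale-ups, rollouts, and replacements
price_per_gb=0.05         # hypothetical combined NAT gateway + registry egress price (USD/GB)

# monthly_cost <pulled GB> <price per GB>: prints the cost with two decimals.
monthly_cost() {
  awk -v gb="$1" -v price="$2" 'BEGIN { printf "%.2f\n", gb * price }'
}

# Without a cache, every new Node pulls the image through the NAT gateway.
without_cache="$(monthly_cost "$((image_size_gb * new_nodes_per_month))" "$price_per_gb")"
# With a cache, the image crosses the NAT gateway once.
with_cache="$(monthly_cost "$image_size_gb" "$price_per_gb")"

printf 'without cache: $%s per month\nwith cache:    $%s per month\n' "$without_cache" "$with_cache"
```

With these placeholder values the script reports $10.00 versus $0.05 per month. The absolute figures are meaningless, but the ratio shows how the savings scale with the number of new Nodes.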

Try It Out!

We encourage you to try it! As a Gardener user, you can reduce your infrastructure costs and increase resilience by enabling the Registry Cache for your Shoot clusters. The Registry Cache extension is a great fit for long-running Shoot clusters with a high image pull rate.

For more information, refer to the Registry Cache extension documentation!
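For orientation, enabling the extension amounts to adding an entry to the Shoot’s spec. The snippet below writes such a fragment to a temporary file so that it can be reviewed and merged into a Shoot manifest. The API version, the field names, and the 10Gi volume size are assumptions based on the extension’s documentation at the time of writing, so verify them against the current Registry Cache documentation before use.

```shell
# Write an illustrative Shoot spec fragment to a temporary file for review.
fragment="$(mktemp)"
trap 'rm -f "$fragment"' EXIT

cat > "$fragment" <<'EOF'
spec:
  extensions:
  - type: registry-cache
    providerConfig:
      # Assumption: the API version may differ in your extension release.
      apiVersion: registry.extensions.gardener.cloud/v3
      kind: RegistryConfig
      caches:
      - upstream: docker.io   # cache images pulled from Docker Hub
        volume:
          size: 10Gi          # assumption: example size of the cache volume
EOF

cat "$fragment"
```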

SpinKube on Gardener - Serverless WASM on Kubernetes

With the rising popularity of WebAssembly (WASM) and WebAssembly System Interface (WASI) comes a variety of integration possibilities. WASM is no longer suitable only for the browser, but can also be used for running workloads on the server. In this post we will explore how you can get started writing serverless applications powered by SpinKube on a Gardener Shoot cluster. This post is inspired by a similar tutorial that goes through the steps of Deploying the Spin Operator on Azure Kubernetes Service. Keep in mind that this post does not aim to define a production environment. It is meant to show that Gardener Shoot clusters are able to run WebAssembly workloads, giving users the chance to experiment and explore this cutting-edge technology.

Prerequisites

- kubectl - the Kubernetes command line tool

- helm - the package manager for Kubernetes

- A running Gardener Shoot cluster

Gardener Shoot Cluster

For this showcase I am using a Gardener Shoot cluster on AWS infrastructure with nodes powered by Garden Linux, although the steps should be applicable for other infrastructures as well, since Gardener aims to provide a homogeneous Kubernetes experience.

As a prerequisite for next steps, verify that you have access to your Gardener Shoot cluster.

# Verify the access to the Gardener Shoot cluster

kubectl get ns

NAME STATUS AGE

default Active 4m1s

kube-node-lease Active 4m1s

kube-public Active 4m1s

kube-system Active 4m1s

If you have trouble accessing the Gardener Shoot cluster, please consult the Accessing Shoot Clusters documentation page.

Deploy the Spin Operator

As a first step, we will install the Spin Operator Custom Resource Definitions and the Runtime Class needed by wasmtime-spin-v2.

# Install Spin Operator CRDs

kubectl apply -f https://github.com/spinkube/spin-operator/releases/download/v0.1.0/spin-operator.crds.yaml

# Install the Runtime Class

kubectl apply -f https://github.com/spinkube/spin-operator/releases/download/v0.1.0/spin-operator.runtime-class.yaml

Next, we will install cert-manager, which is required for provisioning TLS certificates used by the admission webhook of the Spin Operator. If you face issues installing cert-manager, please consult the cert-manager installation documentation.

# Add and update the Jetstack repository

helm repo add jetstack https://charts.jetstack.io

helm repo update

# Install the cert-manager chart along with the CRDs needed by cert-manager

helm install \
  cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --version v1.14.4 \
  --set installCRDs=true

In order to install the containerd-wasm-shim on the Kubernetes nodes we will use the kwasm-operator. There is also a successor of kwasm-operator - runtime-class-manager which aims to address some of the limitations of kwasm-operator and provide a production grade implementation for deploying containerd shims on Kubernetes nodes. Since kwasm-operator is easier to install, for the purpose of this post we will use it instead of the runtime-class-manager.

# Add the kwasm helm repository

helm repo add kwasm http://kwasm.sh/kwasm-operator/

helm repo update

# Install KWasm operator

helm install \
  kwasm-operator kwasm/kwasm-operator \
  --namespace kwasm \
  --create-namespace \
  --set kwasmOperator.installerImage=ghcr.io/spinkube/containerd-shim-spin/node-installer:v0.13.1

# Annotate all nodes in the cluster so kwasm can select them and provision the required containerd shim

kubectl annotate node --all kwasm.sh/kwasm-node=true

We can see that a pod has started and completed in the kwasm namespace.

kubectl -n kwasm get pod

NAME READY STATUS RESTARTS AGE

ip-10-180-7-60.eu-west-1.compute.internal-provision-kwasm-qhr8r 0/1 Completed 0 8s

kwasm-operator-6c76c5f94b-8zt4s 1/1 Running 0 15s

The logs of the kwasm-operator also indicate that the node was provisioned with the required shim.

kubectl -n kwasm logs kwasm-operator-6c76c5f94b-8zt4s

{"level":"info","node":"ip-10-180-7-60.eu-west-1.compute.internal","time":"2024-04-18T05:44:25Z","message":"Trying to Deploy on ip-10-180-7-60.eu-west-1.compute.internal"}

{"level":"info","time":"2024-04-18T05:44:31Z","message":"Job ip-10-180-7-60.eu-west-1.compute.internal-provision-kwasm is still Ongoing"}

{"level":"info","time":"2024-04-18T05:44:31Z","message":"Job ip-10-180-7-60.eu-west-1.compute.internal-provision-kwasm is Completed. Happy WASMing"}

Finally, we can deploy the spin-operator along with a shim-executor.

helm install spin-operator \
  --namespace spin-operator \
  --create-namespace \
  --version 0.1.0 \
  --wait \
  oci://ghcr.io/spinkube/charts/spin-operator

kubectl apply -f https://github.com/spinkube/spin-operator/releases/download/v0.1.0/spin-operator.shim-executor.yaml

Deploy a Spin App

Let’s deploy a sample Spin application using the following command:

kubectl apply -f https://raw.githubusercontent.com/spinkube/spin-operator/main/config/samples/simple.yaml
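For orientation, the sample applied above is a small SpinApp resource. The sketch below writes an approximation of it to a temporary file so that it can be inspected or adapted before applying. The API version, the image reference, and the field values are assumptions based on the sample as it looked around the v0.1.0 release, and the file on the main branch may have changed since.

```shell
# Write an approximation of the sample SpinApp manifest to a temporary file.
manifest="$(mktemp)"
trap 'rm -f "$manifest"' EXIT

cat > "$manifest" <<'EOF'
apiVersion: core.spinoperator.dev/v1alpha1
kind: SpinApp
metadata:
  name: simple-spinapp
spec:
  # Assumption: example image; replace it with your own Spin application image.
  image: "ghcr.io/spinkube/containerd-shim-spin/examples/spin-rust-hello:v0.13.0"
  replicas: 1
  # Name of the SpinAppExecutor installed together with the spin-operator.
  executor: containerd-shim-spin
EOF

cat "$manifest"
# To use it instead of the remote sample: kubectl apply -f "$manifest"
```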

After the SpinApp custom resource has been picked up by the spin-operator, a pod will be created running the sample application. Let’s explore its logs.

kubectl logs simple-spinapp-56687588d9-nbrtq

Serving http://0.0.0.0:80

Available Routes:

hello: http://0.0.0.0:80/hello

go-hello: http://0.0.0.0:80/go-hello

We can see the available routes served by the application. Let’s port forward to the application service and test them out.

kubectl port-forward services/simple-spinapp 8000:80

Forwarding from 127.0.0.1:8000 -> 80

Forwarding from [::1]:8000 -> 80

In another terminal, we can verify that the application returns a response.

curl http://localhost:8000/hello

Hello world from Spin!

This lays the groundwork for further experimentation and testing. The capabilities and API that the SpinApp CRD provides can be explored through the SpinApp CRD reference.

Cleanup

Let’s clean up all the resources deployed so far.

# Delete the spin app and its executor

kubectl delete spinapp simple-spinapp

kubectl delete spinappexecutors.core.spinoperator.dev containerd-shim-spin

# Uninstall the spin-operator chart

helm -n spin-operator uninstall spin-operator

# Remove the kwasm.sh/kwasm-node annotation from nodes

kubectl annotate node --all kwasm.sh/kwasm-node-

# Uninstall the kwasm-operator chart

helm -n kwasm uninstall kwasm-operator

# Uninstall the cert-manager chart

helm -n cert-manager uninstall cert-manager

# Delete the runtime class and SpinApp CRDs

kubectl delete runtimeclass wasmtime-spin-v2

kubectl delete crd spinappexecutors.core.spinoperator.dev

kubectl delete crd spinapps.core.spinoperator.dev

Conclusion

In my opinion, WASM on the server is here to stay. Communities are expressing more and more interest in integrating Kubernetes with WASM workloads. As shown, Gardener clusters are perfectly capable of supporting this use case. This setup is a great way to start exploring the capabilities that WASM can bring to the server. As stated in the introduction, bear in mind that this post does not define a production environment, but is rather meant to define a playground suitable for exploring and trying out ideas.

KubeCon / CloudNativeCon Europe 2024 Highlights

KubeCon + CloudNativeCon Europe 2024, recently held in Paris, was a testament to the robustness of the open-source community and its pivotal role in driving advancements in AI and cloud-native technologies. With a record attendance of over 12,000 participants, the conference underscored the ubiquity of cloud-native architectures and the business opportunities they provide.

AI Everywhere

LLMs and GenAI took center stage at the event, with discussions on challenges such as security, data management, and energy consumption. A popular quote stated, “If #inference is the new web application, #kubernetes is the new web server”. The conference emphasized the need for more open data models for AI to democratize the technology. Cloud-native platforms offer advantages for AI innovation, such as packaging models and dependencies as Docker packages and enhancing resource management for proper model execution. The community is exploring AI workload management, including using CPUs for inferencing and preprocessing data before handing it over to GPUs. CNCF took the initiative and put together an AI whitepaper outlining the apparent synergy between cloud-native technologies and AI.

Cluster Autopilot

The conference showcased popular projects in the cloud-native ecosystem, including Kubernetes, Istio, and OpenTelemetry. Kubernetes was highlighted as a platform for running massive AI workloads. The UXL Foundation aims to enable multi-vendor AI workloads on Kubernetes, allowing developers to move AI workloads without being locked into a specific infrastructure. Every vendor we interacted with has assembled an AI-powered chatbot that performs various functions: assessing cluster health, analyzing cost efficiency, proposing workload optimizations, troubleshooting issues, and alerting on potential challenges with upcoming Kubernetes version upgrades. Sysdig went even further with a chatbot that answers the popular question, “Do any of my products have critical CVEs in production?” and analyzes the structure and configuration of workloads. Some chatbots leveraged the k8sgpt project, which joined the CNCF sandbox earlier this year.

Sophisticated Fleet Management

The ecosystem showcased maturity in observability, platform engineering, security, and optimization, which will help operationalize AI workloads. Data demands and costs were also in focus, touching on data observability and cloud-cost management. Cloud-native technologies, including those beyond Kubernetes, are expected to play a crucial role in managing the increasing volume of data and scaling AI. Google showcased fleet management in their Google Hosted Cloud offering (ex-Anthos). It allows teams and policies to be defined at the fleet level and then applied to all the Kubernetes clusters in the fleet, irrespective of the infrastructure they run on (GCP and beyond).

WASM Everywhere

The conference also highlighted the growing interest in WebAssembly (WASM) as a portable binary instruction format for executable programs and in its integration with Kubernetes. The topic kicked off with a dedicated WASM pre-conference day, the sessions of which are available in the following playlist. WASM is positioned as a smoother approach to software distribution and modularity, providing more lightweight runtime execution options and a lower barrier to entry for app developers.

Rust on the Rise

Several talks promoted Rust as an ideal programming language for cloud-native workloads. It was even presented as suitable for writing Kubernetes controllers.

Internal Developer Platforms

The event showcased the importance of Internal Developer Platforms (IDPs), both commercial and open-source, in facilitating the development process across all types of organizations – from Allianz to Mercedes. Backstage leads the pack by a large margin, with all relevant sessions at capacity. Much effort goes into the modularization of Backstage, which was also a notable highlight at the conference.

Sustainability

Sustainability was a key theme, with discussions on the role of cloud-native technologies in promoting green practices. The KubeCost team put a lot of effort into emphasizing the large amount of money wasted on unused cloud resources, which hyperscalers benefit from. In parallel, the kube-green project emphasized optimizing your cluster footprint to minimize CO2 emissions. The conference also highlighted the importance of open source in creating a level playing field for multiple players to compete, fostering diverse participation, and solving global challenges.

Customer Stories

In contrast to the Chicago KubeCon in 2023, the one in Paris featured multiple case studies, best practices, and reference scenarios. Many enterprises and their IT teams were well represented at KubeCon in terms of sessions, sponsorships, and participation. These companies strive to move forward, reaping the efficiency and flexibility benefits that cloud-native architectures provide. We came across multiple companies using Gardener as their Kubernetes management underlay, including FUGA Cloud, STACKIT, and metal-stack Cloud. We eagerly anticipate more companies embracing Gardener at future events. The consistent feedback from these companies has been overwhelmingly positive. They absolutely love using Gardener, and our shared excitement grows as the community thrives!

Notable Talks

Notable talks from leaders in the cloud-native world, including Solomon Hykes, Bob Wise, and representatives from KCP for Platforms and the United Nations, provided valuable insights into the future of AI and cloud-native technologies. All the talks are now uploaded to YouTube in the following playlist. These do not include the various pre-conference days, which are available as separate playlists by CNCF.

In Conclusion…

KubeCon 2024 showcased the intersection of AI and cloud-native technologies, the open-source community’s growth, and the cloud-native ecosystem’s maturity. Many enterprises are actively engaged there, innovating, experimenting, and growing their internal expertise. They’re using KubeCon as a recruiting event, expanding their internal talent pool and taking more of their internal operations and processes into their own hands. The event served as a platform for global collaboration, cross-company alignment, innovation, and the exchange of ideas, setting the stage for the future of cloud-native computing.