
2025

June

Getting Started with OpenTelemetry on a Gardener Shoot Cluster

In this blog post, we will explore how to set up an OpenTelemetry based observability stack on a Gardener shoot cluster. OpenTelemetry is an open-source observability framework that provides a set of APIs, SDKs, agents, and instrumentation to collect telemetry data from applications and systems. It provides a unified approach for collecting, processing, and exporting telemetry data such as traces, metrics, and logs. In addition, it gives flexibility in designing observability stacks, helping avoid vendor lock-in and allowing users to choose the most suitable tools for their use cases.

Here we will focus on setting up OpenTelemetry for a Gardener shoot cluster, collecting both logs and metrics and exporting them to various backends. We will use the OpenTelemetry Operator to simplify the deployment and management of OpenTelemetry collectors on Kubernetes and demonstrate some best practices for configuration including security and performance considerations.

Prerequisites

To follow along with this guide, you will need:

- A Gardener Shoot Cluster.

- kubectl configured to access the cluster.

- shoot-cert-service enabled on the shoot cluster, to manage TLS certificates for the OpenTelemetry Collectors and backends.

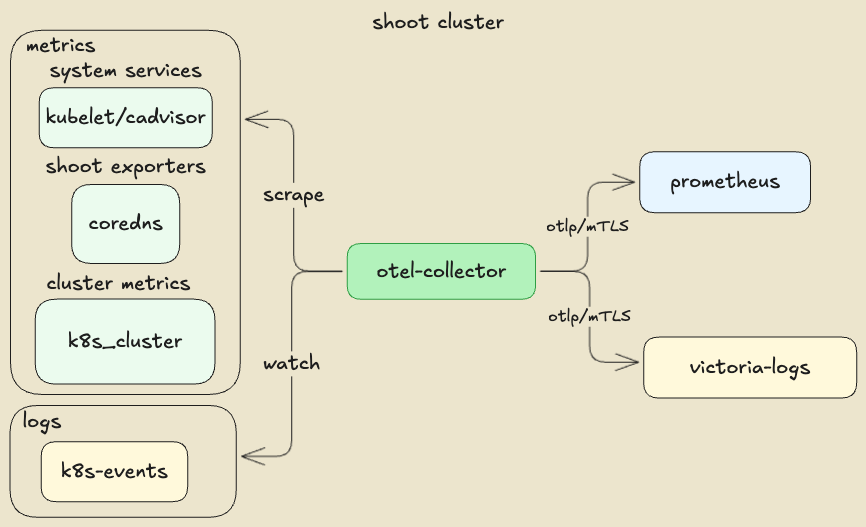

Component Overview of the Sample OpenTelemetry Stack

Setting Up a Gardener Shoot for mTLS Certificate Management

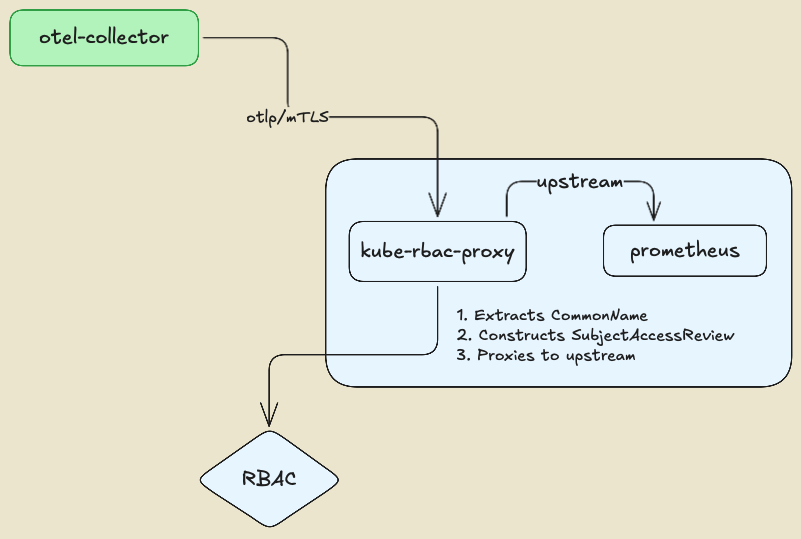

Here we use a self-managed mTLS architecture for illustration purposes. In a production environment, you would typically use a managed certificate authority (CA) or a service mesh to handle mTLS certificates and encryption. However, there might be cases where you want flexibility in authentication and authorization mechanisms, for example, by leveraging Kubernetes RBAC to determine whether a service is authorized to connect to a backend. In our illustration, we will use kube-rbac-proxy as a sidecar to the backends to enforce mTLS authentication and authorization. The kube-rbac-proxy is a reverse proxy that uses Kubernetes RBAC to control access to services, allowing us to define fine-grained access control policies.

The kube-rbac-proxy extracts the identity of the client (OpenTelemetry collector) from the CommonName (CN) field of the TLS certificate and uses it to perform authorization checks against the Kubernetes API server. This enables fine-grained access control policies based on client identity, ensuring that only authorized clients can connect to the backends.

First, set up the Issuer certificate in the Gardener shoot cluster, allowing you to later issue and manage TLS certificates for the OpenTelemetry collectors and the backends. To allow a custom issuer, the shoot cluster must be configured with the shoot-cert-service extension.

    kind: Shoot
    apiVersion: core.gardener.cloud/v1beta1
    metadata:
      name: my-shoot
      namespace: my-project
      ...
    spec:
      extensions:
        - type: shoot-cert-service
          providerConfig:
            apiVersion: service.cert.extensions.gardener.cloud/v1alpha1
            kind: CertConfig
            shootIssuers:
              enabled: true
      ...

Once the shoot is reconciled, the Issuer.cert.gardener.cloud resources will be available.

We can use openssl to create a self-signed CA certificate that will be used to sign the TLS certificates for the OpenTelemetry Collector and backends.

    openssl genrsa -out ./ca.key 4096
    openssl req -x509 -new -nodes -key ./ca.key -sha256 -days 365 -out ./ca.crt -subj "/CN=ca"

    # Create namespace and apply the CA secret and issuer
    kubectl create namespace certs \
      --dry-run=client -o yaml | kubectl apply -f -

    # Create the CA secret in the certs namespace
    kubectl create secret tls ca --namespace certs \
      --key=./ca.key --cert=./ca.crt \
      --dry-run=client -o yaml | kubectl apply -f -

Next, we will create the cluster Issuer resource, referencing the CA secret we just created.

    apiVersion: cert.gardener.cloud/v1alpha1
    kind: Issuer
    metadata:
      name: issuer-selfsigned
      namespace: certs
    spec:
      ca:
        privateKeySecretRef:
          name: ca
          namespace: certs

Later, we can create Certificate resources to securely connect the OpenTelemetry collectors to the backends.
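As a rough sketch of what such a Certificate could look like for a collector's client certificate, consider the fragment below. The resource name, the namespace, and the secret name are hypothetical and chosen only for illustration; the issuer reference points to the issuer-selfsigned Issuer created above, and the common name matches the user that kube-rbac-proxy later reads from the certificate.

```yaml
apiVersion: cert.gardener.cloud/v1alpha1
kind: Certificate
metadata:
  name: otel-collector-client        # hypothetical name
  namespace: observability           # hypothetical namespace
spec:
  # The CommonName is what kube-rbac-proxy treats as the client identity.
  commonName: client
  # Secret that will hold tls.crt, tls.key and ca.crt (hypothetical name).
  secretName: otel-collector-client-tls
  issuerRef:
    name: issuer-selfsigned
    namespace: certs
```

The resulting secret can then be mounted into the collector pod so that the exporter can present the client certificate to the backend.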

Setting Up the OpenTelemetry Operator

To deploy the OpenTelemetry Operator on your Gardener Shoot Cluster, we can use the project's Helm chart with a minimal configuration. The important part is to set the collector image to the latest contrib distribution image, which determines the set of receiver, processor, and exporter plugins that will be available in the OpenTelemetry collector instance. There are several pre-built distributions available, such as otelcol, otelcol-contrib, otelcol-k8s, otelcol-otlp, and otelcol-ebpf-profiler. For the purpose of this guide, we will use the otelcol-contrib distribution, which includes a wide range of plugins for various backends and data sources.

    manager:
      collectorImage:
        repository: "otel/opentelemetry-collector-contrib"

Setting Up the Backends (prometheus, victoria-logs)

Setting up the backends is a straightforward process. We will use plain resource manifests for illustration purposes, outlining the important parts that allow OpenTelemetry collectors to connect securely to the backends using mTLS. An important part is enabling the respective OTLP ingestion endpoints on the backends, which will be used by the OpenTelemetry collectors to send telemetry data. In a production environment, the lifecycle of the backends will probably be managed by the respective component’s operators.

Setting Up Prometheus (Metrics Backend)

Here is the complete list of manifests for deploying a single prometheus instance with the OTLP ingestion endpoint and a kube-rbac-proxy sidecar for mTLS authentication and authorization:

- Prometheus Certificate

  That is the serving certificate of the kube-rbac-proxy sidecar. The OpenTelemetry collector needs to trust the signing CA, hence we use the same Issuer we created earlier.

- Prometheus

  Prometheus needs to be configured to enable the OTLP ingestion endpoint: --web.enable-otlp-receiver. That allows the OpenTelemetry collector to push metrics to the Prometheus instance (via the kube-rbac-proxy sidecar).

- Prometheus Configuration

  In Prometheus’ case, the OpenTelemetry resource attributes usually set by the collectors can be used to determine labels for the metrics. This is illustrated in the collector’s prometheus receiver configuration. A common and unified set of labels across all metrics collected by the OpenTelemetry collector is a fundamental requirement for sharing and understanding the data across different teams and systems. This common set is defined by the OpenTelemetry Semantic Conventions specification. For example, k8s.pod.name, k8s.namespace.name, k8s.node.name, etc. are some of the common labels that can be used to identify the source of the observability signals. Those are also common across the different types of telemetry data (traces, metrics, logs), serving correlation and analysis use cases.

- mTLS Proxy rbac

  This example defines a Role allowing requests to the prometheus backend to pass the kube-rbac-proxy.

      rules:
        - apiGroups: ["authorization.kubernetes.io"]
          resources:
            - observabilityapps/prometheus
          verbs: ["get", "create"] # GET, POST

  In this example, we allow GET and POST requests to reach the prometheus upstream service, if the request is authenticated with a valid mTLS certificate and the identified user is allowed to access the Prometheus service by the corresponding RoleBinding. PATCH and DELETE requests are not allowed. The mapping between the HTTP request methods and the Kubernetes RBAC verbs can be seen in kube-rbac-proxy/proxy.go.

      subjects:
        - apiGroup: rbac.authorization.k8s.io
          kind: User
          name: client

- mTLS Proxy resource-attributes

  kube-rbac-proxy creates a Kubernetes SubjectAccessReview to determine if the request is allowed to pass. The SubjectAccessReview is created with the resourceAttributes set to the upstream service, in this case the Prometheus service.
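To make the authorization check more concrete, here is a sketch of the kind of SubjectAccessReview that could result from a POST request by the client user, assuming the proxy's resource attributes mirror the Role rule shown above (API group authorization.kubernetes.io, resource observabilityapps, subresource prometheus). The namespace is a hypothetical placeholder, and the actual attributes depend on the proxy's resource-attributes configuration.

```yaml
apiVersion: authorization.k8s.io/v1
kind: SubjectAccessReview
spec:
  # Identity taken from the CommonName of the client certificate.
  user: client
  resourceAttributes:
    group: authorization.kubernetes.io
    resource: observabilityapps
    subresource: prometheus
    verb: create                 # POST maps to the "create" verb
    namespace: observability     # hypothetical namespace
```

If the API server answers that the review is allowed, the proxy forwards the request to the upstream service; otherwise the request is rejected.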

Setting Up victoria-logs (Logs Backend)

In our example, we will use victoria-logs as the logs backend. victoria-logs is a high-performance, cost-effective, and scalable log management solution. It is designed to work seamlessly with Kubernetes and provides powerful querying capabilities. It is important to note that any OTLP compatible backend can be used as a logs backend, allowing flexibility in choosing the best tool for the concrete needs.

Here are the complete manifests for deploying a single victoria-logs instance with the OTLP ingestion endpoint enabled and a kube-rbac-proxy sidecar for mTLS authentication and authorization, using the upstream Helm chart:

- Victoria-Logs Certificate

  That is the serving certificate of the kube-rbac-proxy sidecar. The OpenTelemetry collector needs to trust the signing CA, hence we use the same Issuer we created earlier.

- Victoria-Logs chart values

  The certificate secret shall be mounted in the VictoriaLogs pod as a volume, as it is referenced by the kube-rbac-proxy sidecar.

- Victoria-Logs mTLS Proxy rbac

  There is no fundamental difference compared to how we configured the Prometheus mTLS proxy. The Role allows requests to the VictoriaLogs backend to pass the kube-rbac-proxy.

- Victoria-Logs mTLS Proxy resource-attributes

By now we should have working Prometheus and victoria-logs backends, both secured with mTLS and ready to accept telemetry data from the OpenTelemetry collector.

Setting Up the OpenTelemetry Collectors

We are going to deploy two OpenTelemetry collectors: k8s-events and shoot-metrics. Both collectors will emit their own telemetry data in addition to the data collected from the respective receivers.

k8s-events collector

In this example, we use 2 receivers:

- k8s_events receiver to collect Kubernetes events from the cluster.

- k8s_cluster receiver to collect Kubernetes cluster metrics.

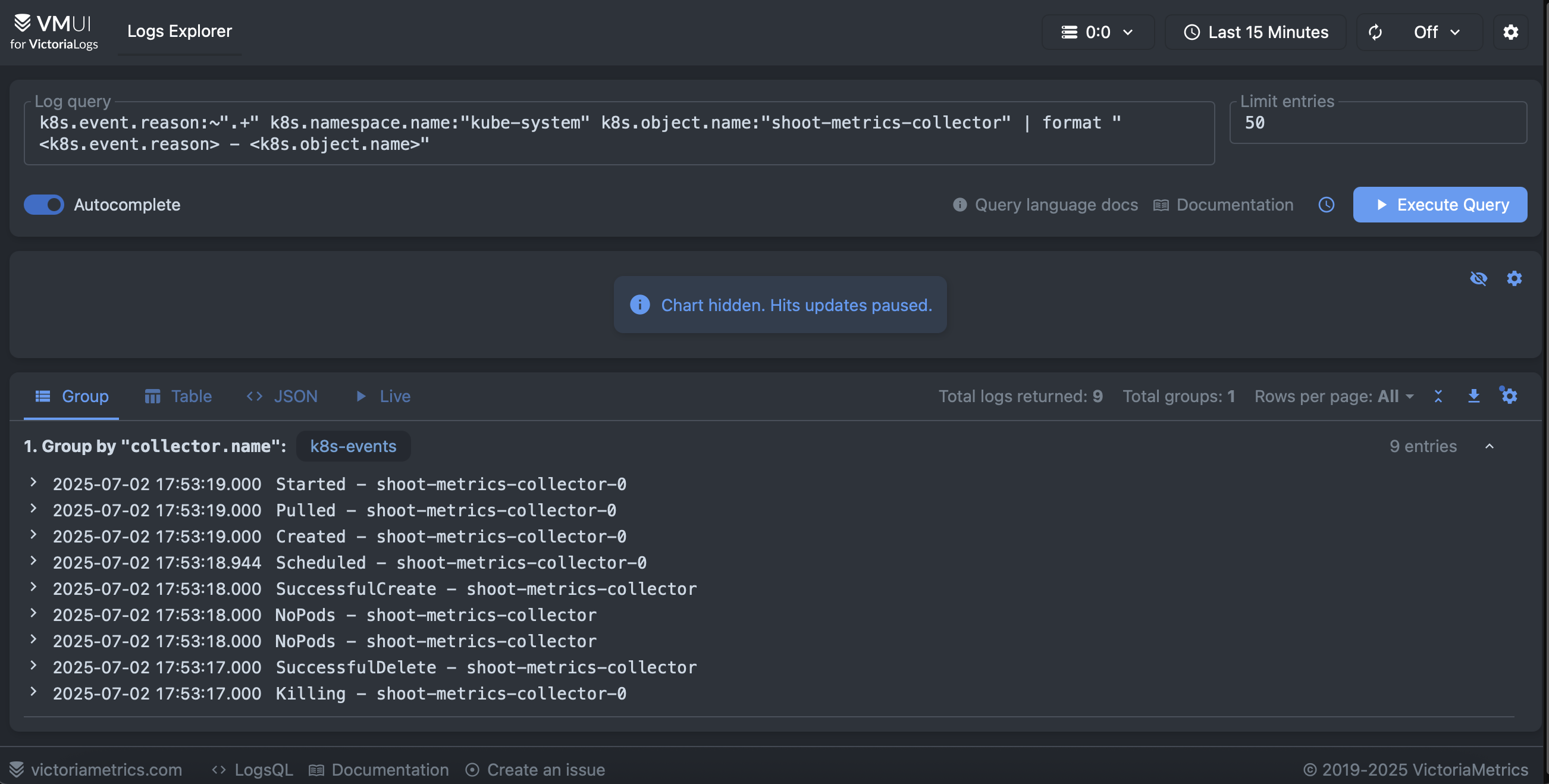

Here is an example of Kubernetes events persisted in the victoria-logs backend. The query filters logs that represent events from the kube-system namespace related to a rollout restart of the target statefulset, and formats the output to show the event reason and object name.

The collector features a few important configurations related to reliability and performance. The collected metric points are sent in batches to the Prometheus backend using the corresponding OTLP exporter, and the memory consumption of the collector is limited. In general, it is good practice to include a memory limiter and batch processing in the collector pipeline.

    processors:
      memory_limiter:
        check_interval: 1s
        limit_percentage: 80
        spike_limit_percentage: 2
      batch:
        timeout: 5s
        send_batch_size: 1000
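To show how these processors might be wired into a pipeline, here is a minimal sketch. The exporter name otlphttp/prometheus is a hypothetical label, and the certificate paths and the PROMETHEUS_URL variable are assumed to match the ones used for the collector's own telemetry in this guide. The memory limiter is placed first so that it can refuse data before any further processing happens.

```yaml
exporters:
  otlphttp/prometheus:             # hypothetical exporter name
    # The otlphttp exporter appends /v1/metrics to this base endpoint.
    endpoint: "${env:PROMETHEUS_URL}/api/v1/otlp"
    tls:
      ca_file: /etc/cert/ca.crt    # CA used to verify the backend certificate
      cert_file: /etc/cert/tls.crt # client certificate for mTLS
      key_file: /etc/cert/tls.key

service:
  pipelines:
    metrics:
      receivers: [k8s_cluster]
      # memory_limiter first, batch afterwards
      processors: [memory_limiter, batch]
      exporters: [otlphttp/prometheus]
```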

Allowing the collector to emit its own telemetry data is configured in the service section of the collector configuration.

    service:
      # Configure the collector own telemetry
      telemetry:
        # Emit collector logs to stdout, you can also push them to a backend.
        logs:
          level: info
          encoding: console
          output_paths: [stdout]
          error_output_paths: [stderr]
        # Push collector internal metrics to Prometheus
        metrics:
          level: detailed
          readers:
            - # push metrics to Prometheus backend
              periodic:
                interval: 30000
                timeout: 10000
                exporter:
                  otlp:
                    protocol: http/protobuf
                    endpoint: "${env:PROMETHEUS_URL}/api/v1/otlp/v1/metrics"
                    insecure: false # Ensure server certificate is validated against the CA
                    certificate: /etc/cert/ca.crt
                    client_certificate: /etc/cert/tls.crt
                    client_key: /etc/cert/tls.key

The majority of samples use a prometheus receiver to scrape the collector's metrics endpoint. However, that is not a clean solution because it routes the metrics through the pipeline, consuming resources and potentially causing performance issues. Instead, we use the periodic reader to push the metrics directly to the Prometheus backend.

Since the k8s-events collector obtains telemetry data from the kube-apiserver, it requires a corresponding set of permissions, defined in the k8s-events rbac manifests.

shoot-metrics collector

In this example, we have a single receiver:

- prometheus receiver scraping metrics from Gardener managed exporters present in the shoot cluster, including the kubelet system service metrics. This receiver accepts standard Prometheus scrape configurations using kubernetes_sd_configs to discover the targets dynamically. The kubernetes_sd_configs allows the receiver to discover Kubernetes resources such as pods, nodes, and services, and scrape their metrics endpoints.

Here, the example illustrates the prometheus receiver scraping metrics from the kubelet service, adding node kubernetes labels as labels to the scraped metrics and filtering the metrics to keep only the relevant ones. Since the kubelet metrics endpoint is secured, it needs the corresponding bearer token to be provided in the scrape configuration. The bearer token is automatically mounted in the pod by Kubernetes, allowing the OpenTelemetry collector to authenticate with the kubelet service.

    - job_name: shoot-kube-kubelet
      honor_labels: false
      scheme: https
      tls_config:
        insecure_skip_verify: true
      metrics_path: /metrics
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
        - role: node
      relabel_configs:
        - source_labels:
            - job
          target_label: __tmp_prometheus_job_name
        - target_label: job
          replacement: kube-kubelet
          action: replace
        - target_label: type
          replacement: shoot
          action: replace
        - source_labels:
            - __meta_kubernetes_node_address_InternalIP
          target_label: instance
          action: replace
        - regex: __meta_kubernetes_node_label_(.+)
          action: labelmap
          replacement: "k8s_node_label_$${1}"
      metric_relabel_configs:
        - source_labels:
            - __name__
          regex: ^(kubelet_running_pods|process_max_fds|process_open_fds|kubelet_volume_stats_available_bytes|kubelet_volume_stats_capacity_bytes|kubelet_volume_stats_used_bytes|kubelet_image_pull_duration_seconds_bucket|kubelet_image_pull_duration_seconds_sum|kubelet_image_pull_duration_seconds_count)$
          action: keep
        - source_labels:
            - namespace
          regex: (^$|^kube-system$)
          action: keep

The collector also illustrates collecting metrics from cadvisor endpoints and Gardener-specific exporters such as shoot-apiserver-proxy, shoot-coredns, etc. The exporters usually reside in the kube-system namespace and are configured to expose metrics on a specific port.

Since we aim at a unified set of resource attributes across all telemetry data, we can translate exporter metrics that do not conform to the OpenTelemetry conventions.

Here is an example of translating the metrics, produced by the kubelet, to the OpenTelemetry conventions using the transform/metrics processor:

    # Convert Prometheus metrics names to OpenTelemetry metrics names
    transform/metrics:
      error_mode: ignore
      metric_statements:
        - context: datapoint
          statements:
            - set(attributes["k8s.container.name"], attributes["container"]) where attributes["container"] != nil
            - delete_key(attributes, "container") where attributes["container"] != nil
            - set(attributes["k8s.pod.name"], attributes["pod"]) where attributes["pod"] != nil
            - delete_key(attributes, "pod") where attributes["pod"] != nil
            - set(attributes["k8s.namespace.name"], attributes["namespace"]) where attributes["namespace"] != nil
            - delete_key(attributes, "namespace") where attributes["namespace"] != nil

Here is a visualization of container_network_transmit_bytes_total metric collected from the cadvisor endpoint of the kubelet service, showing the network traffic in bytes transmitted by the vpn-shoot containers.

Similarly to the k8s-events collector, the shoot-metrics collector also emits its own telemetry data, including metrics and logs. The collector is configured to push its own metrics to the Prometheus backend using the periodic reader, avoiding the need for a separate Prometheus scrape configuration. It requires a corresponding set of permissions defined at shoot-metrics rbac manifest.

Summary

In this blog post, we have explored how to set up an OpenTelemetry-based observability stack on a Gardener Shoot Cluster. We have demonstrated how to deploy the OpenTelemetry Operator, configure the backends (prometheus and victoria-logs), and deploy OpenTelemetry collectors to obtain telemetry data from the cluster. We have also discussed best practices for configuration, including security and performance considerations. We have shown the unified set of resource attributes that can be used to identify the source of the telemetry data, allowing correlation and analysis across different teams and systems. We have demonstrated how to transform metric labels that do not conform to the OpenTelemetry conventions, achieving a unified set of labels across all telemetry data. Finally, we have illustrated how to securely connect the OpenTelemetry collectors to the backends using mTLS and kube-rbac-proxy for authentication and authorization.

We hope this guide will inspire you to get started with OpenTelemetry on a Gardener managed shoot cluster and equip you with ideas and best practices for building a powerful observability stack that meets your needs. For more information, please refer to the OpenTelemetry documentation and the Gardener documentation.

Manifests List

Enabling Seamless IPv4 to Dual-Stack Migration for Kubernetes Clusters on GCP

Gardener continues to enhance its networking capabilities, now offering a streamlined migration path for existing IPv4-only shoot clusters on Google Cloud Platform (GCP) to dual-stack (IPv4 and IPv6). This allows clusters to leverage the benefits of IPv6 networking while maintaining IPv4 compatibility.

The Shift to Dual-Stack: What Changes?

Transitioning a Gardener-managed Kubernetes cluster on GCP from a single-stack IPv4 to a dual-stack setup involves several key modifications to the underlying infrastructure and networking configuration.

Triggering the Migration

The migration process is initiated by updating the shoot specification. Users simply need to add IPv6 to the spec.networking.ipFamilies field, changing it from [IPv4] to [IPv4, IPv6].
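As a minimal sketch, the relevant part of the shoot specification could look as follows after the change. The shoot name and namespace are hypothetical placeholders, and all other fields of the Shoot are omitted.

```yaml
apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
metadata:
  name: my-shoot            # hypothetical name
  namespace: my-project     # hypothetical project namespace
spec:
  networking:
    ipFamilies:
      - IPv4
      - IPv6                # added to trigger the dual-stack migration
```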

Infrastructure Adaptations

Once triggered, Gardener orchestrates the necessary infrastructure changes on GCP:

- IPv6-Enabled Subnets: The existing subnets (node subnet and internal subnet) within the Virtual Private Cloud (VPC) get external IPv6 ranges assigned.

- New IPv6 Service Subnet: A new subnet is provisioned specifically for services, also equipped with an external IPv6 range.

- Secondary IPv4 Range for Node Subnet: The node subnet is allocated an additional (secondary) IPv4 range. This is crucial because dual-stack load balancing on GCP is managed via ingress-gce, which utilizes alias IP ranges.

Enhanced Pod Routing on GCP

A significant change occurs in how pod traffic is routed. In the IPv4-only setup with native routing, the Cloud Controller Manager (CCM) creates individual routes in the VPC route table for each node’s pod CIDR. During the migration to dual-stack:

- These existing pod-specific cloud routes are systematically deleted from the VPC routing table.

- To maintain connectivity, the corresponding alias IP ranges are directly added to the Network Interface Cards (NICs) of the Kubernetes worker nodes (VM instances).

The Migration Journey

The migration is a multi-phase process, tracked by a DualStackNodesMigrationReady constraint in the shoot’s status, which gets removed after a successful migration.

- Phase 1: Infrastructure Preparation

  Immediately after the ipFamilies field is updated, an infrastructure reconciliation begins. This phase includes the subnet modifications mentioned above. A critical step here is the transition from VPC routes to alias IP ranges for existing nodes. The system carefully manages the deletion of old routes and the creation of new alias IP ranges on the virtual machines to ensure a smooth transition. Information about the routes to be migrated is temporarily persisted in the infrastructure state during this step.

- Phase 2: Node Upgrades

  For nodes to become dual-stack aware (i.e., receive IPv6 addresses for themselves and their pods), they need to be rolled out. This can happen during the next scheduled Kubernetes version or Garden Linux update, or it can be expedited by manually deleting the nodes, allowing Gardener to recreate them with a new dual-stack configuration. Once all nodes have been updated and possess IPv4 and IPv6 pod CIDRs, the DualStackNodesMigrationReady constraint will change to True.

- Phase 3: Finalizing Dual-Stack Activation

  With the infrastructure and nodes prepared, the final step involves configuring the remaining control plane components, like kube-apiserver, and the Container Network Interface (CNI) plugin, like Calico or Cilium, for dual-stack operation. After these components are fully dual-stack enabled, the migration constraint is removed, and the cluster operates in full dual-stack mode. Existing pods keep their IPv4 addresses; new pods receive both IPv4 and IPv6 addresses.

Important Considerations for GCP Users

Before initiating the migration, please note the following:

- Native Routing Prerequisite: The IPv4-only cluster must be operating in native routing mode. This means the pod overlay network needs to be disabled.

- GCP Route Quotas: When using native routing, especially for larger clusters, be mindful of GCP’s default quota for static routes per VPC (often 200, referred to as STATIC_ROUTES_PER_NETWORK). It might be necessary to request a quota increase via the GCP cloud console before enabling native routing or migrating to dual-stack to avoid hitting limits.

This enhancement provides a clear path for Gardener users on GCP to adopt IPv6, paving the way for future-ready network architectures.

For further details, you can refer to the official pull request and the relevant segment of the developer talk. Additional documentation can also be found within the Gardener documentation.

Enhancing Meltdown Protection with Dependency-Watchdog Annotations

Gardener’s dependency-watchdog is a crucial component for ensuring cluster stability. During infrastructure-level outages where worker nodes cannot communicate with the control plane, it activates a “meltdown protection” mechanism. This involves scaling down key control plane components like the machine-controller-manager (MCM), cluster-autoscaler (CA), and kube-controller-manager (KCM) to prevent them from taking incorrect actions based on stale information, such as deleting healthy nodes that are only temporarily unreachable.

The Challenge: Premature Scale-Up During Reconciliation

Previously, a potential race condition could undermine this protection. While dependency-watchdog scaled down the necessary components, a concurrent Shoot reconciliation, whether triggered manually by an operator or by other events, could misinterpret the situation. The reconciliation logic, unaware that the scale-down was a deliberate protective measure, would attempt to restore the “desired” state by scaling the machine-controller-manager and cluster-autoscaler back up.

This premature scale-up could have serious consequences. An active machine-controller-manager, for instance, might see nodes in an Unknown state due to the ongoing outage and incorrectly decide to delete them, defeating the entire purpose of the meltdown protection.

The Solution: A New Annotation for Clearer Signaling

To address this, Gardener now uses a more explicit signaling mechanism between dependency-watchdog and gardenlet. When dependency-watchdog scales down a deployment as part of its meltdown protection, it now adds the following annotation to the resource:

dependency-watchdog.gardener.cloud/meltdown-protection-active

This annotation serves as a clear, persistent signal that the component has been intentionally scaled down for safety.

How It Works

The gardenlet component has been updated to recognize and respect this new annotation. During a Shoot reconciliation, before scaling any deployment, gardenlet now checks for the presence of the dependency-watchdog.gardener.cloud/meltdown-protection-active annotation.

If the annotation is found, gardenlet will not scale up the deployment. Instead, it preserves the current replica count set by dependency-watchdog, ensuring that the meltdown protection remains effective until the underlying infrastructure issue is resolved and dependency-watchdog itself restores the components. This change makes the meltdown protection mechanism more robust and prevents unintended node deletions during any degradation of connectivity between the nodes and control plane.
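For illustration, a deployment scaled down by dependency-watchdog could look roughly like the fragment below. This is only a sketch: the namespace is a hypothetical placeholder, the annotation value is an assumption (the text above only states that the presence of the annotation is checked), and the remaining Deployment fields are omitted.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: machine-controller-manager
  namespace: shoot--my-project--my-shoot   # hypothetical control plane namespace
  annotations:
    # Presence of this key signals an intentional scale-down;
    # the empty value is a placeholder, not a documented requirement.
    dependency-watchdog.gardener.cloud/meltdown-protection-active: ""
spec:
  replicas: 0   # kept as-is by gardenlet while the annotation is present
```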

Additionally, if an operator annotates a deployment that carries the dependency-watchdog.gardener.cloud/meltdown-protection-active annotation with dependency-watchdog.gardener.cloud/ignore-scaling to exclude it from meltdown consideration, dependency-watchdog removes the dependency-watchdog.gardener.cloud/meltdown-protection-active annotation, and the deployment is considered for scale-up during the next shoot reconciliation. The operator can also scale up such a deployment explicitly instead of waiting for the next shoot reconciliation.

For More Information

To dive deeper into the implementation details, you can review the changes in the corresponding pull request.

Improving Credential Management for Seed Backups

Gardener has introduced a new feature gate, DoNotCopyBackupCredentials, to enhance the security and clarity of how backup credentials for managed seeds are handled. This change moves away from an implicit credential-copying mechanism to a more explicit and secure configuration practice.

The Old Behavior and Its Drawbacks

Previously, when setting up a managed seed, the controller would automatically copy the shoot’s infrastructure credentials to serve as the seed’s backup credentials if a backup secret was not explicitly provided. While this offered some convenience, it had several disadvantages:

- Promoted Poor Security Practices: It encouraged the use of the same credentials for both shoot infrastructure and seed backups, violating the principle of least privilege and credential segregation.

- Caused Confusion: The implicit copying of secrets could be confusing for operators, as the source of the backup credential was not immediately obvious from the configuration.

- Inconsistent with Modern Credentials: The mechanism worked for Secret-based credentials but was not compatible with WorkloadIdentity, which cannot be simply copied.

The New Approach: Explicit Credential Management

The new DoNotCopyBackupCredentials feature gate, when enabled in gardenlet, disables this automatic copying behavior. With the gate active, operators are now required to explicitly create and reference a secret for the seed backup.

If seed.spec.backup.credentialsRef points to a secret that does not exist, the reconciliation process will fail with an error, ensuring that operators consciously provide a dedicated credential for backups. This change promotes the best practice of using separate, segregated credentials for infrastructure and backups, significantly improving the security posture of the landscape.
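As a sketch of what an explicit reference could look like, the fragment below shows a Seed whose backup configuration points to a dedicated secret. The seed name, provider, region, secret name, and namespace are hypothetical values chosen for illustration; the secret itself has to be created beforehand.

```yaml
apiVersion: core.gardener.cloud/v1beta1
kind: Seed
metadata:
  name: my-seed                  # hypothetical name
spec:
  backup:
    provider: gcp                # hypothetical provider
    region: europe-west1         # hypothetical region
    credentialsRef:
      apiVersion: v1
      kind: Secret
      name: my-seed-backup       # dedicated backup credential (hypothetical)
      namespace: garden          # hypothetical namespace
```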

For Operators: What You Need to Do

When you enable the DoNotCopyBackupCredentials feature gate, you must ensure that any Seed you configure has a pre-existing secret for its backup.

For setups where credentials were previously copied, Gardener helps with the transition. The controller will stop managing the lifecycle of these copied secrets. To help operators identify them for cleanup, these secrets will be labeled with secret.backup.gardener.cloud/status=previously-managed. You can then review these secrets and manage them accordingly.

This enhancement is a step towards more robust, secure, and transparent operations in Gardener, giving operators clearer control over credential management.

Further Reading

Enhanced Extension Management: Introducing `autoEnable` and `clusterCompatibility`

Gardener’s extension mechanism has been enhanced with two new fields in the ControllerRegistration and operatorv1alpha1.Extension APIs, offering operators more granular control and improved safety when managing extensions. These changes, detailed in PR #11982, introduce autoEnable and clusterCompatibility for resources of kind: Extension.

Fine-Grained Automatic Enablement with autoEnable

Previously, operators could use the globallyEnabled field to automatically enable an extension resource for all shoot clusters. This field is now deprecated and will be removed in Gardener v1.123.

The new autoEnable field replaces globallyEnabled and provides more flexibility. Operators can now specify an array of cluster types for which an extension should be automatically enabled. The supported cluster types are:

- shoot

- seed

- garden

This allows, for example, an extension to be automatically enabled for all seed clusters or a combination of cluster types, which was not possible with the boolean globallyEnabled field.

If autoEnable includes shoot, it behaves like the old globallyEnabled: true for shoot clusters. If an extension is not set to autoEnable for a specific cluster type, it must be explicitly enabled in the respective cluster’s manifest (e.g., Shoot manifest for a shoot cluster).

    # Example in ControllerRegistration or operatorv1alpha1.Extension spec.resources
    - kind: Extension
      type: my-custom-extension
      autoEnable:
        - shoot
        - seed
      # globallyEnabled: true # This field is deprecated

Ensuring Correct Deployments with clusterCompatibility

To enhance safety and prevent misconfigurations, the clusterCompatibility field has been introduced. This field allows extension developers and operators to explicitly define which cluster types a generic Gardener extension is compatible with. The supported cluster types are:

- shoot

- seed

- garden

Gardener will validate that an extension is only enabled or automatically enabled for cluster types listed in its clusterCompatibility definition. If clusterCompatibility is not specified for an Extension kind, it defaults to ['shoot']. This provides an important safeguard, ensuring that extensions are not inadvertently deployed to environments they are not designed for.

    # Example in ControllerRegistration or operatorv1alpha1.Extension spec.resources
    - kind: Extension
      type: my-custom-extension
      autoEnable:
        - shoot
      clusterCompatibility: # Defines where this extension can be used
        - shoot
        - seed

Important Considerations for Operators

- Deprecation of globallyEnabled: Operators should migrate from globallyEnabled to the new autoEnable field for kind: Extension resources. globallyEnabled is deprecated and scheduled for removal in Gardener v1.123.

- Breaking Change for Garden Extensions: The introduction of clusterCompatibility is a breaking change for operators managing garden extensions via gardener-operator. If your Garden custom resource specifies spec.extensions, you must update the corresponding operatorv1alpha1.Extension resources to include garden in the clusterCompatibility array for those extensions intended to run in the garden cluster.
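For operators affected by the breaking change, an updated operatorv1alpha1.Extension might look roughly like the sketch below. The extension name and type are hypothetical, and other fields of the resource (such as the deployment configuration) are omitted.

```yaml
apiVersion: operator.gardener.cloud/v1alpha1
kind: Extension
metadata:
  name: my-custom-extension      # hypothetical name
spec:
  resources:
    - kind: Extension
      type: my-custom-extension  # hypothetical type
      clusterCompatibility:
        - garden                 # required if the Garden resource enables this extension
        - shoot
```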

These new fields provide more precise control over extension lifecycle management across different cluster types within the Gardener ecosystem, improving both operational flexibility and system stability.

For further details, you can review the original pull request (#11982) and watch the demonstration in the Gardener Review Meeting (starting at 41:23).

Enhanced Internal Traffic Management: L7 Load Balancing for kube-apiservers in Gardener

Gardener continuously evolves to optimize performance and reliability. A recent improvement focuses on how internal control plane components communicate with kube-apiserver instances, introducing cluster-internal Layer 7 (L7) load balancing to ensure better resource distribution and system stability.

The Challenge: Unbalanced Internal Load on kube-apiservers

Previously, while external access to Gardener-managed kube-apiservers (for Shoots and the Virtual Garden) benefited from L7 load balancing via Istio, internal traffic took a more direct route. Components running within the seed cluster, such as gardener-resource-manager and gardener-controller-manager, would access the kube-apiserver’s internal Kubernetes service directly. This direct access bypassed the L7 load balancing capabilities of the Istio ingress gateway.

This could lead to situations where certain kube-apiserver instances might become overloaded, especially if a particular internal client generated a high volume of requests, potentially impacting the stability and performance of the control plane.
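
To make the imbalance concrete, here is a small simulation. It assumes that the uneven load comes from long-lived client connections being pinned to a single instance, which is a common cause of this pattern but is an assumption here, not something this post states. The backend names and request counts are invented.

```python
from collections import Counter
from itertools import cycle


def connection_level(requests_per_client, backends):
    """Choose a backend once per client connection; every request follows it."""
    picker = cycle(backends)
    load = Counter({backend: 0 for backend in backends})
    for requests in requests_per_client:
        load[next(picker)] += requests
    return load


def request_level(requests_per_client, backends):
    """Choose a backend for every single request (round robin)."""
    picker = cycle(backends)
    load = Counter({backend: 0 for backend in backends})
    for requests in requests_per_client:
        for _ in range(requests):
            load[next(picker)] += 1
    return load


backends = ["kube-apiserver-0", "kube-apiserver-1", "kube-apiserver-2"]
clients = [9000, 100, 100]  # one very chatty internal client, two quiet ones

print(connection_level(clients, backends))
print(request_level(clients, backends))
```

With per-connection balancing the chatty client's 9000 requests all land on one instance, while per-request balancing spreads the same total almost evenly.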

The Solution: Extending L7 Load Balancing Internally

To address this, Gardener now implements cluster-internal L7 load balancing for traffic destined for kube-apiservers from within the control plane. This enhancement ensures that requests from internal components are distributed efficiently across available kube-apiserver replicas, mirroring the sophisticated load balancing already in place for external traffic, but crucially, without routing this internal traffic externally.

Key aspects of this solution include:

- Leveraging Existing Istio Ingress Gateway: The system utilizes the existing Istio ingress gateway, which already handles L7 load balancing for external traffic.

- Dedicated Internal Service: A new, dedicated internal ClusterIP service is created for the Istio ingress gateway pods. This service provides an internal entry point for the load balancing.

- Smart Kubeconfig Adjustments: The kubeconfig files used by internal components (specifically, the generic token kubeconfigs) are configured to point to the kube-apiserver’s public, resolvable DNS address.

- Automated Configuration Injection: A new admission webhook, integrated into gardener-resource-manager and named pod-kube-apiserver-load-balancing, plays a crucial role. When control plane pods are created, this webhook automatically injects:

  - Host Aliases: It adds a host alias to the pod’s /etc/hosts file. This alias maps the kube-apiserver’s public DNS name to the IP address of the new internal ClusterIP service for the Istio ingress gateway.

  - Network Policy Labels: Necessary labels are added to ensure network policies permit this traffic flow.

With this setup, when an internal component attempts to connect to the kube-apiserver using its public DNS name, the host alias redirects the traffic to the internal Istio ingress gateway service. The ingress gateway then performs L7 load balancing, distributing the requests across the available kube-apiserver instances.
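
The mutation performed by the webhook can be pictured with the following sketch, which patches a pod manifest held as a plain dictionary. `hostAliases` is the standard Kubernetes pod field that populates /etc/hosts; everything else (the function name, the label key, the DNS name, and the IP) is hypothetical and only serves the illustration.

```python
import copy

# Hypothetical label key; the real labels added by the webhook are not listed in this post.
HYPOTHETICAL_POLICY_LABEL = "networking.example/to-istio-ingressgateway"


def inject_load_balancing(pod: dict, api_dns_name: str, gateway_cluster_ip: str) -> dict:
    """Return a copy of the pod with a host alias and a network policy label added."""
    patched = copy.deepcopy(pod)
    spec = patched.setdefault("spec", {})
    # Map the kube-apiserver's public DNS name to the internal ClusterIP service.
    spec.setdefault("hostAliases", []).append(
        {"ip": gateway_cluster_ip, "hostnames": [api_dns_name]}
    )
    labels = patched.setdefault("metadata", {}).setdefault("labels", {})
    labels[HYPOTHETICAL_POLICY_LABEL] = "allowed"
    return patched


pod = {"metadata": {"name": "some-control-plane-pod"}, "spec": {"containers": []}}
patched = inject_load_balancing(pod, "api.my-shoot.example.com", "10.0.0.42")
print(patched["spec"]["hostAliases"])
```

Because the alias lives in the pod's own /etc/hosts, the kubeconfig can keep the public DNS name while the connection still terminates at the cluster-internal service.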

Benefits

This approach offers several advantages:

- Improved Resource Distribution: Load from internal components is now evenly spread across kube-apiserver instances, preventing hotspots.

- Enhanced Reliability: By avoiding overloading individual kube-apiserver pods, the overall stability and reliability of the control plane are improved.

- Internalized Traffic: Despite using the kube-apiserver’s public DNS name in configurations, traffic remains within the cluster, avoiding potential costs or latency associated with external traffic routing.

This enhancement represents a significant step in refining Gardener’s internal traffic management, contributing to more robust and efficiently managed Kubernetes clusters.

Further Information

To dive deeper into the technical details, you can explore the following resources:

- Issue: gardener/gardener#8810

- Pull Request: gardener/gardener#12260

- Project Summary: Cluster-Internal L7 Load-Balancing Endpoints For kube-apiservers

- Recording Segment: Watch the introduction of this feature

Gardener Enhances Observability with OpenTelemetry Integration for Logging

Gardener is advancing its observability capabilities by integrating OpenTelemetry, starting with log collection and processing. This strategic move, outlined in GEP-34: OpenTelemetry Operator And Collectors, lays the groundwork for a more standardized, flexible, and powerful observability framework in line with Gardener’s Observability 2.0 vision.

The Drive Towards Standardization

Gardener’s previous observability stack, though effective, utilized vendor-specific formats and protocols. This presented challenges in extending components and integrating with diverse external systems. The adoption of OpenTelemetry addresses these limitations by aligning Gardener with open standards, enhancing interoperability, and paving the way for future innovations like unified visualization, comprehensive tracing support, and even LLM integrations via MCP (Model Context Protocol) enabled services.

Core Components: Operator and Collectors

The initial phase of this integration introduces two key OpenTelemetry components into Gardener-managed clusters:

- OpenTelemetry Operator: Deployed on seed clusters (specifically in the garden namespace using ManagedResources), the OpenTelemetry Operator for Kubernetes will manage the lifecycle of OpenTelemetry Collector instances across shoot control planes. Its deployment follows a similar pattern to the existing Prometheus and Fluent Bit operators and occurs during the Seed reconciliation flow.

- OpenTelemetry Collectors: A dedicated OpenTelemetry Collector instance will be provisioned for each shoot control plane namespace (e.g., shoot--project--name). These collectors, managed as Deployments by the OpenTelemetry Operator via an OpenTelemetryCollector Custom Resource created during Shoot reconciliation, are responsible for receiving, processing, and exporting observability data, with an initial focus on logs.

Key Changes and Benefits for Logging

- Standardized Log Transport: Logs from various sources will now be channeled through the OpenTelemetry Collector.

  - Shoot Node Log Collection: The existing valitail systemd service on shoot nodes is being replaced by an OpenTelemetry Collector. This new collector will gather systemd logs (e.g., from kernel, kubelet.service, containerd.service) with parity to valitail’s previous functionality and forward them to the OpenTelemetry Collector instance residing in the shoot control plane.

  - Fluent Bit Integration: Existing Fluent Bit instances, which act as log shippers on seed clusters, will be configured to forward logs to the OpenTelemetry Collector’s receivers. This ensures continued compatibility with the Vali-based setup previously established by GEP-19.

- Backend Agility: While initially the OpenTelemetry Collector will be configured to use its Loki exporter to send logs to the existing Vali backend, this architecture introduces significant flexibility. It allows Gardener to switch to any OpenTelemetry-compatible backend in the future, with plans to eventually migrate to Victoria-Logs.

- Phased Rollout: The transition to OpenTelemetry is designed as a phased approach. Existing observability tools like Vali, Fluent Bit, and Prometheus will be gradually integrated and some backends such as Vali will be replaced.

- Foundation for Future Observability: Although this GEP primarily targets logging, it critically establishes the foundation for incorporating other observability signals, such as metrics and traces, into the OpenTelemetry framework. Future enhancements may include:

- Utilizing the OpenTelemetry Collector on shoot nodes to also scrape and process metrics.

- Replacing the current event logger component with the OpenTelemetry Collector’s k8s-event receiver within the shoot’s OpenTelemetry Collector instance.

Explore Further

This integration marks a significant step in Gardener’s observability journey, promising a more robust and adaptable system.

- Dive deeper into the technical details by reading the full proposal: GEP-34: OpenTelemetry Operator And Collectors.

- Watch the segment from the Gardener Review Meeting discussing this feature: Recording (starts at 14:09).

- Learn more about the overall strategy in the Observability 2.0 vision for Gardener.

Taking Gardener to the Next Level: Highlights from the 7th Gardener Community Hackathon in Schelklingen

The latest “Hack The Garden” event, held in June 2025 at Schlosshof in Schelklingen, brought together members of the Gardener community for an intensive week of collaboration, coding, and problem-solving. The hackathon focused on a wide array of topics aimed at enhancing Gardener’s capabilities, modernizing its stack, and improving user experience. You can find a full summary of all topics on GitHub and watch the wrap-up presentations on YouTube.

Here’s a look at some of the key achievements and ongoing efforts:

🚀 Modernizing Core Infrastructure and Networking

A significant focus was on upgrading and refining Gardener’s foundational components.

One major undertaking was the replacement of OpenVPN with Wireguard (watch presentation). The goal is to modernize the VPN stack for communication between control and data planes, leveraging Wireguard’s reputed performance and simplicity. OpenVPN, while established, presents challenges like TCP-in-TCP. The team developed a Proof of Concept (POC) for a Wireguard-based VPN connection for a single shoot in a local setup, utilizing a reverse proxy like mwgp to manage connections without needing a load balancer per control plane. A document describing the approach is available. Next steps involve thorough testing of resilience and throughput, aggregating secrets for MWGP configuration, and exploring ways to update MWGP configuration without restarts. Code contributions can be found in forks of gardener, vpn2, and mwgp.

Another critical area is overcoming the 450 Node limit on Azure (watch presentation). Current Azure networking for Gardener relies on route tables, which have size limitations. The team analyzed the hurdles and discussed a potential solution involving a combination of route tables and virtual networks. Progress here depends on an upcoming Azure preview feature.

The hackathon also saw progress on cluster-internal L7 Load-Balancing for kube-apiservers. Building on previous work for external endpoints, this initiative aims to provide L7 load-balancing for internal traffic from components like gardener-resource-manager. Achievements include an implementation leveraging generic token kubeconfig and a dedicated ClusterIP service for Istio ingress gateway pods. The PR #12260 is awaiting review to merge this improvement, addressing issue #8810.

🔭 Enhancing Observability and Operations

Improving how users monitor and manage Gardener clusters was another key theme.

A significant step towards Gardener’s Observability 2.0 initiative was made with the OpenTelemetry Transport for Shoot Metrics (watch presentation). The current method of collecting shoot metrics via the Kubernetes API server /proxy endpoint lacks fine-tuning capabilities. The hackathon proved the viability of collecting and filtering shoot metrics via OpenTelemetry collector instances on shoots, transporting them to Prometheus OTLP ingestion endpoints on seeds. This allows for more flexible and modern metrics collection.

For deeper network insights, the Cluster Network Observability project (watch presentation) enhanced the Retina tool by Microsoft. The team successfully added labeling for source and destination availability zones to Retina’s traffic metrics (see issue #1654 and PR #1657). This will help identify cross-AZ traffic, potentially reducing costs and latency.

To support lightweight deployments, efforts were made to make gardener-operator Single-Node Ready (watch presentation). This involved making several components, including Prometheus deployments, configurable to reduce resource overhead in single-node or bare-metal scenarios. Relevant PRs include those for gardener-extension-provider-gcp #1052, gardener-extension-provider-openstack #1042, fluent-operator #1616, and gardener #12248, along with fixes in forked Cortex and Vali repositories.

Streamlining node management was the focus of the Worker Group Node Roll-out project (watch presentation). A PoC was created (see rrhubenov/gardener branch) allowing users to trigger a node roll-out for specific worker groups via a shoot annotation (gardener.cloud/operation=rollout-workers=<pool-names>), which is particularly useful for scenarios like dual-stack migration.

Proactive workload management is the aim of the Instance Scheduled Events Watcher (watch presentation). This initiative seeks to create an agent that monitors cloud provider VM events (like reboots or retirements) and exposes them as node events or dashboard warnings, allowing users to take timely action. PR #9170 for cloud-provider-azure was raised to enable this for Azure.

🛡️ Bolstering Security and Resource Management

Security and efficient resource handling remain paramount.

The Signing of ManagedResource Secrets project (watch presentation) addressed a potential privilege escalation vector. A PoC demonstrated that signing ManagedResource secrets with a key known only to the Gardener Resource Manager (GRM) is feasible, allowing GRM to verify secret integrity. This work is captured in gardener PR #12247.
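
The general idea of signing with a key that only the verifier knows can be sketched with an HMAC. This is a generic illustration using Python's standard library; it assumes an HMAC-SHA256 over a canonical JSON encoding, which is not necessarily the scheme used in the PoC, and the function names are hypothetical.

```python
import hashlib
import hmac
import json


def sign_secret(data: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 over a canonical encoding of the secret data."""
    canonical = json.dumps(data, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def verify_secret(data: dict, signature: str, key: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign_secret(data, key), signature)


key = b"known-only-to-the-verifier"  # placeholder key for the example
secret_data = {"manifest.yaml": "kind: ConfigMap"}
signature = sign_secret(secret_data, key)

print(verify_secret(secret_data, signature, key))
tampered = {"manifest.yaml": "kind: ClusterRoleBinding"}
print(verify_secret(tampered, signature, key))
```

Anyone who can edit the secret but does not hold the key cannot produce a matching signature, so modified content is detected on verification.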

Simplifying operations was the goal of Migrating Control Plane Reconciliation of Provider Extensions to ManagedResources (watch presentation). Instead of using the chart applier, this change wraps control-plane components in ManagedResources, improving scalability and automation (e.g., scaling components imperatively). Gardener PR #12251 was created for this, with a stretch goal related to issue #12250 explored in a compare branch.

A quick win, marked as a 🏎️ fast-track item, was to Expose EgressCIDRs in the shoot-info ConfigMap (watch presentation). This makes egress CIDRs available to workloads within the shoot cluster, useful for controllers like Crossplane. This was implemented and merged during the hackathon via gardener PR #12252.

✨ Improving User and Developer Experience

Enhancing the usability of Gardener tools is always a priority.

The Dashboard Usability Improvements project (watch presentation) tackled several areas based on dashboard issue #2469. Achievements include:

- Allowing custom display names for projects via annotations (dashboard PR #2470).

- Configurable default values for Shoot creation, like AutoScaler min/max replicas (dashboard PR #2476).

- The ability to hide certain UI elements, such as Control Plane HA options (dashboard PR #2478).

The Documentation Revamp (watch presentation) aimed to improve the structure and discoverability of Gardener’s documentation. Metadata for pages was enhanced (documentation PR #652), the glossary was expanded (documentation PR #653), and a PoC for using VitePress as a more modern documentation generator was created.

🔄 Advancing Versioning and Deployment Strategies

Flexibility in managing Gardener versions and deployments was also explored.

The topic of Multiple Parallel Versions in a Gardener Landscape (formerly Canary Deployments) (watch presentation) investigated ways to overcome tight versioning constraints. It was discovered that the current implementation already allows rolling out different extension versions across different seeds using controller registration seed selectors. Further discussion is needed on some caveats, particularly around the primary field in ControllerRegistration resources.

Progress was also made on GEP-32 – Version Classification Lifecycles (🏎️ fast-track). This initiative, started in a previous hackathon, aims to automate version lifecycle management. The previous PR (metal-stack/gardener #9) was rebased and broken into smaller, more reviewable PRs.

🌱 Conclusion

The Hack The Garden event in Schelklingen was a testament to the community’s dedication and collaborative spirit. Numerous projects saw significant progress, from PoCs for major architectural changes to practical improvements in daily operations and user experience. Many of these efforts are now moving into further development, testing, and review, promising exciting enhancements for the Gardener ecosystem.

Stay tuned for more updates as these projects mature and become integrated into Gardener!

The next hackathon takes place in early December 2025. If you’d like to join, head over to the Gardener Slack. Happy to meet you there! ✌️

May

Fine-Tuning kube-proxy Readiness: Ensuring Accurate Health Checks During Node Scale-Down

Gardener has recently refined how it determines the readiness of kube-proxy components within managed Kubernetes clusters. This adjustment leads to more accurate system health reporting, especially during node scale-down operations orchestrated by cluster-autoscaler.

The Challenge: kube-proxy Readiness During Node Scale-Down

Previously, Gardener utilized kube-proxy’s /healthz endpoint for its readiness probe. While generally effective, this endpoint’s behavior changed in Kubernetes 1.28 (as part of KEP-3836 and implemented in kubernetes/kubernetes#116470). The /healthz endpoint now reports kube-proxy as unhealthy if its node is marked for deletion by cluster-autoscaler (e.g., via a specific taint) or has a deletion timestamp.

This behavior is intended to help external load balancers (particularly those using externalTrafficPolicy: Cluster on infrastructures like GCP) avoid sending new traffic to nodes that are about to be terminated. However, for Gardener’s internal system component health checks, this meant that kube-proxy could appear unready for extended periods if node deletion was delayed due to PodDisruptionBudgets or long terminationGracePeriodSeconds. This could lead to misleading “unhealthy” states for the cluster’s system components.

The Solution: Aligning with Upstream kube-proxy Enhancements

To address this, Gardener now leverages the /livez endpoint for kube-proxy’s readiness probe in clusters running Kubernetes version 1.28 and newer. The /livez endpoint, also introduced as part of the aforementioned kube-proxy improvements, checks the actual liveness of the kube-proxy process itself, without considering the node’s termination status.

For clusters running Kubernetes versions 1.27.x and older (where /livez is not available), Gardener will continue to use the /healthz endpoint for the readiness probe.

This change, detailed in gardener/gardener#12015, ensures that Gardener’s readiness check for kube-proxy accurately reflects kube-proxy’s operational status rather than the node’s lifecycle state. It’s important to note that this adjustment does not interfere with the goals of KEP-3836; cloud controller managers can still utilize the /healthz endpoint for their load balancer health checks as intended.
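
The version-dependent choice of endpoint boils down to a simple comparison. The helper below is a hypothetical sketch of that rule as described in this post, not Gardener's implementation.

```python
def readiness_probe_path(kubernetes_version: str) -> str:
    """Pick the kube-proxy readiness endpoint for a version such as '1.28.3'."""
    major, minor = (int(part) for part in kubernetes_version.lstrip("v").split(".")[:2])
    # /livez is available from Kubernetes 1.28 on; older versions keep /healthz.
    return "/livez" if (major, minor) >= (1, 28) else "/healthz"


for version in ("1.27.9", "1.28.0", "v1.31.2"):
    print(version, readiness_probe_path(version))
```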

Benefits for Gardener Operators

This enhancement brings two key benefits to Gardener operators:

- More Accurate System Health: The system components health check will no longer report kube-proxy as unhealthy simply because its node is being gracefully terminated by cluster-autoscaler. This reduces false alarms and provides a clearer view of the cluster’s actual health.

- Smoother Operations: Operations teams will experience fewer unnecessary alerts related to kube-proxy during routine scale-down events, allowing them to focus on genuine issues.

By adapting its kube-proxy readiness checks, Gardener continues to refine its operational robustness, providing a more stable and predictable management experience.

Further Information

- GitHub Pull Request: gardener/gardener#12015

- Recording of the presentation segment: Watch on YouTube (starts at the relevant section)

- Upstream KEP: KEP-3836: Kube-proxy improved ingress connectivity reliability

- Upstream Kubernetes PR: kubernetes/kubernetes#116470

New in Gardener: Forceful Redeployment of gardenlets for Enhanced Operational Control

Gardener continues to enhance its operational capabilities, and a recent improvement introduces a much-requested feature for managing gardenlets: the ability to forcefully trigger their redeployment. This provides operators with greater control and a streamlined recovery path for specific scenarios.

The Standard gardenlet Lifecycle

gardenlets, crucial components in the Gardener architecture, are typically deployed into seed clusters. For setups utilizing the seedmanagement.gardener.cloud/v1alpha1.Gardenlet resource, particularly in unmanaged seeds (those not backed by a shoot cluster and ManagedSeed resource), the gardener-operator handles the initial deployment of the gardenlet.

Once this initial deployment is complete, the gardenlet takes over its own lifecycle, leveraging a self-upgrade strategy to keep itself up-to-date. Under normal circumstances, the gardener-operator does not intervene further after this initial phase.

When Things Go Awry: The Need for Intervention

While the self-upgrade mechanism is robust, certain situations can arise where a gardenlet might require a more direct intervention. For example:

- The gardenlet’s client certificate to the virtual garden cluster might have expired or become invalid.

- The gardenlet Deployment in the seed cluster might have been accidentally deleted or become corrupted.

In such cases, because the gardener-operator’s responsibility typically ends after the initial deployment, the gardenlet might not be able to recover on its own, potentially leading to operational issues.

Empowering Operators: The Force-Redeploy Annotation

To address these challenges, Gardener now allows operators to instruct the gardener-operator to forcefully redeploy a gardenlet. This is achieved by annotating the specific Gardenlet resource with:

gardener.cloud/operation=force-redeploy

When this annotation is applied, it signals the gardener-operator to re-initiate the deployment process for the targeted gardenlet, effectively overriding the usual hands-off approach after initial setup.

How It Works

The process for a forceful redeployment is straightforward:

- An operator identifies a gardenlet that requires redeployment due to issues like an expired certificate or a missing deployment.

- The operator applies the gardener.cloud/operation=force-redeploy annotation to the corresponding seedmanagement.gardener.cloud/v1alpha1.Gardenlet resource in the virtual garden cluster.

- Important: If the gardenlet is for a remote cluster and its kubeconfig Secret was previously removed (a standard cleanup step after initial deployment), this Secret must be recreated, and its reference (.spec.kubeconfigSecretRef) must be re-added to the Gardenlet specification.

- The gardener-operator detects the annotation and proceeds to redeploy the gardenlet, applying its configurations and charts anew.

- Once the redeployment is successfully completed, the gardener-operator automatically removes the gardener.cloud/operation=force-redeploy annotation from the Gardenlet resource. Similar to the initial deployment, it will also clean up the referenced kubeconfig Secret and set .spec.kubeconfigSecretRef to nil if it was provided.
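
The steps above can be sketched as a small reconcile function operating on a Gardenlet object held as a dictionary. It only models the annotation handling described here; the `deploy` callback, the function name, and the secret name are hypothetical, and deleting the referenced Secret itself is left out.

```python
OPERATION_ANNOTATION = "gardener.cloud/operation"
FORCE_REDEPLOY = "force-redeploy"


def reconcile_force_redeploy(gardenlet: dict, deploy) -> bool:
    """Redeploy when the annotation is set, then clean up. Returns True if redeployed."""
    annotations = gardenlet.setdefault("metadata", {}).setdefault("annotations", {})
    if annotations.get(OPERATION_ANNOTATION) != FORCE_REDEPLOY:
        return False  # normal case: no intervention requested via this annotation
    deploy(gardenlet)  # if this raises, the annotation stays and the attempt can be retried
    del annotations[OPERATION_ANNOTATION]
    spec = gardenlet.setdefault("spec", {})
    if spec.get("kubeconfigSecretRef") is not None:
        spec["kubeconfigSecretRef"] = None  # mirrors setting the field to nil
    return True


deployed = []
gardenlet = {
    "metadata": {"name": "my-seed", "annotations": {OPERATION_ANNOTATION: FORCE_REDEPLOY}},
    "spec": {"kubeconfigSecretRef": {"name": "my-seed-kubeconfig"}},
}
print(reconcile_force_redeploy(gardenlet, deployed.append))
print(gardenlet["metadata"]["annotations"], gardenlet["spec"])
```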

Benefits

This new feature offers significant advantages for Gardener operators:

- Enhanced Recovery: Provides a clear and reliable mechanism to recover gardenlets from specific critical failure states.

- Improved Operational Flexibility: Offers more direct control over the gardenlet lifecycle when exceptional circumstances demand it.

- Reduced Manual Effort: Streamlines the process of restoring a misbehaving gardenlet, minimizing potential downtime or complex manual recovery procedures.

This enhancement underscores Gardener’s commitment to operational excellence and responsiveness to the needs of its user community.

Dive Deeper

To learn more about this feature, you can explore the following resources:

- GitHub Pull Request: gardener/gardener#11972

- Official Documentation: Forceful Re-Deployment of gardenlets

- Community Meeting Recording (starts at the relevant segment): Gardener Review Meeting on YouTube

Streamlined Node Onboarding: Introducing `gardenadm token` and `gardenadm join`

Gardener continues to enhance its gardenadm tool, simplifying the management of autonomous Shoot clusters. Recently, new functionalities have been introduced to streamline the process of adding worker nodes to these clusters: the gardenadm token command suite and the corresponding gardenadm join command. These additions offer a more convenient and Kubernetes-native experience for cluster expansion.

Managing Bootstrap Tokens with gardenadm token

A key aspect of securely joining nodes to a Kubernetes cluster is the use of bootstrap tokens. The new gardenadm token command provides a set of subcommands to manage these tokens effectively within your autonomous Shoot cluster’s control plane node. This functionality is analogous to the familiar kubeadm token commands.

The available subcommands include:

- gardenadm token list: Displays all current bootstrap tokens. You can also use the --with-token-secrets flag to include the token secrets in the output for easier inspection.

- gardenadm token generate: Generates a cryptographically random bootstrap token. This command only prints the token; it does not create it on the server.

- gardenadm token create [token]: Creates a new bootstrap token on the server. If you provide a token (in the format [a-z0-9]{6}.[a-z0-9]{16}), it will be used. If no token is supplied, gardenadm will automatically generate a random one and create it.

  - A particularly helpful option for this command is --print-join-command. When used, instead of just outputting the token, it prints the complete gardenadm join command, ready to be copied and executed on the worker node you intend to join. You can also specify flags like --description, --validity, and --worker-pool-name to customize the token and the generated join command.

- gardenadm token delete <token-value...>: Deletes one or more bootstrap tokens from the server. You can specify tokens by their ID, the full token string, or the name of the Kubernetes Secret storing the token (e.g., bootstrap-token-<id>).
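
The token format and the Secret naming mentioned above can be illustrated as follows. This is a standalone sketch of the format only; it does not call gardenadm, and the helper names are invented for the example.

```python
import re
import secrets
import string

# Token ID (6 characters) and token secret (16 characters), separated by a literal dot.
TOKEN_PATTERN = re.compile(r"([a-z0-9]{6})\.([a-z0-9]{16})")
ALPHABET = string.ascii_lowercase + string.digits


def generate_token() -> str:
    """Create a random token in the documented format without storing it anywhere."""
    token_id = "".join(secrets.choice(ALPHABET) for _ in range(6))
    token_secret = "".join(secrets.choice(ALPHABET) for _ in range(16))
    return f"{token_id}.{token_secret}"


def secret_name(token: str) -> str:
    """Derive the Secret name (bootstrap-token-<id>) from a full token string."""
    match = TOKEN_PATTERN.fullmatch(token)
    if match is None:
        raise ValueError("token must match [a-z0-9]{6}.[a-z0-9]{16}")
    return f"bootstrap-token-{match.group(1)}"


print(secret_name("abcdef.0123456789abcdef"))  # prints bootstrap-token-abcdef
print(secret_name(generate_token()))
```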

These commands provide comprehensive control over the lifecycle of bootstrap tokens, enhancing security and operational ease.

Joining Worker Nodes with gardenadm join

Once a bootstrap token is created (ideally using gardenadm token create --print-join-command on a control plane node), the new gardenadm join command facilitates the process of adding a new worker node to the autonomous Shoot cluster.

The command is executed on the prospective worker machine and typically looks like this:

gardenadm join --bootstrap-token <token_id.token_secret> --ca-certificate <base64_encoded_ca_bundle> --gardener-node-agent-secret-name <os_config_secret_name> <control_plane_api_server_address>

Key parameters include:

- --bootstrap-token: The token obtained from the gardenadm token create command.

- --ca-certificate: The base64-encoded CA certificate bundle of the cluster’s API server.

- --gardener-node-agent-secret-name: The name of the Secret in the kube-system namespace of the control plane that contains the OperatingSystemConfig (OSC) for the gardener-node-agent. This OSC dictates how the node should be configured.

- <control_plane_api_server_address>: The address of the Kubernetes API server of the autonomous cluster.

Upon execution, gardenadm join performs several actions:

- It discovers the Kubernetes version of the control plane using the provided bootstrap token and CA certificate.

- It checks if the gardener-node-agent has already been initialized on the machine.

- If not already joined, it prepares the gardener-node-init configuration. This involves setting up a systemd service (gardener-node-init.service) which, in turn, downloads and runs the gardener-node-agent.

- The gardener-node-agent then uses the bootstrap token to securely download its specific OperatingSystemConfig from the control plane.

- Finally, it applies this configuration, setting up the kubelet and other necessary components, thereby officially joining the node to the cluster.

After the node has successfully joined, the bootstrap token used for the process will be automatically deleted by the kube-controller-manager once it expires. However, it can also be manually deleted immediately using gardenadm token delete on the control plane node for enhanced security.

These new gardenadm commands significantly simplify the expansion of autonomous Shoot clusters, providing a robust and user-friendly mechanism for managing bootstrap tokens and joining worker nodes.

Further Information

Enhanced Network Flexibility: Gardener Now Supports CIDR Overlap for Non-HA Shoots

Gardener is continually evolving to offer greater flexibility and efficiency in managing Kubernetes clusters. A significant enhancement has been introduced that addresses a common networking challenge: the requirement for completely disjoint network CIDR blocks between a shoot cluster and its seed cluster. Now, Gardener allows for IPv4 network overlap in specific scenarios, providing users with more latitude in their network planning.

Addressing IP Address Constraints

Previously, all shoot cluster networks (pods, services, nodes) had to be distinct from the seed cluster’s networks. This could be challenging in environments with limited IP address space or complex network topologies. With this new feature, IPv4 or dual-stack shoot clusters can now define pod, service, and node networks that overlap with the IPv4 networks of their seed cluster.

How It Works: NAT for Seamless Connectivity

This capability is enabled through a double Network Address Translation (NAT) mechanism within the VPN connection established between the shoot and seed clusters. When IPv4 network overlap is configured, Gardener intelligently maps the overlapping shoot and seed networks to a dedicated set of newly reserved IPv4 ranges. These ranges are used exclusively within the VPN pods to ensure seamless communication, effectively resolving any conflicts that would arise from the overlapping IPs.

The reserved mapping ranges are:

- 241.0.0.0/8: Seed Pod Mapping Range

- 242.0.0.0/8: Shoot Node Mapping Range

- 243.0.0.0/8: Shoot Service Mapping Range

- 244.0.0.0/8: Shoot Pod Mapping Range
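
One plausible way to picture such a mapping is to keep an address's offset within its original network and re-base it onto the reserved range. The sketch below shows this with Python's ipaddress module. The offset-preserving rule and the function name are assumptions made for illustration; the actual translation performed inside the VPN pods may differ.

```python
import ipaddress


def map_address(address: str, source_network: str, mapping_range: str) -> str:
    """Re-base an address from its own network onto a reserved mapping range."""
    addr = ipaddress.IPv4Address(address)
    source = ipaddress.IPv4Network(source_network)
    target = ipaddress.IPv4Network(mapping_range)
    if addr not in source:
        raise ValueError(f"{addr} is not part of {source}")
    if source.num_addresses > target.num_addresses:
        raise ValueError(f"{source} does not fit into {target}")
    offset = int(addr) - int(source.network_address)
    return str(target.network_address + offset)


# A shoot pod IP whose network overlaps with the seed, mapped into 244.0.0.0/8.
print(map_address("10.0.1.5", "10.0.0.0/16", "244.0.0.0/8"))  # prints 244.0.1.5
```

Since each reserved range is a /8, any shoot or seed network with a prefix length of 8 or longer fits into its mapping range under this scheme.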

Conditions for Utilizing Overlapping Networks

To leverage this new network flexibility, the following conditions must be met:

- Non-Highly-Available VPN: The shoot cluster must utilize a non-highly-available (non-HA) VPN. This is typically the configuration for shoots with a non-HA control plane.

- IPv4 or Dual-Stack Shoots: The shoot cluster must be configured as either single-stack IPv4 or dual-stack (IPv4/IPv6). The overlap feature specifically pertains to IPv4 networks.

- Non-Use of Reserved Ranges: The shoot cluster’s own defined networks (for pods, services, and nodes) must not utilize any of the Gardener-reserved IP ranges, including the newly introduced mapping ranges listed above, or the existing 240.0.0.0/8 range (Kube-ApiServer Mapping Range).
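
The third condition lends itself to a quick pre-flight check. The following sketch tests a shoot's IPv4 networks against the reserved ranges named in this post; the helper name and the sample CIDRs are illustrative, and this is not Gardener's own validation.

```python
import ipaddress

# Reserved ranges as listed in this post.
RESERVED_RANGES = {
    "240.0.0.0/8": "Kube-ApiServer Mapping Range",
    "241.0.0.0/8": "Seed Pod Mapping Range",
    "242.0.0.0/8": "Shoot Node Mapping Range",
    "243.0.0.0/8": "Shoot Service Mapping Range",
    "244.0.0.0/8": "Shoot Pod Mapping Range",
}


def reserved_conflicts(networks: dict) -> list[str]:
    """List every shoot network that overlaps with a reserved range."""
    conflicts = []
    for name, cidr in networks.items():
        network = ipaddress.ip_network(cidr)
        if network.version != 4:
            continue  # the reserved ranges are IPv4 only
        for reserved, purpose in RESERVED_RANGES.items():
            if network.overlaps(ipaddress.ip_network(reserved)):
                conflicts.append(f"{name} {cidr} overlaps {reserved} ({purpose})")
    return conflicts


shoot_networks = {"pods": "10.96.0.0/11", "services": "243.1.0.0/16", "nodes": "10.250.0.0/16"}
for conflict in reserved_conflicts(shoot_networks):
    print(conflict)
```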

It’s important to note that Gardener will prevent the migration of a non-HA shoot to an HA setup if its network ranges currently overlap with the seed, as this feature is presently limited to non-HA VPN configurations. For single-stack IPv6 shoots, Gardener continues to enforce non-overlapping IPv6 networks to avoid any potential issues, although IPv6 address space exhaustion is less common.

Benefits for Gardener Users

This enhancement offers increased flexibility in IP address management, particularly beneficial for users operating numerous shoot clusters or those in environments with constrained IPv4 address availability. By relaxing the strict disjointedness requirement for non-HA shoots, Gardener simplifies network allocation and reduces the operational overhead associated with IP address planning.

Explore Further

To dive deeper into this feature, you can review the original pull request and the updated documentation:

- GitHub PR: feat: Allow CIDR overlap for non-HA VPN shoots (#11582)

- Gardener Documentation: Shoot Networking

- Developer Talk Recording: Gardener Development - Sprint Review #131

Enhanced Node Management: Introducing In-Place Updates in Gardener

Gardener is committed to providing efficient and flexible Kubernetes cluster management. Traditionally, updates to worker pool configurations, such as machine image or Kubernetes minor version changes, trigger a rolling update. This process replaces existing nodes with new ones, which is a robust approach for many scenarios. However, in environments with physical or bare-metal nodes, stateful workloads sensitive to node replacement, or scarce virtual machine types, it can introduce challenges like extended update times and potential disruptions.

To address these needs, Gardener now introduces In-Place Node Updates. This new capability allows certain updates to be applied directly to existing worker nodes without requiring their replacement, significantly reducing disruption and speeding up update processes for compatible changes.

New Update Strategies for Worker Pools

Gardener now supports three distinct update strategies for your worker pools, configurable via the updateStrategy field in the Shoot specification’s worker pool definition:

- AutoRollingUpdate: This is the classic and default strategy. When updates occur, nodes are cordoned, drained, terminated, and replaced with new nodes incorporating the changes.

- AutoInPlaceUpdate: With this strategy, compatible updates are applied directly to the existing nodes. The MachineControllerManager (MCM) automatically selects nodes, cordons and drains them, and then signals the Gardener Node Agent (GNA) to perform the update. Once GNA confirms success, MCM uncordons the node.

- ManualInPlaceUpdate: This strategy also applies updates directly to existing nodes but gives operators fine-grained control. After an update is specified, MCM marks all nodes in the pool as candidates. Operators must then manually label individual nodes to select them for the in-place update process, which then proceeds similarly to the AutoInPlaceUpdate strategy.

The AutoInPlaceUpdate and ManualInPlaceUpdate strategies are available when the InPlaceNodeUpdates feature gate is enabled in the gardener-apiserver.

What Can Be Updated In-Place?

In-place updates are designed to handle a variety of common operational tasks more efficiently:

- Machine Image Updates: Newer versions of a machine image can be rolled out by executing an update command directly on the node, provided the image and cloud profile are configured to support this.

- Kubernetes Minor Version Updates: Updates to the Kubernetes minor version of worker nodes can be applied in-place.

- Kubelet Configuration Changes: Modifications to the Kubelet configuration can be applied directly.

- Credentials Rotation: Critical for security, rotation of Certificate Authorities (CAs) and ServiceAccount signing keys can now be performed on existing nodes without replacement.

However, some changes still necessitate a rolling update (node replacement):

- Changing the machine image name (e.g., switching from Ubuntu to Garden Linux).

- Modifying the machine type.

- Altering volume types or sizes.

- Changing the Container Runtime Interface (CRI) name (e.g., from Docker to containerd).

- Enabling or disabling node-local DNS.
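The two lists above can be read as a simple decision rule. The sketch below is an illustration only: the category keys (such as machine_image_version) and the function plan_update are hypothetical labels invented for this example, not Gardener API fields. How Gardener itself reacts when a replacement-only change is requested on an in-place pool is not covered here; the sketch only reports that node replacement would be needed.

```python
# Change categories taken from the two lists above. The string keys are
# hypothetical labels chosen for this sketch, not Gardener API fields.
IN_PLACE_CAPABLE = {
    "machine_image_version",
    "kubernetes_minor_version",
    "kubelet_config",
    "credentials_rotation",
}
REQUIRES_ROLLING = {
    "machine_image_name",
    "machine_type",
    "volume_type_or_size",
    "cri_name",
    "node_local_dns",
}


def plan_update(update_strategy, changes):
    """Return "in-place" or "rolling" for a set of pending worker pool changes."""
    unknown = set(changes) - IN_PLACE_CAPABLE - REQUIRES_ROLLING
    if unknown:
        raise ValueError(f"unclassified changes: {sorted(unknown)}")
    if update_strategy == "AutoRollingUpdate":
        return "rolling"
    if update_strategy not in ("AutoInPlaceUpdate", "ManualInPlaceUpdate"):
        raise ValueError(f"unknown update strategy: {update_strategy}")
    # A single change from the second list means nodes must be replaced.
    if set(changes) & REQUIRES_ROLLING:
        return "rolling"
    return "in-place"
```

For example, plan_update("AutoInPlaceUpdate", {"kubelet_config"}) yields "in-place", while adding "machine_type" to the same set yields "rolling".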

Key API and Component Adaptations

Several Gardener components and APIs have been enhanced to support in-place updates:

- CloudProfile: The CloudProfile API now allows specifying inPlaceUpdates configuration within machineImage.versions. This includes a boolean supported field to indicate if a version supports in-place updates and an optional minVersionForUpdate string to define the minimum OS version from which an in-place update to the current version is permissible.

- Shoot Specification: As mentioned, the spec.provider.workers[].updateStrategy field allows selection of the desired update strategy. Additionally, spec.provider.workers[].machineControllerManagerSettings now includes machineInPlaceUpdateTimeout and disableHealthTimeout (which defaults to true for in-place strategies to prevent premature machine deletion during lengthy updates). For ManualInPlaceUpdate, maxSurge defaults to 0 and maxUnavailable to 1.

- OperatingSystemConfig (OSC): The OSC resource, managed by OS extensions, now includes status.inPlaceUpdates.osUpdate where extensions can specify the command and args for the Gardener Node Agent to execute for machine image (Operating System) updates. The spec.inPlaceUpdates field in the OSC will carry information like the target Operating System version, Kubelet version, and credential rotation status to the node.

- Gardener Node Agent (GNA): GNA is responsible for executing the in-place updates on the node. It watches for a specific node condition (InPlaceUpdate with reason ReadyForUpdate) set by MCM, performs the OS update, Kubelet updates, or credentials rotation, restarts necessary pods (like DaemonSets), and then labels the node with the update outcome.

- MachineControllerManager (MCM): MCM orchestrates the in-place update process. For in-place strategies, while new machine classes and machine sets are created to reflect the desired state, the actual machine objects are not deleted and recreated. Instead, their ownership is transferred to the new machine set. MCM handles cordoning, draining, and setting node conditions to coordinate with GNA.

- Shoot Status & Constraints: To provide visibility, the status.inPlaceUpdates.pendingWorkerUpdates field in the Shoot now lists worker pools pending autoInPlaceUpdate or manualInPlaceUpdate. A new ShootManualInPlaceWorkersUpdated constraint is added if any manual in-place updates are pending, ensuring users are aware.

- Worker Status: The Worker extension resource now includes status.inPlaceUpdates.workerPoolToHashMap to track the configuration hash of worker pools that have undergone in-place updates. This helps Gardener determine if a pool is up-to-date.

- Forcing Updates: If an in-place update is stuck, the gardener.cloud/operation=force-in-place-update annotation can be added to the Shoot to allow subsequent changes or retries.
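To make the CloudProfile fields more concrete, here is a rough sketch of how supported and minVersionForUpdate could gate an in-place machine image update. It is not Gardener's implementation. The helper names are hypothetical, versions are assumed to be purely numeric and dot-separated (no pre-release suffixes), and treating a missing minVersionForUpdate as "no lower bound" is an assumption of this example.

```python
def parse_version(version):
    """Turn a dotted numeric version such as "1.2.0" into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


def in_place_update_allowed(current_version, target):
    """Check whether a node on current_version may be updated in place to target.

    target is a plain dict mirroring the fields described above, for example
    {"version": "1.2.0",
     "inPlaceUpdates": {"supported": True, "minVersionForUpdate": "1.1.0"}}.
    """
    settings = target.get("inPlaceUpdates") or {}
    if not settings.get("supported", False):
        return False
    minimum = settings.get("minVersionForUpdate")
    if minimum is None:
        return True  # assumption: optional field omitted means no lower bound
    return parse_version(current_version) >= parse_version(minimum)
```

Comparing tuples of integers rather than raw strings avoids ordering "1.10.0" before "1.9.0".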

Benefits of In-Place Updates

- Reduced Disruption: Minimizes workload interruptions by avoiding full node replacements for compatible updates.

- Faster Updates: Applying changes directly can be quicker than provisioning new nodes, especially for OS patches or configuration changes.

- Bare-Metal Efficiency: Particularly beneficial for bare-metal environments where node provisioning is more time-consuming and complex.

- Stateful Workload Friendly: Lessens the impact on stateful applications that might be sensitive to node churn.

In-place node updates represent a significant step forward in Gardener’s operational flexibility, offering a more nuanced and efficient approach to managing node lifecycles, especially in demanding or specialized environments.

Dive Deeper

To explore the technical details and contributions that made this feature possible, refer to the following resources:

- Parent Issue for “[GEP-31] Support for In-Place Node Updates”: Issue #10219

- GEP-31: In-Place Node Updates of Shoot Clusters: GEP-31: In-Place Node Updates of Shoot Clusters

- Developer Talk Recording (starting at 39m37s): YouTube

Gardener Dashboard 1.80: Streamlined Credentials, Enhanced Cluster Views, and Real-Time Updates

Gardener Dashboard version 1.80 introduces several significant enhancements aimed at improving user experience, credentials management, and overall operational efficiency. These updates bring more clarity to credential handling, a smoother experience for managing large numbers of clusters, and a move towards a more reactive interface.

Unified and Enhanced Credentials Management

The management of secrets and credentials has been significantly revamped for better clarity and functionality:

- Introducing CredentialsBindings: The dashboard now fully supports CredentialsBinding resources alongside the existing SecretBinding resources. This allows for referencing both Secrets and, in the future, Workload Identities more explicitly. While CredentialsBindings referencing Workload Identity resources are visible for cluster creation, editing or deleting them via the dashboard is not yet supported.

- “Credentials” Page: The former “Secrets” page has been renamed to “Credentials.” It features a new “Kind” column and distinct icons to clearly differentiate between SecretBinding and CredentialsBinding types, especially useful when resources share names. The column showing the referenced credential resource name has been removed as this information is part of the binding’s details.

- Contextual Information and Safeguards: When editing a secret, all its associated data is now displayed, providing better context. If an underlying secret is referenced by multiple bindings, a hint is shown to prevent unintended impacts. Deletion of a binding is prevented if the underlying secret is still in use by another binding.

- Simplified Creation and Editing: New secrets created via the dashboard will now automatically generate a CredentialsBinding. While existing SecretBindings remain updatable, the creation of new SecretBindings through the dashboard is no longer supported, encouraging the adoption of the more versatile CredentialsBinding. The edit dialog for secrets now pre-fills current data, allowing for easier modification of specific fields.

- Handling Missing Secrets: The UI now provides clear information and guidance if a CredentialsBinding or SecretBinding references a secret that no longer exists.

Revamped Cluster List for Improved Scalability

Navigating and managing a large number of clusters is now more efficient:

- Virtual Scrolling: The cluster list has adopted virtual scrolling. Rows are rendered dynamically as you scroll, replacing the previous pagination system. This significantly improves performance and provides a smoother browsing experience, especially for environments with hundreds or thousands of clusters.

- Optimized Row Display: The height of individual rows in the cluster list has been reduced, allowing more clusters to be visible on the screen at once. Additionally, expandable content within a row (like worker details or ticket labels) now has a maximum height with internal scrolling, ensuring consistent row sizes and smooth virtual scrolling performance.
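The idea behind virtual scrolling can be sketched in a few lines. The dashboard itself is a web front end, so the Python below is only a language-neutral illustration of the arithmetic, not the dashboard's code. The function visible_rows, its parameters, and the overscan margin are assumptions for this example. It relies on every row having the same height, which is why a capped, consistent row size matters.

```python
def visible_rows(scroll_top, viewport_height, row_height, total_rows, overscan=2):
    """Return the half-open index range [start, end) of rows worth rendering.

    All sizes are integers in pixels. overscan adds a few extra rows above
    and below the viewport so that quick scrolling does not show gaps.
    """
    if row_height <= 0:
        raise ValueError("row_height must be positive")
    first = scroll_top // row_height
    # Ceiling division: a partially visible last row still has to be drawn.
    last = -(-(scroll_top + viewport_height) // row_height)
    start = max(0, first - overscan)
    end = min(total_rows, last + overscan)
    return start, end
```

With a 600 px viewport and 40 px rows, only about 15 to 20 rows are rendered at any time, regardless of whether the list holds a hundred clusters or several thousand.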

Real-Time Updates for Projects

The dashboard is becoming more dynamic with the introduction of real-time updates:

- Instant Project Changes: Modifications to projects, such as creation or deletion, are now reflected instantly in the project list and interface without requiring a page reload. This is achieved through WebSocket communication.

- Foundation for Future Reactivity: This enhancement for projects lays the groundwork for bringing real-time updates to other resources within the dashboard, such as Seeds and the Garden resource, in future releases.

Other Notable Enhancements

- Kubeconfig Update: The kubeconfig generated for garden cluster access via the “Account” page now uses the --oidc-pkce-method flag, replacing the deprecated --oidc-use-pkce flag. Users encountering deprecation messages should redownload their kubeconfig.

- Notification Behavior: Kubernetes warning notifications are now automatically dismissed after 5 seconds. However, all notifications will remain visible as long as the mouse cursor is hovering over them, giving users more time to read important messages.

- API Server URL Path: Support has been added for kubeconfigs that include a path in the API server URL.

These updates in Gardener Dashboard 1.80 collectively enhance usability, provide better control over credentials, and improve performance for large-scale operations.

For a comprehensive list of all features, bug fixes, and contributor acknowledgments, please refer to the official release notes. You can also view the segment of the community call discussing these dashboard updates here.

Gardener: Powering Enterprise Kubernetes at Scale and Europe's Sovereign Cloud Future

The Kubernetes ecosystem is dynamic, offering a wealth of tools to manage the complexities of modern cloud-native applications. For enterprises seeking to provision and manage Kubernetes clusters efficiently, securely, and at scale, a robust and comprehensive solution is paramount. Gardener, born from years of managing tens of thousands of clusters efficiently across diverse platforms and in demanding environments, stands out as a fully open-source choice for delivering fully managed Kubernetes Clusters as a Service. It already empowers organizations like SAP, STACKIT, T-Systems, and others (see adopters) and has become a core technology for NeoNephos, a project aimed at advancing digital autonomy in Europe (see KubeCon London 2025 Keynote and press announcement).

The Gardener Approach: An Architecture Forged by Experience