This is the multi-page printable view of this section. Click here to print.

Infrastructure Extensions

1 - Provider Alicloud

Gardener Extension for Alicloud provider

Project Gardener implements the automated management and operation of Kubernetes clusters as a service. Its main principle is to leverage Kubernetes concepts for all of its tasks.

Recently, most of the vendor specific logic has been developed in-tree. However, the project has grown to a size where it is very hard to extend, maintain, and test. With GEP-1 we have proposed how the architecture can be changed in a way to support external controllers that contain their very own vendor specifics. This way, we can keep Gardener core clean and independent.

This controller implements Gardener’s extension contract for the Alicloud provider.

An example for a ControllerRegistration resource that can be used to register this controller to Gardener can be found here.

Please find more information regarding the extensibility concepts and a detailed proposal here.

Supported Kubernetes versions

This extension controller supports the following Kubernetes versions:

| Version | Support | Conformance test results |

|---|---|---|

| Kubernetes 1.33 | 1.33.0+ | N/A |

| Kubernetes 1.32 | 1.32.0+ | |

| Kubernetes 1.31 | 1.31.0+ | |

| Kubernetes 1.30 | 1.30.0+ | |

| Kubernetes 1.29 | 1.29.0+ | |

| Kubernetes 1.28 | 1.28.0+ | |

| Kubernetes 1.27 | 1.27.0+ |

Please take a look here to see which versions are supported by Gardener in general.

How to start using or developing this extension controller locally

You can run the controller locally on your machine by executing make start.

Static code checks and tests can be executed by running make verify. We are using Go modules for Golang package dependency management and Ginkgo/Gomega for testing.

Feedback and Support

Feedback and contributions are always welcome!

Please report bugs or suggestions as GitHub issues or reach out on Slack (join the workspace here).

Learn more!

Please find further resources about out project here:

- Our landing page gardener.cloud

- “Gardener, the Kubernetes Botanist” blog on kubernetes.io

- “Gardener Project Update” blog on kubernetes.io

- GEP-1 (Gardener Enhancement Proposal) on extensibility

- GEP-4 (New

core.gardener.cloud/v1beta1API) - Extensibility API documentation

- Gardener Extensions Golang library

- Gardener API Reference

1.1 - Tutorials

1.1.1 - Create a Kubernetes Cluster on Alibaba Cloud with Gardener

Overview

Gardener allows you to create a Kubernetes cluster on different infrastructure providers. This tutorial will guide you through the process of creating a cluster on Alibaba Cloud.

Prerequisites

- You have created an Alibaba Cloud account.

- You have access to the Gardener dashboard and have permissions to create projects.

Steps

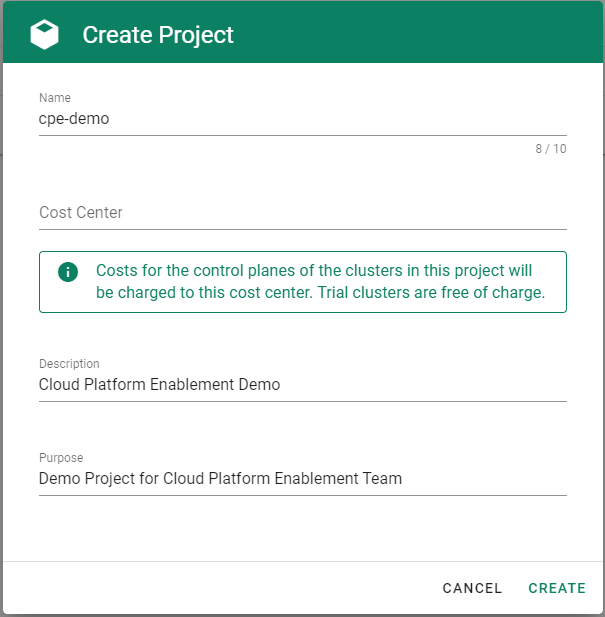

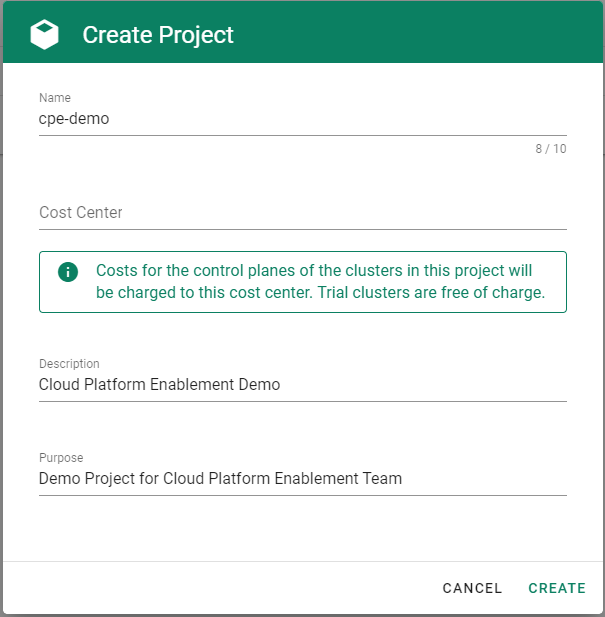

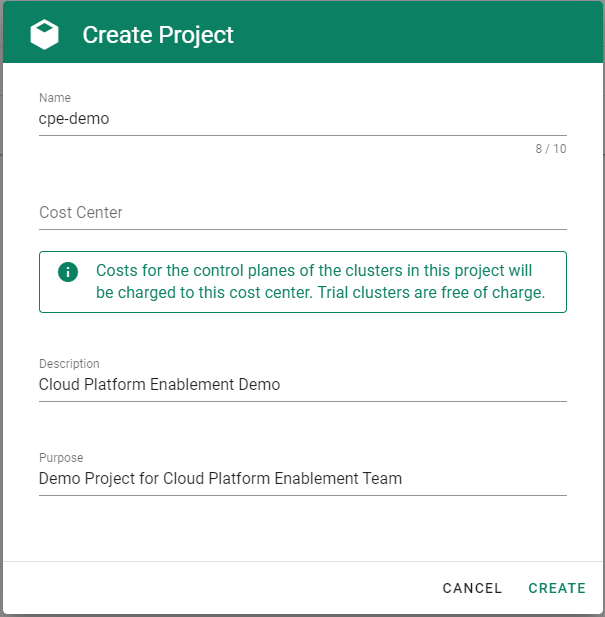

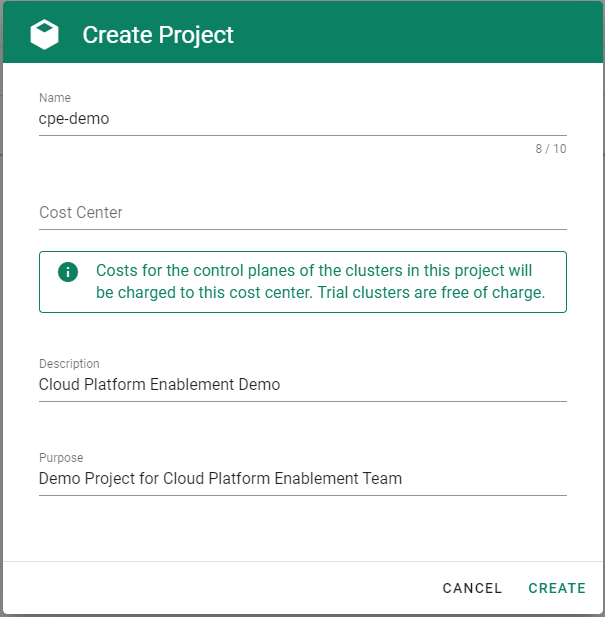

Go to the Gardener dashboard and create a project.

To be able to add shoot clusters to this project, you must first create a technical user on Alibaba Cloud with sufficient permissions.

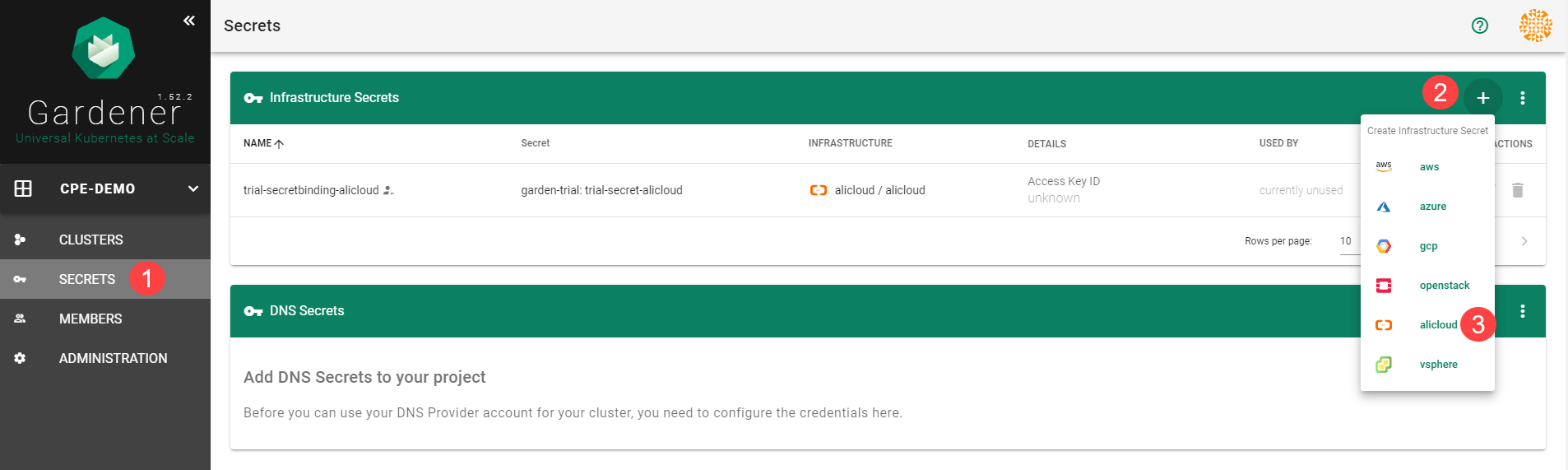

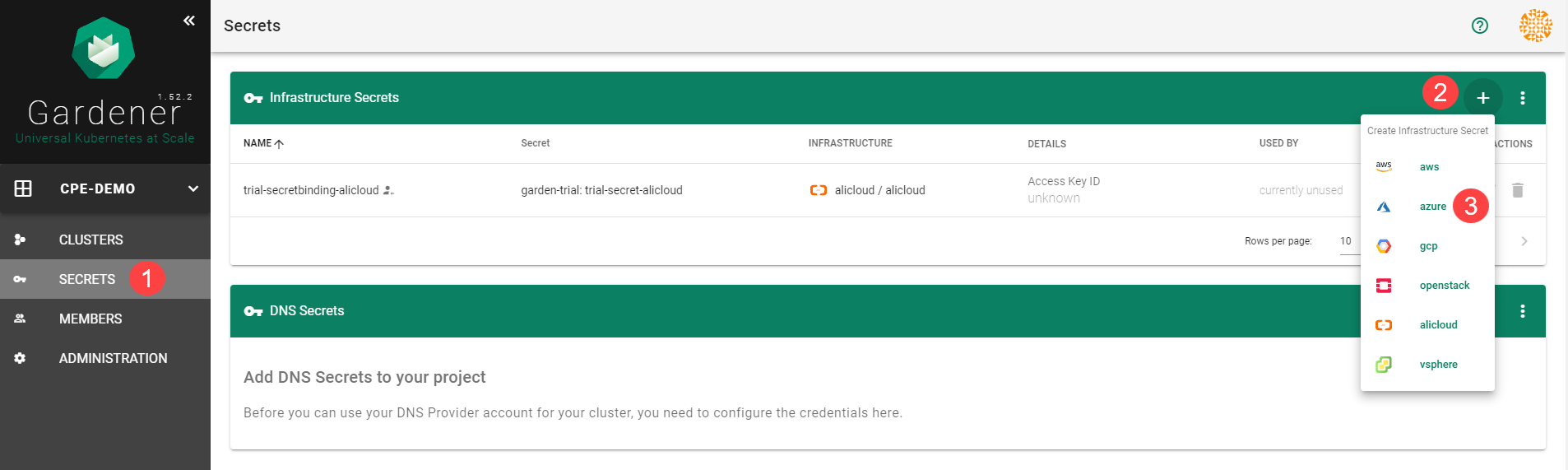

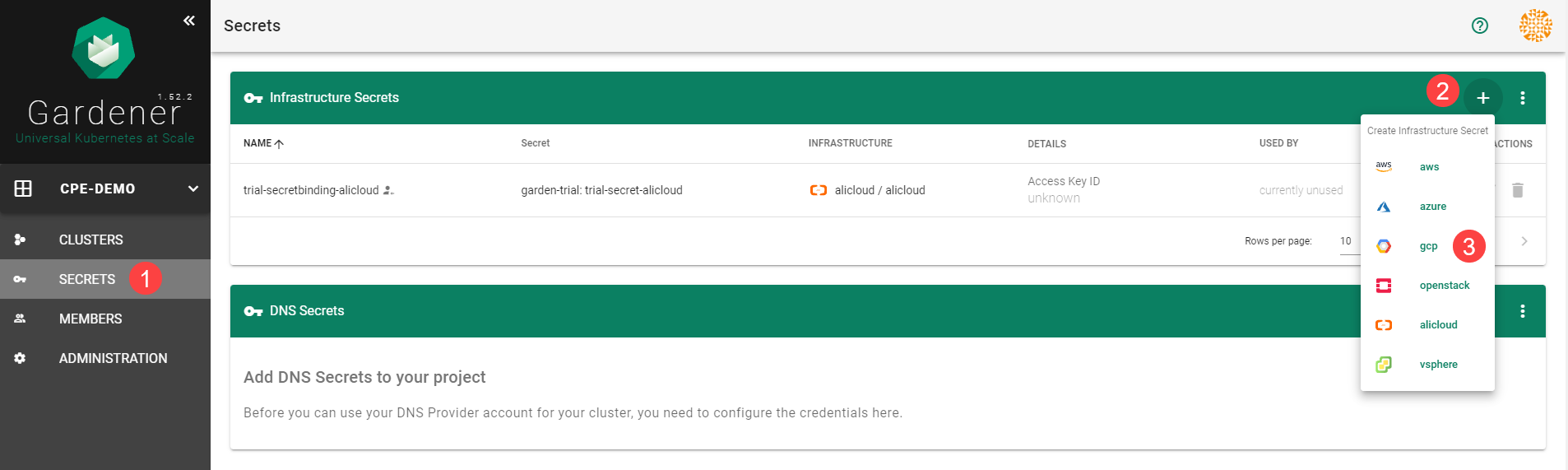

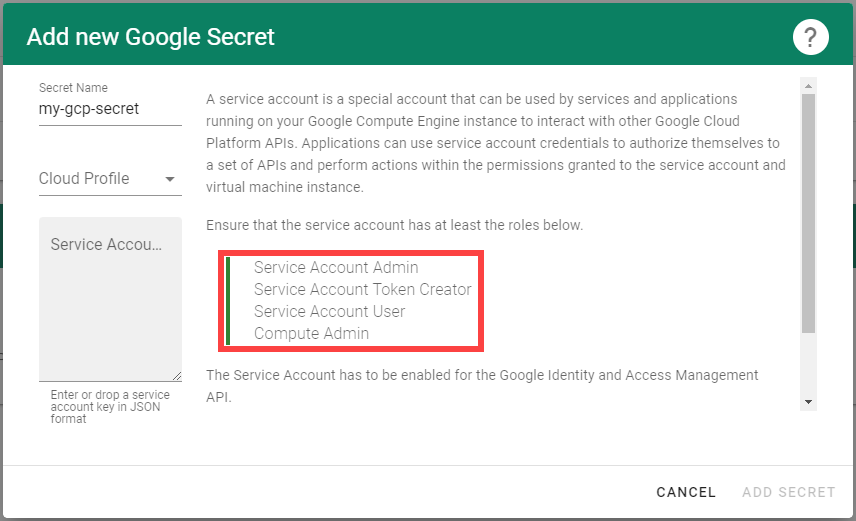

Choose Secrets, then the plus icon

and select AliCloud.

and select AliCloud.

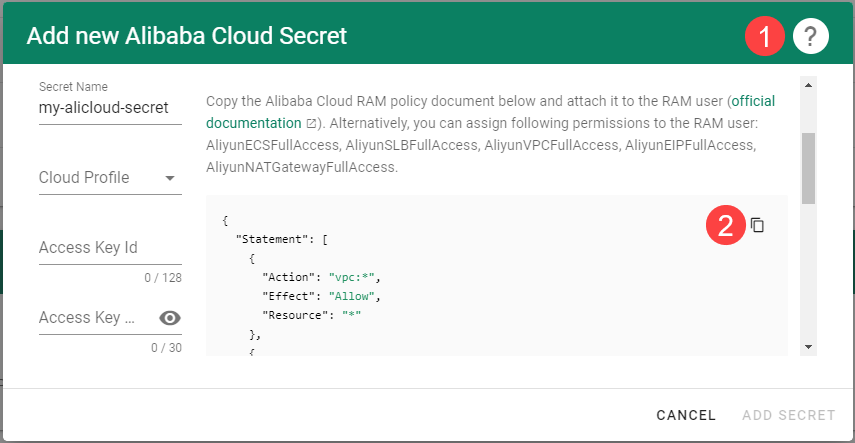

To copy the policy for Alibaba Cloud from the Gardener dashboard, click on the help icon

for Alibaba Cloud secrets, and choose copy

for Alibaba Cloud secrets, and choose copy  .

.

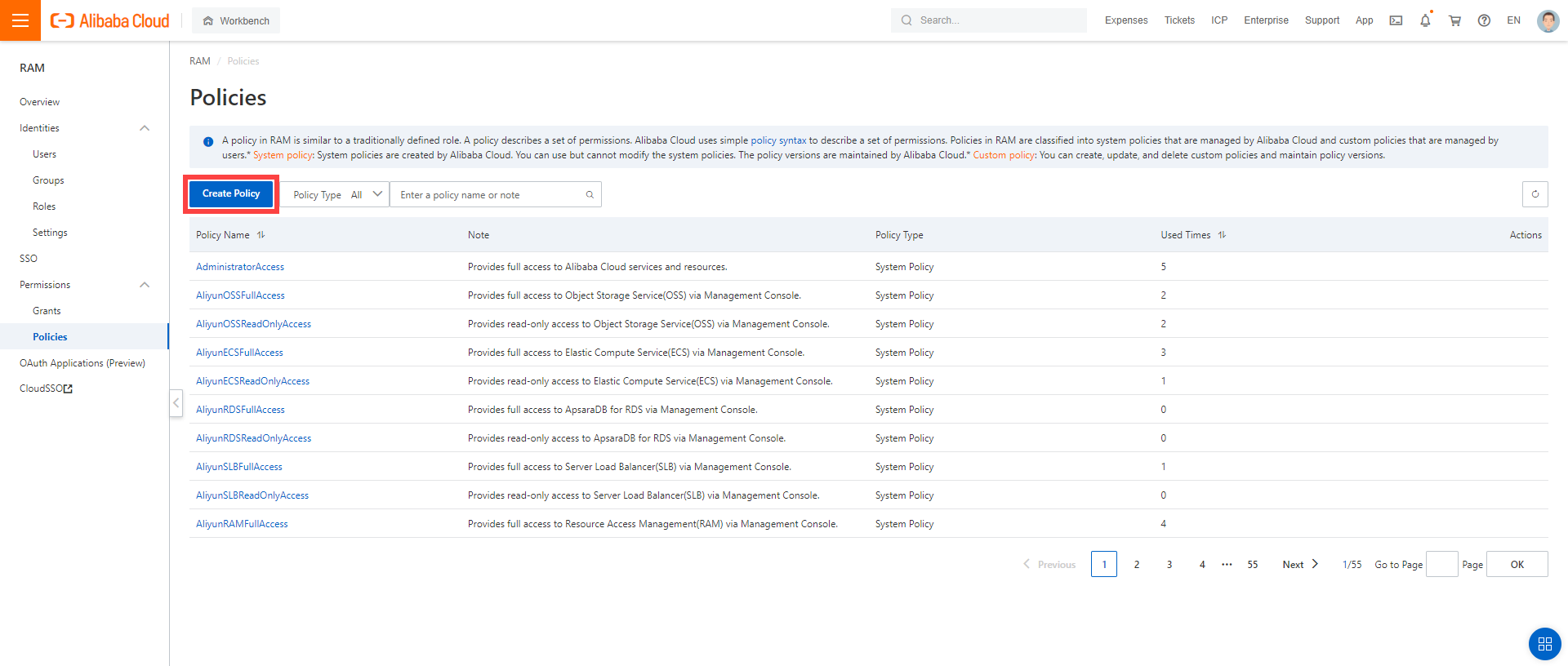

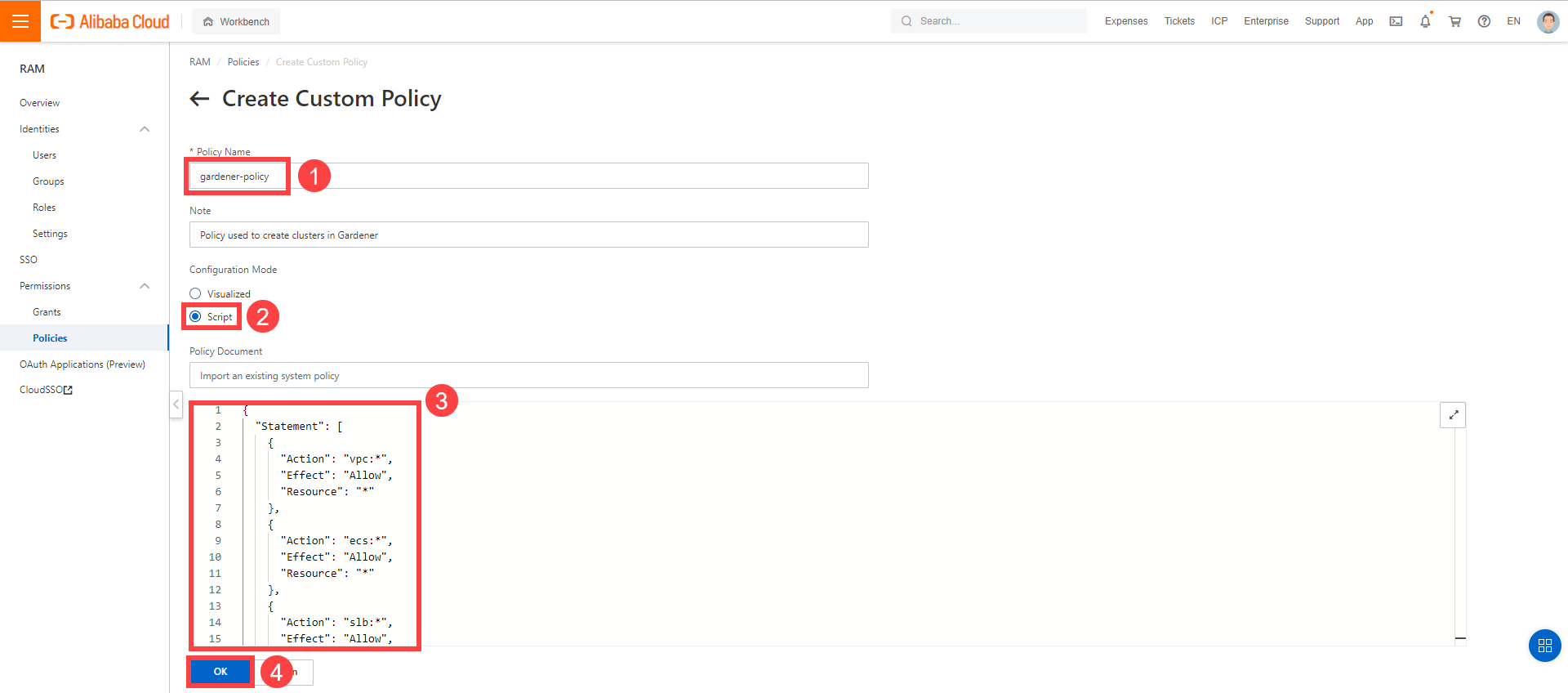

Create a custom policy in Alibaba Cloud:

Log on to your Alibaba account and choose RAM > Permissions > Policies.

Enter the name of your policy.

Select

Script.Paste the policy that you copied from the Gardener dashboard to this custom policy.

Choose OK.

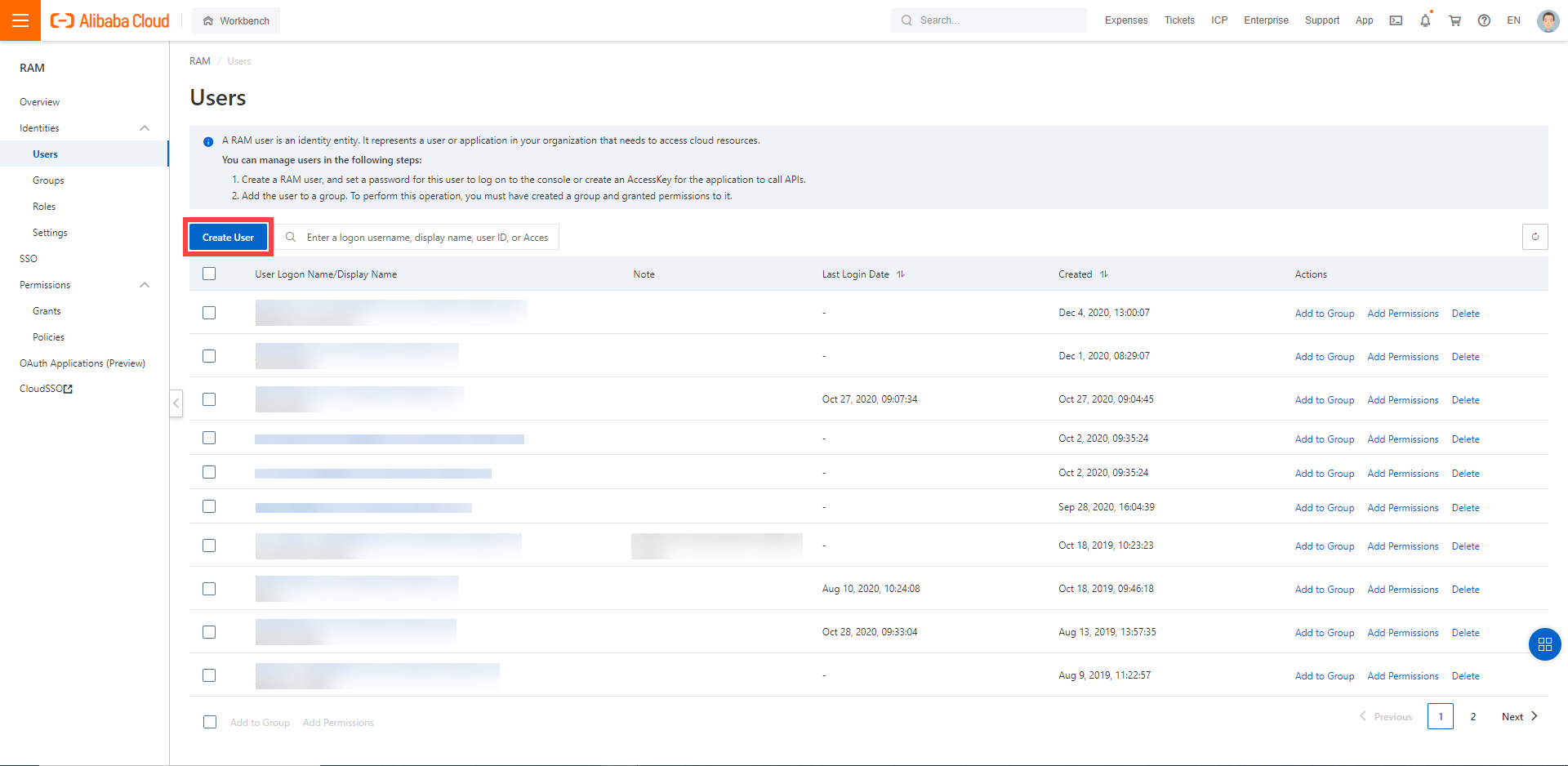

In the Alibaba Cloud console, create a new technical user:

Choose RAM > Users.

Choose Create User.

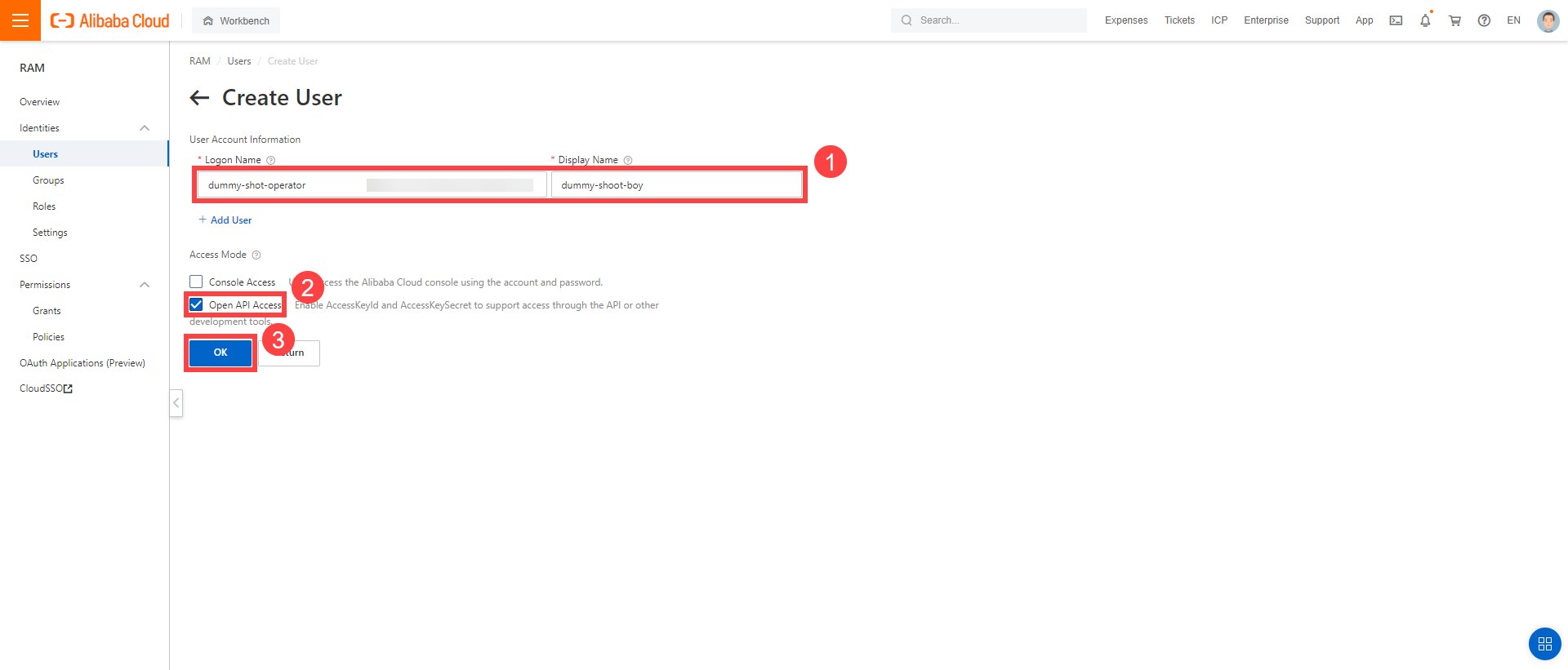

Enter a logon and display name for your user.

Select Open API Access.

Choose OK.

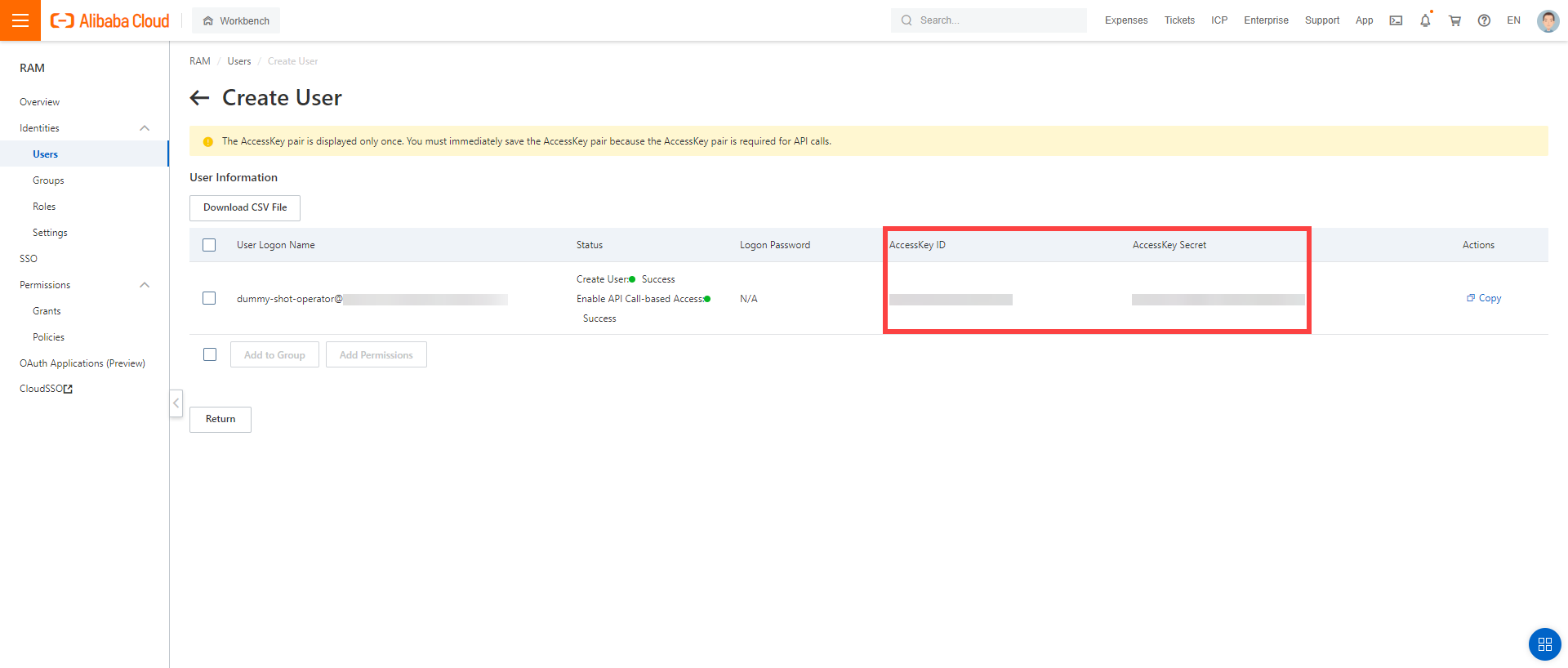

After the user is created,

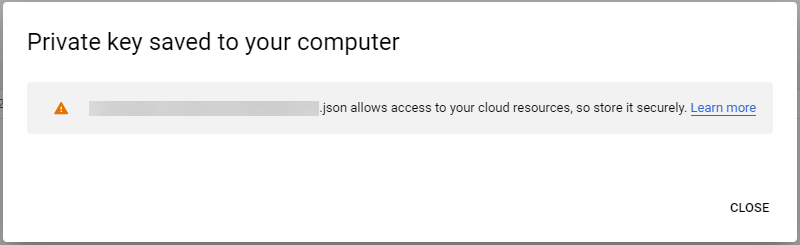

AccessKeyIdandAccessKeySecretare generated and displayed. Remember to save them. TheAccessKeyis used later to create secrets for Gardener.

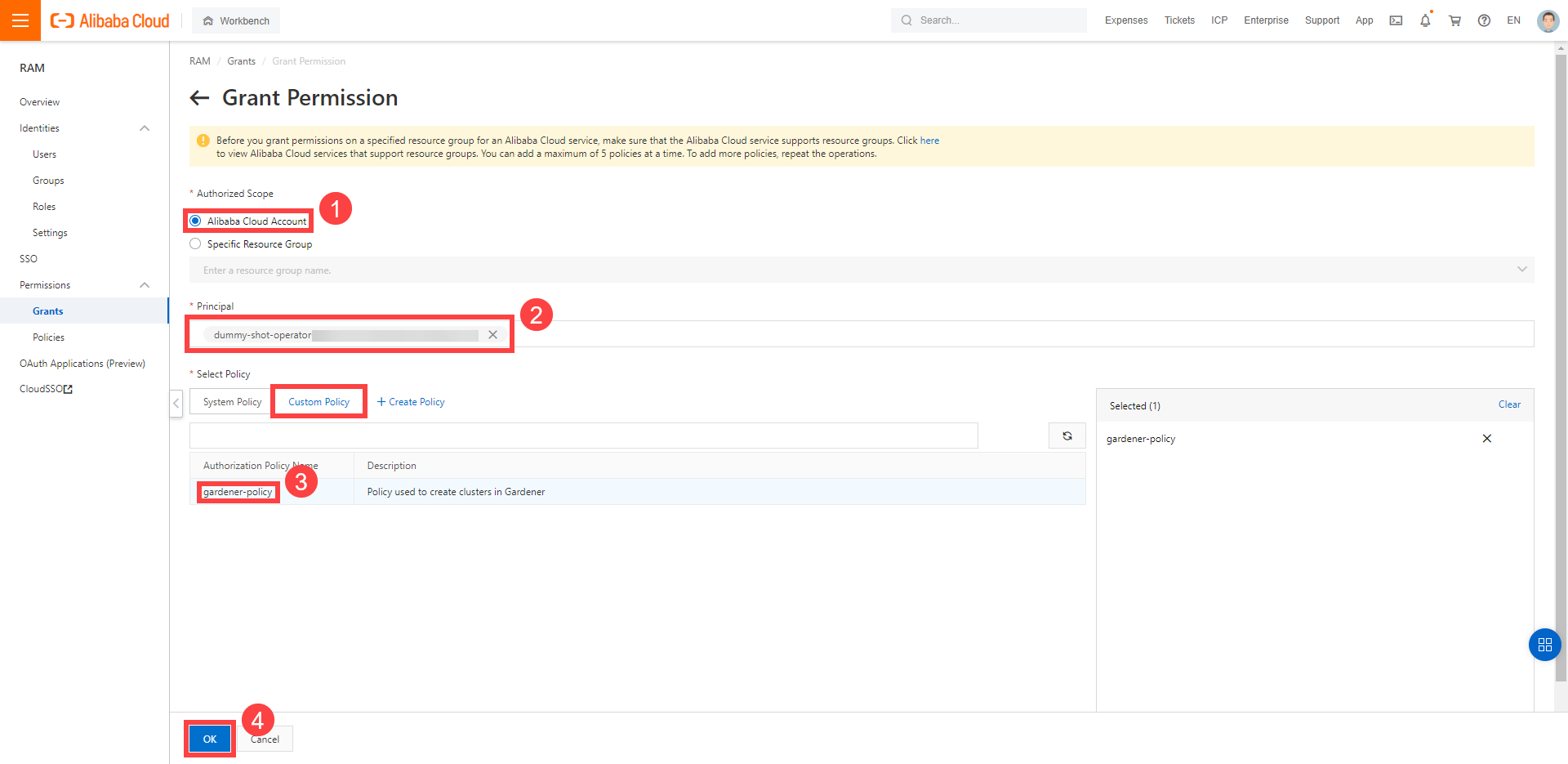

Assign the policy you created to the technical user:

Choose RAM > Permissions > Grants.

Choose Grant Permission.

Select Alibaba Cloud Account.

Assign the policy you’ve created before to the technical user.

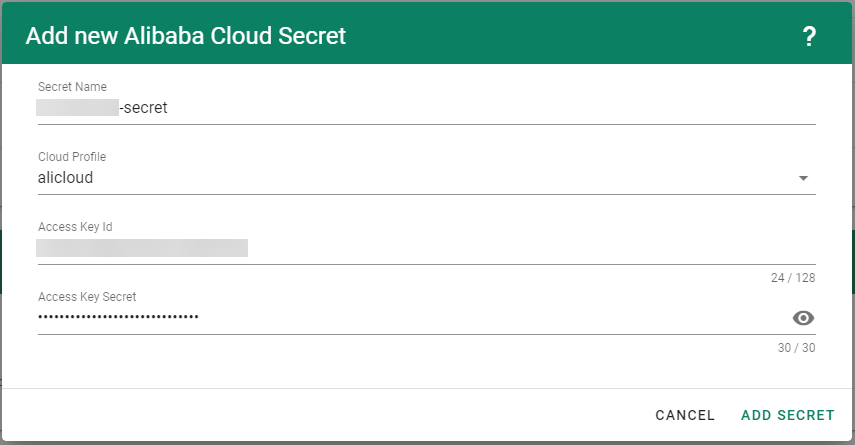

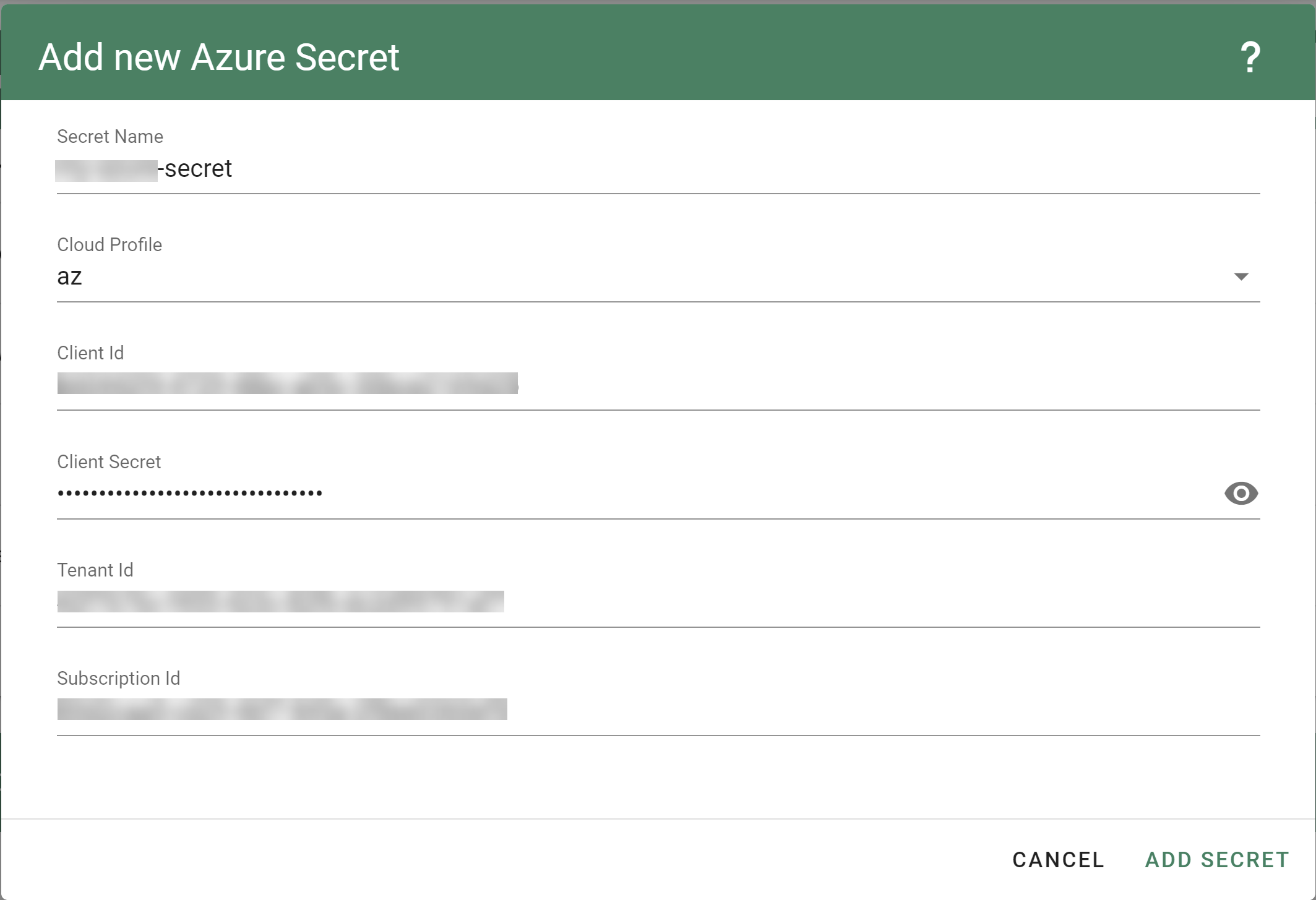

Create your secret.

- Type the name of your secret.

- Copy and paste the

Access Key IDandSecret Access Keyyou saved when you created the technical user on Alibaba Cloud. - Choose Add secret.

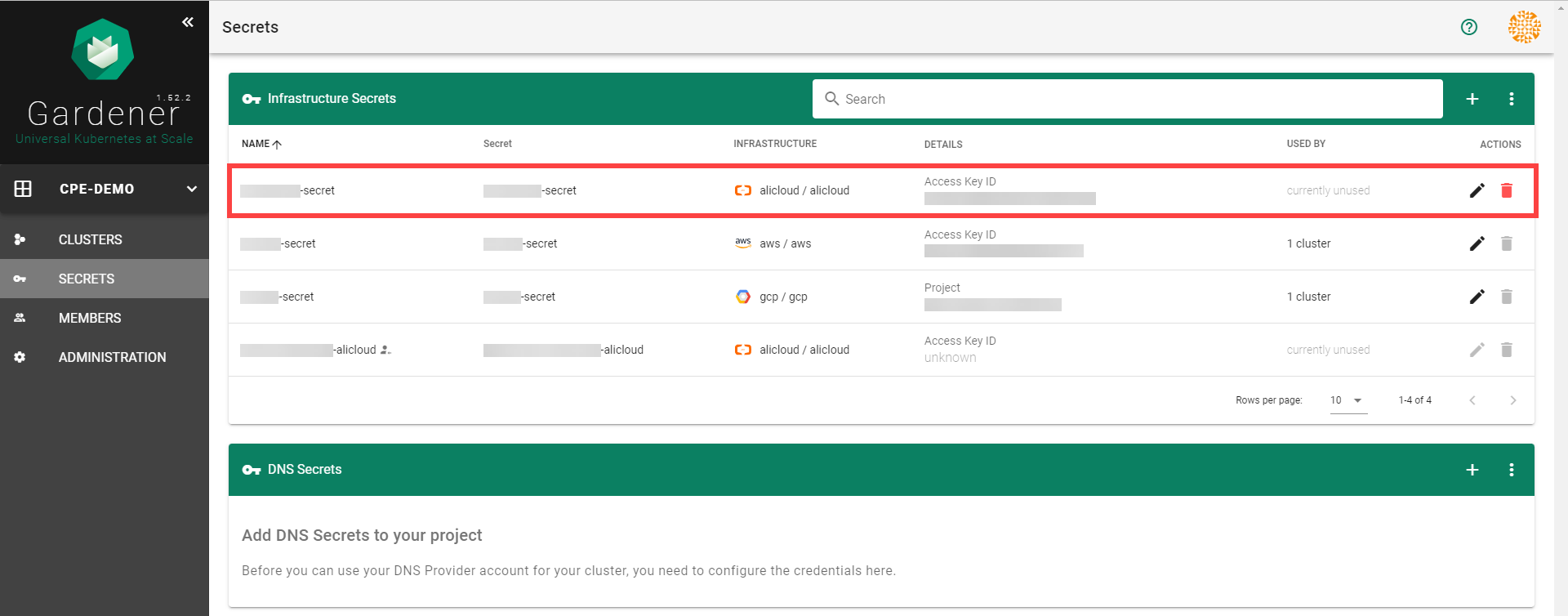

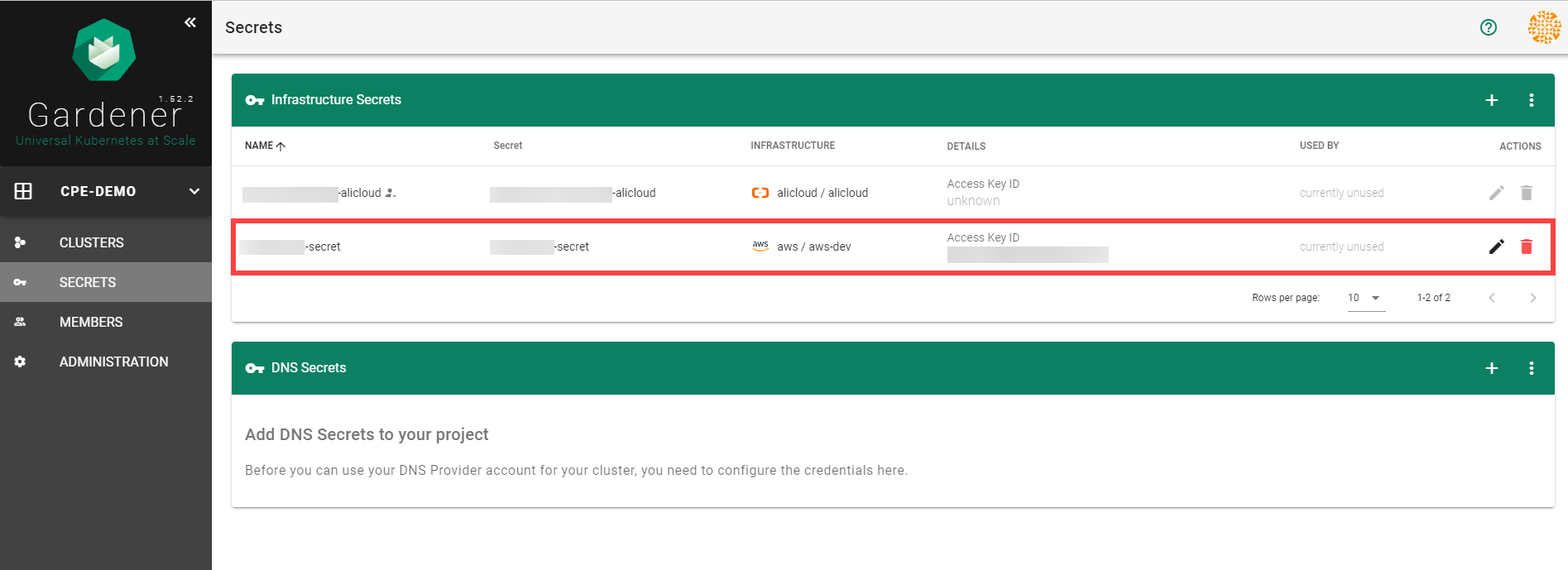

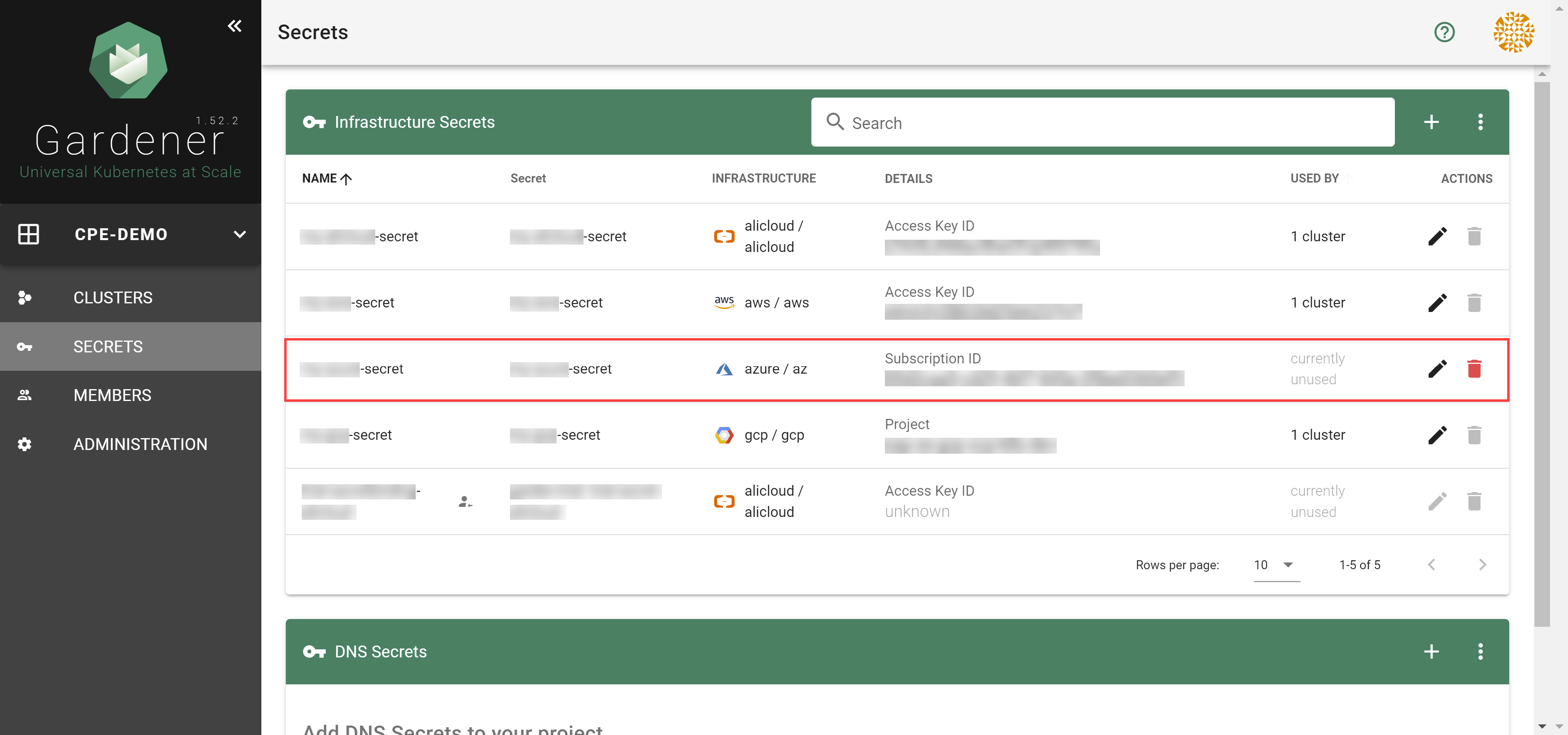

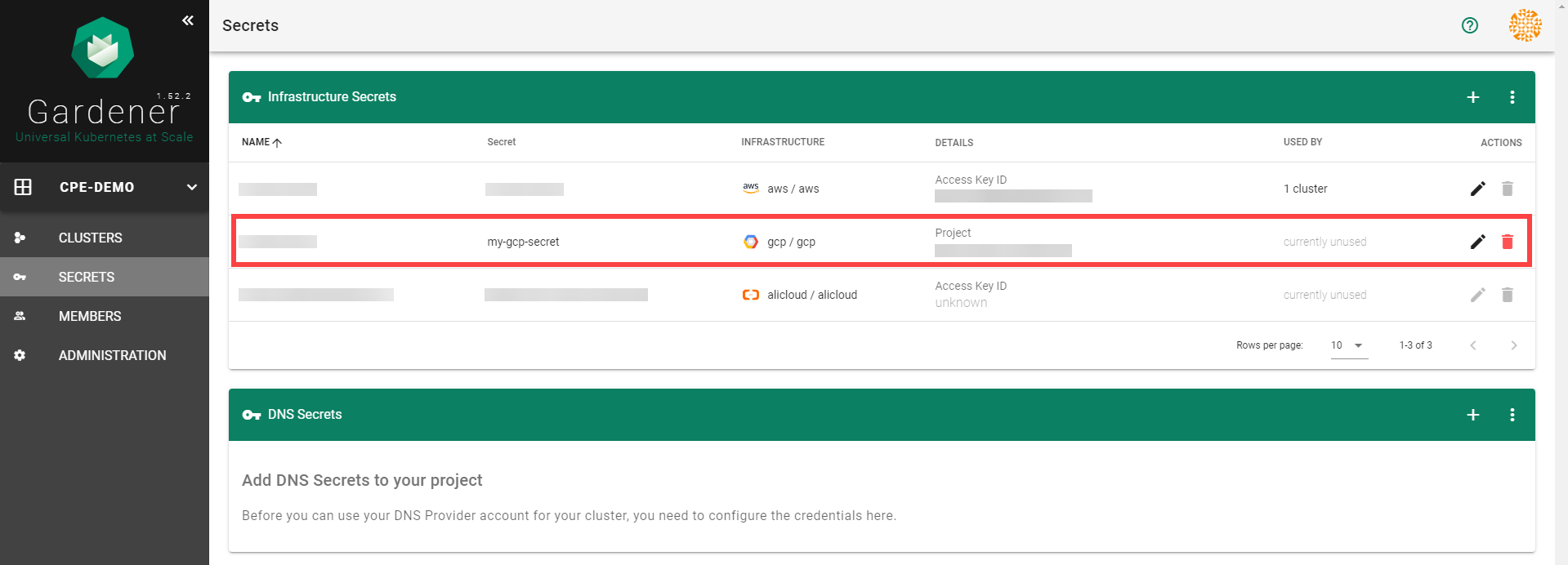

After completing these steps, you should see your newly created secret in the Infrastructure Secrets section.

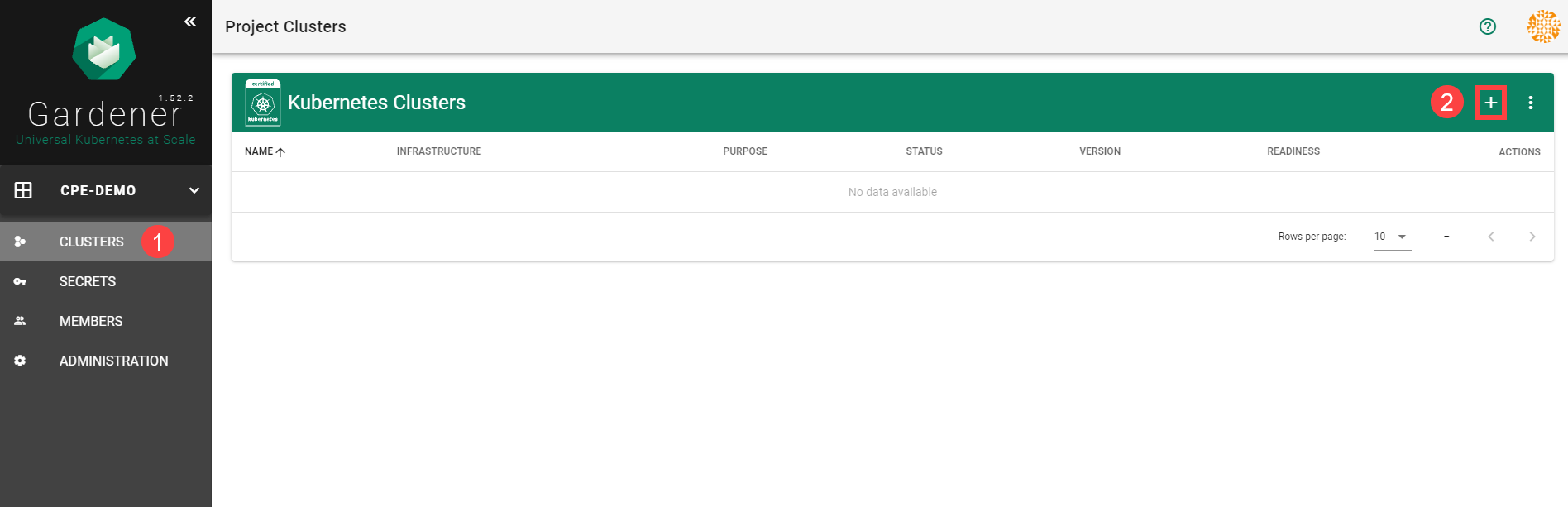

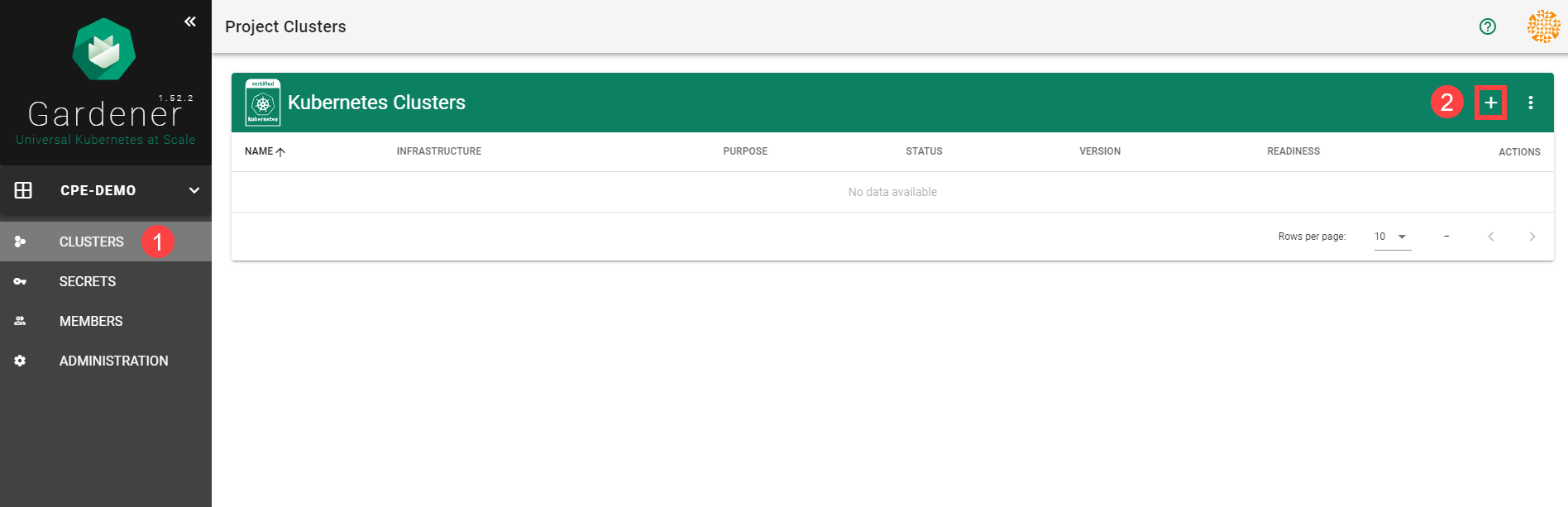

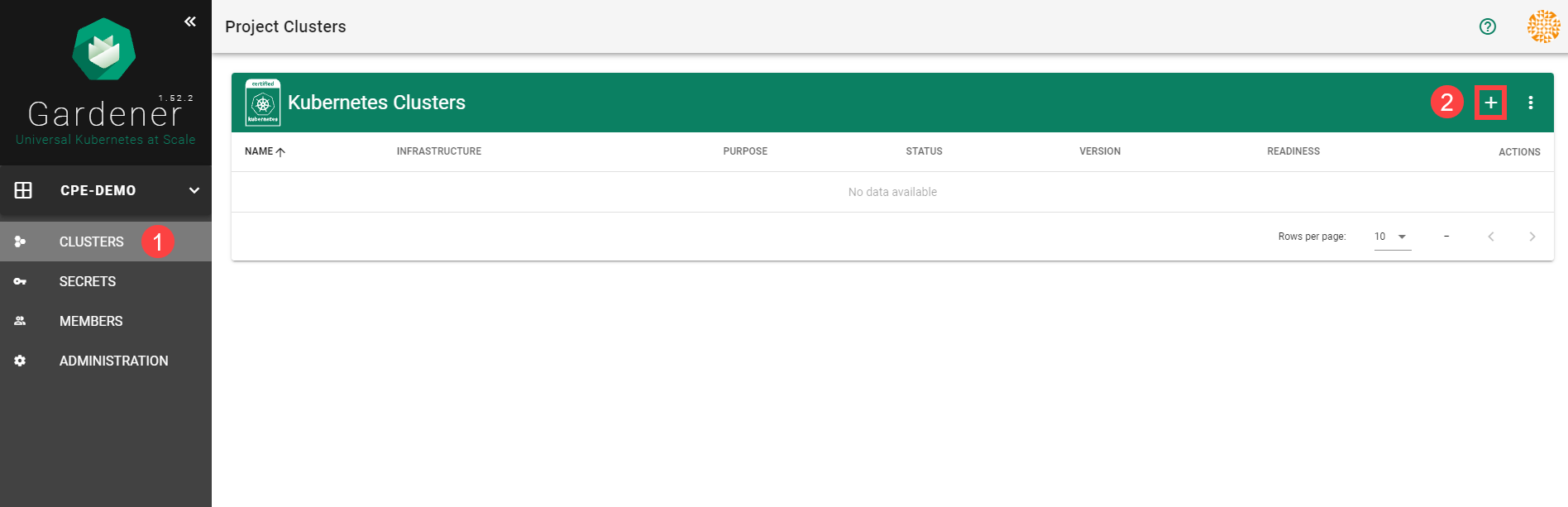

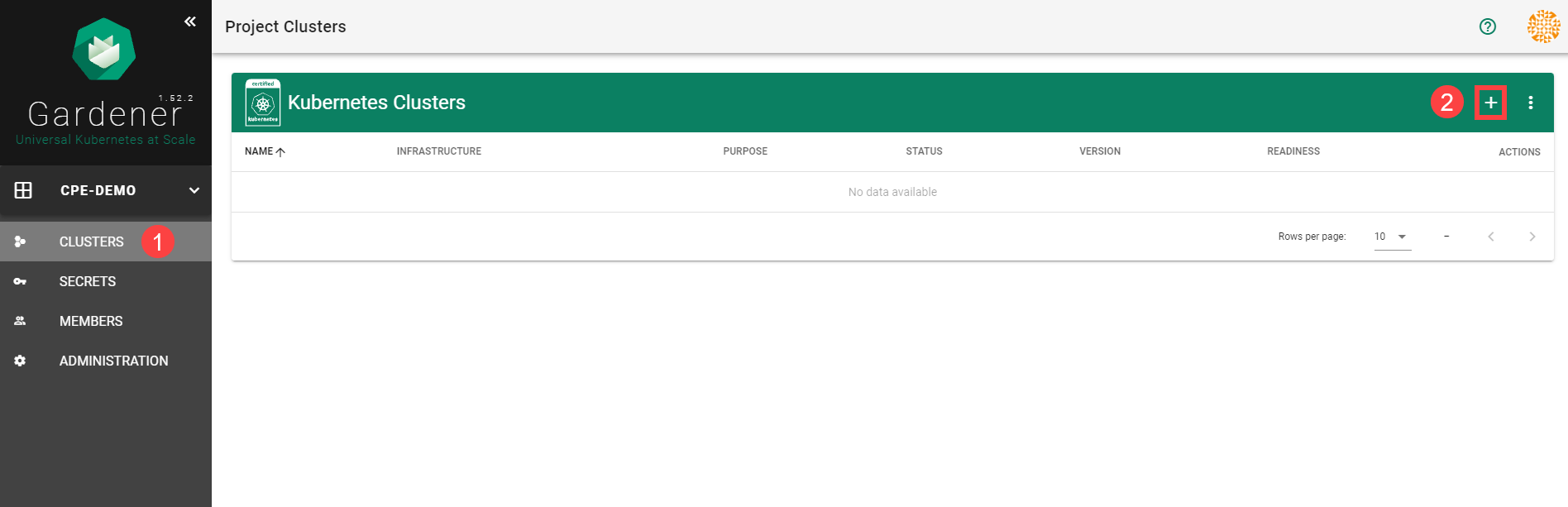

To create a new cluster, choose Clusters and then the plus sign in the upper right corner.

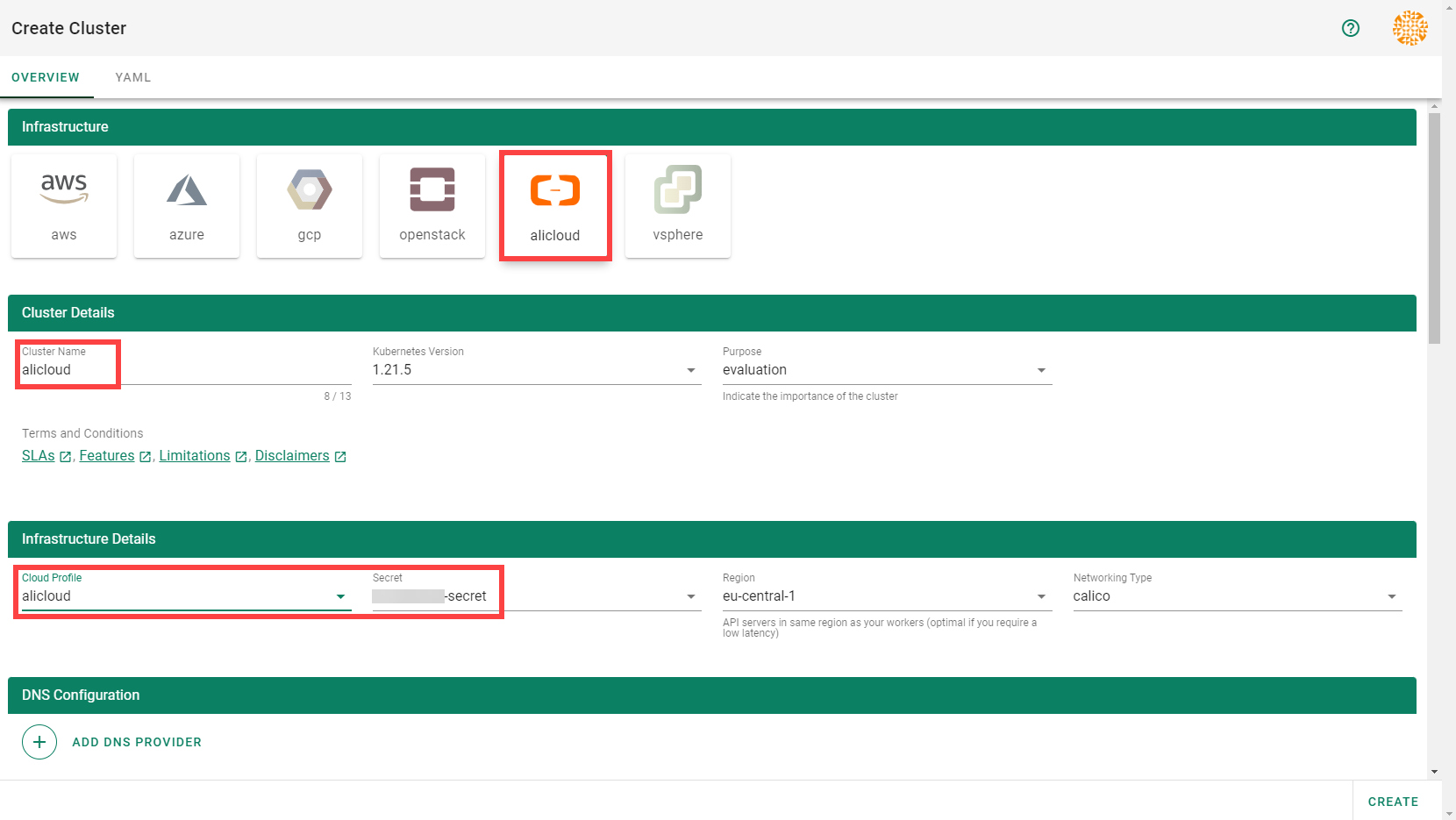

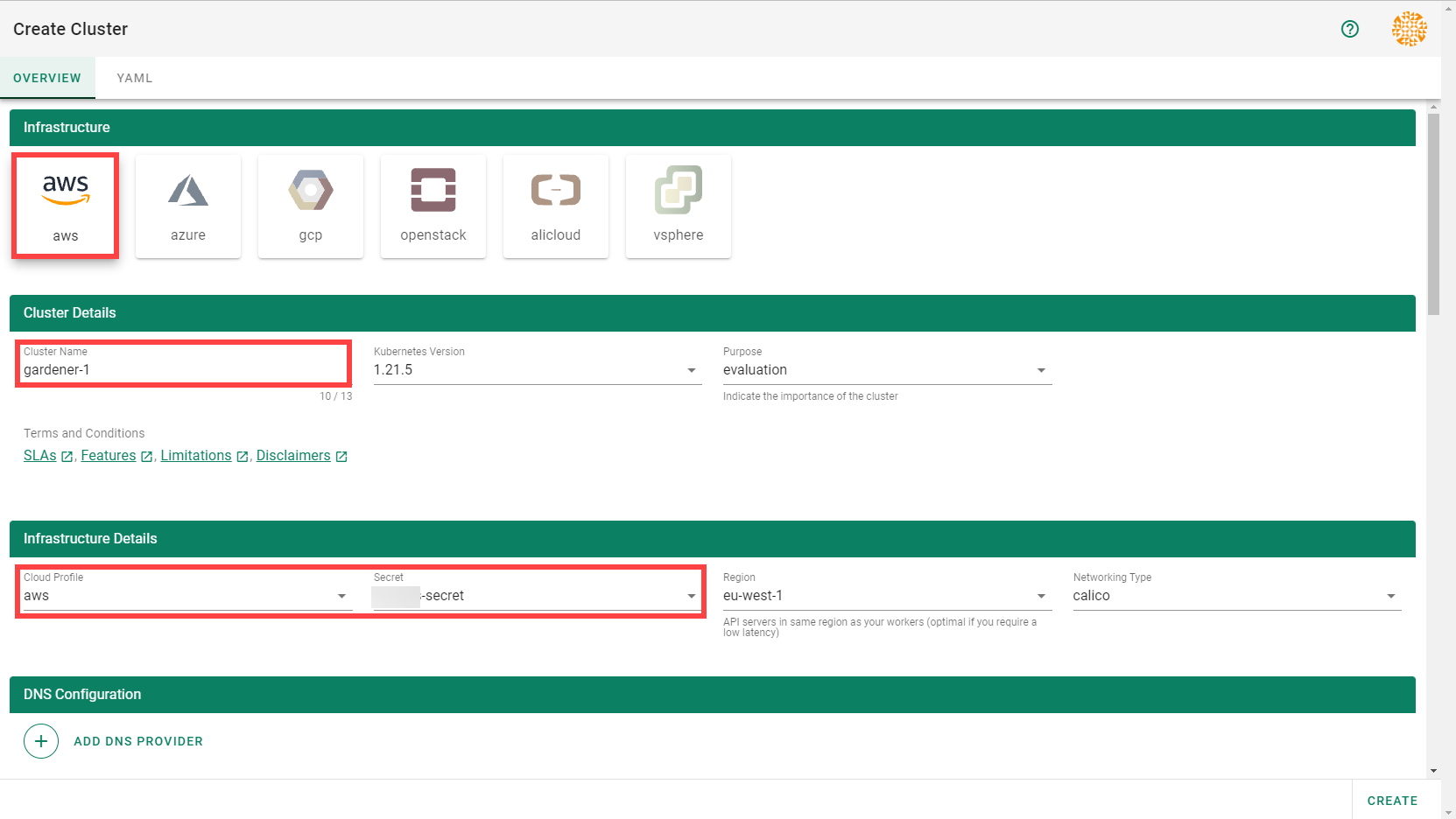

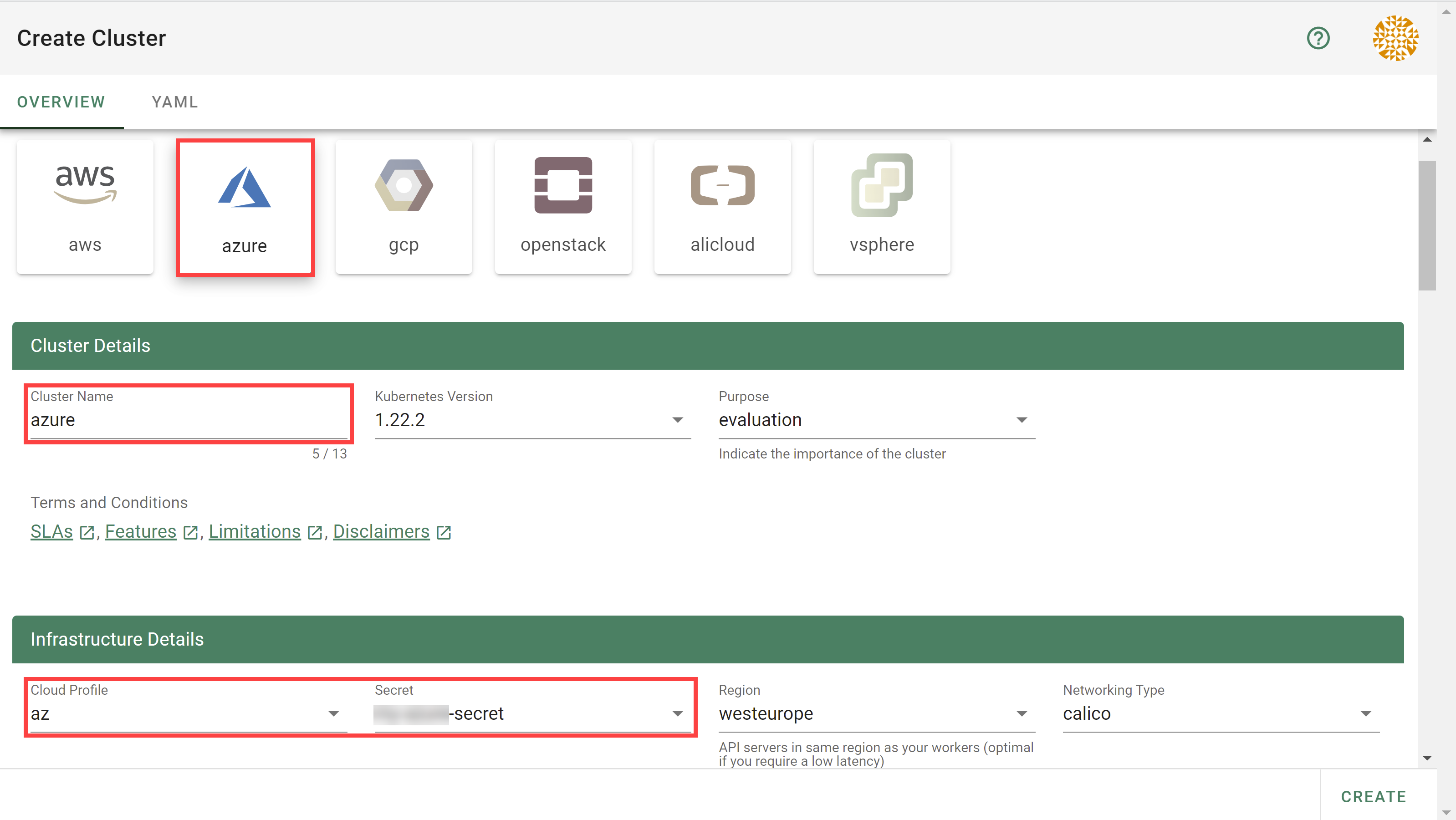

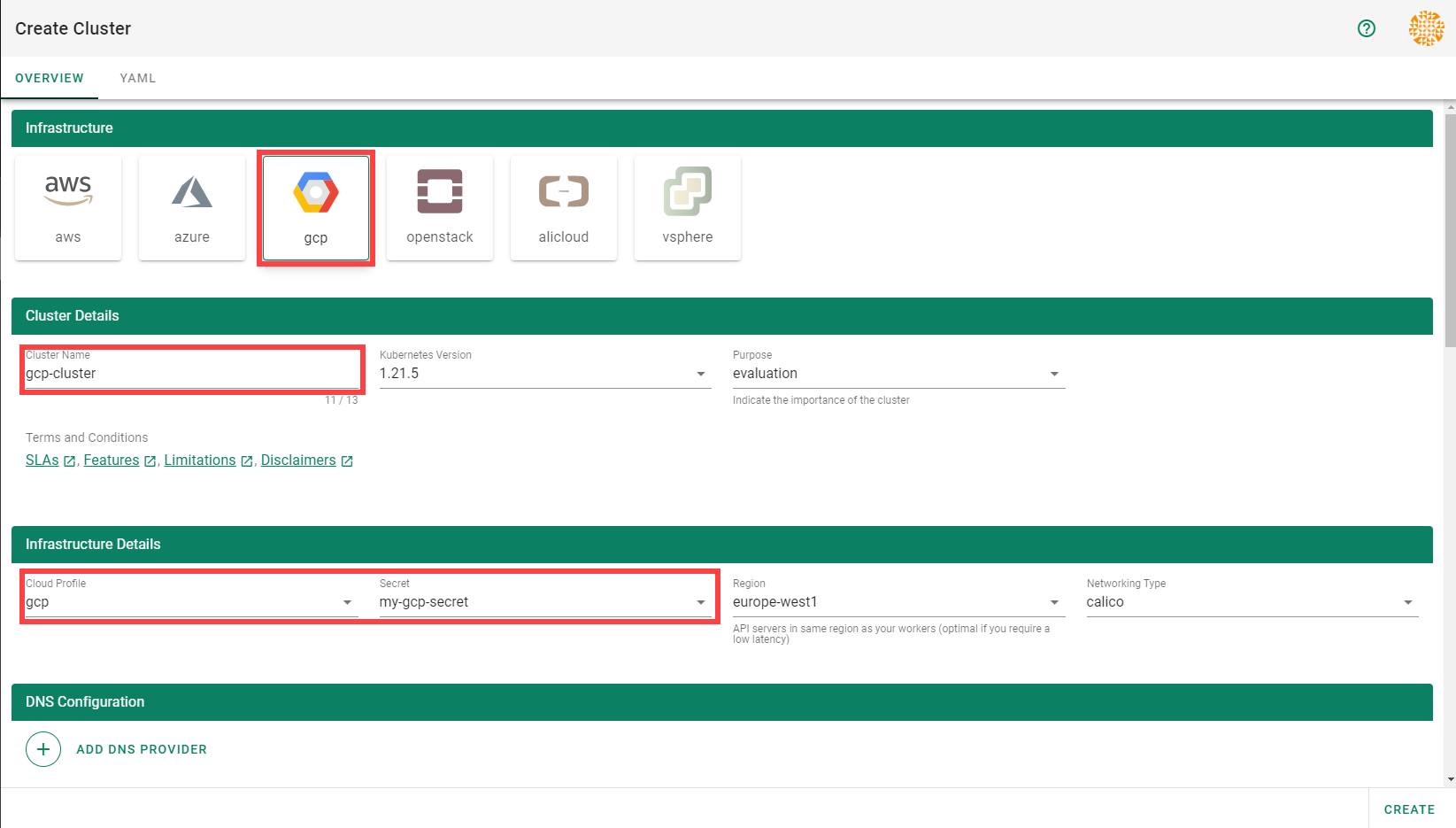

In the Create Cluster section:

Select AliCloud in the Infrastructure tab.

Type the name of your cluster in the Cluster Details tab.

Choose the secret you created before in the Infrastructure Details tab.

Choose Create.

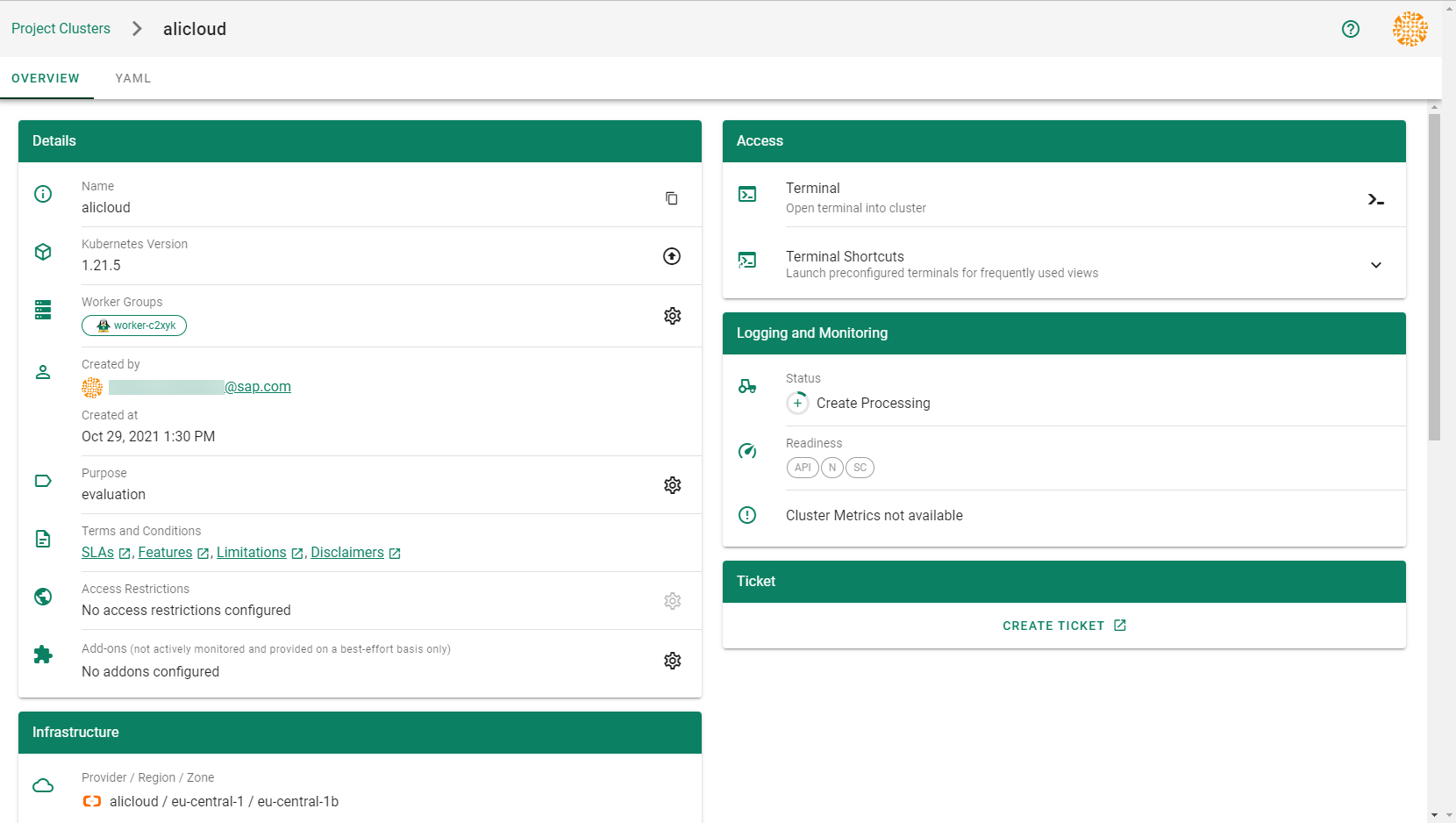

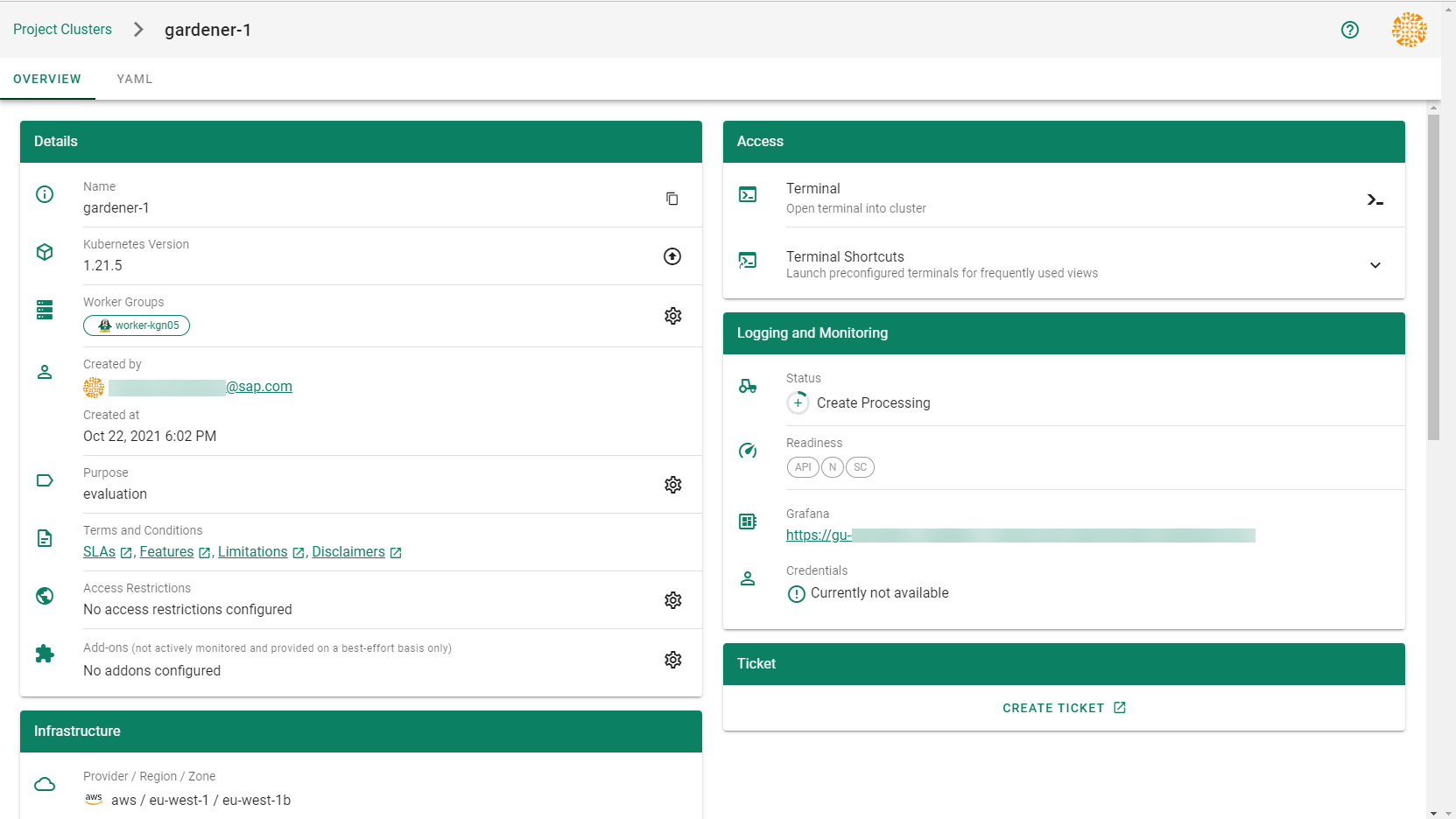

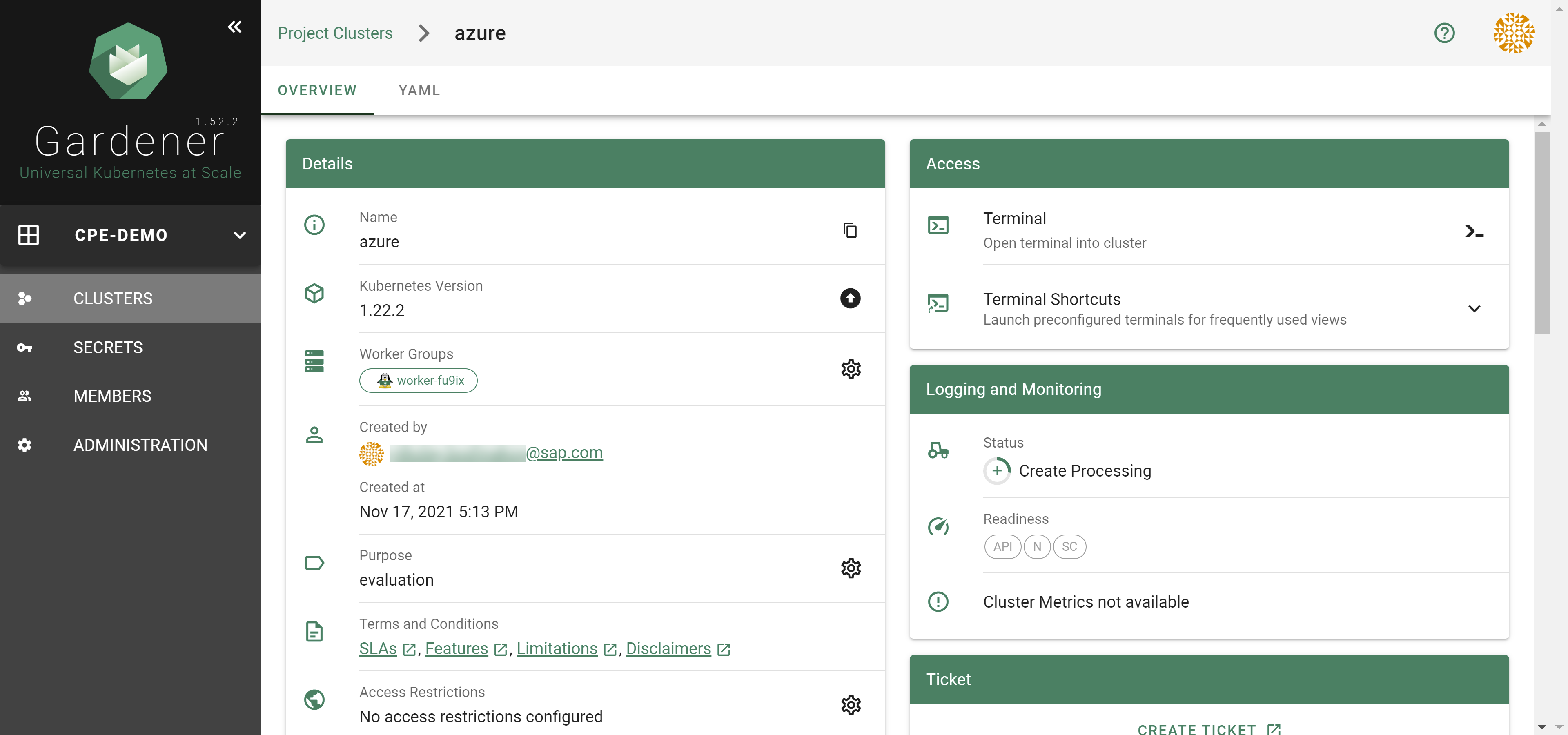

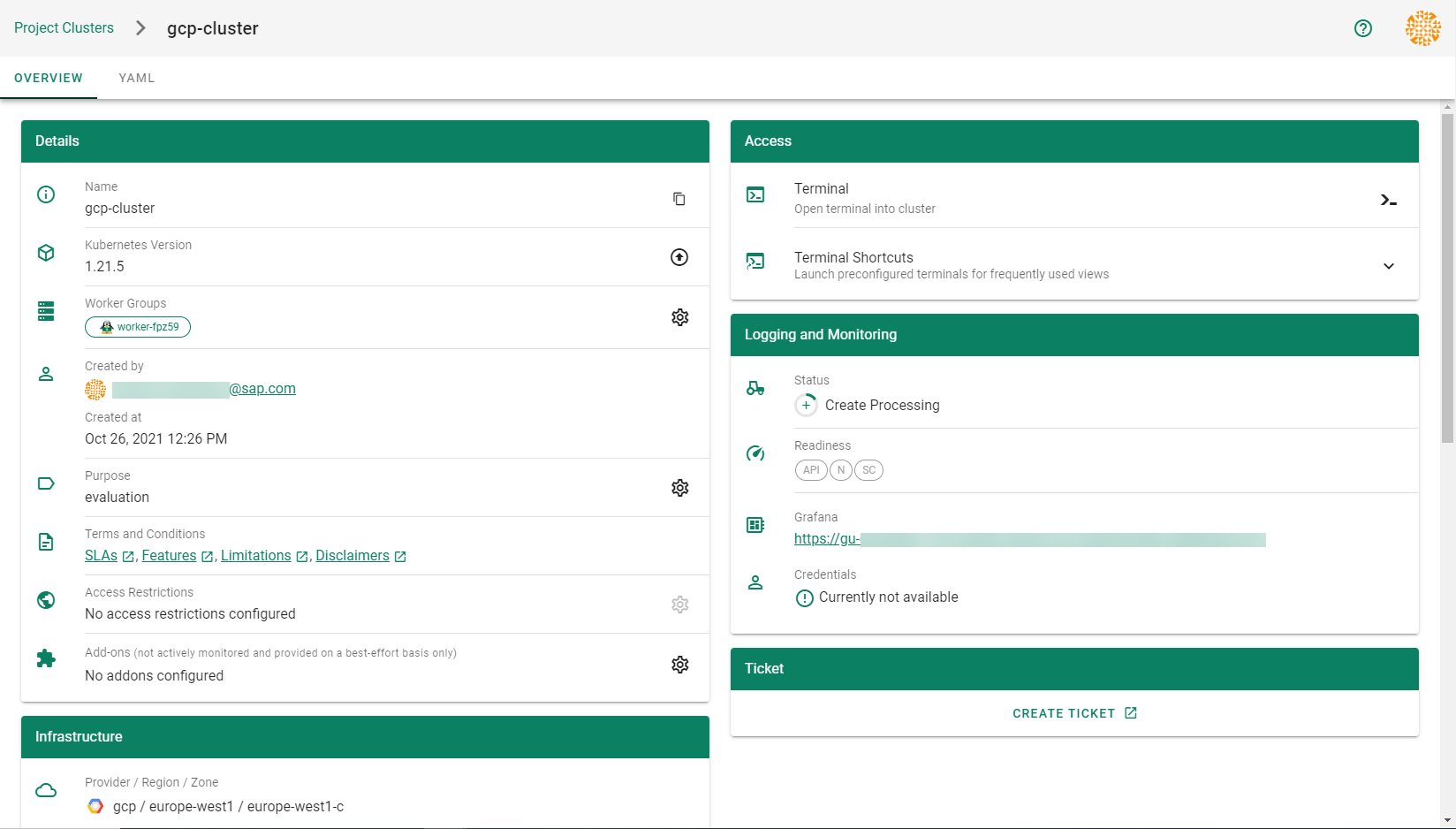

Wait for your cluster to get created.

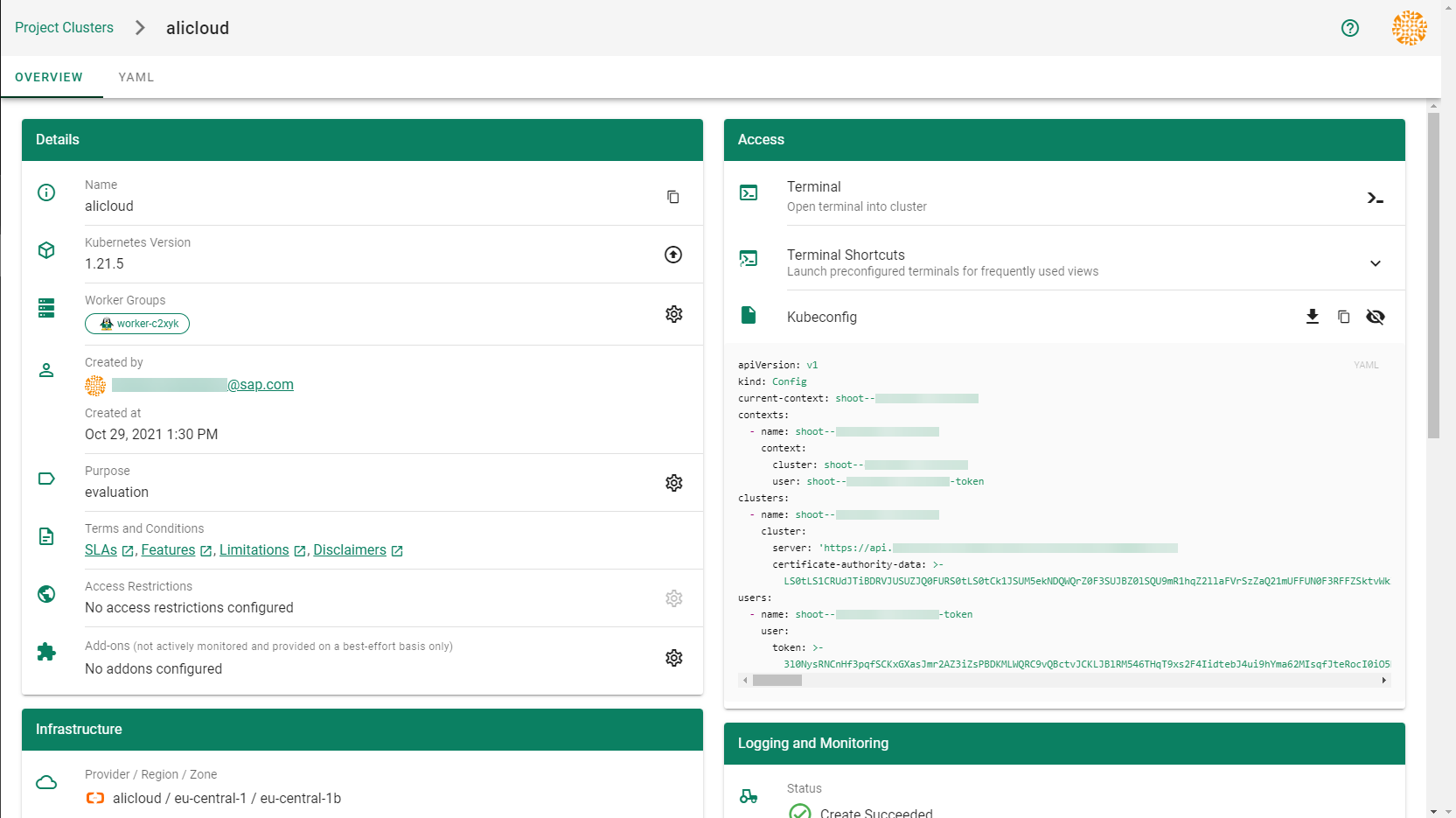

Result

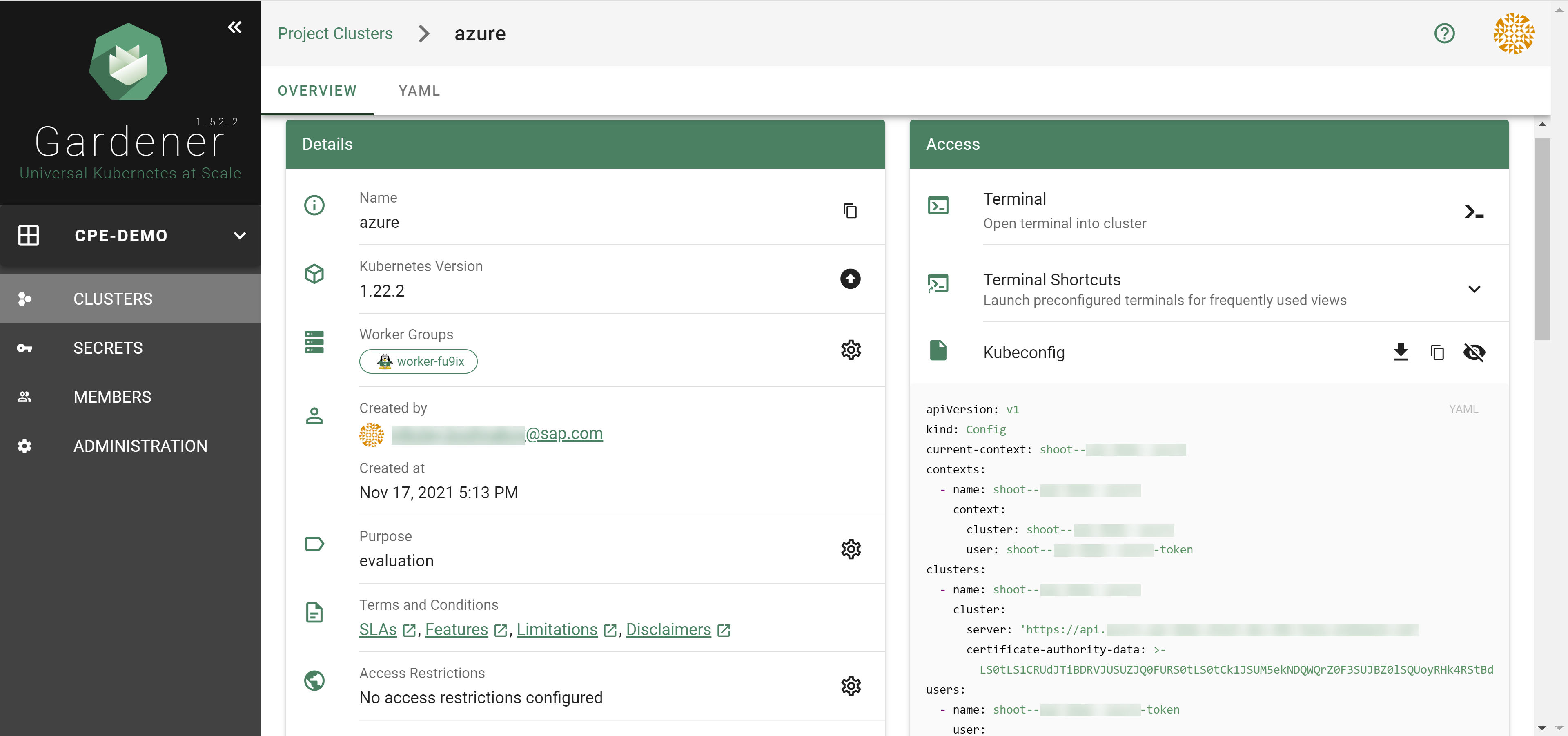

After completing the steps in this tutorial, you will be able to see and download the kubeconfig of your cluster. With it you can create shoot clusters on Alibaba Cloud.

The size of persistent volumes in your shoot cluster must at least be 20 GiB large. If you choose smaller sizes in your Kubernetes PV definition, the allocation of cloud disk space on Alibaba Cloud fails.

1.2 - Deployment

Deployment of the AliCloud provider extension

Disclaimer: This document is NOT a step by step installation guide for the AliCloud provider extension and only contains some configuration specifics regarding the installation of different components via the helm charts residing in the AliCloud provider extension repository.

gardener-extension-admission-alicloud

Authentication against the Garden cluster

There are several authentication possibilities depending on whether or not the concept of Virtual Garden is used.

Virtual Garden is not used, i.e., the runtime Garden cluster is also the target Garden cluster.

Automounted Service Account Token

The easiest way to deploy the gardener-extension-admission-alicloud component will be to not provide kubeconfig at all. This way in-cluster configuration and an automounted service account token will be used. The drawback of this approach is that the automounted token will not be automatically rotated.

This will allow for automatic rotation of the service account token by the kubelet. The configuration can be achieved by setting both .Values.global.serviceAccountTokenVolumeProjection.enabled: true and .Values.global.kubeconfig in the respective chart’s values.yaml file.

Virtual Garden is used, i.e., the runtime Garden cluster is different from the target Garden cluster.

Service Account

The easiest way to setup the authentication will be to create a service account and the respective roles will be bound to this service account in the target cluster. Then use the generated service account token and craft a kubeconfig which will be used by the workload in the runtime cluster. This approach does not provide a solution for the rotation of the service account token. However, this setup can be achieved by setting .Values.global.virtualGarden.enabled: true and following these steps:

- Deploy the

applicationpart of the charts in thetargetcluster. - Get the service account token and craft the

kubeconfig. - Set the crafted

kubeconfigand deploy theruntimepart of the charts in theruntimecluster.

Client Certificate

Another solution will be to bind the roles in the target cluster to a User subject instead of a service account and use a client certificate for authentication. This approach does not provide a solution for the client certificate rotation. However, this setup can be achieved by setting both .Values.global.virtualGarden.enabled: true and .Values.global.virtualGarden.user.name, then following these steps:

- Generate a client certificate for the

targetcluster for the respective user. - Deploy the

applicationpart of the charts in thetargetcluster. - Craft a

kubeconfigusing the already generated client certificate. - Set the crafted

kubeconfigand deploy theruntimepart of the charts in theruntimecluster.

1.3 - Local Setup

admission-alicloud

admission-alicloud is an admission webhook server which is responsible for the validation of the cloud provider (Alicloud in this case) specific fields and resources. The Gardener API server is cloud provider agnostic and it wouldn’t be able to perform similar validation.

Follow the steps below to run the admission webhook server locally.

Start the Gardener API server.

For details, check the Gardener local setup.

Start the webhook server

Make sure that the

KUBECONFIGenvironment variable is pointing to the local garden cluster.make start-admissionSetup the

ValidatingWebhookConfiguration.hack/dev-setup-admission-alicloud.shwill configure the webhook Service which will allow the kube-apiserver of your local cluster to reach the webhook server. It will also apply theValidatingWebhookConfigurationmanifest../hack/dev-setup-admission-alicloud.sh

You are now ready to experiment with the admission-alicloud webhook server locally.

1.4 - Operations

Using the Alicloud provider extension with Gardener as operator

The core.gardener.cloud/v1beta1.CloudProfile resource declares a providerConfig field that is meant to contain provider-specific configuration.

The core.gardener.cloud/v1beta1.Seed resource is structured similarly.

Additionally, it allows configuring settings for the backups of the main etcds’ data of shoot clusters control planes running in this seed cluster.

This document explains the necessary configuration for this provider extension. In addition, this document also describes how to enable the use of customized machine images for Alicloud.

CloudProfile resource

This section describes, how the configuration for CloudProfile looks like for Alicloud by providing an example CloudProfile manifest with minimal configuration that can be used to allow the creation of Alicloud shoot clusters.

CloudProfileConfig

The cloud profile configuration contains information about the real machine image IDs in the Alicloud environment (AMIs).

You have to map every version that you specify in .spec.machineImages[].versions here such that the Alicloud extension knows the AMI for every version you want to offer.

An example CloudProfileConfig for the Alicloud extension looks as follows:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: CloudProfileConfig

machineImages:

- name: coreos

versions:

- version: 2023.4.0

regions:

- name: eu-central-1

id: coreos_2023_4_0_64_30G_alibase_20190319.vhd

Example CloudProfile manifest

Please find below an example CloudProfile manifest:

apiVersion: core.gardener.cloud/v1beta1

kind: CloudProfile

metadata:

name: alicloud

spec:

type: alicloud

kubernetes:

versions:

- version: 1.32.0

- version: 1.31.1

expirationDate: "2026-03-31T23:59:59Z"

machineImages:

- name: coreos

versions:

- version: 2023.4.0

machineTypes:

- name: ecs.sn2ne.large

cpu: "2"

gpu: "0"

memory: 8Gi

volumeTypes:

- name: cloud_efficiency

class: standard

- name: cloud_essd

class: premium

regions:

- name: eu-central-1

zones:

- name: eu-central-1a

- name: eu-central-1b

providerConfig:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: CloudProfileConfig

machineImages:

- name: coreos

versions:

- version: 2023.4.0

regions:

- name: eu-central-1

id: coreos_2023_4_0_64_30G_alibase_20190319.vhd

Enable customized machine images for the Alicloud extension

Customized machine images can be created for an Alicloud account and shared with other Alicloud accounts.

The same customized machine image has different image ID in different regions on Alicloud.

If you need to enable encrypted system disk, you must provide customized machine images.

Administrators/Operators need to explicitly declare them per imageID per region as below:

machineImages:

- name: customized_coreos

regions:

- imageID: <image_id_in_eu_central_1>

region: eu-central-1

- imageID: <image_id_in_cn_shanghai>

region: cn-shanghai

...

version: 2191.4.1

...

End-users have to have the permission to use the customized image from its creator Alicloud account. To enable end-users to use customized images, the images are shared from Alicloud account of Seed operator with end-users’ Alicloud accounts. Administrators/Operators need to explicitly provide Seed operator’s Alicloud account access credentials (base64 encoded) as below:

machineImageOwnerSecret:

name: machine-image-owner

accessKeyID: <base64_encoded_access_key_id>

accessKeySecret: <base64_encoded_access_key_secret>

As a result, a Secret named machine-image-owner by default will be created in namespace of Alicloud provider extension.

Operators should also maintain custom image IDs which are to be shared with end-users as below:

toBeSharedImageIDs:

- <image_id_1>

- <image_id_2>

- <image_id_3>

Example ControllerDeployment manifest for enabling customized machine images

apiVersion: core.gardener.cloud/v1beta1

kind: ControllerDeployment

metadata:

name: extension-provider-alicloud

spec:

type: helm

providerConfig:

chart: |

H4sIFAAAAAAA/yk...

values:

config:

machineImageOwnerSecret:

accessKeyID: <base64_encoded_access_key_id>

accessKeySecret: <base64_encoded_access_key_secret>

toBeSharedImageIDs:

- <image_id_1>

- <image_id_2>

...

machineImages:

- name: customized_coreos

regions:

- imageID: <image_id_in_eu_central_1>

region: eu-central-1

- imageID: <image_id_in_cn_shanghai>

region: cn-shanghai

...

version: 2191.4.1

...

csi:

enableADController: true

resources:

limits:

cpu: 500m

memory: 1Gi

requests:

memory: 128Mi

Seed resource

This provider extension does not support any provider configuration for the Seed’s .spec.provider.providerConfig field.

However, it supports to managing of backup infrastructure, i.e., you can specify a configuration for the .spec.backup field.

Backup configuration

A Seed of type alicloud can be configured to perform backups for the main etcds’ of the shoot clusters control planes using Alicloud Object Storage Service.

The location/region where the backups will be stored defaults to the region of the Seed (spec.provider.region).

Please find below an example Seed manifest (partly) that configures backups using Alicloud Object Storage Service.

---

apiVersion: core.gardener.cloud/v1beta1

kind: Seed

metadata:

name: my-seed

spec:

provider:

type: alicloud

region: cn-shanghai

backup:

provider: alicloud

credentialsRef:

apiVersion: v1

kind: Secret

name: backup-credentials

namespace: garden

...

An example of the referenced secret containing the credentials for the Alicloud Object Storage Service can be found in the example folder.

Permissions for Alicloud Object Storage Service

Please make sure the RAM user associated with the provided AccessKey pair has the following permission.

- AliyunOSSFullAccess

1.5 - Usage

Using the Alicloud provider extension with Gardener as end-user

The core.gardener.cloud/v1beta1.Shoot resource declares a few fields that are meant to contain provider-specific configuration.

This document describes the configurable options for Alicloud and provides an example Shoot manifest with minimal configuration that can be used to create an Alicloud cluster (modulo the landscape-specific information like cloud profile names, secret binding names, etc.).

Alicloud Provider Credentials

In order for Gardener to create a Kubernetes cluster using Alicloud infrastructure components, a Shoot has to provide credentials with sufficient permissions to the desired Alicloud project.

Every shoot cluster references a SecretBinding or a CredentialsBinding which itself references a Secret, and this Secret contains the provider credentials of the Alicloud project.

This Secret must look as follows:

apiVersion: v1

kind: Secret

metadata:

name: core-alicloud

namespace: garden-dev

type: Opaque

data:

accessKeyID: base64(access-key-id)

accessKeySecret: base64(access-key-secret)

The SecretBinding/CredentialsBinding is configurable in the Shoot cluster with the field secretBindingName/credentialsBindingName.

The required credentials for the Alicloud project are an AccessKey Pair associated with a Resource Access Management (RAM) User. A RAM user is a special account that can be used by services and applications to interact with Alicloud Cloud Platform APIs. Applications can use AccessKey pair to authorize themselves to a set of APIs and perform actions within the permissions granted to the RAM user.

Make sure to create a Resource Access Management User, and create an AccessKey Pair that shall be used for the Shoot cluster.

Permissions

Please make sure the provided credentials have the correct privileges. You can use the following Alicloud RAM policy document and attach it to the RAM user backed by the credentials you provided.

Click to expand the Alicloud RAM policy document!

{

"Statement": [

{

"Action": [

"vpc:*"

],

"Effect": "Allow",

"Resource": [

"*"

]

},

{

"Action": [

"ecs:*"

],

"Effect": "Allow",

"Resource": [

"*"

]

},

{

"Action": [

"slb:*"

],

"Effect": "Allow",

"Resource": [

"*"

]

},

{

"Action": [

"ram:GetRole",

"ram:CreateRole",

"ram:CreateServiceLinkedRole"

],

"Effect": "Allow",

"Resource": [

"*"

]

},

{

"Action": [

"ros:*"

],

"Effect": "Allow",

"Resource": [

"*"

]

}

],

"Version": "1"

}

InfrastructureConfig

The infrastructure configuration mainly describes how the network layout looks like in order to create the shoot worker nodes in a later step, thus, prepares everything relevant to create VMs, load balancers, volumes, etc.

An example InfrastructureConfig for the Alicloud extension looks as follows:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

vpc: # specify either 'id' or 'cidr'

# id: my-vpc

cidr: 10.250.0.0/16

# gardenerManagedNATGateway: true

zones:

- name: eu-central-1a

workers: 10.250.1.0/24

# natGateway:

# eipAllocationID: eip-ufxsdg122elmszcg

The networks.vpc section describes whether you want to create the shoot cluster in an already existing VPC or whether to create a new one:

- If

networks.vpc.idis given then you have to specify the VPC ID of the existing VPC that was created by other means (manually, other tooling, …). - If

networks.vpc.cidris given then you have to specify the VPC CIDR of a new VPC that will be created during shoot creation. You can freely choose a private CIDR range. - Either

networks.vpc.idornetworks.vpc.cidrmust be present, but not both at the same time. - When

networks.vpc.idis present, in addition, you can also choose to setnetworks.vpc.gardenerManagedNATGateway. It is by defaultfalse. When it is set totrue, Gardener will create an Enhanced NATGateway in the VPC and associate it with a VSwitch created in the first zone in thenetworks.zones. - Please note that when

networks.vpc.idis present, andnetworks.vpc.gardenerManagedNATGatewayisfalseor not set, you have to manually create an Enhance NATGateway and associate it with a VSwitch that you manually created. In this case, make sure the worker CIDRs innetworks.zonesdo not overlap with the one you created. If a NATGateway is created manually and a shoot is created in the same VPC withnetworks.vpc.gardenerManagedNATGatewaysettrue, you need to manually adjust the route rule accordingly. You may refer to here.

The networks.zones section describes which subnets you want to create in availability zones.

For every zone, the Alicloud extension creates one subnet:

- The

workerssubnet is used for all shoot worker nodes, i.e., VMs which later run your applications.

For every subnet, you have to specify a CIDR range contained in the VPC CIDR specified above, or the VPC CIDR of your already existing VPC. You can freely choose these CIDR and it is your responsibility to properly design the network layout to suit your needs.

If you want to use multiple availability zones then add a second, third, … entry to the networks.zones[] list and properly specify the AZ name in networks.zones[].name.

Apart from the VPC and the subnets the Alicloud extension will also create a NAT gateway (only if a new VPC is created), a key pair, elastic IPs, VSwitches, a SNAT table entry, and security groups.

By default, the Alicloud extension will create a corresponding Elastic IP that it attaches to this NAT gateway and which is used for egress traffic.

The networks.zones[].natGateway.eipAllocationID field allows you to specify the Elastic IP Allocation ID of an existing Elastic IP allocation in case you want to bring your own.

If provided, no new Elastic IP will be created and, instead, the Elastic IP specified by you will be used.

⚠️ If you change this field for an already existing infrastructure then it will disrupt egress traffic while Alicloud applies this change, because the NAT gateway must be recreated with the new Elastic IP association. Also, please note that the existing Elastic IP will be permanently deleted if it was earlier created by the Alicloud extension.

ControlPlaneConfig

The control plane configuration mainly contains values for the Alicloud-specific control plane components.

Today, the Alicloud extension deploys the cloud-controller-manager and the CSI controllers.

An example ControlPlaneConfig for the Alicloud extension looks as follows:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: ControlPlaneConfig

csi:

enableADController: true

# cloudControllerManager:

# featureGates:

# SomeKubernetesFeature: true

The csi.enableADController is used as the value of environment DISK_AD_CONTROLLER, which is used for AliCloud csi-disk-plugin. This field is optional. When a new shoot is creatd, this field is automatically set true. For an existing shoot created in previous versions, it remains unchanged. If there are persistent volumes created before year 2021, please be cautious to set this field true because they may fail to mount to nodes.

The cloudControllerManager.featureGates contains a map of explicitly enabled or disabled feature gates.

For production usage it’s not recommend to use this field at all as you can enable alpha features or disable beta/stable features, potentially impacting the cluster stability.

If you don’t want to configure anything for the cloudControllerManager simply omit the key in the YAML specification.

WorkerConfig

The Alicloud extension does not support a specific WorkerConfig. However, it supports additional data volumes (plus encryption) per machine.

By default (if not stated otherwise), all the disks are unencrypted.

For each data volume, you have to specify a name.

It also supports encrypted system disk.

However, only Customized image is currently supported to be used as a basic image for encrypted system disk.

Please be noted that the change of system disk encryption flag will cause reconciliation of a shoot, and it will result in nodes rolling update within the worker group.

The following YAML is a snippet of a Shoot resource:

spec:

provider:

workers:

- name: cpu-worker

...

volume:

type: cloud_efficiency

size: 20Gi

encrypted: true

dataVolumes:

- name: kubelet-dir

type: cloud_efficiency

size: 25Gi

encrypted: true

Example Shoot manifest (one availability zone)

Please find below an example Shoot manifest for one availability zone:

apiVersion: core.gardener.cloud/v1beta1

kind: Shoot

metadata:

name: johndoe-alicloud

namespace: garden-dev

spec:

cloudProfile:

name: alicloud

region: eu-central-1

secretBindingName: core-alicloud

provider:

type: alicloud

infrastructureConfig:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

vpc:

cidr: 10.250.0.0/16

zones:

- name: eu-central-1a

workers: 10.250.0.0/19

controlPlaneConfig:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: ControlPlaneConfig

workers:

- name: worker-xoluy

machine:

type: ecs.sn2ne.large

minimum: 2

maximum: 2

volume:

size: 50Gi

type: cloud_efficiency

zones:

- eu-central-1a

networking:

nodes: 10.250.0.0/16

type: calico

kubernetes:

version: 1.28.2

maintenance:

autoUpdate:

kubernetesVersion: true

machineImageVersion: true

addons:

kubernetesDashboard:

enabled: true

nginxIngress:

enabled: true

Example Shoot manifest (two availability zones)

Please find below an example Shoot manifest for two availability zones:

apiVersion: core.gardener.cloud/v1beta1

kind: Shoot

metadata:

name: johndoe-alicloud

namespace: garden-dev

spec:

cloudProfile:

name: alicloud

region: eu-central-1

secretBindingName: core-alicloud

provider:

type: alicloud

infrastructureConfig:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

vpc:

cidr: 10.250.0.0/16

zones:

- name: eu-central-1a

workers: 10.250.0.0/26

- name: eu-central-1b

workers: 10.250.0.64/26

controlPlaneConfig:

apiVersion: alicloud.provider.extensions.gardener.cloud/v1alpha1

kind: ControlPlaneConfig

workers:

- name: worker-xoluy

machine:

type: ecs.sn2ne.large

minimum: 2

maximum: 4

volume:

size: 50Gi

type: cloud_efficiency

# NOTE: Below comment is for the case when encrypted field of an existing shoot is updated from false to true.

# It will cause affected nodes to be rolling updated. Users must trigger a MAINTAIN operation of the shoot.

# Otherwise, the shoot will fail to reconcile.

# You could do it either via Dashboard or annotating the shoot with gardener.cloud/operation=maintain

encrypted: true

zones:

- eu-central-1a

- eu-central-1b

networking:

nodes: 10.250.0.0/16

type: calico

kubernetes:

version: 1.28.2

maintenance:

autoUpdate:

kubernetesVersion: true

machineImageVersion: true

addons:

kubernetesDashboard:

enabled: true

nginxIngress:

enabled: true

Kubernetes Versions per Worker Pool

This extension supports gardener/gardener’s WorkerPoolKubernetesVersion feature gate, i.e., having worker pools with overridden Kubernetes versions since gardener-extension-provider-alicloud@v1.33.

Shoot CA Certificate and ServiceAccount Signing Key Rotation

This extension supports gardener/gardener’s ShootCARotation feature gate since gardener-extension-provider-alicloud@v1.36 and ShootSARotation feature gate since gardener-extension-provider-alicloud@v1.37.

2 - Provider AWS

Gardener Extension for AWS provider

Project Gardener implements the automated management and operation of Kubernetes clusters as a service. Its main principle is to leverage Kubernetes concepts for all of its tasks.

Recently, most of the vendor specific logic has been developed in-tree. However, the project has grown to a size where it is very hard to extend, maintain, and test. With GEP-1 we have proposed how the architecture can be changed in a way to support external controllers that contain their very own vendor specifics. This way, we can keep Gardener core clean and independent.

This controller implements Gardener’s extension contract for the AWS provider.

An example for a ControllerRegistration resource that can be used to register this controller to Gardener can be found here.

Please find more information regarding the extensibility concepts and a detailed proposal here.

Supported Kubernetes versions

This extension controller supports the following Kubernetes versions:

| Version | Support | Conformance test results |

|---|---|---|

| Kubernetes 1.33 | 1.33.0+ | N/A |

| Kubernetes 1.32 | 1.32.0+ | |

| Kubernetes 1.31 | 1.31.0+ | |

| Kubernetes 1.30 | 1.30.0+ | |

| Kubernetes 1.29 | 1.29.0+ | |

| Kubernetes 1.28 | 1.28.0+ | |

| Kubernetes 1.27 | 1.27.0+ |

Please take a look here to see which versions are supported by Gardener in general.

Compatibility

The following lists known compatibility issues of this extension controller with other Gardener components.

| AWS Extension | Gardener | Action | Notes |

|---|---|---|---|

<= v1.15.0 | >v1.10.0 | Please update the provider version to > v1.15.0 or disable the feature gate MountHostCADirectories in the Gardenlet. | Applies if feature flag MountHostCADirectories in the Gardenlet is enabled. Shoots with CSI enabled (Kubernetes version >= 1.18) miss a mount to the directory /etc/ssl in the Shoot API Server. This can lead to not trusting external Root CAs when the API Server makes requests via webhooks or OIDC. |

How to start using or developing this extension controller locally

You can run the controller locally on your machine by executing make start.

Static code checks and tests can be executed by running make verify. We are using Go modules for Golang package dependency management and Ginkgo/Gomega for testing.

Feedback and Support

Feedback and contributions are always welcome!

Please report bugs or suggestions as GitHub issues or reach out on Slack (join the workspace here).

Learn more!

Please find further resources about out project here:

- Our landing page gardener.cloud

- “Gardener, the Kubernetes Botanist” blog on kubernetes.io

- “Gardener Project Update” blog on kubernetes.io

- GEP-1 (Gardener Enhancement Proposal) on extensibility

- GEP-4 (New

core.gardener.cloud/v1beta1API) - Extensibility API documentation

- Gardener Extensions Golang library

- Gardener API Reference

2.1 - Tutorials

Overview

Gardener allows you to create a Kubernetes cluster on different infrastructure providers. This tutorial will guide you through the process of creating a cluster on AWS.

Prerequisites

- You have created an AWS account.

- You have access to the Gardener dashboard and have permissions to create projects.

Steps

Go to the Gardener dashboard and create a Project.

Choose Secrets, then the plus icon

and select AWS.

and select AWS.

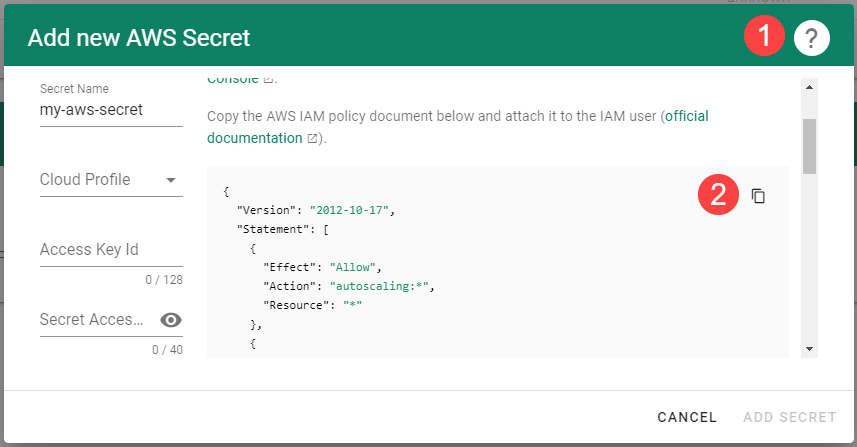

To copy the policy for AWS from the Gardener dashboard, click on the help icon

for AWS secrets, and choose copy

for AWS secrets, and choose copy  .

.

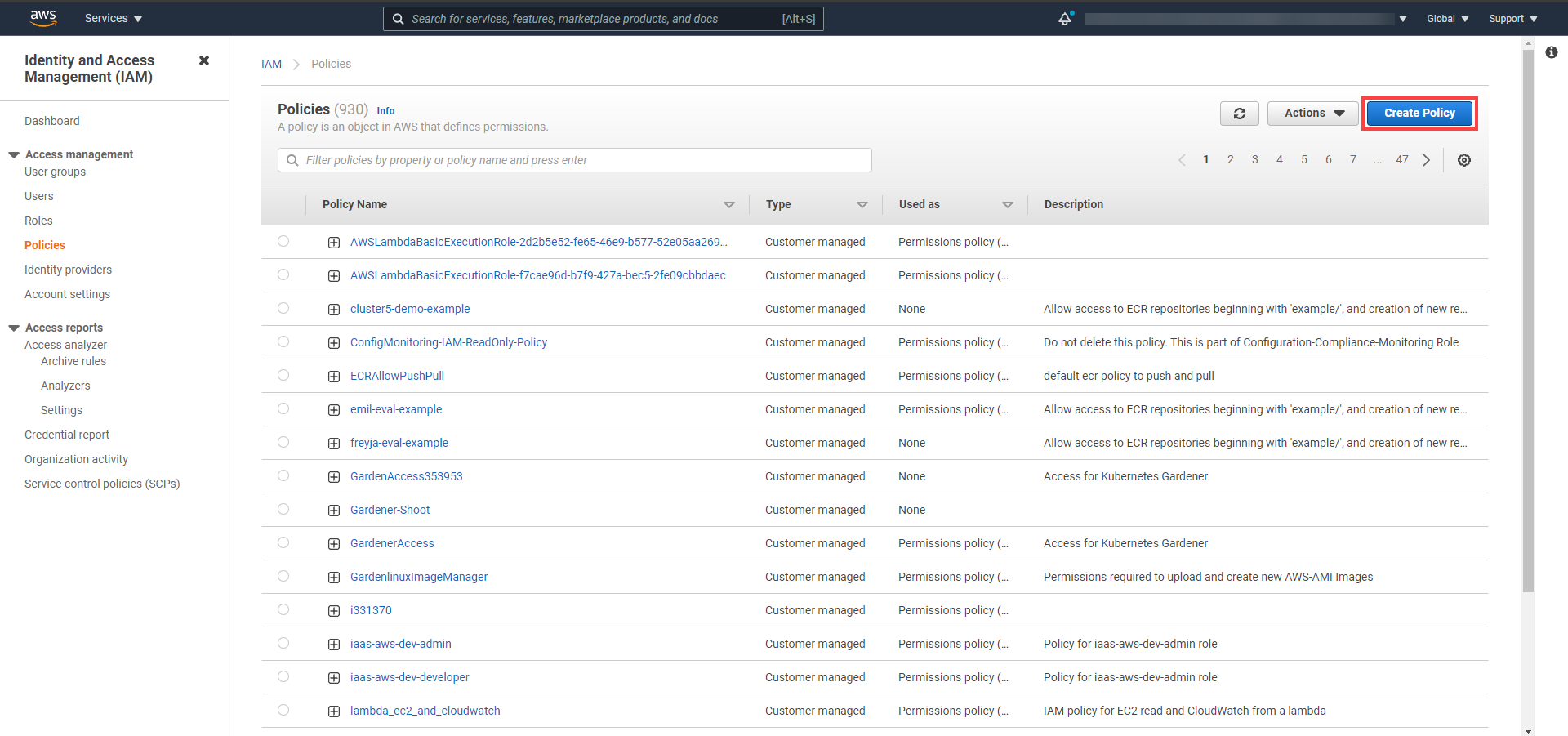

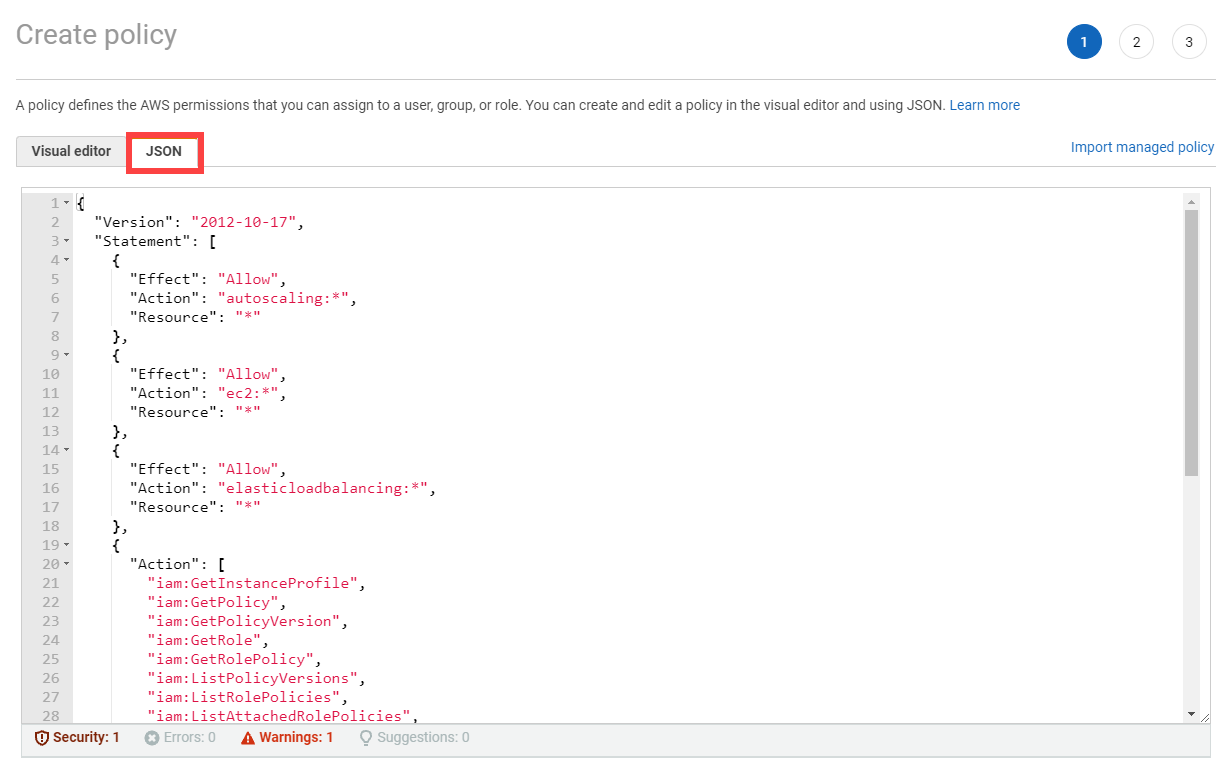

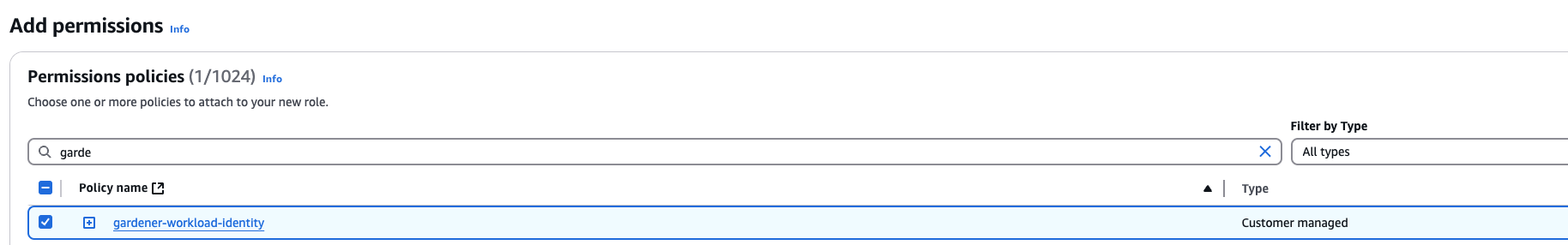

Create a new policy in AWS:

Choose Create policy.

Paste the policy that you copied from the Gardener dashboard to this custom policy.

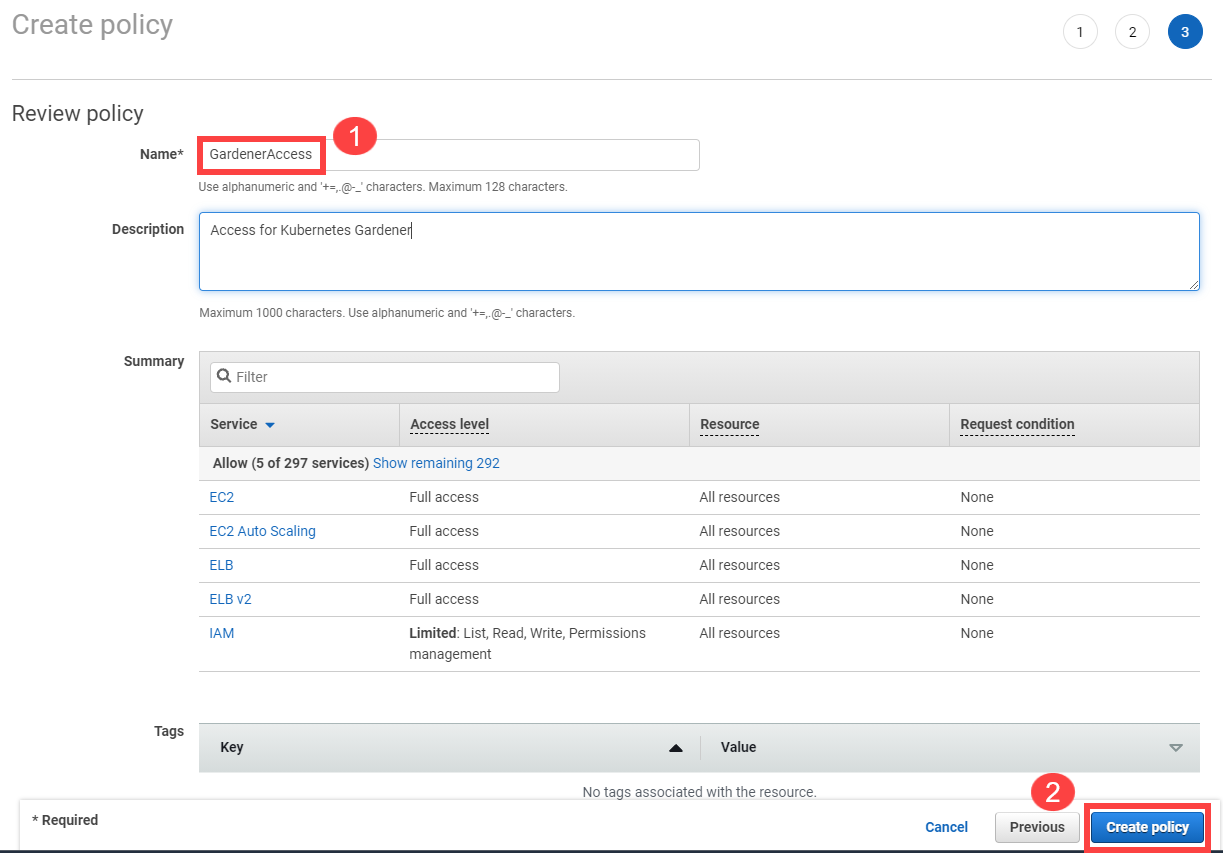

Choose Next until you reach the Review section.

Fill in the name and description, then choose Create policy.

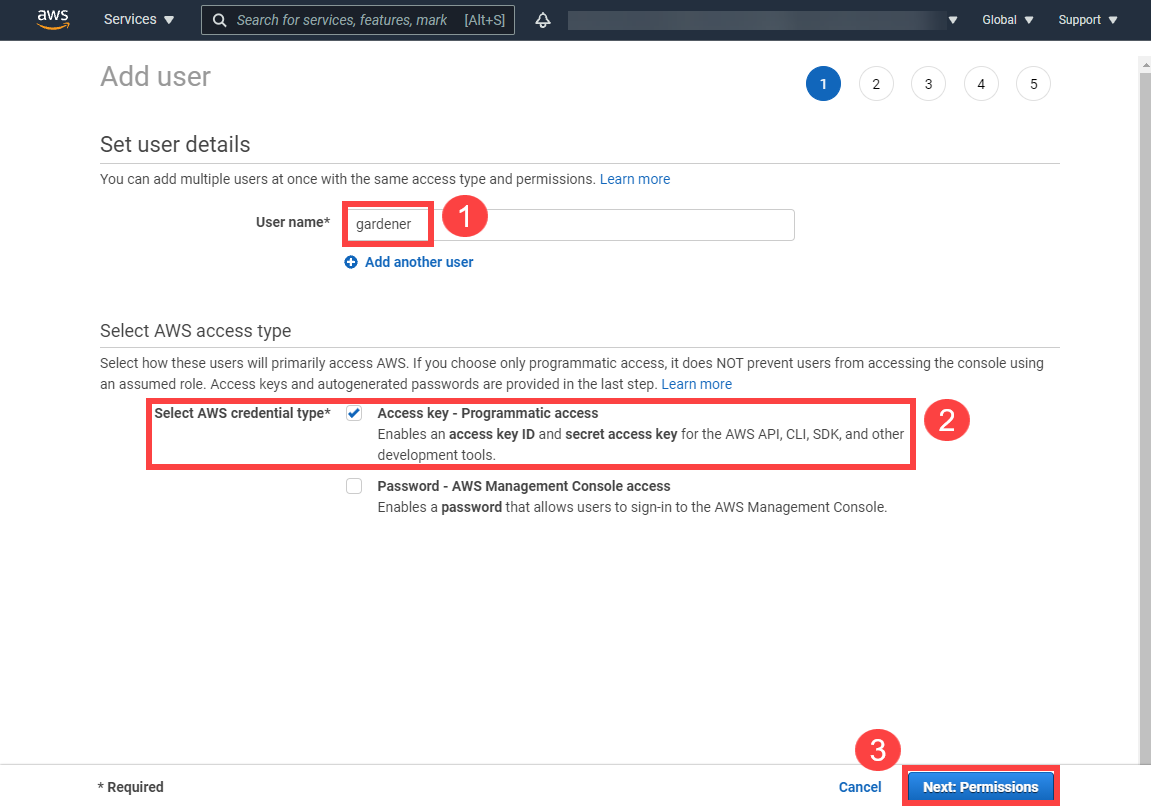

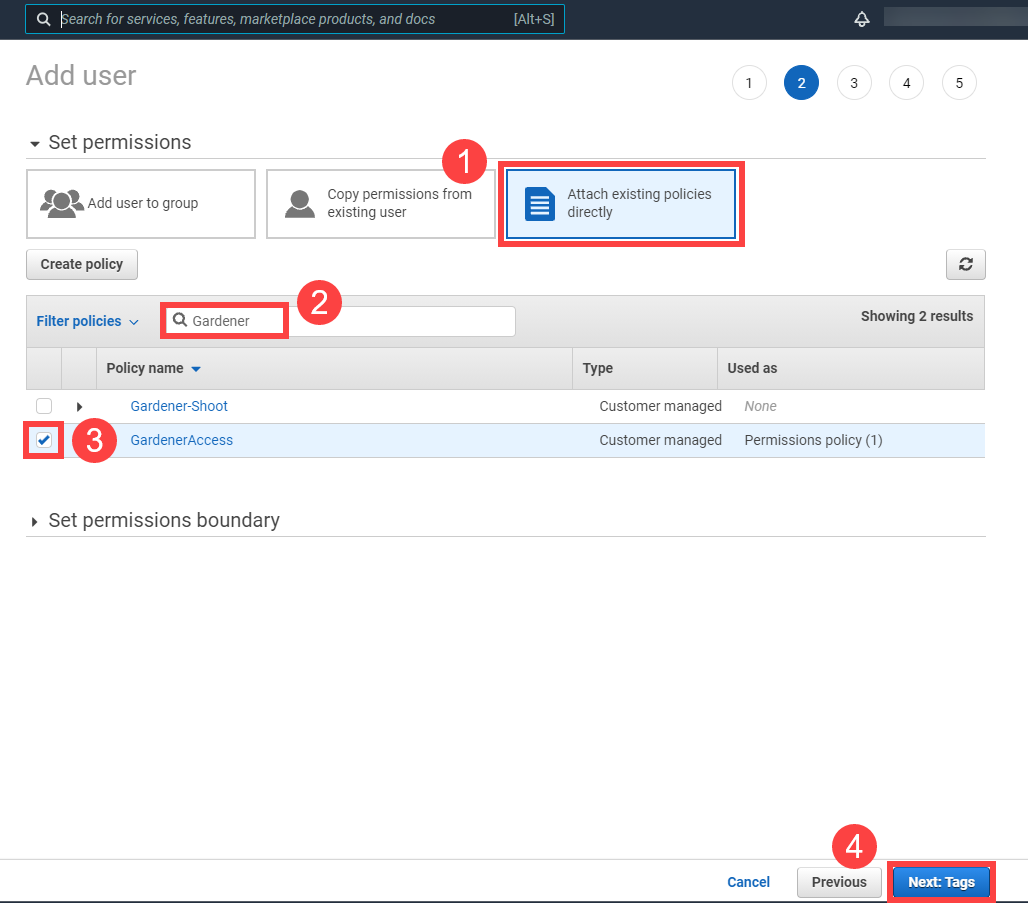

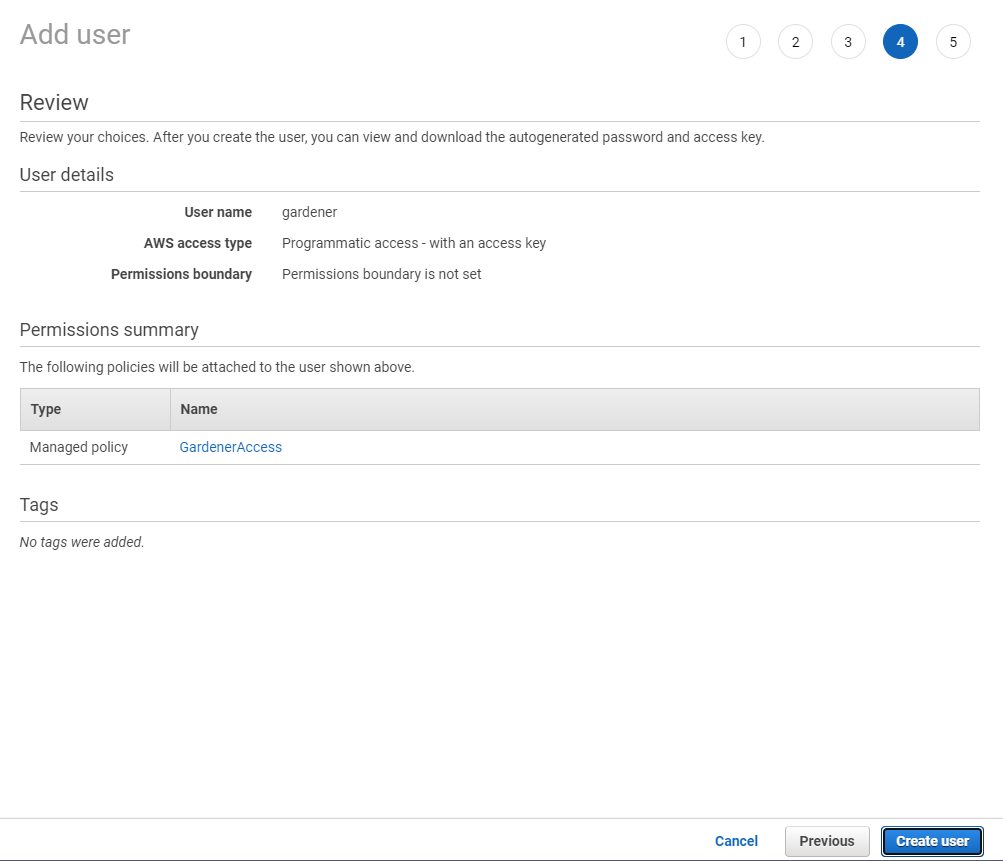

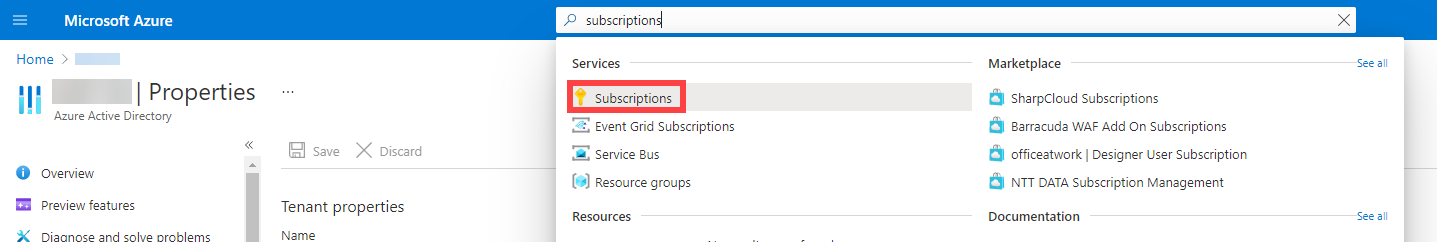

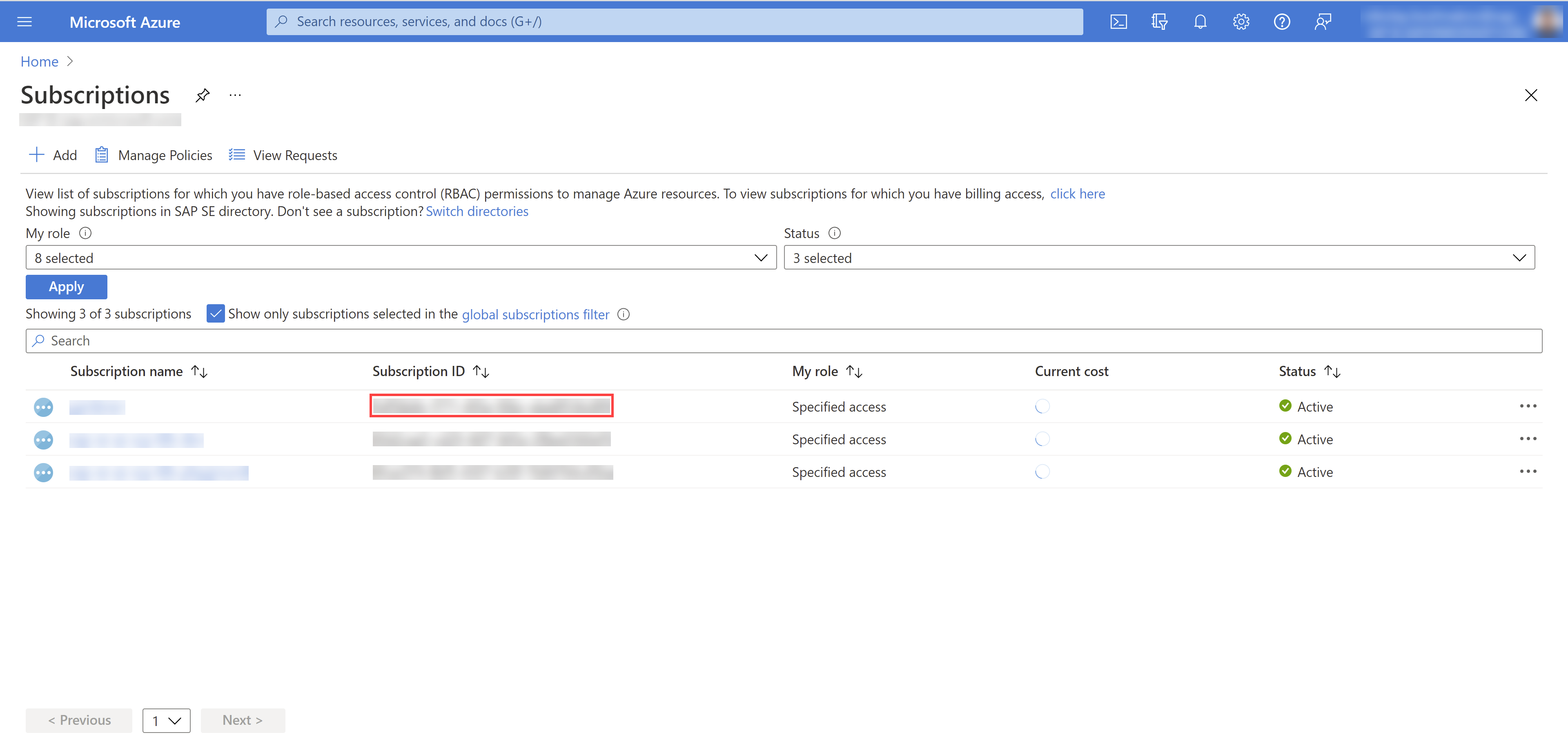

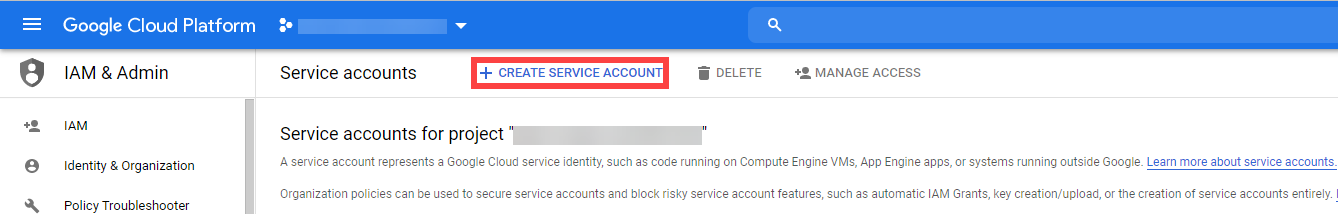

Create a new technical user in AWS:

Type in a username and select the access key credential type.

Choose Attach an existing policy.

Select GardenerAccess from the policy list.

Choose Next until you reach the Review section.

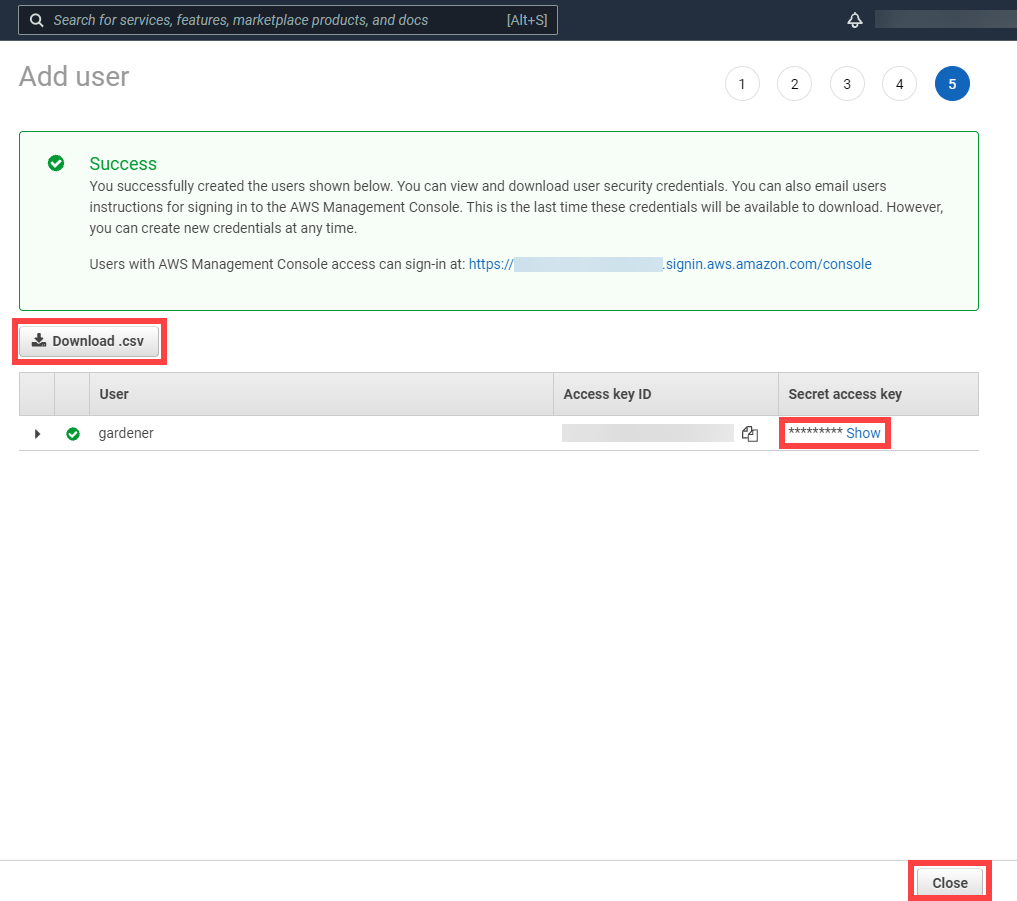

Note

After the user is created,

Access key IDandSecret access keyare generated and displayed. Remember to save them. TheAccess key IDis used later to create secrets for Gardener.

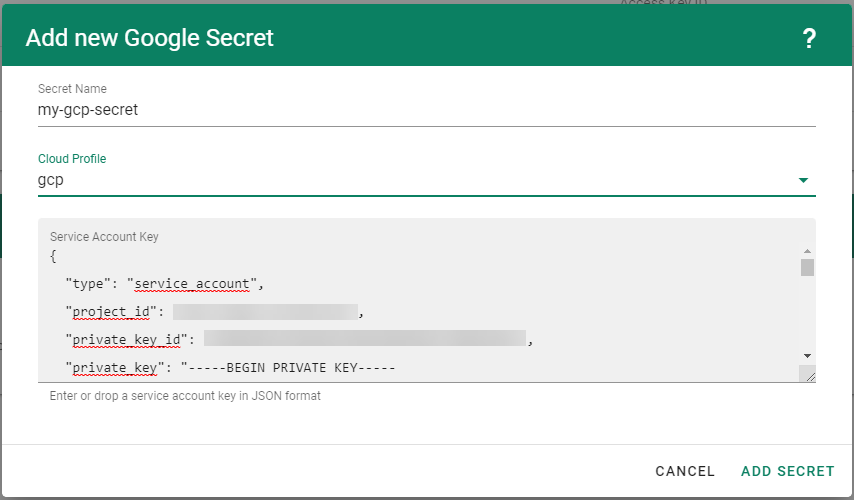

On the Gardener dashboard, choose Secrets and then the plus sign

. Select AWS from the drop down menu to add a new AWS secret.

. Select AWS from the drop down menu to add a new AWS secret.Create your secret.

- Type the name of your secret.

- Copy and paste the

Access Key IDandSecret Access Keyyou saved when you created the technical user on AWS. - Choose Add secret.

After completing these steps, you should see your newly created secret in the Infrastructure Secrets section.

To create a new cluster, choose Clusters and then the plus sign in the upper right corner.

In the Create Cluster section:

- Select AWS in the Infrastructure tab.

- Type the name of your cluster in the Cluster Details tab.

- Choose the secret you created before in the Infrastructure Details tab.

- Choose Create.

Wait for your cluster to get created.

Result

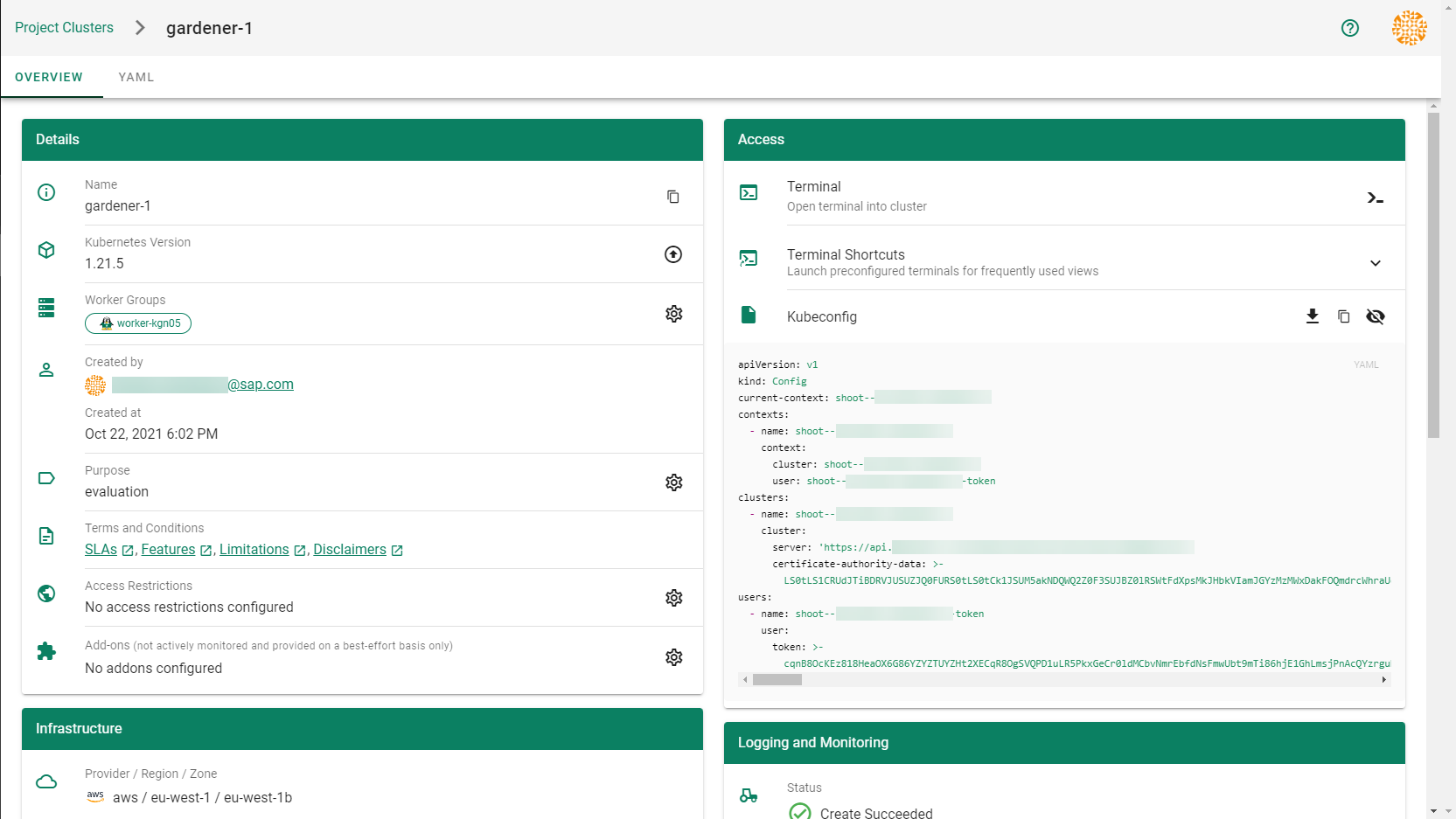

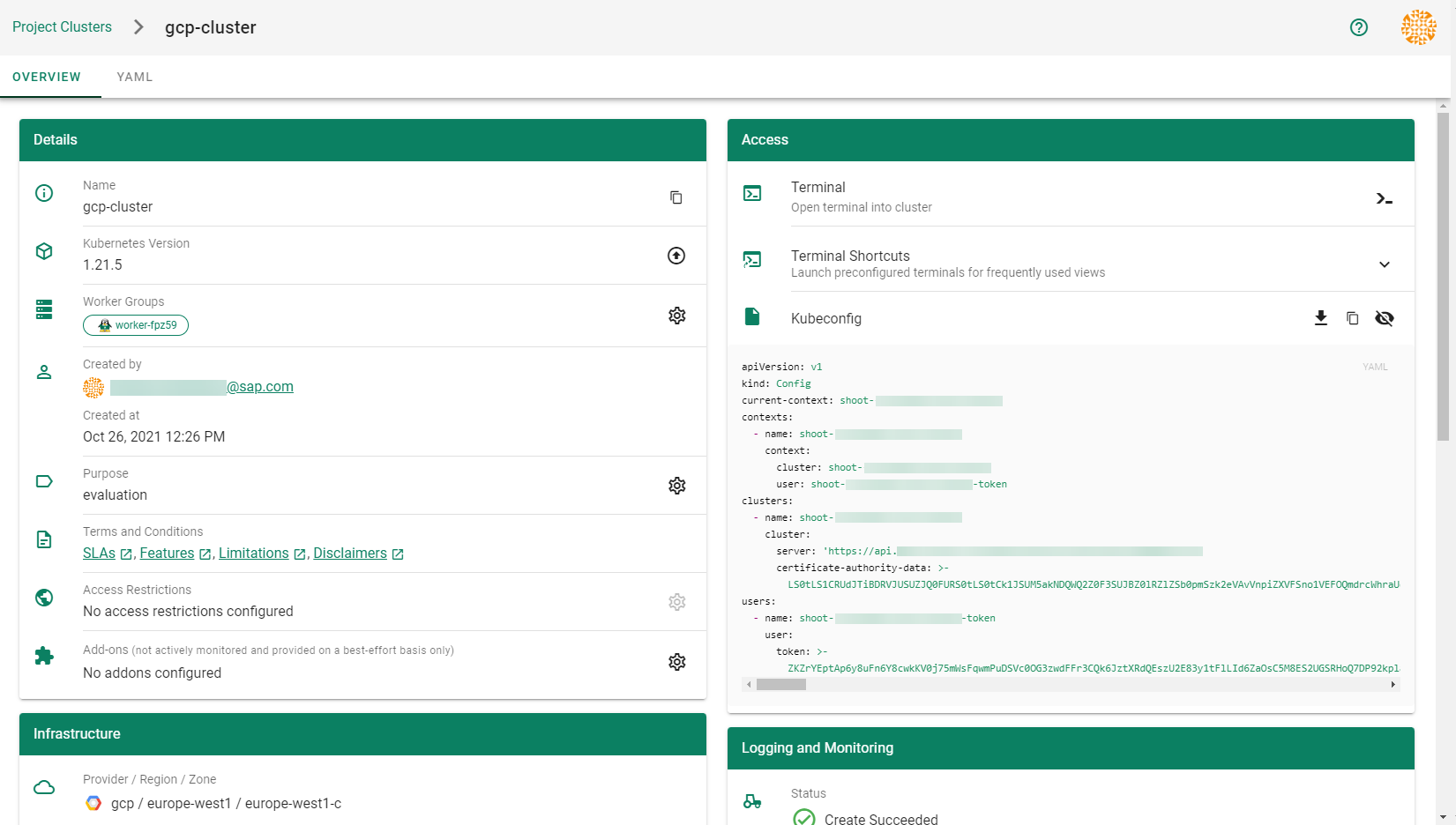

After completing the steps in this tutorial, you will be able to see and download the kubeconfig of your cluster.

2.2 - Deployment

Deployment of the AWS provider extension

Disclaimer: This document is NOT a step by step installation guide for the AWS provider extension and only contains some configuration specifics regarding the installation of different components via the helm charts residing in the AWS provider extension repository.

gardener-extension-admission-aws

Authentication against the Garden cluster

There are several authentication possibilities depending on whether or not the concept of Virtual Garden is used.

Virtual Garden is not used, i.e., the runtime Garden cluster is also the target Garden cluster.

Automounted Service Account Token

The easiest way to deploy the gardener-extension-admission-aws component will be to not provide kubeconfig at all. This way in-cluster configuration and an automounted service account token will be used. The drawback of this approach is that the automounted token will not be automatically rotated.

Service Account Token Volume Projection

Another solution will be to use Service Account Token Volume Projection combined with a kubeconfig referencing a token file (see example below).

apiVersion: v1

kind: Config

clusters:

- cluster:

certificate-authority-data: <CA-DATA>

server: https://default.kubernetes.svc.cluster.local

name: garden

contexts:

- context:

cluster: garden

user: garden

name: garden

current-context: garden

users:

- name: garden

user:

tokenFile: /var/run/secrets/projected/serviceaccount/token

This will allow for automatic rotation of the service account token by the kubelet. The configuration can be achieved by setting both .Values.global.serviceAccountTokenVolumeProjection.enabled: true and .Values.global.kubeconfig in the respective chart’s values.yaml file.

Virtual Garden is used, i.e., the runtime Garden cluster is different from the target Garden cluster.

Service Account

The easiest way to setup the authentication will be to create a service account and the respective roles will be bound to this service account in the target cluster. Then use the generated service account token and craft a kubeconfig which will be used by the workload in the runtime cluster. This approach does not provide a solution for the rotation of the service account token. However, this setup can be achieved by setting .Values.global.virtualGarden.enabled: true and following these steps:

- Deploy the

applicationpart of the charts in thetargetcluster. - Get the service account token and craft the

kubeconfig. - Set the crafted

kubeconfigand deploy theruntimepart of the charts in theruntimecluster.

Client Certificate

Another solution will be to bind the roles in the target cluster to a User subject instead of a service account and use a client certificate for authentication. This approach does not provide a solution for the client certificate rotation. However, this setup can be achieved by setting both .Values.global.virtualGarden.enabled: true and .Values.global.virtualGarden.user.name, then following these steps:

- Generate a client certificate for the

targetcluster for the respective user. - Deploy the

applicationpart of the charts in thetargetcluster. - Craft a

kubeconfigusing the already generated client certificate. - Set the crafted

kubeconfigand deploy theruntimepart of the charts in theruntimecluster.

Projected Service Account Token

This approach requires an already deployed and configured oidc-webhook-authenticator for the target cluster. Also the runtime cluster should be registered as a trusted identity provider in the target cluster. Then projected service accounts tokens from the runtime cluster can be used to authenticate against the target cluster. The needed steps are as follows:

- Deploy OWA and establish the needed trust.

- Set

.Values.global.virtualGarden.enabled: trueand.Values.global.virtualGarden.user.name. Note: username value will depend on the trust configuration, e.g.,<prefix>:system:serviceaccount:<namespace>:<serviceaccount> - Set

.Values.global.serviceAccountTokenVolumeProjection.enabled: trueand.Values.global.serviceAccountTokenVolumeProjection.audience. Note: audience value will depend on the trust configuration, e.g.,<cliend-id-from-trust-config>. - Craft a kubeconfig (see example below).

- Deploy the

applicationpart of the charts in thetargetcluster. - Deploy the

runtimepart of the charts in theruntimecluster.

apiVersion: v1

kind: Config

clusters:

- cluster:

certificate-authority-data: <CA-DATA>

server: https://virtual-garden.api

name: virtual-garden

contexts:

- context:

cluster: virtual-garden

user: virtual-garden

name: virtual-garden

current-context: virtual-garden

users:

- name: virtual-garden

user:

tokenFile: /var/run/secrets/projected/serviceaccount/token

2.3 - Dual Stack Ingress

Using IPv4/IPv6 (dual-stack) Ingress in an IPv4 single-stack cluster

Motivation

IPv6 adoption is continuously growing, already overtaking IPv4 in certain regions, e.g. India, or scenarios, e.g. mobile. Even though most IPv6 installations deploy means to reach IPv4, it might still be beneficial to expose services natively via IPv4 and IPv6 instead of just relying on IPv4.

Disadvantages of full IPv4/IPv6 (dual-stack) Deployments

Enabling full IPv4/IPv6 (dual-stack) support in a kubernetes cluster is a major endeavor. It requires a lot of changes and restarts of all pods so that all pods get addresses for both IP families. A side-effect of dual-stack networking is that failures may be hidden as network traffic may take the other protocol to reach the target. For this reason and also due to reduced operational complexity, service teams might lean towards staying in a single-stack environment as much as possible. Luckily, this is possible with Gardener and IPv4/IPv6 (dual-stack) ingress on AWS.

Simplifying IPv4/IPv6 (dual-stack) Ingress with Protocol Translation on AWS

Fortunately, the network load balancer on AWS supports automatic protocol translation, i.e. it can expose both IPv4 and IPv6 endpoints while communicating with just one protocol to the backends. Under the hood, automatic protocol translation takes place. Client IP address preservation can be achieved by using proxy protocol.

This approach enables users to expose IPv4 workload to IPv6-only clients without having to change the workload/service. Without requiring invasive changes, it allows a fairly simple first step into the IPv6 world for services just requiring ingress (incoming) communication.

Necessary Shoot Cluster Configuration Changes for IPv4/IPv6 (dual-stack) Ingress

To be able to utilize IPv4/IPv6 (dual-stack) Ingress in an IPv4 shoot cluster, the cluster needs to meet two preconditions:

dualStack.enabledneeds to be set totrueto configure VPC/subnet for IPv6 and add a routing rule for IPv6. (This does not add IPv6 addresses to kubernetes nodes.)loadBalancerController.enabledneeds to be set totrueas well to use the load balancer controller, which supports dual-stack ingress.

apiVersion: core.gardener.cloud/v1beta1

kind: Shoot

...

spec:

provider:

type: aws

infrastructureConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

dualStack:

enabled: true

controlPlaneConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: ControlPlaneConfig

loadBalancerController:

enabled: true

...

When infrastructureConfig.networks.vpc.id is set to the ID of an existing VPC, please make sure that your VPC has an Amazon-provided IPv6 CIDR block added.

After adapting the shoot specification and reconciling the cluster, dual-stack load balancers can be created using kubernetes services objects.

Creating an IPv4/IPv6 (dual-stack) Ingress

With the preconditions set, creating an IPv4/IPv6 load balancer is as easy as annotating a service with the correct annotations:

apiVersion: v1

kind: Service

metadata:

annotations:

service.beta.kubernetes.io/aws-load-balancer-ip-address-type: dualstack

service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing

service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance

service.beta.kubernetes.io/aws-load-balancer-type: external

name: ...

namespace: ...

spec:

...

type: LoadBalancer

In case the client IP address should be preserved, the following annotation can be used to enable proxy protocol. (The pod receiving the traffic needs to be configured for proxy protocol as well.)

service.beta.kubernetes.io/aws-load-balancer-proxy-protocol: "*"

Please note that changing an existing Service to dual-stack may cause the creation of a new load balancer without

deletion of the old AWS load balancer resource. While this helps in a seamless migration by not cutting existing

connections it may lead to wasted/forgotten resources. Therefore, the (manual) cleanup needs to be taken into account

when migrating an existing Service instance.

For more details see AWS Load Balancer Documentation - Network Load Balancer.

DNS Considerations to Prevent Downtime During a Dual-Stack Migration

In case the migration of an existing service is desired, please check if there are DNS entries directly linked to the corresponding load balancer. The migrated load balancer will have a new domain name immediately, which will not be ready in the beginning. Therefore, a direct migration of the domain name entries is not desired as it may cause a short downtime, i.e. domain name entries without backing IP addresses.

If there are DNS entries directly linked to the corresponding load balancer and they are managed by the

shoot-dns-service, you can identify this via

annotations with the prefix dns.gardener.cloud/. Those annotations can be linked to a Service, Ingress or

Gateway resources. Alternatively, they may also use DNSEntry or DNSAnnotation resources.

For a seamless migration without downtime use the following three step approach:

- Temporarily prevent direct DNS updates

- Migrate the load balancer and wait until it is operational

- Allow DNS updates again

To prevent direct updates of the DNS entries when the load balancer is migrated add the annotation

dns.gardener.cloud/ignore: 'true' to all affected resources next to the other dns.gardener.cloud/... annotations

before starting the migration. For example, in case of a Service ensure that the service looks like the following:

kind: Service

metadata:

annotations:

dns.gardener.cloud/ignore: 'true'

dns.gardener.cloud/class: garden

dns.gardener.cloud/dnsnames: '...'

...

Next, migrate the load balancer to be dual-stack enabled by adding/changing the corresponding annotations.

You have multiple options how to check that the load balancer has been provisioned successfully. It might be useful

to peek into status.loadBalancer.ingress of the corresponding Service to identify the load balancer:

- Check in the AWS console for the corresponding load balancer provisioning state

- Perform domain name lookups with

nslookup/digto check whether the name resolves to an IP address. - Call your workload via the new load balancer, e.g. using

curl --resolve <my-domain-name>:<port>:<IP-address> https://<my-domain-name>:<port>, which allows you to call your service with the “correct” domain name without using actual name resolution. - Wait a fixed period of time as load balancer creation is usually finished within 15 minutes

Once the load balancer has been provisioned, you can remove the annotation dns.gardener.cloud/ignore: 'true' again

from the affected resources. It may take some additional time until the domain name change finally propagates

(up to one hour).

2.4 - Ipv6

Support for IPv6

Overview

Gardener supports different levels of IPv6 support in shoot clusters. This document describes the differences between them and what to consider when using them.

In IPv6 Ingress for IPv4 Shoot Clusters, the focus is on how an existing IPv4-only shoot cluster can provide dual-stack services to clients. Section IPv6-only Shoot Clusters describes how to create a shoot cluster that only supports IPv6. Finally, Dual-Stack Shoot Clusters explains how to create a shoot cluster that supports both IPv4 and IPv6.

IPv6 Ingress for IPv4 Shoot Clusters

Per default, Gardener shoot clusters use only IPv4. Therefore, they also expose their services only via load balancers with IPv4 addresses. To allow external clients to also use IPv6 to access services in an IPv4 shoot cluster, the cluster needs to be configured to support dual-stack ingress.

It is possible to configure a shoot cluster to support dual-stack ingress, see Using IPv4/IPv6 (dual-stack) Ingress in an IPv4 single-stack cluster for more information.

The main benefit of this approach is that the existing cluster stays almost as is without major changes, keeping the operational simplicity. It works very well for services that only require incoming communication, e.g. pure web services.

The main drawback is that certain scenarios, especially related to IPv6 callbacks, are not possible. This means that services, which actively call to their clients via web hooks, will not be able to do so over IPv6. Hence, those services will not be able to allow full-usage via IPv6.

IPv6-only Shoot Clusters

Motivation

IPv6-only shoot clusters are the best option to verify that services are fully IPv6-compatible. While Dual-Stack Shoot Clusters may fall back on using IPv4 transparently, IPv6-only shoot clusters enforce the usage of IPv6 inside the cluster. Therefore, it is recommended to check with IPv6-only shoot clusters if a workload is fully IPv6-compatible.

In addition to being a good testbed for IPv6 compatibility, IPv6-only shoot clusters may also be a desirable eventual target in the IPv6 migration as they allow to support both IPv4 and IPv6 clients while having a single-stack with the cluster.

Creating an IPv6-only Shoot Cluster

To create an IPv6-only shoot cluster, the following needs to be specified in the Shoot resource (see also here):

kind: Shoot

apiVersion: core.gardener.cloud/v1beta1

metadata:

...

spec:

...

networking:

type: ...

ipFamilies:

- IPv6

...

provider:

type: aws

infrastructureConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

vpc:

cidr: 192.168.0.0/16

zones:

- name: ...

public: 192.168.32.0/20

internal: 192.168.48.0/20

Warning

Please note that

nodes,podsandservicesshould not be specified in.spec.networkingresource.

In contrast to that, it is still required to specify IPv4 ranges for the VPC and the public/internal subnets. This is mainly due to the fact that public/internal load balancers still require IPv4 addresses as there are no pure IPv6-only load balancers as of now. The ranges can be sized according to the expected amount of load balancers per zone/type.

The IPv6 address ranges are provided by AWS. It is ensured that the IPv6 ranges are globally unique und internet routable.

Load Balancer Configuration

The AWS Load Balancer Controller is automatically deployed when using an IPv6-only shoot cluster. When creating a load balancer, the corresponding annotations need to be configured, see AWS Load Balancer Documentation - Network Load Balancer for details.

The AWS Load Balancer Controller allows dual-stack ingress so that an IPv6-only shoot cluster can serve IPv4 and IPv6 clients. You can find an example here.

Warning

When accessing Network Load Balancers (NLB) from within the same IPv6-only cluster, it is crucial to add the annotation

service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: preserve_client_ip.enabled=false. Without this annotation, if a request is routed by the NLB to the same target instance from which it originated, the client IP and destination IP will be identical. This situation, known as the hair-pinning effect, will prevent the request from being processed. (This also happens for internal load balancers in IPv4 clusters, but is mitigated by the NAT gateway for external IPv4 load balancers.)

Connectivity to IPv4-only Services

The IPv6-only shoot cluster can connect to IPv4-only services via DNS64/NAT64. The cluster is configured to use the DNS64/NAT64 service of the underlying cloud provider. This allows the cluster to resolve IPv4-only DNS names and to connect to IPv4-only services.

Please note that traffic going through NAT64 incurs the same cost as ordinary NAT traffic in an IPv4-only cluster. Therefore, it might be beneficial to prefer IPv6 for services, which provide IPv4 and IPv6.

Dual-Stack Shoot Clusters

Motivation

Dual-stack shoot clusters support IPv4 and IPv6 out-of-the-box. They can be the intermediate step on the way towards IPv6 for any existing (IPv4-only) clusters.

Creating a Dual-Stack Shoot Cluster

To create a dual-stack shoot cluster, the following needs to be specified in the Shoot resource:

kind: Shoot

apiVersion: core.gardener.cloud/v1beta1

metadata:

...

spec:

...

networking:

type: ...

pods: 192.168.128.0/17

nodes: 192.168.0.0/18

services: 192.168.64.0/18

ipFamilies:

- IPv4

- IPv6

...

provider:

type: aws

infrastructureConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

vpc:

cidr: 192.168.0.0/18

zones:

- name: ...

workers: 192.168.0.0/19

public: 192.168.32.0/20

internal: 192.168.48.0/20

Please note that the only change compared to an IPv4-only shoot cluster is the addition of IPv6 to the .spec.networking.ipFamilies field.

The order of the IP families defines the preference of the IP family.

In this case, IPv4 is preferred over IPv6, e.g. services specifying no IP family will get only an IPv4 address.

Bring your own VPC

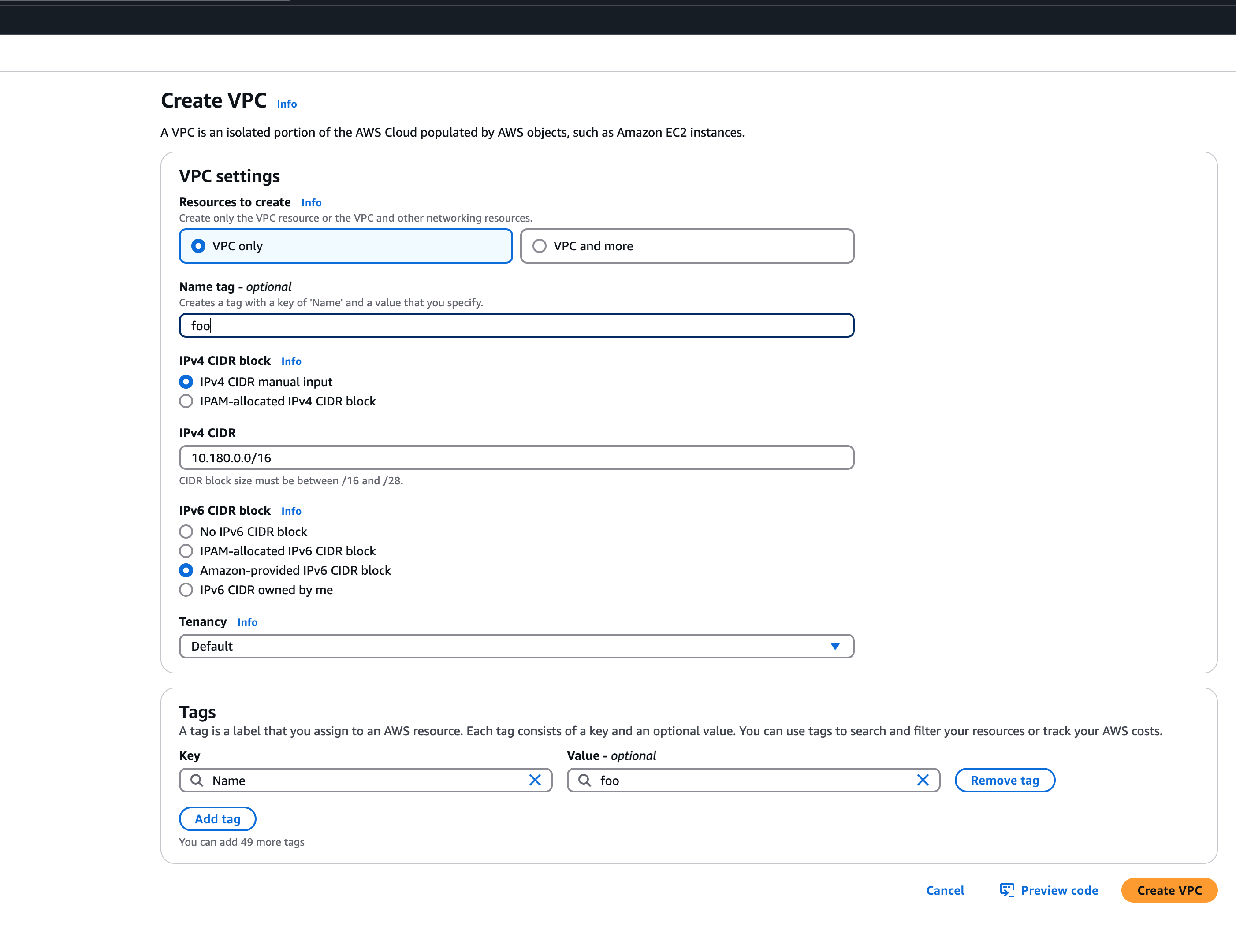

To create an IPv6 shoot cluster or a dual-stack shoot within your own Virtual Private Cloud (VPC), it is necessary to have an Amazon-provided IPv6 CIDR block added to the VPC. This block can be assigned during the initial setup of the VPC, as illustrated in the accompanying screenshot.

An egress-only internet gateway is required for outbound internet traffic (IPv6) from the instances within your VPC. Please create one egress-only internet gateway and attach it to the VPC. Please also make sure that the VPC has an attached internet gateway and the following attributes set:

An egress-only internet gateway is required for outbound internet traffic (IPv6) from the instances within your VPC. Please create one egress-only internet gateway and attach it to the VPC. Please also make sure that the VPC has an attached internet gateway and the following attributes set: enableDnsHostnames and enableDnsSupport as described under usage.

Migration of IPv4-only Shoot Clusters to Dual-Stack

To migrate an IPv4-only shoot cluster to Dual-Stack simply change the .spec.networking.ipFamilies field in the Shoot resource from IPv4 to IPv4, IPv6 as shown below.

kind: Shoot

apiVersion: core.gardener.cloud/v1beta1

metadata:

...

spec:

...

networking:

type: ...

ipFamilies:

- IPv4

- IPv6

...

You can find more information about the process and the steps required here.

Warning

Please note that the dual-stack migration requires the IPv4-only cluster to run in native routing mode, i.e. pod overlay network needs to be disabled. The default quota of routes per route table in AWS is 50. This restricts the cluster size to about 50 nodes. Therefore, please adapt (if necessary) the routes per route table limit in the Amazon Virtual Private Cloud quotas accordingly before switching to native routing. The maximum setting is currently 1000.

Load Balancer Configuration

The AWS Load Balancer Controller is automatically deployed when using a dual-stack shoot cluster. When creating a load balancer, the corresponding annotations need to be configured, see AWS Load Balancer Documentation - Network Load Balancer for details.

Warning

Please note that load balancer services without any special annotations will default to IPv4-only regardless how

.spec.ipFamiliesis set.

The AWS Load Balancer Controller allows dual-stack ingress so that a dual-stack shoot cluster can serve IPv4 and IPv6 clients. You can find an example here.

Warning

When accessing external Network Load Balancers (NLB) from within the same cluster via IPv6 or internal NLBs via IPv4, it is crucial to add the annotation

service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: preserve_client_ip.enabled=false. Without this annotation, if a request is routed by the NLB to the same target instance from which it originated, the client IP and destination IP will be identical. This situation, known as the hair-pinning effect, will prevent the request from being processed.

2.5 - Local Setup

admission-aws

admission-aws is an admission webhook server which is responsible for the validation of the cloud provider (AWS in this case) specific fields and resources. The Gardener API server is cloud provider agnostic and it wouldn’t be able to perform similar validation.

Follow the steps below to run the admission webhook server locally.

Start the Gardener API server.

For details, check the Gardener local setup.

Start the webhook server

Make sure that the

KUBECONFIGenvironment variable is pointing to the local garden cluster.make start-admissionSetup the

ValidatingWebhookConfiguration.hack/dev-setup-admission-aws.shwill configure the webhook Service which will allow the kube-apiserver of your local cluster to reach the webhook server. It will also apply theValidatingWebhookConfigurationmanifest../hack/dev-setup-admission-aws.sh

You are now ready to experiment with the admission-aws webhook server locally.

2.6 - Operations

Using the AWS provider extension with Gardener as operator

The core.gardener.cloud/v1beta1.CloudProfile resource declares a providerConfig field that is meant to contain provider-specific configuration.

Similarly, the core.gardener.cloud/v1beta1.Seed resource is structured.

Additionally, it allows to configure settings for the backups of the main etcds’ data of shoot clusters control planes running in this seed cluster.

This document explains what is necessary to configure for this provider extension.

CloudProfile resource

In this section we are describing how the configuration for CloudProfiles looks like for AWS and provide an example CloudProfile manifest with minimal configuration that you can use to allow creating AWS shoot clusters.

CloudProfileConfig

The cloud profile configuration contains information about the real machine image IDs in the AWS environment (AMIs).

You have to map every version that you specify in .spec.machineImages[].versions here such that the AWS extension knows the AMI for every version you want to offer.

For each AMI an architecture field can be specified which specifies the CPU architecture of the machine on which given machine image can be used.

An example CloudProfileConfig for the AWS extension looks as follows:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: CloudProfileConfig

machineImages:

- name: coreos

versions:

- version: 2135.6.0

regions:

- name: eu-central-1

ami: ami-034fd8c3f4026eb39

# architecture: amd64 # optional

Example CloudProfile manifest

Please find below an example CloudProfile manifest:

apiVersion: core.gardener.cloud/v1beta1

kind: CloudProfile

metadata:

name: aws

spec:

type: aws

kubernetes:

versions:

- version: 1.32.1

- version: 1.31.4

expirationDate: "2022-10-31T23:59:59Z"

machineImages:

- name: coreos

versions:

- version: 2135.6.0

machineTypes:

- name: m5.large

cpu: "2"

gpu: "0"

memory: 8Gi

usable: true

volumeTypes:

- name: gp2

class: standard

usable: true

- name: io1

class: premium

usable: true

regions:

- name: eu-central-1

zones:

- name: eu-central-1a

- name: eu-central-1b

- name: eu-central-1c

providerConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: CloudProfileConfig

machineImages:

- name: coreos

versions:

- version: 2135.6.0

regions:

- name: eu-central-1

ami: ami-034fd8c3f4026eb39

# architecture: amd64 # optional

Seed resource

This provider extension does not support any provider configuration for the Seed’s .spec.provider.providerConfig field.

However, it supports to manage backup infrastructure, i.e., you can specify configuration for the .spec.backup field.

Backup configuration

Please find below an example Seed manifest (partly) that configures backups.

As you can see, the location/region where the backups will be stored can be different to the region where the seed cluster is running.

apiVersion: v1

kind: Secret

metadata:

name: backup-credentials

namespace: garden

type: Opaque

data:

accessKeyID: base64(access-key-id)

secretAccessKey: base64(secret-access-key)

---

apiVersion: core.gardener.cloud/v1beta1

kind: Seed

metadata:

name: my-seed

spec:

provider:

type: aws

region: eu-west-1

backup:

provider: aws

region: eu-central-1

credentialsRef:

apiVersion: v1

kind: Secret

name: backup-credentials

namespace: garden

...

Please look up https://docs.aws.amazon.com/general/latest/gr/aws-sec-cred-types.html#access-keys-and-secret-access-keys as well.

Permissions for AWS IAM user

Please make sure that the provided credentials have the correct privileges. You can use the following AWS IAM policy document and attach it to the IAM user backed by the credentials you provided (please check the official AWS documentation as well):

Click to expand the AWS IAM policy document!

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "s3:*",

"Resource": "*"

}

]

}

Rolling Update Triggers

Changes to the Shoot worker-pools are applied in-place where possible.

In case this is not possible a rolling update of the workers will be performed to apply the new configuration, as outlined in the Gardener documentation.

The exact fields that trigger this behavior are defined in the Gardener doc, with a few additions:

.spec.provider.workers[].providerConfig.spec.provider.workers[].volume.encrypted.spec.provider.workers[].dataVolumes[].size(only the affected worker pool).spec.provider.workers[].dataVolumes[].type(only the affected worker pool).spec.provider.workers[].dataVolumes[].encrypted(only the affected worker pool)

For now, if the feature gate NewWorkerPoolHash is enabled, the same fields are used.

This behavior might change once MCM supports in-place volume updates.

If updateStrategy is set to inPlace and NewWorkerPoolHash is enabled,

all the fields mentioned above except of the providerConifg are used.

If in-place-updates are enabled for a worker-pool, then updates to the fields that trigger rolling updates will be disallowed.

2.7 - Usage

Using the AWS provider extension with Gardener as an end-user

The core.gardener.cloud/v1beta1.Shoot resource declares a few fields that are meant to contain provider-specific configuration.

In this document we are describing how this configuration looks like for AWS and provide an example Shoot manifest with minimal configuration that you can use to create an AWS cluster (modulo the landscape-specific information like cloud profile names, secret binding names, etc.).

Accessing AWS APIs

In order for Gardener to create a Kubernetes cluster using AWS infrastructure components, a Shoot has to provide an authentication mechanism giving sufficient permissions to the desired AWS account.

Every shoot cluster references a SecretBinding or a CredentialsBinding.

Important

While

SecretBindings can only referenceSecrets,CredentialsBindings can also referenceWorkloadIdentitys which provide an alternative authentication method.WorkloadIdentitys do not directly contain credentials but are rather a representation of the workload that is going to access the user’s account. If the user has configured OIDC Federation with Gardener’s Workload Identity Issuer then the AWS infrastructure components can access the user’s account without the need of preliminary exchange of credentials.

Important

The

SecretBinding/CredentialsBindingis configurable in the Shoot cluster with the fieldsecretBindingName/credentialsBindingName.SecretBindings are considered legacy and will be deprecated in the future. It is advised to useCredentialsBindings instead.

Provider Secret Data

Every shoot cluster references a SecretBinding or a CredentialsBinding which itself references a Secret, and this Secret contains the provider credentials of your AWS account.

This Secret must look as follows:

apiVersion: v1

kind: Secret

metadata:

name: core-aws

namespace: garden-dev

type: Opaque

data:

accessKeyID: base64(access-key-id)

secretAccessKey: base64(secret-access-key)

The AWS documentation explains the necessary steps to enable programmatic access, i.e. create access key ID and access key, for the user of your choice.

Warning

For security reasons, we recommend creating a dedicated user with programmatic access only. Please avoid re-using a IAM user which has access to the AWS console (human user).

Depending on your AWS API usage it can be problematic to reuse the same AWS Account for different Shoot clusters in the same region due to rate limits. Please consider spreading your Shoots over multiple AWS Accounts if you are hitting those limits.

AWS Workload Identity Federation

Users can choose to trust Gardener’s Workload Identity Issuer and eliminate the need for providing AWS Access Key credentials.

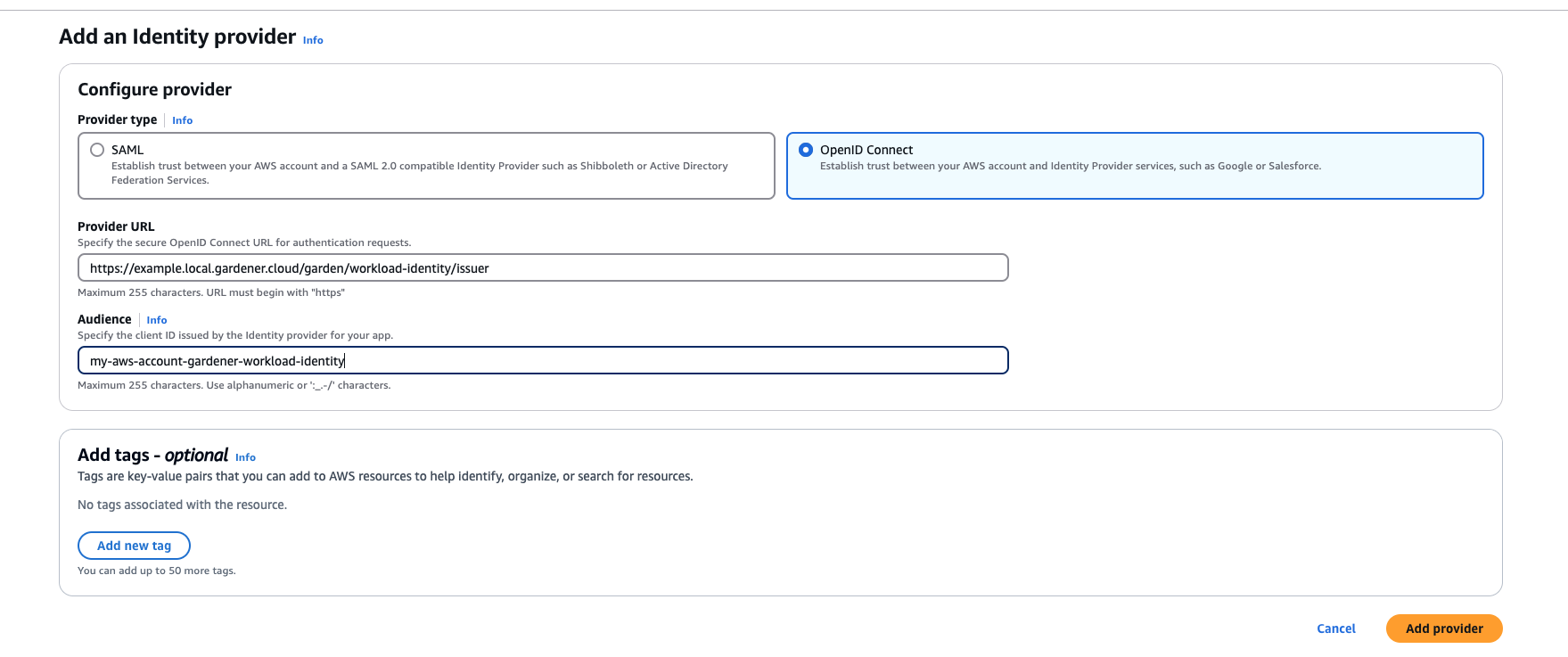

1. Configure OIDC Federation with Gardener’s Workload Identity Issuer.

Tip

You can retrieve Gardener’s Workload Identity Issuer URL directly from the Garden cluster by reading the contents of the Gardener Info ConfigMap.

kubectl -n gardener-system-public get configmap gardener-info -o yaml

Important

Use an audience that will uniquely identify the trust relationship between your AWS account and Gardener.

2. Create a policy listing the required permissions.

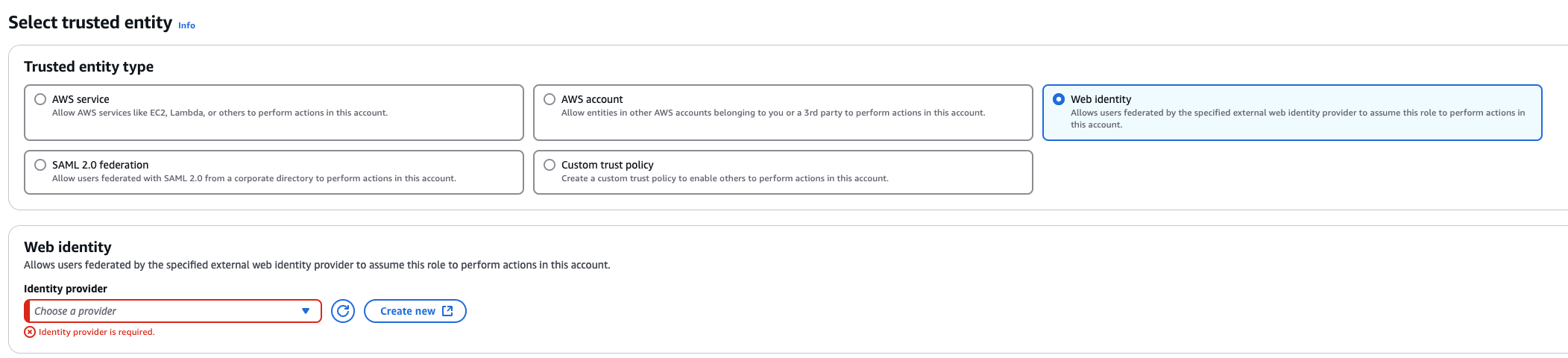

3. Create a role that trusts the external web identity.

In the Identity Provider dropdown menu choose the Gardener’s Workload Identity Provider.

Add the newly created policy that will grant the required permissions.

4. Configure the trust relationship.

Caution

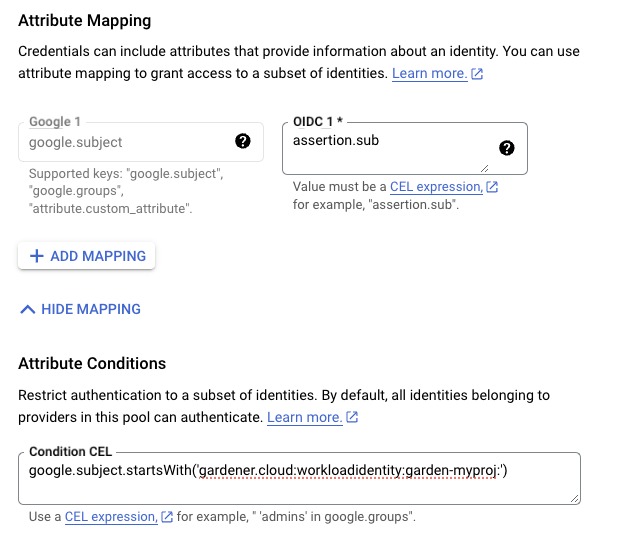

Remember to reduce the scope of the identities that can assume this role only to your own controlled identities! In the example shown below

WorkloadIdentitys that are created in thegarden-myprojnamespace and have the nameawswill be authenticated and granted permissions. Later on, the scope of the trust configuration can be reduced further by replacing the wildcard “*” with the actual id of theWorkloadIdentityand converting the “StringLike” condition to “StringEquals”. This is currently not possible since we do not have the id of theWorkloadIdentityyet.{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Action": "sts:AssumeRoleWithWebIdentity", "Principal": { "Federated": "arn:aws:iam::123456789012:oidc-provider/example.local.gardener.cloud/garden/workload-identity/issuer" }, "Condition": { "StringEquals": { "example.local.gardener.cloud/garden/workload-identity/issuer:aud": [ "my-aws-account-gardener-workload-identity" ] }, "StringLike": { "example.local.gardener.cloud/garden/workload-identity/issuer:sub": "gardener.cloud:workloadidentity:garden-myproj:aws:*" } } } ] }

5. Create the WorkloadIdentity in the Garden cluster.

This step will require the ARN of the role that was created in the previous step. The identity will be used by infrastructure components to authenticate against AWS APIs. A sample of such resource is shown below:

apiVersion: security.gardener.cloud/v1alpha1

kind: WorkloadIdentity

metadata:

name: aws

namespace: garden-myproj

spec:

audiences:

- my-aws-account-gardener-workload-identity

targetSystem:

type: aws

providerConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: WorkloadIdentityConfig

roleARN: arn:aws:iam::123456789012:role/gardener-workload-identity

Tip

Once created you can extract the whole subject of the workload identity and edit the created Role’s trust relationship configuration to also include the workload identity’s id. Obtain the complete

subby running the following:SUBJECT=$(kubectl -n garden-myproj get workloadidentity aws -o=jsonpath='{.status.sub}') echo "$SUBJECT"

6. Create a CredentialsBinding referencing the AWS WorkloadIdentity and use it in your Shoot definitions.

apiVersion: security.gardener.cloud/v1alpha1

kind: CredentialsBinding

metadata:

name: aws

namespace: garden-myproj

credentialsRef:

apiVersion: security.gardener.cloud/v1alpha1

kind: WorkloadIdentity

name: aws

namespace: garden-myproj

provider:

type: aws

apiVersion: core.gardener.cloud/v1beta1

kind: Shoot

metadata:

name: aws

namespace: garden-myproj

spec:

credentialsBindingName: aws

...

Permissions

Please make sure that the provided credentials have the correct privileges. You can use the following AWS IAM policy document and attach it to the IAM user backed by the credentials you provided (please check the official AWS documentation as well):

Click to expand the AWS IAM policy document!

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "autoscaling:*",

"Resource": "*"

},

{

"Effect": "Allow",

"Action": "ec2:*",

"Resource": "*"

},

{

"Effect": "Allow",

"Action": "elasticloadbalancing:*",

"Resource": "*"

},

{

"Action": [

"iam:GetInstanceProfile",

"iam:GetPolicy",

"iam:GetPolicyVersion",

"iam:GetRole",

"iam:GetRolePolicy",

"iam:ListPolicyVersions",

"iam:ListRolePolicies",

"iam:ListAttachedRolePolicies",

"iam:ListInstanceProfilesForRole",

"iam:CreateInstanceProfile",

"iam:CreatePolicy",

"iam:CreatePolicyVersion",

"iam:CreateRole",

"iam:CreateServiceLinkedRole",

"iam:AddRoleToInstanceProfile",

"iam:AttachRolePolicy",

"iam:DetachRolePolicy",

"iam:RemoveRoleFromInstanceProfile",

"iam:DeletePolicy",

"iam:DeletePolicyVersion",

"iam:DeleteRole",

"iam:DeleteRolePolicy",

"iam:DeleteInstanceProfile",

"iam:PutRolePolicy",

"iam:PassRole",

"iam:UpdateAssumeRolePolicy"

],

"Effect": "Allow",

"Resource": "*"

},

// The following permission set is only needed, if AWS Load Balancer controller is enabled (see ControlPlaneConfig)

{

"Effect": "Allow",

"Action": [

"cognito-idp:DescribeUserPoolClient",

"acm:ListCertificates",

"acm:DescribeCertificate",

"iam:ListServerCertificates",

"iam:GetServerCertificate",

"waf-regional:GetWebACL",

"waf-regional:GetWebACLForResource",

"waf-regional:AssociateWebACL",

"waf-regional:DisassociateWebACL",

"wafv2:GetWebACL",

"wafv2:GetWebACLForResource",

"wafv2:AssociateWebACL",

"wafv2:DisassociateWebACL",

"shield:GetSubscriptionState",

"shield:DescribeProtection",

"shield:CreateProtection",

"shield:DeleteProtection"

],

"Resource": "*"

}

]

}

InfrastructureConfig

The infrastructure configuration mainly describes how the network layout looks like in order to create the shoot worker nodes in a later step, thus, prepares everything relevant to create VMs, load balancers, volumes, etc.

An example InfrastructureConfig for the AWS extension looks as follows:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

enableECRAccess: true

dualStack:

enabled: false

networks:

vpc: # specify either 'id' or 'cidr'

# id: vpc-123456

cidr: 10.250.0.0/16

# gatewayEndpoints:

# - s3

zones:

- name: eu-west-1a

internal: 10.250.112.0/22

public: 10.250.96.0/22

workers: 10.250.0.0/19

# elasticIPAllocationID: eipalloc-123456

ignoreTags:

keys: # individual ignored tag keys

- SomeCustomKey

- AnotherCustomKey

keyPrefixes: # ignored tag key prefixes

- user.specific/prefix/

The enableECRAccess flag specifies whether the AWS IAM role policy attached to all worker nodes of the cluster shall contain permissions to access the Elastic Container Registry of the respective AWS account.

If the flag is not provided it is defaulted to true.

Please note that if the iamInstanceProfile is set for a worker pool in the WorkerConfig (see below) then enableECRAccess does not have any effect.

It only applies for those worker pools whose iamInstanceProfile is not set.

Click to expand the default AWS IAM policy document used for the instance profiles!

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ec2:DescribeInstances"

],

"Resource": [

"*"

]

},

// Only if `.enableECRAccess` is `true`.

{

"Effect": "Allow",

"Action": [

"ecr:GetAuthorizationToken",

"ecr:BatchCheckLayerAvailability",

"ecr:GetDownloadUrlForLayer",

"ecr:GetRepositoryPolicy",

"ecr:DescribeRepositories",

"ecr:ListImages",

"ecr:BatchGetImage"

],

"Resource": [

"*"

]

}

]

}

The dualStack.enabled flag specifies whether dual-stack or IPv4-only should be supported by the infrastructure.

When the flag is set to true an Amazon provided IPv6 CIDR block will be attached to the VPC.

All subnets will receive a /64 block from it and a route entry is added to the main route table to route all IPv6 traffic over the IGW.

The networks.vpc section describes whether you want to create the shoot cluster in an already existing VPC or whether to create a new one:

- If

networks.vpc.idis given then you have to specify the VPC ID of the existing VPC that was created by other means (manually, other tooling, …). Please make sure that the VPC has attached an internet gateway - the AWS controller won’t create one automatically for existing VPCs. To make sure the nodes are able to join and operate in your cluster properly, please make sure that your VPC has enabled DNS Support, explicitly the attributesenableDnsHostnamesandenableDnsSupportmust be set totrue. - If

networks.vpc.cidris given then you have to specify the VPC CIDR of a new VPC that will be created during shoot creation. You can freely choose a private CIDR range. - Either

networks.vpc.idornetworks.vpc.cidrmust be present, but not both at the same time. networks.vpc.gatewayEndpointsis optional. If specified then each item is used as service name in a corresponding Gateway VPC Endpoint.

The networks.zones section contains configuration for resources you want to create or use in availability zones.

For every zone, the AWS extension creates three subnets:

- The

internalsubnet is used for internal AWS load balancers. - The

publicsubnet is used for public AWS load balancers. - The

workerssubnet is used for all shoot worker nodes, i.e., VMs which later run your applications.

For every subnet, you have to specify a CIDR range contained in the VPC CIDR specified above, or the VPC CIDR of your already existing VPC. You can freely choose these CIDRs and it is your responsibility to properly design the network layout to suit your needs.

Also, the AWS extension creates a dedicated NAT gateway for each zone.

By default, it also creates a corresponding Elastic IP that it attaches to this NAT gateway and which is used for egress traffic.

The elasticIPAllocationID field allows you to specify the ID of an existing Elastic IP allocation in case you want to bring your own.

If provided, no new Elastic IP will be created and, instead, the Elastic IP specified by you will be used.

Warning

If you change this field for an already existing infrastructure then it will disrupt egress traffic while AWS applies this change. The reason is that the NAT gateway must be recreated with the new Elastic IP association. Also, please note that the existing Elastic IP will be permanently deleted if it was earlier created by the AWS extension.

You can configure Gateway VPC Endpoints by adding items in the optional list networks.vpc.gatewayEndpoints. Each item in the list is used as a service name and a corresponding endpoint is created for it. All created endpoints point to the service within the cluster’s region. For example, consider this (partial) shoot config:

spec:

region: eu-central-1

provider:

type: aws

infrastructureConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

networks:

vpc:

gatewayEndpoints:

- s3

The service name of the S3 Gateway VPC Endpoint in this example is com.amazonaws.eu-central-1.s3.

If you want to use multiple availability zones then add a second, third, … entry to the networks.zones[] list and properly specify the AZ name in networks.zones[].name.

Apart from the VPC and the subnets the AWS extension will also create DHCP options and an internet gateway (only if a new VPC is created), routing tables, security groups, elastic IPs, NAT gateways, EC2 key pairs, IAM roles, and IAM instance profiles.

The ignoreTags section allows to configure which resource tags on AWS resources managed by Gardener should be ignored during

infrastructure reconciliation. By default, all tags that are added outside of Gardener’s

reconciliation will be removed during the next reconciliation. This field allows users and automation to add

custom tags on AWS resources created and managed by Gardener without loosing them on the next reconciliation.

Tags can be ignored either by specifying exact key values (ignoreTags.keys) or key prefixes (ignoreTags.keyPrefixes).

In both cases it is forbidden to ignore the Name tag or any tag starting with kubernetes.io or gardener.cloud.

Please note though, that the tags are only ignored on resources created on behalf of the Infrastructure CR (i.e. VPC,

subnets, security groups, keypair, etc.), while tags on machines, volumes, etc. are not in the scope of this controller.

ControlPlaneConfig

The control plane configuration mainly contains values for the AWS-specific control plane components.

Today, the only component deployed by the AWS extension is the cloud-controller-manager.

An example ControlPlaneConfig for the AWS extension looks as follows:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: ControlPlaneConfig