Registry Cache

Gardener extension controller which deploys pull-through caches for container registries.


Usage

Local Setup and Development

1 - Configuring the Registry Cache Extension

Learn about the use case for a pull-through cache and how to enable and configure it

Configuring the Registry Cache Extension

Introduction

Use Case

For a Shoot cluster, the containerd daemon of every Node goes to the internet and fetches any image that it doesn’t have locally in the Node’s image cache. New Nodes are often created due to events such as auto-scaling (scale up), a rolling update, or the replacement of an unhealthy Node. Such a new Node needs to pull all of the images of the Pods running on it from the internet because the Node’s cache is initially empty. Pulling an image from a registry produces network traffic and registry costs. To avoid these network traffic and registry costs, you can use the registry-cache extension to run a registry as a pull-through cache.

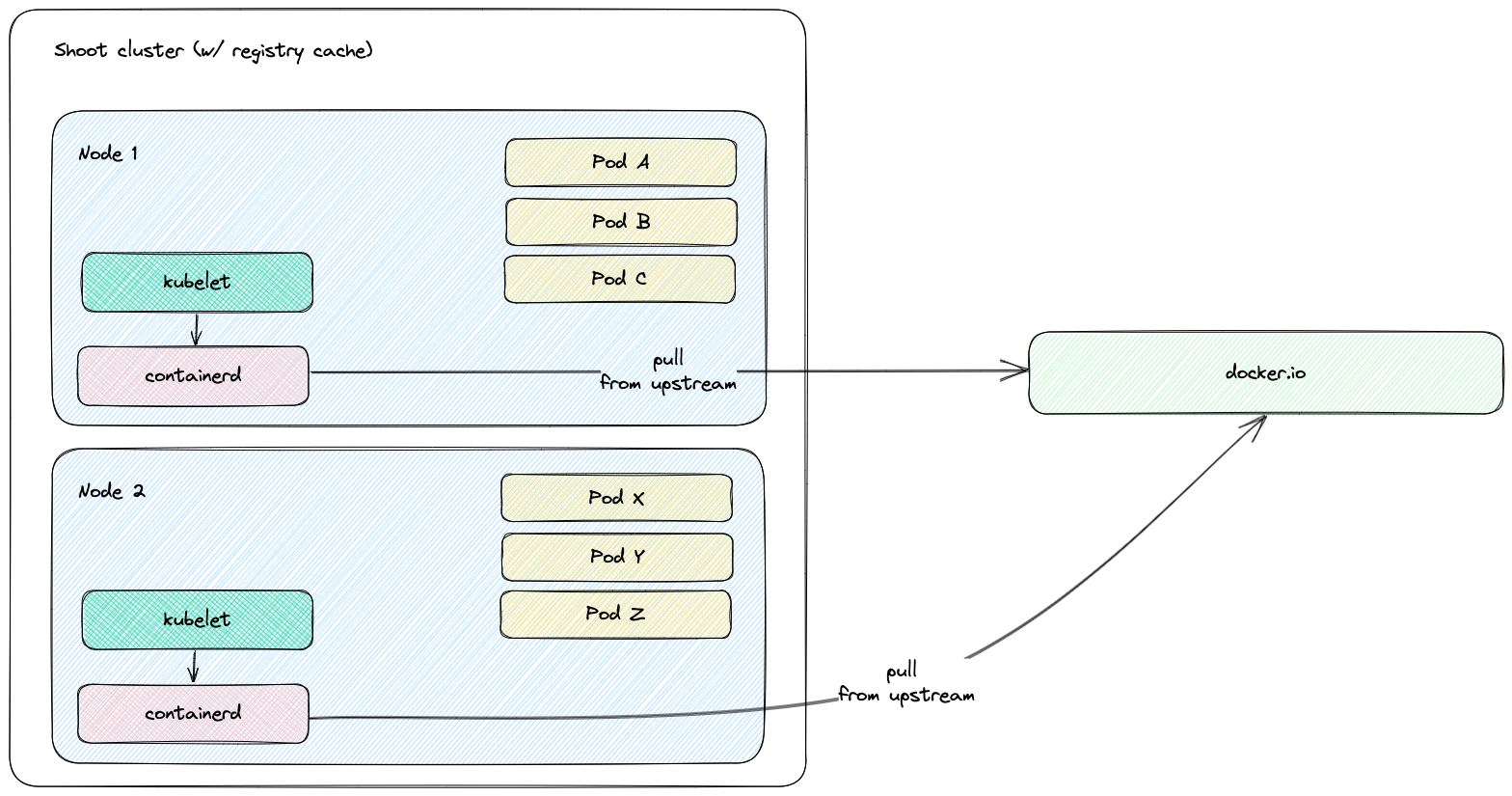

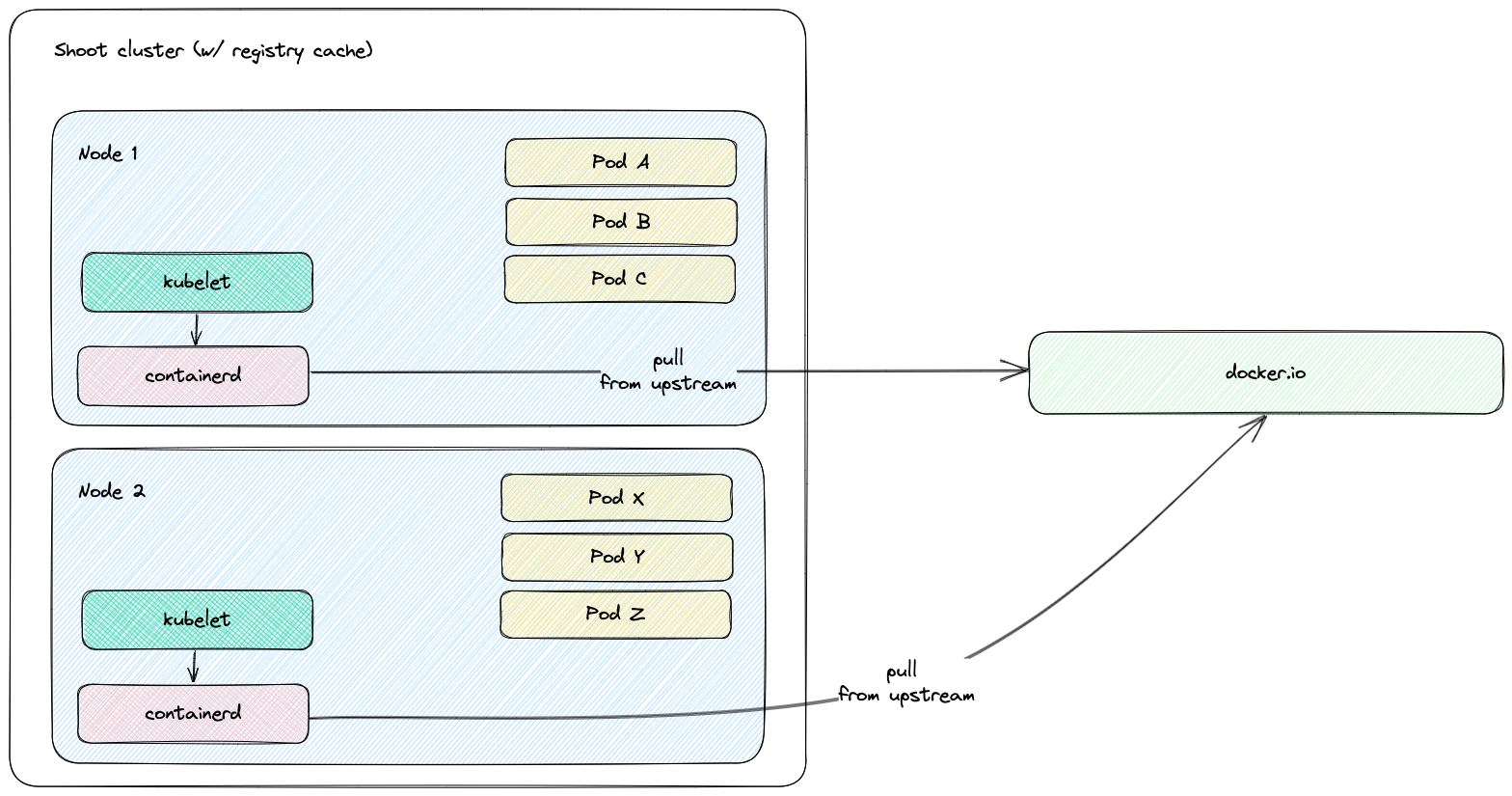

The following diagram shows a rough outline of how an image pull looks like for a Shoot cluster without registry cache:

Solution

The registry-cache extension deploys and manages a registry in the Shoot cluster that runs as a pull-through cache. The registry implementation used is distribution/distribution.

How does it work?

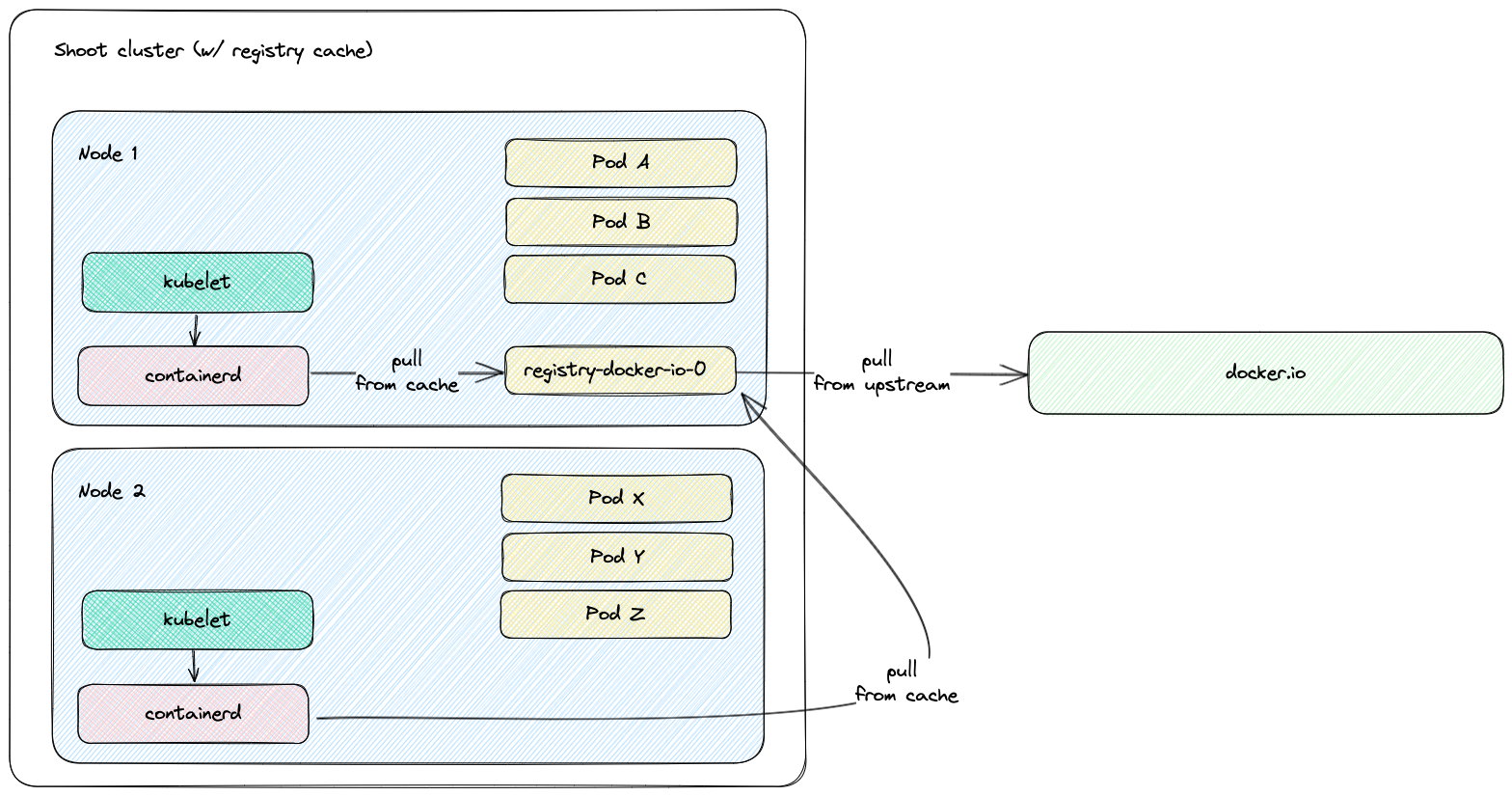

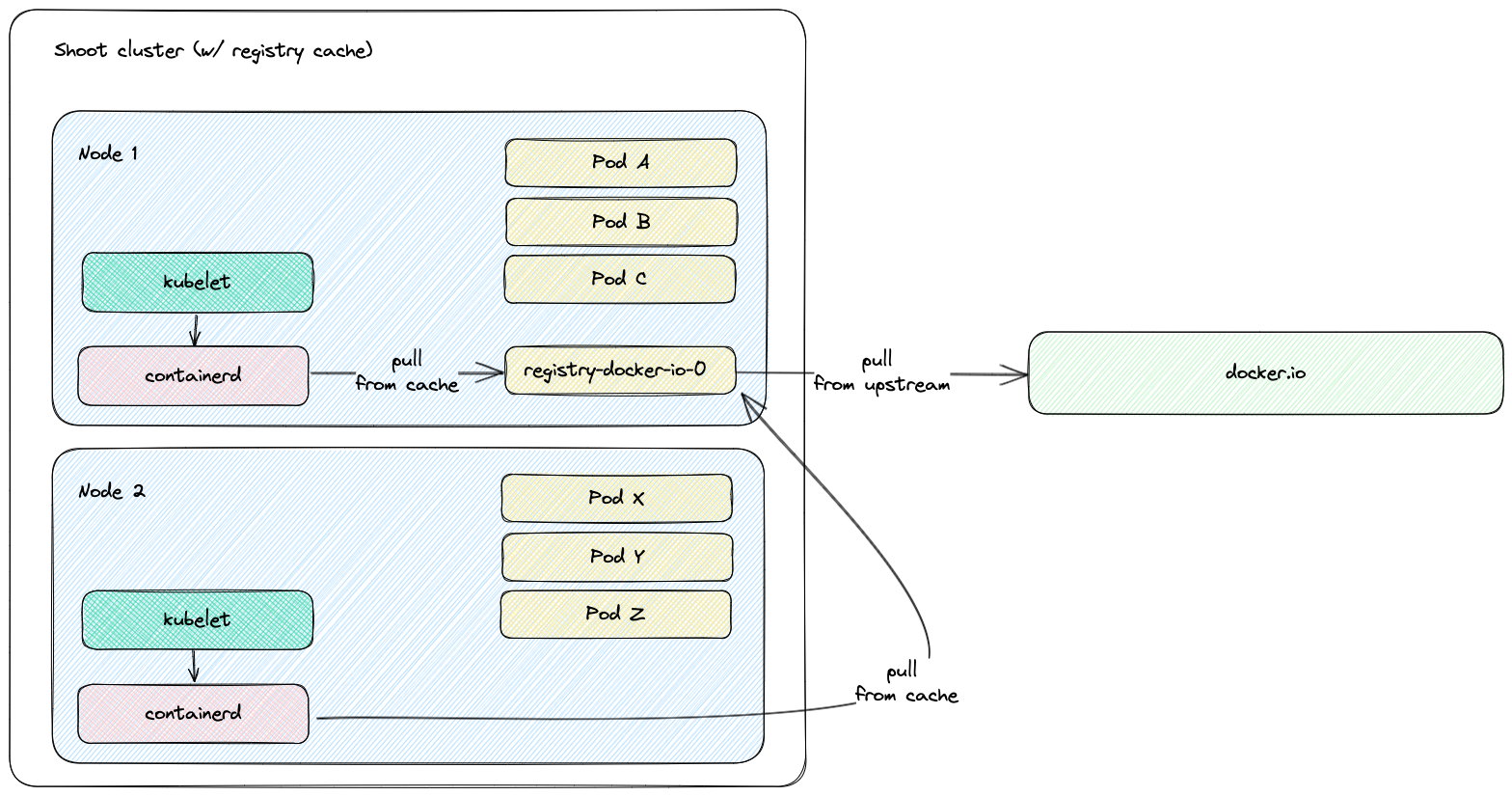

When the extension is enabled, a registry cache for each configured upstream is deployed to the Shoot cluster. Along with this, the containerd daemon on the Shoot cluster Nodes gets configured to use as a mirror the Service IP address of the deployed registry cache. For example, if a registry cache for upstream docker.io is requested via the Shoot spec, then containerd gets configured to first pull the image from the deployed cache in the Shoot cluster. If this image pull operation fails, containerd falls back to the upstream itself (docker.io in that case).

The first time an image is requested from the pull-through cache, it pulls the image from the configured upstream registry and stores it locally, before handing it back to the client. On subsequent requests, the pull-through cache is able to serve the image from its own storage.

The used registry implementation (distribution/distribution) supports mirroring of only one upstream registry.

The following diagram shows a rough outline of how an image pull looks like for a Shoot cluster with registry cache:

Shoot Configuration

The extension is not globally enabled and must be configured per Shoot cluster. The Shoot specification has to be adapted to include the registry-cache extension configuration.

Below is an example of registry-cache extension configuration as part of the Shoot spec:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
metadata:
  name: crazy-botany
  namespace: garden-dev
spec:
  extensions:
  - type: registry-cache
    providerConfig:
      apiVersion: registry.extensions.gardener.cloud/v1alpha3
      kind: RegistryConfig
      caches:
      - upstream: docker.io
        volume:
          size: 100Gi
          # storageClassName: premium
      - upstream: ghcr.io
      - upstream: quay.io
        garbageCollection:
          ttl: 0s
        secretReferenceName: quay-credentials
      - upstream: my-registry.io:5000
        remoteURL: http://my-registry.io:5000
  # ...
  resources:
  - name: quay-credentials
    resourceRef:
      apiVersion: v1
      kind: Secret
      name: quay-credentials-v1

The providerConfig field is required.

The providerConfig.caches field contains information about the registry caches to deploy. It is a required field. At least one cache has to be specified.

The providerConfig.caches[].upstream field is the remote registry host to cache. It is a required field.

The value must be a valid DNS subdomain (RFC 1123) and optionally a port (i.e. <host>[:<port>]). It must not include a scheme.

The providerConfig.caches[].remoteURL optional field is the remote registry URL. If configured, it must include an https:// or http:// scheme.

If the field is not configured, the remote registry URL defaults to https://<upstream>. In case the upstream is docker.io, it defaults to https://registry-1.docker.io.

The providerConfig.caches[].volume field contains settings for the registry cache volume.

The registry-cache extension deploys a StatefulSet with a volume claim template. A PersistentVolumeClaim is created with the configured size and StorageClass name.

The providerConfig.caches[].volume.size field is the size of the registry cache volume. Defaults to 10Gi. The size must be a positive quantity (greater than 0).

This field is immutable. See Increase the cache disk size on how to resize the disk.

The extension defines alerts for the volume. More information about the registry cache alerts and how to enable notifications for them can be found in the alerts documentation.

The providerConfig.caches[].volume.storageClassName field is the name of the StorageClass used by the registry cache volume.

This field is immutable. If the field is not specified, then the default StorageClass will be used.

The providerConfig.caches[].garbageCollection.ttl field is the time to live of a blob in the cache. If the field is set to 0s, the garbage collection is disabled. Defaults to 168h (7 days). See the Garbage Collection section for more details.

The providerConfig.caches[].secretReferenceName is the name of the reference for the Secret containing the upstream registry credentials. To cache images from a private registry, credentials to the upstream registry should be supplied. For more details, see How to provide credentials for upstream registry.

It is only possible to provide one set of credentials for one private upstream registry.

The providerConfig.caches[].proxy.httpProxy field represents the proxy server for HTTP connections which is used by the registry cache. It must include an https:// or http:// scheme.

The providerConfig.caches[].proxy.httpsProxy field represents the proxy server for HTTPS connections which is used by the registry cache. It must include an https:// or http:// scheme.

The providerConfig.caches[].http.tls field indicates whether TLS is enabled for the HTTP server of the registry cache. Defaults to true.

The providerConfig.caches[].highAvailability.enabled defines if the registry cache is scaled with the high availability feature. See the High Availability section for more details.
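The proxy and http fields do not appear in the example Shoot spec. The following is a minimal sketch of how they might be set for a single cache. The field names are the ones documented above; the proxy address proxy.example.internal:3128 is a made-up value for illustration only.

```yaml
# Fragment of the registry-cache providerConfig (not a complete Shoot manifest).
apiVersion: registry.extensions.gardener.cloud/v1alpha3
kind: RegistryConfig
caches:
- upstream: docker.io
  proxy:
    # Hypothetical proxy endpoints; both values must carry an http:// or https:// scheme.
    httpProxy: http://proxy.example.internal:3128
    httpsProxy: http://proxy.example.internal:3128
  http:
    # TLS for the HTTP server of the registry cache; true is the documented default.
    tls: true
```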

Garbage Collection

When the registry cache receives a request for an image that is not present in its local store, it fetches the image from the upstream, returns it to the client and stores the image in the local store. The registry cache runs a scheduler that deletes images when their time to live (ttl) expires. When adding an image to the local store, the registry cache also adds a time to live for the image. The ttl defaults to 168h (7 days) and is configurable. The garbage collection can be disabled by setting the ttl to 0s. Requesting an image from the registry cache does not extend the time to live of the image. Hence, an image is always garbage collected from the registry cache store when its ttl expires.

At the time of writing this document, there is no functionality for garbage collection based on disk size - e.g., garbage collecting images when a certain disk usage threshold is passed.

The garbage collection cannot be enabled once it is disabled. This constraint is added to mitigate distribution/distribution#4249.
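As a rough illustration of the ttl semantics described in this section, the fragment below configures three caches with different garbage collection settings. The value 72h is an arbitrary example, not a recommendation.

```yaml
# Fragment of the registry-cache providerConfig.
caches:
- upstream: docker.io
  garbageCollection:
    ttl: 72h        # example value: blobs expire 72 hours after being stored
- upstream: quay.io
  garbageCollection:
    ttl: 0s         # garbage collection disabled; it cannot be enabled again later
- upstream: ghcr.io # no garbageCollection block: the default of 168h (7 days) applies
```

Because requesting an image does not extend its time to live, a shorter ttl means that frequently used images are re-fetched from the upstream more often.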

Increase the Cache Disk Size

When there is no available disk space, the registry cache continues to respond to requests. However, it cannot store the remotely fetched images locally because it has no free disk space. In such a case, it simply acts as a proxy without being able to cache the images in its local store. The disk has to be resized to ensure that the registry cache continues to cache images.

There are two alternatives to enlarge the cache’s disk size:

[Alternative 1] Resize the PVC

To enlarge the PVC’s size, perform the following steps:

Make sure that the KUBECONFIG environment variable is targeting the correct Shoot cluster.

Find the PVC name to resize for the desired upstream. The below example fetches the PVC for the docker.io upstream:

kubectl -n kube-system get pvc -l upstream-host=docker.io

Patch the PVC’s size to the desired size. The below example patches the size of a PVC to 10Gi:

kubectl -n kube-system patch pvc $PVC_NAME --type merge -p '{"spec":{"resources":{"requests": {"storage": "10Gi"}}}}'

Make sure that the PVC gets resized. Describe the PVC to check the resize operation result:

kubectl -n kube-system describe pvc -l upstream-host=docker.io

Drawback of this approach: The cache’s size in the Shoot spec (providerConfig.caches[].volume.size) diverges from the PVC’s size.
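The merge patch used in the steps above can also be kept in a file. The following is a minimal sketch, assuming a hypothetical file name pvc-resize-patch.yaml and a target size of 20Gi chosen purely for illustration.

```yaml
# pvc-resize-patch.yaml (hypothetical file name)
# Same structure as the inline JSON merge patch, expressed as YAML.
spec:
  resources:
    requests:
      storage: 20Gi  # illustrative target size; must be larger than the current size
```

Such a file could be passed to kubectl patch with the --patch-file flag instead of -p. The resize only succeeds if the StorageClass of the PVC allows volume expansion.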

[Alternative 2] Remove and Readd the Cache

There is always the option to remove the cache from the Shoot spec and to re-add it with the updated size.

Drawback of this approach: The already cached images get lost and the cache starts with an empty disk.

High Availability

By default the registry cache runs with a single replica. This fact may lead to concerns for the high availability such as “What happens when the registry cache is down? Does containerd fail to pull the image?”. As outlined in the How does it work? section, containerd is configured to fall back to the upstream registry if it fails to pull the image from the registry cache. Hence, when the registry cache is unavailable, the containerd’s image pull operations are not affected because containerd falls back to image pull from the upstream registry.

In special cases where this is not enough, it is possible to set providerConfig.caches[].highAvailability.enabled to true. This will add the label high-availability-config.resources.gardener.cloud/type=server to the StatefulSet and it will be scaled to 2 replicas. Appropriate Pod Topology Spread Constraints will be added to the registry cache Pods according to the Shoot cluster configuration. See also High Availability of Deployed Components. Note that each registry cache replica uses its own volume, so each registry cache replica pulls the image from the upstream and stores it in its own volume.
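A minimal sketch of a cache with the high availability feature turned on, using only fields documented in this guide (the volume size is an example value):

```yaml
# Fragment of the registry-cache providerConfig.
caches:
- upstream: docker.io
  volume:
    size: 100Gi     # each of the 2 replicas gets its own volume of this size
  highAvailability:
    enabled: true   # the StatefulSet is scaled to 2 replicas
```

Since the replicas do not share storage, the total disk consumption and the upstream traffic for a given image can be up to twice that of a single-replica cache.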

Possible Pitfalls

- The used registry implementation (the Distribution project) supports mirroring of only one upstream registry. The extension deploys a pull-through cache for each configured upstream.

us-docker.pkg.dev, europe-docker.pkg.dev, and asia-docker.pkg.dev are different upstreams. Hence, configuring pkg.dev as upstream won’t cache images from us-docker.pkg.dev, europe-docker.pkg.dev, or asia-docker.pkg.dev.
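To cache images from all three regional hosts mentioned above, each host would need its own cache entry. A minimal sketch:

```yaml
# Fragment of the registry-cache providerConfig.
# One pull-through cache is deployed per entry; "pkg.dev" alone would match none of these hosts.
caches:
- upstream: us-docker.pkg.dev
- upstream: europe-docker.pkg.dev
- upstream: asia-docker.pkg.dev
```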

Limitations

Images that are pulled before a registry cache Pod is running or before a registry cache Service is reachable from the corresponding Node won’t be cached - containerd will pull these images directly from the upstream.

The reasoning behind this limitation is that a registry cache Pod is running in the Shoot cluster. To have a registry cache’s Service cluster IP reachable from containerd running on the Node, the registry cache Pod has to be running and kube-proxy has to configure iptables/IPVS rules for the registry cache Service. If kube-proxy hasn’t configured iptables/IPVS rules for the registry cache Service, then the image pull times (and new Node bootstrap times) will be increased significantly. For more detailed explanations, see the limitation on containerd request timeouts below and gardener/gardener-extension-registry-cache#68.

That’s why the registry configuration on a Node is applied only after the registry cache Service is reachable from the Node. The gardener-node-agent.service systemd unit sends requests to the registry cache’s Service. Once the registry cache responds with HTTP 200, the unit creates the needed registry configuration file (hosts.toml).

As a result, for images from Shoot system components:

- On Shoot creation with the registry cache extension enabled, a registry cache is unable to cache all of the images from the Shoot system components. Usually, until the registry cache Pod is running, containerd pulls from upstream the images from Shoot system components (before the registry configuration gets applied).

- On new Node creation for existing Shoot with the registry cache extension enabled, a registry cache is unable to cache most of the images from Shoot system components. The reachability of the registry cache Service requires the Service network to be set up, i.e., the kube-proxy for that new Node to be running and to have set up iptables/IPVS configuration for the registry cache Service.

containerd requests will time out in 30s in case kube-proxy hasn’t configured iptables/IPVS rules for the registry cache Service - the image pull times will increase significantly.

containerd is configured to fall back to the upstream itself if a request against the cache fails. However, if the cluster IP of the registry cache Service does not exist or if kube-proxy hasn’t configured iptables/IPVS rules for the registry cache Service, then containerd requests against the registry cache time out in 30 seconds. This significantly increases the image pull times because containerd makes multiple requests as part of the image pull (a HEAD request to resolve the manifest by tag, a GET request for the manifest by SHA, and GET requests for blobs).

Example: If the Service of a registry cache is deleted, then a new Service will be created. containerd’s registry config will still contain the old Service’s cluster IP. containerd requests against the old Service’s cluster IP will time out and containerd will fall back to upstream.

- Image pull of docker.io/library/alpine:3.13.2 from the upstream takes ~2s while image pull of the same image with invalid registry cache cluster IP takes ~2m.2s.

- Image pull of eu.gcr.io/gardener-project/gardener/ops-toolbelt:0.18.0 from the upstream takes ~10s while image pull of the same image with invalid registry cache cluster IP takes ~3m.10s.

Amazon Elastic Container Registry is currently not supported. For details see distribution/distribution#4383.

2 - Configuring the Registry Mirror Extension

Learn about the use case for a registry mirror and how to enable and configure it

Configuring the Registry Mirror Extension

Introduction

Use Case

containerd allows registry mirrors to be configured. Use cases are:

- Usage of public mirror(s) - for example, circumvent issues with the upstream registry such as rate limiting, outages, and others.

- Usage of private mirror(s) - for example, reduce network costs by using a private mirror running in the same network.

Solution

The registry-mirror extension allows registry mirrors to be configured directly via the Shoot spec.

How does it work?

When the extension is enabled, the containerd daemon on the Shoot cluster Nodes is configured to use the requested mirrors. For example, if for the upstream docker.io the mirror https://mirror.gcr.io is configured in the Shoot spec, then containerd is configured to first pull the image from the mirror (https://mirror.gcr.io in that case). If this image pull operation fails, containerd falls back to the upstream itself (docker.io in that case).

The extension is based on the contract described in containerd Registry Configuration. The corresponding upstream documentation in containerd is Registry Configuration - Introduction.

Shoot Configuration

The Shoot specification has to be adapted to include the registry-mirror extension configuration.

Below is an example of registry-mirror extension configuration as part of the Shoot spec:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
metadata:
  name: crazy-botany
  namespace: garden-dev
spec:
  extensions:
  - type: registry-mirror
    providerConfig:
      apiVersion: mirror.extensions.gardener.cloud/v1alpha1
      kind: MirrorConfig
      mirrors:
      - upstream: docker.io
        hosts:
        - host: "https://mirror.gcr.io"
          capabilities: ["pull"]

The providerConfig field is required.

The providerConfig.mirrors field contains information about the registry mirrors to configure. It is a required field. At least one mirror has to be specified.

The providerConfig.mirrors[].upstream field is the remote registry host to mirror. It is a required field.

The value must be a valid DNS subdomain (RFC 1123) and optionally a port (i.e. <host>[:<port>]). It must not include a scheme.

The providerConfig.mirrors[].hosts field represents the mirror hosts to be used for the upstream. At least one mirror host has to be specified.

The providerConfig.mirrors[].hosts[].host field is the mirror host. It is a required field.

The value must include a scheme - http:// or https://.

The providerConfig.mirrors[].hosts[].capabilities field represents the operations a host is capable of performing. This also represents the set of operations that the mirror host may be trusted to perform. Defaults to ["pull"]. The supported values are pull and resolve.

See the capabilities field documentation for more information on which operations are considered trusted ones against public/private mirrors.
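A minimal sketch of a mirror configuration with more than one host and with both supported capabilities. The host https://mirror.example.internal is a hypothetical private mirror used only for illustration. Whether a given mirror should be trusted with resolve is a decision for the cluster owner.

```yaml
# Fragment: the providerConfig of the registry-mirror extension.
apiVersion: mirror.extensions.gardener.cloud/v1alpha1
kind: MirrorConfig
mirrors:
- upstream: docker.io
  hosts:
  - host: "https://mirror.gcr.io"            # public mirror, limited to the default capability
    capabilities: ["pull"]
  - host: "https://mirror.example.internal"  # hypothetical private mirror
    capabilities: ["pull", "resolve"]
```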

3 - Deploying Registry Cache Extension in Gardener's Local Setup with Provider Extensions

Learn how to set up a development environment using your own Seed clusters on an existing Kubernetes cluster

Deploying Registry Cache Extension in Gardener’s Local Setup with Provider Extensions

Prerequisites

Setting up the Registry Cache Extension

Make sure that your KUBECONFIG environment variable is targeting the local Gardener cluster.

The location of the Gardener project from the Gardener setup step is expected to be under the same root (e.g. ~/go/src/github.com/gardener/). If this is not the case, the location of Gardener project should be specified in GARDENER_REPO_ROOT environment variable:

export GARDENER_REPO_ROOT="<path_to_gardener_project>"

Then you can run:

make remote-extension-up

In case you have added additional Seeds you can specify the seed name:

make remote-extension-up SEED_NAME=<seed-name>

The corresponding make target will build the extension image, push it into the Seed cluster image registry, and deploy the registry-cache ControllerDeployment and ControllerRegistration resources into the kind cluster.

The container image in the ControllerDeployment will be the image that was built and pushed into the Seed cluster image registry.

The make target will then deploy the registry-cache admission component. It will build the admission image, push it into the kind cluster image registry, and finally install the admission component charts to the kind cluster.

Creating a Shoot Cluster

Once the above step is completed, you can create a Shoot cluster. In order to create a Shoot cluster, please create your own Shoot definition depending on providers on your Seed cluster.

Tearing Down the Development Environment

To tear down the development environment, delete the Shoot cluster or disable the registry-cache extension in the Shoot’s specification. When the extension is not used by the Shoot anymore, you can run:

make remote-extension-down

The make target will delete the ControllerDeployment and ControllerRegistration of the extension, and the registry-cache admission helm deployment.

4 - Deploying Registry Cache Extension Locally

Learn how to set up a local development environment

Deploying Registry Cache Extension Locally

Prerequisites

Setting up the Registry Cache Extension

Make sure that your KUBECONFIG environment variable is targeting the local Gardener cluster. When this is ensured, run:

The corresponding make target will build the extension image, load it into the kind cluster Nodes, and deploy the registry-cache ControllerDeployment and ControllerRegistration resources. The container image in the ControllerDeployment will be the image that was built and loaded into the kind cluster Nodes.

The make target will then deploy the registry-cache admission component. It will build the admission image, load it into the kind cluster Nodes, and finally install the admission component charts to the kind cluster.

Creating a Shoot Cluster

Once the above step is completed, you can create a Shoot cluster.

example/shoot-registry-cache.yaml contains a Shoot specification with the registry-cache extension:

kubectl create -f example/shoot-registry-cache.yaml

example/shoot-registry-mirror.yaml contains a Shoot specification with the registry-mirror extension:

kubectl create -f example/shoot-registry-mirror.yaml

Tearing Down the Development Environment

To tear down the development environment, delete the Shoot cluster or disable the registry-cache extension in the Shoot’s specification. When the extension is not used by the Shoot anymore, you can run:

The make target will delete the ControllerDeployment and ControllerRegistration of the extension, and the registry-cache admission helm deployment.

Alternative Setup Using the gardener-operator Local Setup

Alternatively, you can deploy the registry-cache extension in the gardener-operator local setup. To do this, make sure you have a running local setup based on Alternative Way to Set Up Garden and Seed Leveraging gardener-operator. The KUBECONFIG environment variable should target the operator local KinD cluster (i.e. <path_to_gardener_project>/example/gardener-local/kind/operator/kubeconfig).

Creating the registry-cache Extension.operator.gardener.cloud resource:

make extension-operator-up

The corresponding make target will build the registry-cache admission and extension container images, OCI artifacts for the admission runtime and application charts, and the extension chart. Then, the container images and the OCI artifacts are pushed into the default skaffold registry (i.e. garden.local.gardener.cloud:5001). Next, the registry-cache Extension.operator.gardener.cloud resource is deployed into the KinD cluster. Based on this resource the gardener-operator will deploy the registry-cache admission component, as well as the registry-cache ControllerDeployment and ControllerRegistration resources.

Creating a Shoot Cluster

To create a Shoot cluster, the KUBECONFIG environment variable should target the virtual garden cluster (i.e. <path_to_gardener_project>/example/operator/virtual-garden/kubeconfig). Then execute:

kubectl create -f example/shoot-registry-cache.yaml

Delete the registry-cache Extension.operator.gardener.cloud resource

Make sure the environment variable KUBECONFIG points to the operator’s local KinD cluster and then run:

make extension-operator-down

The corresponding make target will delete the Extension.operator.gardener.cloud resource. Consequently, the gardener-operator will delete the registry-cache admission component and registry-cache ControllerDeployment and ControllerRegistration resources.

5 - Developer Docs for Gardener Extension Registry Cache

Learn about the inner workings

Developer Docs for Gardener Extension Registry Cache

This document outlines how Shoot reconciliation and deletion works for a Shoot with the registry-cache extension enabled.

Shoot Reconciliation

This section outlines how the reconciliation works for a Shoot with the registry-cache extension enabled.

Extension Enablement / Reconciliation

This section outlines how the extension enablement/reconciliation works, e.g., the extension has been added to the Shoot spec.

- As part of the Shoot reconciliation flow, the gardenlet deploys the Extension resource.

- The registry-cache extension reconciles the Extension resource. pkg/controller/cache/actuator.go contains the implementation of the extension.Actuator interface. The reconciliation of an Extension of type registry-cache consists of the following steps:

  - The registry-cache extension deploys resources to the Shoot cluster via ManagedResource. For every configured upstream, it creates a StatefulSet (with PVC), Service, and other resources.

  - It lists all Services from the kube-system namespace that have the upstream-host label. It will return an error (and retry in exponential backoff) until the Services count matches the configured registries count.

  - When there is a Service created for each configured upstream registry, the registry-cache extension populates the Extension resource status. In the Extension status, for each upstream, it maintains an endpoint (in the format http://<cluster-ip>:5000) which can be used to access the registry cache from within the Shoot cluster. <cluster-ip> is the cluster IP of the registry cache Service. The cluster IP of a Service is assigned by the Kubernetes API server on Service creation.

- As part of the Shoot reconciliation flow, the gardenlet deploys the OperatingSystemConfig resource.

- The registry-cache extension serves a webhook that mutates the OperatingSystemConfig resource for Shoots having the registry-cache extension enabled (the corresponding namespace gets labeled by the gardenlet with extensions.gardener.cloud/registry-cache=true). pkg/webhook/cache/ensurer.go contains an implementation of the genericmutator.Ensurer interface.

  - The webhook appends or updates RegistryConfig entries in the OperatingSystemConfig CRI configuration that corresponds to configured registry caches in the Shoot. The RegistryConfig readiness probe is enabled so that gardener-node-agent creates a hosts.toml containerd registry configuration file when all RegistryConfig hosts are reachable.
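To illustrate the reconciliation step that records one endpoint per upstream in the Extension status, the fragment below sketches what such a status might look like. Only the endpoint format http://<cluster-ip>:5000 is taken from this document. The field names, the kind name, and the IP addresses are assumptions made for illustration and may not match the actual API.

```yaml
# Illustrative sketch only: field names and the kind are assumed, not confirmed by this document.
status:
  providerStatus:
    apiVersion: registry.extensions.gardener.cloud/v1alpha3
    kind: RegistryStatus                 # assumed kind name
    caches:
    - upstream: docker.io
      endpoint: http://10.4.0.10:5000    # http://<cluster-ip>:5000 with a made-up cluster IP
    - upstream: ghcr.io
      endpoint: http://10.4.0.11:5000    # made-up cluster IP
```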

Extension Disablement

This section outlines how the extension disablement works, i.e., the extension has been removed from the Shoot spec.

- As part of the Shoot reconciliation flow, the gardenlet destroys the Extension resource because it is no longer needed.

- The extension deletes the ManagedResource containing the registry cache resources.

- The OperatingSystemConfig resource will not be mutated and no RegistryConfig entries will be added or updated. The gardener-node-agent detects that RegistryConfig entries have been removed or changed and deletes or updates corresponding hosts.toml configuration files under /etc/containerd/certs.d folder.

Shoot Deletion

This section outlines how the deletion works for a Shoot with the registry-cache extension enabled.

- As part of the Shoot deletion flow, the gardenlet destroys the Extension resource.

- The extension deletes the ManagedResource containing the registry cache resources.

6 - How to provide credentials for upstream registry?

How to provide credentials for upstream registry?

In Kubernetes, to pull images from private container image registries you either have to specify an image pull Secret (see Pull an Image from a Private Registry) or you have to configure the kubelet to dynamically retrieve credentials using a credential provider plugin (see Configure a kubelet image credential provider). When pulling an image, the kubelet is providing the credentials to the CRI implementation. The CRI implementation uses the provided credentials against the upstream registry to pull the image.

The registry-cache extension is using the Distribution project as pull through cache implementation. The Distribution project does not use the provided credentials from the CRI implementation while fetching an image from the upstream. Hence, the above-described scenarios such as configuring image pull Secret for a Pod or configuring kubelet credential provider plugins don’t work out of the box with the pull through cache provided by the registry-cache extension.

Instead, the Distribution project supports configuring only one set of credentials for a given pull through cache instance (for a given upstream).

This document describes how to supply credentials for the private upstream registry in order to pull private images with the registry cache.

Create an immutable Secret with the upstream registry credentials in the Garden cluster:

kubectl create -f - <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ro-docker-secret-v1
  namespace: garden-dev
type: Opaque
immutable: true
data:
  username: $(echo -n "$USERNAME" | base64 -w0)
  password: $(echo -n "$PASSWORD" | base64 -w0)
EOF

For Artifact Registry, the username is _json_key and the password is the service account key in JSON format. To base64 encode the service account key, copy it and run:

echo -n "$SERVICE_ACCOUNT_KEY_JSON" | base64 -w0

Add the newly created Secret as a reference to the Shoot spec, and then to the registry-cache extension configuration.

In the registry-cache configuration, set the secretReferenceName field. It should point to a resource reference under spec.resources. The resource reference itself points to the Secret in project namespace.

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
# ...
spec:
  extensions:
  - type: registry-cache
    providerConfig:
      apiVersion: registry.extensions.gardener.cloud/v1alpha3
      kind: RegistryConfig
      caches:
      - upstream: docker.io
        secretReferenceName: docker-secret
  # ...
  resources:
  - name: docker-secret
    resourceRef:
      apiVersion: v1
      kind: Secret
      name: ro-docker-secret-v1
# ...

Do not delete the referenced Secret when there is a Shoot still using it.

How to rotate the registry credentials?

To rotate registry credentials perform the following steps:

- Generate a new pair of credentials in the cloud provider account. Do not invalidate the old ones.

- Create a new Secret (e.g., ro-docker-secret-v2) with the newly generated credentials as described in step 1. in How to configure the registry cache to use upstream registry credentials?.

- Update the Shoot spec with newly created Secret as described in step 2. in How to configure the registry cache to use upstream registry credentials?.

- The above step will trigger a Shoot reconciliation. Wait for it to complete.

- Make sure that the old Secret is no longer referenced by any Shoot cluster. Finally, delete the Secret containing the old credentials (e.g., ro-docker-secret-v1).

- Delete the corresponding old credentials from the cloud provider account.
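As a sketch of the Shoot spec update during rotation, only the name under resourceRef needs to change, assuming the new Secret is called ro-docker-secret-v2 as in the example above. The resource reference name used by the cache can stay the same.

```yaml
apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
# ...
spec:
  extensions:
  - type: registry-cache
    providerConfig:
      apiVersion: registry.extensions.gardener.cloud/v1alpha3
      kind: RegistryConfig
      caches:
      - upstream: docker.io
        secretReferenceName: docker-secret   # unchanged
  # ...
  resources:
  - name: docker-secret
    resourceRef:
      apiVersion: v1
      kind: Secret
      name: ro-docker-secret-v2              # previously ro-docker-secret-v1
```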

Possible Pitfalls

- The registry cache is not protected by any authentication/authorization mechanism. The cached images (incl. private images) can be fetched from the registry cache without authentication/authorization. Note that the registry cache itself is not exposed publicly.

- The registry cache provides the credentials for every request against the corresponding upstream. In some cases, misconfigured credentials can prevent the registry cache from pulling even public images from the upstream (for example: invalid service account key for Artifact Registry). However, this behaviour is controlled by the server-side logic of the upstream registry.

- Do not remove the image pull Secrets when configuring credentials for the registry cache. When the registry-cache is not available, containerd falls back to the upstream registry. containerd still needs the image pull Secret to pull the image and in this way to have the fallback mechanism working.

7 - Observability

Registry Cache Observability

The registry-cache extension exposes metrics for the registry caches running in the Shoot cluster so that they can be easily viewed by cluster owners and operators in the Shoot’s Prometheus and Plutono instances. The exposed monitoring data provides an overview of the performance of the pull-through caches, including hit rate and network traffic data.

Metrics

A registry cache serves several metrics. The metrics are scraped by the Shoot’s Prometheus instance.

The Registry Caches dashboard in the Shoot’s Plutono instance contains several panels which are built using the registry cache metrics. From the Registry dropdown menu you can select the upstream for which you wish the metrics to be displayed (by default, metrics are summed for all upstream registries).

The following is a list of all exposed registry cache metrics. The upstream_host label can be used to determine the upstream host to which the metrics are related, while the type label can be used to determine whether the metric is for an image blob or an image manifest:

registry_proxy_requests_total

The total number of incoming requests received.

- Type: Counter

- Labels: upstream_host, type

registry_proxy_hits_total

The total number of cache hits; i.e. the requested content exists in the registry cache’s image store and it is served from there (upstream is not contacted at all for serving the requested content).

- Type: Counter

- Labels: upstream_host, type

registry_proxy_misses_total

The total number of cache misses; i.e. the requested content does not exist in the registry cache’s image store and it is fetched from the upstream.

- Type: Counter

- Labels: upstream_host, type

registry_proxy_pulled_bytes_total

The total number of bytes that the registry cache has pulled from the upstream.

- Type: Counter

- Labels: upstream_host, type

registry_proxy_pushed_bytes_total

The total number of bytes pushed to the registry cache’s clients.

- Type: Counter

- Labels: upstream_host, type
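The hit and request counters can be combined into a hit ratio per upstream. The following is a minimal sketch of a Prometheus recording rule for any Prometheus instance that scrapes these metrics. The group name, the rule name, and the 5m window are arbitrary choices and not part of the extension; whether custom rules can be loaded into the Shoot’s Prometheus instance is not covered here.

```yaml
# Hypothetical Prometheus rule file; names and the rate window are illustrative.
groups:
- name: registry-cache-hit-ratio
  rules:
  - record: registry_cache:hit_ratio:rate5m
    # Fraction of incoming requests served from the cache, per upstream host,
    # summed over blob and manifest requests.
    expr: |
      sum by (upstream_host) (rate(registry_proxy_hits_total[5m]))
      /
      sum by (upstream_host) (rate(registry_proxy_requests_total[5m]))
```

A ratio close to 1 indicates that most content is served from the cache, while a persistently low ratio can point to a ttl that is too short or a volume that is full.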

Alerts

There are two alerts defined for the registry cache PersistentVolume in the Shoot’s Prometheus instance:

RegistryCachePersistentVolumeUsageCritical

This indicates that the registry cache PersistentVolume is almost full and less than 5% is free. When there is no available disk space, no new images will be cached. However, image pull operations are not affected. An alert is fired when the following expression evaluates to true:

100 * (
  kubelet_volume_stats_available_bytes{persistentvolumeclaim=~"^cache-volume-registry-.+$"}
  /
  kubelet_volume_stats_capacity_bytes{persistentvolumeclaim=~"^cache-volume-registry-.+$"}
) < 5

RegistryCachePersistentVolumeFullInFourDays

This indicates that the registry cache PersistentVolume is expected to fill up within four days based on recent sampling. An alert is fired when the following expression evaluates to true:

100 * (
  kubelet_volume_stats_available_bytes{persistentvolumeclaim=~"^cache-volume-registry-.+$"}
  /
  kubelet_volume_stats_capacity_bytes{persistentvolumeclaim=~"^cache-volume-registry-.+$"}
) < 15
and
predict_linear(kubelet_volume_stats_available_bytes{persistentvolumeclaim=~"^cache-volume-registry-.+$"}[30m], 4 * 24 * 3600) <= 0

Users can subscribe to these alerts by following the Gardener alerting guide.
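As a rough sketch of what subscribing can look like, the fragment below assumes that the alerting guide relies on the email receivers list in the Shoot’s monitoring settings. The address is a placeholder, and the alerting guide remains the authoritative description of the available options.

```yaml
apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
# ...
spec:
  monitoring:
    alerting:
      emailReceivers:
      - john.doe@example.com   # placeholder address
```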

Logging

To view the registry cache logs in Plutono, navigate to the Explore tab and select vali from the Explore dropdown menu. Afterwards enter the following vali query:

- {container_name="registry-cache"} to view the logs for all registries.

- {pod_name=~"registry-<upstream_host>.+"} to view the logs for a specific upstream registry.