This is the multi-page printable view of this section. Click here to print.

Concepts

1 - APIServer Admission Plugins

Overview

Similar to the kube-apiserver, the gardener-apiserver comes with a few in-tree managed admission plugins. If you want to get an overview of the what and why of admission plugins then this document might be a good start.

This document lists all existing admission plugins with a short explanation of what it is responsible for.

BackupBucketValidator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for BackupBucketss.

When the backup bucket is using WorkloadIdentity as backup credentials, the plugin ensures the backup bucket and the workload identity have the same provider type, i.e. backupBucket.spec.provider.type and workloadIdentity.spec.targetSystem.type have the same value.

ClusterOpenIDConnectPreset, OpenIDConnectPreset

(both enabled by default)

These admission controllers react on CREATE operations for Shoots.

If the Shoot does not specify any OIDC configuration (.spec.kubernetes.kubeAPIServer.oidcConfig=nil), then it tries to find a matching ClusterOpenIDConnectPreset or OpenIDConnectPreset, respectively.

If there are multiple matches, then the one with the highest weight “wins”.

In this case, the admission controller will default the OIDC configuration in the Shoot.

ControllerRegistrationResources

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for ControllerRegistrations.

It validates that there exists only one ControllerRegistration in the system that is primarily responsible for a given kind/type resource combination.

This prevents misconfiguration by the Gardener administrator/operator.

CustomVerbAuthorizer

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Projects and NamespacedCloudProfiles.

For Projects it validates whether the user is bound to an RBAC role with the modify-spec-tolerations-whitelist verb in case the user tries to change the .spec.tolerations.whitelist field of the respective Project resource.

Usually, regular project members are not bound to this custom verb, allowing the Gardener administrator to manage certain toleration whitelists on Project basis.

For NamespacedCloudProfiles, the modification of specific fields also require the user to be bound to an RBAC role with custom verbs.

Please see this document for more information.

DeletionConfirmation

(enabled by default)

This admission controller reacts on DELETE operations for Projects, Shoots, and ShootStates.

It validates that the respective resource is annotated with a deletion confirmation annotation, namely confirmation.gardener.cloud/deletion=true.

Only if this annotation is present it allows the DELETE operation to pass.

This prevents users from accidental/undesired deletions.

In addition, it applies the “four-eyes principle for deletion” concept if the Project is configured accordingly.

Find all information about it in this document.

Furthermore, this admission controller reacts on CREATE or UPDATE operations for Shoots.

It makes sure that the deletion.gardener.cloud/confirmed-by annotation is properly maintained in case the Shoot deletion is confirmed with above mentioned annotation.

ExposureClass

(enabled by default)

This admission controller reacts on Create operations for Shoots.

It mutates Shoot resources which have an ExposureClass referenced by merging both their shootSelectors and/or tolerations into the Shoot resource.

ExtensionValidator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for BackupEntrys, BackupBuckets, Seeds, and Shoots.

For all the various extension types in the specifications of these objects, it validates whether there exists a ControllerRegistration in the system that is primarily responsible for the stated extension type(s).

This prevents misconfigurations that would otherwise allow users to create such resources with extension types that don’t exist in the cluster, effectively leading to failing reconciliation loops.

ExtensionLabels

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for BackupBuckets, BackupEntrys, CloudProfiles, NamespacedCloudProfiles, Seeds, SecretBindings, CredentialsBindings, WorkloadIdentitys and Shoots. For all the various extension types in the specifications of these objects, it adds a corresponding label in the resource. This would allow extension admission webhooks to filter out the resources they are responsible for and ignore all others. This label is of the form <extension-type>.extensions.gardener.cloud/<extension-name> : "true". For example, an extension label for provider extension type aws, looks like provider.extensions.gardener.cloud/aws : "true".

FinalizerRemoval

(enabled by default)

This admission controller reacts on UPDATE operations for CredentialsBindings, SecretBindings, Shoots.

It ensures that the finalizers of these resources are not removed by users, as long as the affected resource is still in use.

For CredentialsBindings and SecretBindings this means, that the gardener finalizer can only be removed if the binding is not referenced by any Shoot.

In case of Shoots, the gardener finalizer can only be removed if the last operation of the Shoot indicates a successful deletion.

ProjectValidator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Projects.

It prevents creating Projects with a non-empty .spec.namespace if the value in .spec.namespace does not start with garden-.

In addition, the project specification is initialized during creation:

.spec.createdByis set to the user creating the project..spec.ownerdefaults to the value of.spec.createdByif it is not specified.

During subsequent updates, it ensures that the project owner is included in the .spec.members list.

ResourceQuota

(enabled by default)

This admission controller enables object count ResourceQuotas for Gardener resources, e.g. Shoots, SecretBindings, Projects, etc.

⚠️ In addition to this admission plugin, the ResourceQuota controller must be enabled for the Kube-Controller-Manager of your Garden cluster.

ResourceReferenceManager

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for CloudProfiles, Projects, SecretBindings, Seeds, and Shoots.

Generally, it checks whether referred resources stated in the specifications of these objects exist in the system (e.g., if a referenced Secret exists).

However, it also has some special behaviours for certain resources:

CloudProfiles: It rejects removing Kubernetes or machine image versions if there is at least oneShootthat refers to them.

SeedValidator

(enabled by default)

This admission controller reacts on CREATE, UPDATE, and DELETE operations for Seeds.

Rejects the deletion if Shoot(s) reference the seed cluster.

While the seed is still used by Shoot(s), the plugin disallows removal of entries from the seed.spec.provider.zones field.

When the seed is using WorkloadIdentity as backup credentials, the plugin ensures the seed backup and the workload identity have the same provider type, i.e. seed.spec.backup.provider and workloadIdentity.spec.targetSystem.type have the same value.

SeedMutator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Seeds.

It maintains the name.seed.gardener.cloud/<name> labels for it.

More specifically, it adds that the name.seed.gardener.cloud/<name>=true label where <name> is

- the name of the

Seedresource (aSeednamedfoowill get labelname.seed.gardener.cloud/foo=true). - the name of the parent

Seedresource in case it is aManagedSeed(aSeednamedfoothat is created by aManagedSeedwhich references aShootrunning aSeedcalledbarwill get labelname.seed.gardener.cloud/bar=true).

ShootDNS

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Shoots.

It tries to assign a default domain to the Shoot.

It also validates the DNS configuration (.spec.dns) for shoots.

ShootNodeLocalDNSEnabledByDefault

(disabled by default)

This admission controller reacts on CREATE operations for Shoots.

If enabled, it will enable node local dns within the shoot cluster (for more information, see NodeLocalDNS Configuration) by setting spec.systemComponents.nodeLocalDNS.enabled=true for newly created Shoots.

Already existing Shoots and new Shoots that explicitly disable node local dns (spec.systemComponents.nodeLocalDNS.enabled=false)

will not be affected by this admission plugin.

ShootQuotaValidator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Shoots.

It validates the resource consumption declared in the specification against applicable Quota resources.

Only if the applicable Quota resources admit the configured resources in the Shoot then it allows the request.

Applicable Quotas are referred in the SecretBinding that is used by the Shoot.

ShootResourceReservation

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Shoots.

It injects the Kubernetes.Kubelet.KubeReserved setting for kubelet either as global setting for a shoot or on a per worker pool basis.

If the admission configuration (see this example) for the ShootResourceReservation plugin contains useGKEFormula: false (the default), then it sets a static default resource reservation for the shoot.

If useGKEFormula: true is set, then the plugin injects resource reservations based on the machine type similar to GKE’s formula for resource reservation into each worker pool.

Already existing resource reservations are not modified; this also means that resource reservations are not automatically updated if the machine type for a worker pool is changed.

If a shoot contains global resource reservations, then no per worker pool resource reservations are injected.

By default, useGKEFormula: true applies to all Shoots.

Operators can provide an optional label selector via the selector field to limit which Shoots get worker specific resource reservations injected.

ShootVPAEnabledByDefault

(disabled by default)

This admission controller reacts on CREATE operations for Shoots.

If enabled, it will enable the managed VerticalPodAutoscaler components (for more information, see Vertical Pod Auto-Scaling)

by setting spec.kubernetes.verticalPodAutoscaler.enabled=true for newly created Shoots.

Already existing Shoots and new Shoots that explicitly disable VPA (spec.kubernetes.verticalPodAutoscaler.enabled=false)

will not be affected by this admission plugin.

ShootTolerationRestriction

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for Shoots.

It validates the .spec.tolerations used in Shoots against the whitelist of its Project, or against the whitelist configured in the admission controller’s configuration, respectively.

Additionally, it defaults the .spec.tolerations in Shoots with those configured in its Project, and those configured in the admission controller’s configuration, respectively.

ShootValidator

(enabled by default)

This admission controller reacts on CREATE, UPDATE and DELETE operations for Shoots.

It validates certain configurations in the specification against the referred CloudProfile (e.g., machine images, machine types, used Kubernetes version, …).

Generally, it performs validations that cannot be handled by the static API validation due to their dynamic nature (e.g., when something needs to be checked against referred resources).

Additionally, it takes over certain defaulting tasks (e.g., default machine image for worker pools, default Kubernetes version) and setting the gardener.cloud/created-by=<username> annotation for newly created Shoot resources.

ShootManagedSeed

(enabled by default)

This admission controller reacts on UPDATE and DELETE operations for Shoots.

It validates certain configuration values in the specification that are specific to ManagedSeeds (e.g. the nginx-addon of the Shoot has to be disabled, the Shoot VPA has to be enabled).

It rejects the deletion if the Shoot is referred to by a ManagedSeed.

ManagedSeedValidator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for ManagedSeedss.

It validates certain configuration values in the specification against the referred Shoot, for example Seed provider, network ranges, DNS domain, etc.

Similar to ShootValidator, it performs validations that cannot be handled by the static API validation due to their dynamic nature.

Additionally, it performs certain defaulting tasks, making sure that configuration values that are not specified are defaulted to the values of the referred Shoot, for example Seed provider, network ranges, DNS domain, etc.

ManagedSeedShoot

(enabled by default)

This admission controller reacts on DELETE operations for ManagedSeeds.

It rejects the deletion if there are Shoots that are scheduled onto the Seed that is registered by the ManagedSeed.

ShootDNSRewriting

(disabled by default)

This admission controller reacts on CREATE operations for Shoots.

If enabled, it adds a set of common suffixes configured in its admission plugin configuration to the Shoot (spec.systemComponents.coreDNS.rewriting.commonSuffixes) (for more information, see DNS Search Path Optimization).

Already existing Shoots will not be affected by this admission plugin.

NamespacedCloudProfileValidator

(enabled by default)

This admission controller reacts on CREATE and UPDATE operations for NamespacedCloudProfiles.

It primarily validates if the referenced parent CloudProfile exists in the system. In addition, the admission controller ensures that the NamespacedCloudProfile only configures new machine types, and does not overwrite those from the parent CloudProfile.

2 - Architecture

Official Definition - What is Kubernetes?

“Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.”

Introduction - Basic Principle

The foundation of the Gardener (providing Kubernetes Clusters as a Service) is Kubernetes itself, because Kubernetes is the go-to solution to manage software in the Cloud, even when it’s Kubernetes itself (see also OpenStack which is provisioned more and more on top of Kubernetes as well).

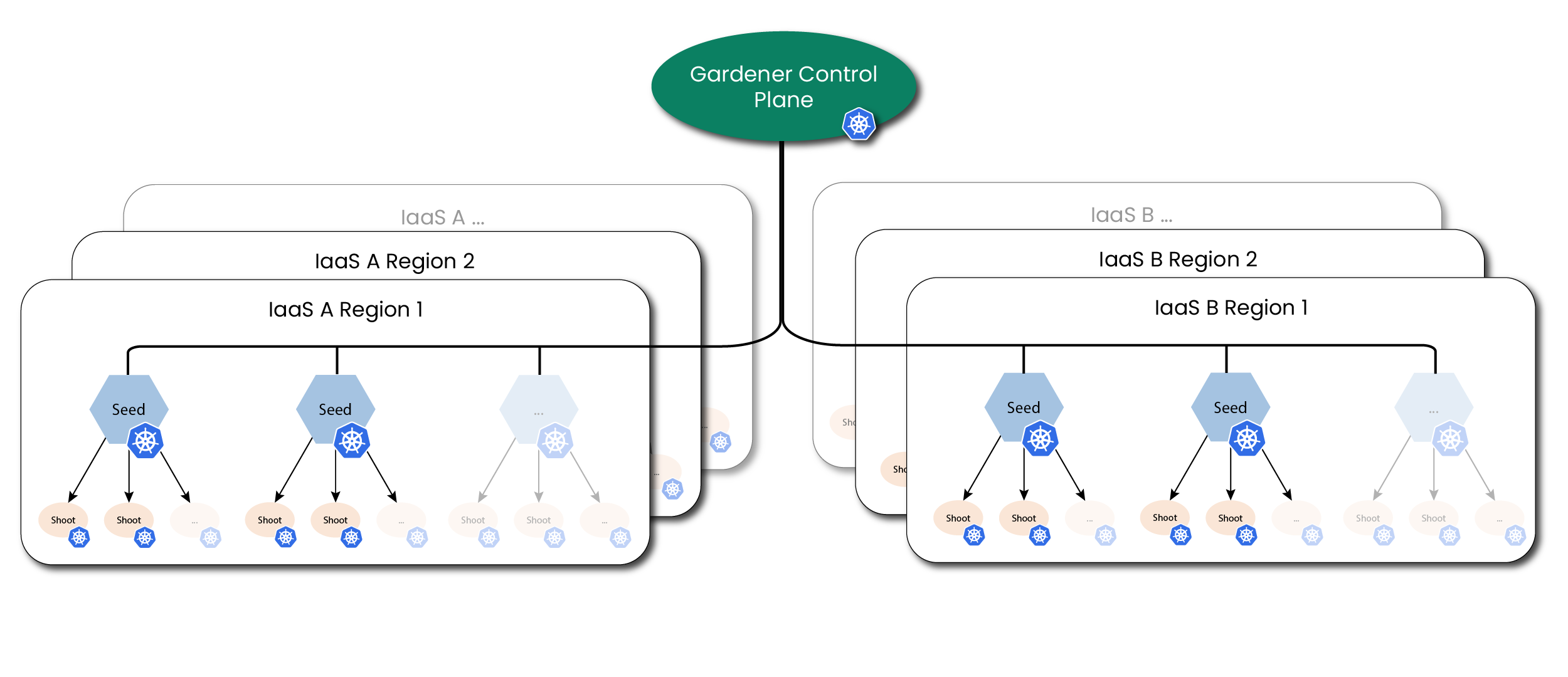

While self-hosting, meaning to run Kubernetes components inside Kubernetes, is a popular topic in the community, we apply a special pattern catering to the needs of our cloud platform to provision hundreds or even thousands of clusters. We take a so-called “seed” cluster and seed the control plane (such as the API server, scheduler, controllers, etcd persistence and others) of an end-user cluster, which we call “shoot” cluster, as pods into the “seed” cluster. That means that one “seed” cluster, of which we will have one per IaaS and region, hosts the control planes of multiple “shoot” clusters. That allows us to avoid dedicated hardware/virtual machines for the “shoot” cluster control planes. We simply put the control plane into pods/containers and since the “seed” cluster watches them, they can be deployed with a replica count of 1 and only need to be scaled out when the control plane gets under pressure, but no longer for HA reasons. At the same time, the deployments get simpler (standard Kubernetes deployment) and easier to update (standard Kubernetes rolling update). The actual “shoot” cluster consists only of the worker nodes (no control plane) and therefore the users may get full administrative access to their clusters.

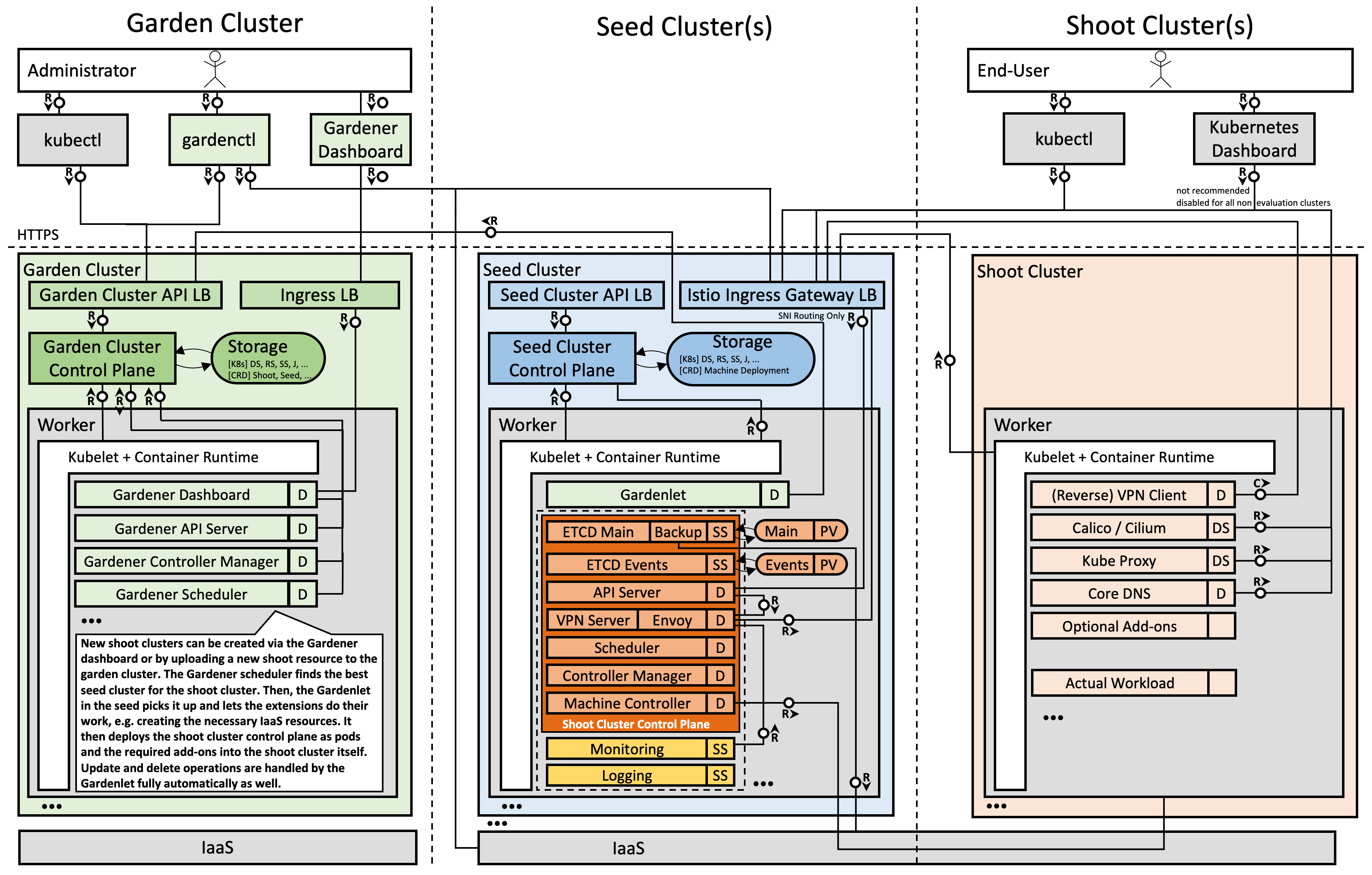

Setting The Scene - Components and Procedure

We provide a central operator UI, which we call the “Gardener Dashboard”. It talks to a dedicated cluster, which we call the “Garden” cluster, and uses custom resources managed by an aggregated API server (one of the general extension concepts of Kubernetes) to represent “shoot” clusters. In this “Garden” cluster runs the “Gardener”, which is basically a Kubernetes controller that watches the custom resources and acts upon them, i.e. creates, updates/modifies, or deletes “shoot” clusters. The creation follows basically these steps:

- Create a namespace in the “seed” cluster for the “shoot” cluster, which will host the “shoot” cluster control plane.

- Generate secrets and credentials, which the worker nodes will need to talk to the control plane.

- Create the infrastructure (using Terraform), which basically consists out of the network setup.

- Deploy the “shoot” cluster control plane into the “shoot” namespace in the “seed” cluster, containing the “machine-controller-manager” pod.

- Create machine CRDs in the “seed” cluster, describing the configuration and the number of worker machines for the “shoot” (the machine-controller-manager watches the CRDs and creates virtual machines out of it).

- Wait for the “shoot” cluster API server to become responsive (pods will be scheduled, persistent volumes and load balancers are created by Kubernetes via the respective cloud provider).

- Finally, we deploy

kube-systemdaemons likekube-proxyand further add-ons like thedashboardinto the “shoot” cluster and the cluster becomes active.

Overview Architecture Diagram

Detailed Architecture Diagram

Note: The kubelet, as well as the pods inside the “shoot” cluster, talks through the front-door (load balancer IP; public Internet) to its “shoot” cluster API server running in the “seed” cluster. The reverse communication from the API server to the pod, service, and node networks happens through a VPN connection that we deploy into the “seed” and “shoot” clusters.

3 - Backup and Restore

Overview

Kubernetes uses etcd as the key-value store for its resource definitions. Gardener supports the backup and restore of etcd. It is the responsibility of the shoot owners to backup the workload data.

Gardener uses an etcd-backup-restore component to backup the etcd backing the Shoot cluster regularly and restore it in case of disaster. It is deployed as sidecar via etcd-druid. This doc mainly focuses on the backup and restore configuration used by Gardener when deploying these components. For more details on the design and internal implementation details, please refer to GEP-06 and the documentation on individual repositories.

Bucket Provisioning

Refer to the backup bucket extension document to find out details about configuring the backup bucket.

Backup Policy

etcd-backup-restore supports full snapshot and delta snapshots over full snapshot. In Gardener, this configuration is currently hard-coded to the following parameters:

- Full Snapshot schedule:

- Daily,

24hrinterval. - For each Shoot, the schedule time in a day is randomized based on the configured Shoot maintenance window.

- Daily,

- Delta Snapshot schedule:

- At

5mininterval. - If aggregated events size since last snapshot goes beyond

100Mib.

- At

- Backup History / Garbage backup deletion policy:

- Gardener configures backup restore to have

Exponentialgarbage collection policy. - As per policy, the following backups are retained:

- All full backups and delta backups for the previous hour.

- Latest full snapshot of each previous hour for the day.

- Latest full snapshot of each previous day for 7 days.

- Latest full snapshot of the previous 4 weeks.

- Garbage Collection is configured at

12hrinterval.

- Gardener configures backup restore to have

- Listing:

- Gardener doesn’t have any API to list out the backups.

- To find the backups list, an admin can checkout the

BackupEntryresource associated with the Shoot which holds the bucket and prefix details on the object store.

Restoration

The restoration process of etcd is automated through the etcd-backup-restore component from the latest snapshot. Gardener doesn’t support Point-In-Time-Recovery (PITR) of etcd. In case of an etcd disaster, the etcd is recovered from the latest backup automatically. For further details, please refer the Restoration topic. Post restoration of etcd, the Shoot reconciliation loop brings the cluster back to its previous state.

Again, the Shoot owner is responsible for maintaining the backup/restore of his workload. Gardener only takes care of the cluster’s etcd.

4 - Cluster API

Relation Between Gardener API and Cluster API (SIG Cluster Lifecycle)

The Cluster API (CAPI) and Gardener approach Kubernetes cluster management with different, albeit related, philosophies. In essence, Cluster API primarily harmonizes how to get to clusters, while Gardener goes a significant step further by also harmonizing the clusters themselves.

Gardener already provides a declarative, Kubernetes-native API to manage the full lifecycle of conformant Kubernetes clusters. This is a key distinction, as many other managed Kubernetes services are often exposed via proprietary REST APIs or imperative CLIs, whereas Gardener’s API is Kubernetes. Gardener is inherently multi-cloud and, by design, unifies far more aspects of a cluster’s make-up and operational behavior than Cluster API currently aims to.

Gardener’s Homogeneity vs. CAPI’s Provider Model

The Cluster API delegates the specifics of cluster creation and management to providers for infrastructures (e.g., AWS, Azure, GCP) and control planes, each with their own Custom Resource Definitions (CRDs). This means that different Cluster API providers can result in vastly different Kubernetes clusters in terms of their configuration (see, e.g., AKS vs GKE), available Kubernetes versions, operating systems, control plane setup, included add-ons, and operational behavior.

In stark contrast, Gardener provides homogeneous clusters. Regardless of the underlying infrastructure (AWS, Azure, GCP, OpenStack, etc.), you get clusters with the exact same Kubernetes version, operating system choices, control plane configuration (API server, kubelet, etc.), core add-ons (overlay network, DNS, metrics, logging, etc.), and consistent behavior for updates, auto-scaling, self-healing, credential rotation, you name it. This deep harmonization is a core design goal of Gardener, aimed at simplifying operations for teams developing and shipping software on Kubernetes across diverse environments. Gardener’s extensive coverage in the official Kubernetes conformance test grid underscores this commitment.

Historical Context and Evolution

Gardener has actively followed and contributed to Cluster API. Notably, Gardener heavily influenced the Machine API concepts within Cluster API through its Machine Controller Manager and was the first to adopt it. A joint KubeCon talk between SIG Cluster Lifecycle and Gardener further details this collaboration.

Cluster API has evolved significantly, especially from v1alpha1 to v1alpha2, which put a strong emphasis on a machine-based paradigm (The Cluster API Book - Updating a v1alpha1 provider to a v1alpha2 infrastructure provider). This “demoted” v1alpha1 providers to mere infrastructure providers, creating an “impedance mismatch” for fully managed services like GKE (which runs on Borg), Gardener (which uses a “kubeception” model — running control planes as pods in a seed cluster, a.k.a. “hosted control planes”) and others, making direct adoption difficult.

Challenges of Integrating Fully Managed Services with Cluster API

Despite the recent improvements, integrating fully managed Kubernetes services with Cluster API presents inherent challenges:

Opinionated Structure: Cluster API’s

Cluster/ControlPlane/MachineDeployment/MachinePoolstructure is opinionated and doesn’t always align naturally with how fully managed services architect their offerings. In particular, the separation betweenControlPlaneandInfraClusterobjects can be difficult to map cleanly. Fully managed services often abstract away the details of how control planes are provisioned and operated, making the distinction between these two components less meaningful or even redundant in those contexts.Provider-Specific CRDs: While CAPI provides a common

Clusterresource, the crucialControlPlaneand infrastructure-specific resources (e.g.,AWSManagedControlPlane,GCPManagedCluster,AzureManagedControlPlane) are entirely different for each provider. This means you cannot simply swapprovider: foowithprovider: barin a CAPI manifest and expect it to work. Users still need to understand the unique CRDs and capabilities of each CAPI provider.Limited Unification: CAPI unifies the act of requesting a cluster, but not the resulting cluster’s features, available Kubernetes versions, release cycles, supported operating systems, specific add-ons, or nuanced operational behaviors like credential rotation procedures and their side effects.

Experimental or Limited Support: For some managed services, CAPI provider support is still experimental or limited. For example:

- The CAPI provider for AKS notes that the

AzureManagedClusterTemplateis “basically a no-op,” with most configuration inAzureManagedControlPlaneTemplate(CAPZ Docs). - The CAPI provider for GKE states: “Provisioning managed clusters (GKE) is an experimental feature…” and is disabled by default (CAPG Docs).

- The CAPI provider for AKS notes that the

Platform Governance and Lifecycle Management: While Cluster API standardizes the request for a cluster, it doesn’t inherently provide the comprehensive governance and lifecycle management features that platform operators require, especially in enterprise settings. A platform solution needs to go beyond provisioning and offer robust mechanisms for:

- Defining and Enforcing Policies: Platform administrators need to control which Kubernetes versions, machine images, operating systems, and add-on versions are available to end-users. This includes classifying them (e.g., “preview,” “supported,” “deprecated”) and setting clear expiration dates.

- Communicating Lifecycle Changes: End-users must be informed about upcoming deprecations or required upgrades to plan their own application maintenance and avoid disruptions.

- Automated Maintenance and Compliance: The platform should facilitate or even automate updates and compliance checks based on these defined policies.

Gardener is designed with these enterprise platform needs at its core. It provides a rich API and control plane that allows administrators to manage the entire lifecycle of these components. For instance, Gardener enables platform teams to:

- Clearly mark Kubernetes versions or machine images as “supported,” “preview,” or “deprecated,” complete with expiration dates.

- Offer end-users the ability to opt-into automated maintenance schedules or provide them with the necessary information to build their own automated update logic for their workloads before underlying components are deprecated.

This level of granular control, policy enforcement, and lifecycle communication is crucial for maintaining stability, security, and operational efficiency across a large number of clusters. For organizations adopting Cluster API directly, implementing such a comprehensive governance layer becomes an additional, significant development and operational burden that they must address on top of CAPI’s provisioning capabilities.

Gardener’s Perspective on a Cluster API Provider

Given that Gardener already offers a robust, Kubernetes-native API for homogenous multi-cloud cluster management, a Cluster API provider for Gardener could still act as a bridge for users transitioning from CAPI to Gardener, enabling them to gradually adopt Gardener’s capabilities while maintaining compatibility with existing CAPI workflows.

- For users exclusively using Gardener: Wrapping Gardener’s comprehensive API within CAPI’s structure offers limited additional value other than maintaining compatibility with existing CAPI workflows, as Gardener’s native API is already declarative and Kubernetes-centric, meaning any tool or language binding that can handle Kubernetes CRDs will work with Gardener.

- For users managing diverse clusters (e.g., ACK, AKS, EKS, GKE, Gardener,

kubeadm) via CAPI: Cluster API offers a unified interface for initiating cluster provisioning across diverse managed services, but it does not harmonize the differences in provider-specific CRDs, capabilities, or operational behaviors. This can limit its ability to leverage the unique strengths of each service. However, we understand that a CAPI provider for Gardener could open doors for users familiar with CAPI’s workflows. It would allow them to explore Gardener’s enterprise-grade, homogeneous cluster management while maintaining compatibility with existing CAPI workflows, fostering a smoother transition to Gardener.

The mapping from the Gardener API to a potential Cluster API provider for Gardener would be mostly syntactic. However, the fundamental value proposition of Gardener — providing homogeneous Kubernetes clusters across all supported infrastructures — extends beyond what Cluster API currently aims to achieve.

We follow Cluster API’s development with great interest and remain active members of the community.

5 - etcd

etcd - Key-Value Store for Kubernetes

etcd is a strongly consistent key-value store and the most prevalent choice for the Kubernetes

persistence layer. All API cluster objects like Pods, Deployments, Secrets, etc., are stored in etcd, which

makes it an essential part of a Kubernetes control plane.

Garden or Shoot Cluster Persistence

Each garden or shoot cluster gets its very own persistence for the control plane.

It runs in the shoot namespace on the respective seed cluster (or in the garden namespace in the garden cluster, respectively).

Concretely, there are two etcd instances per shoot cluster, which the kube-apiserver is configured to use in the following way:

etcd-main

A store that contains all “cluster critical” or “long-term” objects. These object kinds are typically considered for a backup to prevent any data loss.

etcd-events

A store that contains all Event objects (events.k8s.io) of a cluster.

Events usually have a short retention period and occur frequently, but are not essential for a disaster recovery.

The setup above prevents both, the critical etcd-main is not flooded by Kubernetes Events, as well as backup space is not occupied by non-critical data.

This separation saves time and resources.

etcd Operator

Configuring, maintaining, and health-checking etcd is outsourced to a dedicated operator called etcd Druid.

When a gardenlet reconciles a Shoot resource or a gardener-operator reconciles a Garden resource, they manage an Etcd resource in the seed or garden cluster, containing necessary information (backup information, defragmentation schedule, resources, etc.).

etcd-druid needs to manage the lifecycle of the desired etcd instance (today main or events).

Likewise, when the Shoot or Garden is deleted, gardenlet or gardener-operator deletes the Etcd resources and etcd Druid takes care of cleaning up all related objects, e.g. the backing StatefulSets.

Backup

If Seeds specify backups for etcd (example), then Gardener and the respective provider extensions are responsible for creating a bucket on the cloud provider’s side (modelled through a BackupBucket resource).

The bucket stores backups of Shoots scheduled on that Seed.

Furthermore, Gardener creates a BackupEntry, which subdivides the bucket and thus makes it possible to store backups of multiple shoot clusters.

How long backups are stored in the bucket after a shoot has been deleted depends on the configured retention period in the Seed resource.

Please see this example configuration for more information.

For Gardens specifying backups for etcd (example), the bucket must be pre-created externally and provided via the Garden specification.

Both etcd instances are configured to run with a special backup-restore sidecar. It takes care about regularly backing up etcd data and restoring it in case of data loss (in the main etcd only). The sidecar also performs defragmentation and other house-keeping tasks. More information can be found in the component’s GitHub repository.

Housekeeping

etcd maintenance tasks must be performed from time to time in order to re-gain database storage and to ensure the system’s reliability. The backup-restore sidecar takes care about this job as well.

For both Shoots and Gardens, a random time within the shoot’s maintenance time is chosen for scheduling these tasks.

6 - gardenadm

Caution

This tool is currently under development and considered highly experimental. Do not use it in production environments. Read more about it in GEP-28.

Overview

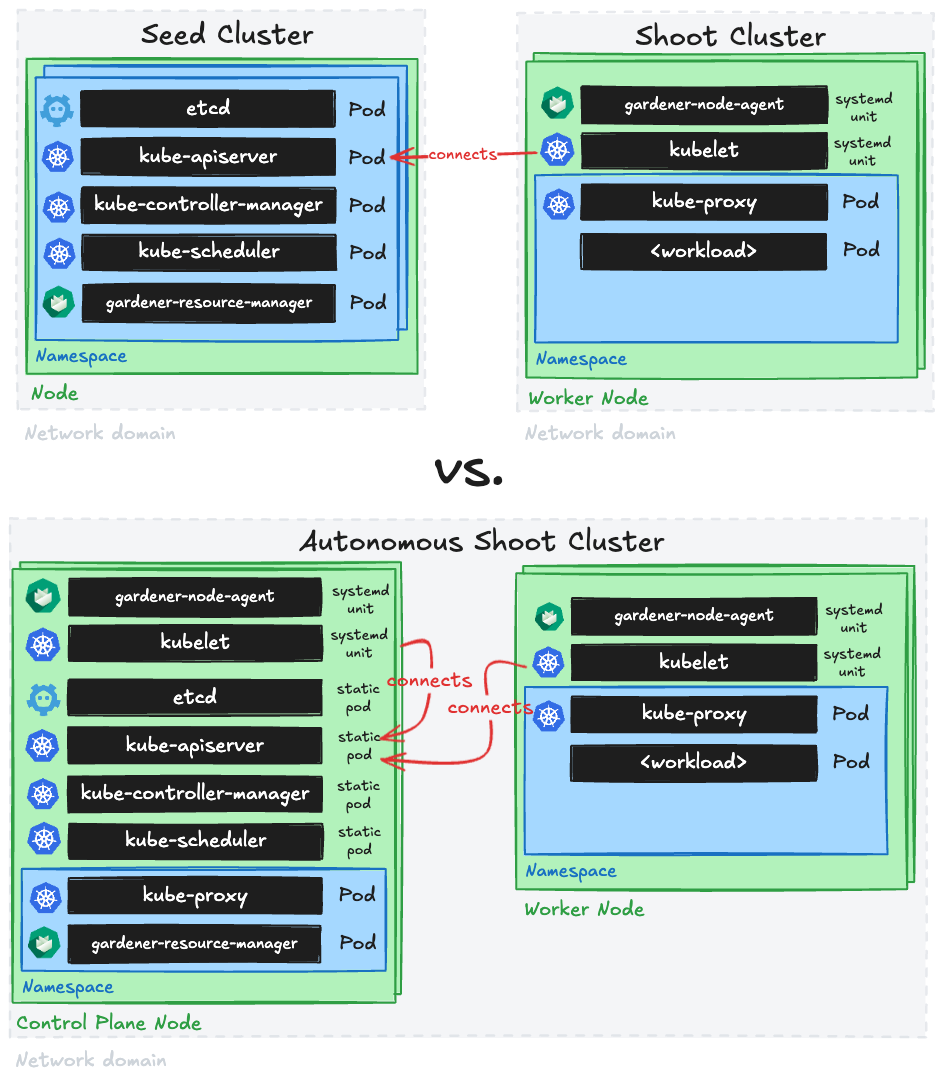

gardenadm is a command line tool for bootstrapping Kubernetes clusters called “Autonomous Shoot Clusters”.

In contrast to usual Gardener-managed clusters (called Shoot Clusters), the Kubernetes control plane components run as static pods on a dedicated control plane worker pool in the cluster itself (instead of running them as pods on another Kubernetes cluster (called Seed Cluster)).

Autonomous shoot clusters can be bootstrapped without an existing Gardener installation.

Hence, they can host a Gardener installation itself and/or serve as the initial seed cluster of a Gardener installation.

Furthermore, autonomous shoot clusters can only be created by the gardenadm tool and not via an API of an existing Gardener system.

Such autonomous shoot clusters are meant to operate autonomously, but not to exist completely independently of Gardener.

Hence, after their initial creation, they are connected to an existing Gardener system such that the established cluster management functionality via the Shoot API can be applied.

I.e., day-2 operations for autonomous shoot clusters are only supported after connecting them to a Gardener system.

This Gardener system could also run in an autonomous shoot cluster itself (in this case, you would first need to deploy it before being able to connect the autonomous shoot cluster to it).

Furthermore, autonomous shoot clusters are not considered a replacement or alternative for regular shoot clusters. They should be only used for special use-cases or requirements as creating them is more complex and as their costs will most likely be higher (since control plane nodes are typically not fully utilized in such architecture). In this light, a high cluster creation/deletion churn rate is neither expected nor in scope.

Getting Started Locally

This document walks you through deploying Autonomous Shoot Clusters using gardenadm on your local machine.

This setup can be used for trying out and developing gardenadm locally without additional infrastructure.

The setup is also used for running e2e tests for gardenadm in CI.

Scenarios

We distinguish between two different scenarios for bootstrapping autonomous shoot clusters:

- High Touch, meaning that there is no programmable infrastructure available. We consider this the “bare metal” or “edge” use-case, where at first machines must be (often manually) prepared by human operators. In this case, network setup (e.g., VPCs, subnets, route tables, etc.) and machine management are out of scope.

- Medium Touch, meaning that there is programmable infrastructure available where we can leverage provider extensions and

machine-controller-managerin order to manage the network setup and the machines.

The general procedure of bootstrapping an autonomous shoot cluster is similar in both scenarios.

7 - Gardener Admission Controller

Overview

While the Gardener API server works with admission plugins to validate and mutate resources belonging to Gardener related API groups, e.g. core.gardener.cloud, the same is needed for resources belonging to non-Gardener API groups as well, e.g. secrets in the core API group.

Therefore, the Gardener Admission Controller runs a http(s) server with the following handlers which serve as validating/mutating endpoints for admission webhooks.

It is also used to serve http(s) handlers for authorization webhooks.

Admission Webhook Handlers

This section describes the admission webhook handlers that are currently served.

Authentication Configuration Validator

In Shoots, it is possible to reference structured authentication configurations.

This validation handler validates that such configurations are valid.

Authorization Configuration Validator

In Shoots, it is possible to reference structured authorization configurations.

This validation handler validates that such configurations are valid.

Admission Plugin Secret Validator

In Shoots, AdmissionPlugin can have reference to other files. This validation handler validates the referred admission plugin secret and ensures that the secret always contains the required data kubeconfig.

Kubeconfig Secret Validator

Malicious Kubeconfigs applied by end users may cause a leakage of sensitive data.

This handler checks if the incoming request contains a Kubernetes secret with a .data.kubeconfig field and denies the request if the Kubeconfig structure violates Gardener’s security standards.

Namespace Validator

Namespaces are the backing entities of Gardener projects in which shoot cluster objects reside.

This validation handler protects active namespaces against premature deletion requests.

Therefore, it denies deletion requests if a namespace still contains shoot clusters or if it belongs to a non-deleting Gardener project (without .metadata.deletionTimestamp).

Resource Size Validator

Since users directly apply Kubernetes native objects to the Garden cluster, it also involves the risk of being vulnerable to DoS attacks because these resources are continuously watched and read by controllers. One example is the creation of shoot resources with large annotation values (up to 256 kB per value), which can cause severe out-of-memory issues for the gardenlet component. Vertical autoscaling can help to mitigate such situations, but we cannot expect to scale infinitely, and thus need means to block the attack itself.

The Resource Size Validator checks arbitrary incoming admission requests against a configured maximum size for the resource’s group-version-kind combination. It denies the request if the object exceeds the quota.

Note

The contents of

statussubresources andmetadata.managedFieldsare not taken into account for the resource size calculation.

Example for Gardener Admission Controller configuration:

server:

resourceAdmissionConfiguration:

limits:

- apiGroups: ["core.gardener.cloud"]

apiVersions: ["*"]

resources: ["shoots"]

size: 100k

- apiGroups: [""]

apiVersions: ["v1"]

resources: ["secrets"]

size: 100k

unrestrictedSubjects:

- kind: Group

name: gardener.cloud:system:seeds

apiGroup: rbac.authorization.k8s.io

# - kind: User

# name: admin

# apiGroup: rbac.authorization.k8s.io

# - kind: ServiceAccount

# name: "*"

# namespace: garden

# apiGroup: ""

operationMode: block #log

With the configuration above, the Resource Size Validator denies requests for shoots with Gardener’s core API group which exceed a size of 100 kB. The same is done for Kubernetes secrets.

As this feature is meant to protect the system from malicious requests sent by users, it is recommended to exclude trusted groups, users or service accounts from the size restriction via resourceAdmissionConfiguration.unrestrictedSubjects.

For example, the backing user for the gardenlet should always be capable of changing the shoot resource instead of being blocked due to size restrictions.

This is because the gardenlet itself occasionally changes the shoot specification, labels or annotations, and might violate the quota if the existing resource is already close to the quota boundary.

Also, operators are supposed to be trusted users and subjecting them to a size limitation can inhibit important operational tasks.

Wildcard ("*") in subject name is supported.

Size limitations depend on the individual Gardener setup and choosing the wrong values can affect the availability of your Gardener service.

resourceAdmissionConfiguration.operationMode allows to control if a violating request is actually denied (default) or only logged.

It’s recommended to start with log, check the logs for exceeding requests, adjust the limits if necessary and finally switch to block.

SeedRestriction

Please refer to Scoped API Access for Gardenlets for more information.

UpdateRestriction

Gardener stores public data regarding shoot clusters, i.e. certificate authority bundles, OIDC discovery documents, etc.

This information can be used by third parties so that they establish trust to specific authorities, making the integrity of the stored data extremely important in a way that any unwanted modifications can be considered a security risk.

This handler protects secrets and configmaps against tampering.

It denies CREATE, UPDATE and DELETE requests if the resource is labeled with gardener.cloud/update-restriction=true and the request is not made by a gardenlet.

In addition, the following service accounts are allowed to perform certain operations:

system:serviceaccount:kube-system:generic-garbage-collectoris allowed toDELETErestricted resources.system:serviceaccount:kube-system:gardener-internalis allowed toUPDATErestricted resources.

Authorization Webhook Handlers

This section describes the authorization webhook handlers that are currently served.

SeedAuthorization

Please refer to Scoped API Access for Gardenlets for more information.

8 - Gardener API Server

Overview

The Gardener API server is a Kubernetes-native extension based on its aggregation layer.

It is registered via an APIService object and designed to run inside a Kubernetes cluster whose API it wants to extend.

After registration, it exposes the following resources:

CloudProfiles

CloudProfiles are resources that describe a specific environment of an underlying infrastructure provider, e.g. AWS, Azure, etc.

Each shoot has to reference a CloudProfile to declare the environment it should be created in.

In a CloudProfile, the gardener operator specifies certain constraints like available machine types, regions, which Kubernetes versions they want to offer, etc.

End-users can read CloudProfiles to see these values, but only operators can change the content or create/delete them.

When a shoot is created or updated, then an admission plugin checks that only allowed values are used via the referenced CloudProfile.

Additionally, a CloudProfile may contain a providerConfig, which is a special configuration dedicated for the infrastructure provider.

Gardener does not evaluate or understand this config, but extension controllers might need it for declaration of provider-specific constraints, or global settings.

Please see this example manifest and consult the documentation of your provider extension controller to get information about its providerConfig.

NamespacedCloudProfiles

In addition to CloudProfiles, NamespacedCloudProfiles exist to enable project-level customizations of CloudProfiles.

Project administrators can create and manage cloud profiles with overrides or extensions specific to their project.

Please see this example manifest and this usage documentation for further information.

InternalSecrets

End-users can read and/or write Secrets in their project namespaces in the garden cluster. This prevents Gardener components from storing such “Gardener-internal” secrets in the respective project namespace.

InternalSecrets are resources that contain shoot or project-related secrets that are “Gardener-internal”, i.e., secrets used and managed by the system that end-users don’t have access to.

InternalSecrets are defined like plain Kubernetes Secrets, behave exactly like them, and can be used in the same manners. The only difference is, that the InternalSecret resource is a dedicated API resource (exposed by gardener-apiserver).

This allows separating access to “normal” secrets and internal secrets by the usual RBAC means.

Gardener uses an InternalSecret per Shoot for syncing the client CA to the project namespace in the garden cluster (named <shoot-name>.ca-client). The shoots/adminkubeconfig subresource signs short-lived client certificates by retrieving the CA from the InternalSecret.

Operators should configure gardener-apiserver to encrypt the internalsecrets.core.gardener.cloud resource in etcd.

Please see this example manifest.

Seeds

Seeds are resources that represent seed clusters.

Gardener does not care about how a seed cluster got created - the only requirement is that it is of at least Kubernetes v1.27 and passes the Kubernetes conformance tests.

The Gardener operator has to either deploy the gardenlet into the cluster they want to use as seed (recommended, then the gardenlet will create the Seed object itself after bootstrapping) or provide the kubeconfig to the cluster inside a secret (that is referenced by the Seed resource) and create the Seed resource themselves.

Please see this, this, and optionally this example manifests.

Shoot Quotas

To allow end-users not having their dedicated infrastructure account to try out Gardener, the operator can register an account owned by them that they allow to be used for trial clusters. Trial clusters can be put under quota so that they don’t consume too many resources (resulting in costs) and that one user cannot consume all resources on their own. These clusters are automatically terminated after a specified time, but end-users may extend the lifetime manually if needed.

Please see this example manifest.

Projects

The first thing before creating a shoot cluster is to create a Project.

A project is used to group multiple shoot clusters together.

End-users can invite colleagues to the project to enable collaboration, and they can either make them admin or viewer.

After an end-user has created a project, they will get a dedicated namespace in the garden cluster for all their shoots.

Please see this example manifest.

SecretBindings

Now that the end-user has a namespace the next step is registering their infrastructure provider account.

Please see this example manifest and consult the documentation of the extension controller for the respective infrastructure provider to get information about which keys are required in this secret.

After the secret has been created, the end-user has to create a special SecretBinding resource that binds this secret.

Later, when creating shoot clusters, they will reference such binding.

Please see this example manifest.

Shoots

Shoot cluster contain various settings that influence how end-user Kubernetes clusters will look like in the end. As Gardener heavily relies on extension controllers for operating system configuration, networking, and infrastructure specifics, the end-user has the possibility (and responsibility) to provide these provider-specific configurations as well. Such configurations are not evaluated by Gardener (because it doesn’t know/understand them), but they are only transported to the respective extension controller.

⚠️ This means that any configuration issues/mistake on the end-user side that relates to a provider-specific flag or setting cannot be caught during the update request itself but only later during the reconciliation (unless a validator webhook has been registered in the garden cluster by an operator).

Please see this example manifest and consult the documentation of the provider extension controller to get information about its spec.provider.controlPlaneConfig, .spec.provider.infrastructureConfig, and .spec.provider.workers[].providerConfig.

(Cluster)OpenIDConnectPresets

Please see this separate documentation file.

Overview Data Model

9 - Gardener Controller Manager

Overview

The gardener-controller-manager (often referred to as “GCM”) is a component that runs next to the Gardener API server, similar to the Kubernetes Controller Manager.

It runs several controllers that do not require talking to any seed or shoot cluster.

Also, as of today, it exposes an HTTP server that is serving several health check endpoints and metrics.

This document explains the various functionalities of the gardener-controller-manager and their purpose.

Controllers

Bastion Controller

Bastion resources have a limited lifetime which can be extended up to a certain amount by performing a heartbeat on them.

The Bastion controller is responsible for deleting expired or rotten Bastions.

- “expired” means a

Bastionhas exceeded itsstatus.expirationTimestamp. - “rotten” means a

Bastionis older than the configuredmaxLifetime.

The maxLifetime defaults to 24 hours and is an option in the BastionControllerConfiguration which is part of gardener-controller-managers ControllerManagerControllerConfiguration, see the example config file for details.

The controller also deletes Bastions in case the referenced Shoot:

- no longer exists

- is marked for deletion (i.e., have a non-

nil.metadata.deletionTimestamp) - was migrated to another seed (i.e.,

Shoot.spec.seedNameis different thanBastion.spec.seedName).

The deletion of Bastions triggers the gardenlet to perform the necessary cleanups in the Seed cluster, so some time can pass between deletion and the Bastion actually disappearing.

Clients like gardenctl are advised to not re-use Bastions whose deletion timestamp has been set already.

Refer to GEP-15 for more information on the lifecycle of

Bastion resources.

CertificateSigningRequest Controller

After the gardenlet gets deployed on the Seed cluster, it needs to establish itself as a trusted party to communicate with the Gardener API server. It runs through a bootstrap flow similar to the kubelet bootstrap process.

On startup, the gardenlet uses a kubeconfig with a bootstrap token which authenticates it as being part of the system:bootstrappers group. This kubeconfig is used to create a CertificateSigningRequest (CSR) against the Gardener API server.

The controller in gardener-controller-manager checks whether the CertificateSigningRequest has the expected organization, common name and usages which the gardenlet would request.

It only auto-approves the CSR if the client making the request is allowed to “create” the

certificatesigningrequests/seedclient subresource. Clients with the system:bootstrappers group are bound to the gardener.cloud:system:seed-bootstrapper ClusterRole, hence, they have such privileges. As the bootstrap kubeconfig for the gardenlet contains a bootstrap token which is authenticated as being part of the systems:bootstrappers group, its created CSR gets auto-approved.

CloudProfile Controller

CloudProfiles are essential when it comes to reconciling Shoots since they contain constraints (like valid machine types, Kubernetes versions, or machine images) and sometimes also some global configuration for the respective environment (typically via provider-specific configuration in .spec.providerConfig).

Consequently, to ensure that CloudProfiles in-use are always present in the system until the last referring Shoot or NamespacedCloudProfile gets deleted, the controller adds a finalizer which is only released when there is no Shoot or NamespacedCloudProfile referencing the CloudProfile anymore.

NamespacedCloudProfile Controller

NamespacedCloudProfiles provide a project-scoped extension to CloudProfiles, allowing for adjustments of a parent CloudProfile (e.g. by overriding expiration dates of Kubernetes versions or machine images). This allows for modifications without global project visibility. Like CloudProfiles do in their spec, NamespacedCloudProfiles also expose the resulting Shoot constraints as a CloudProfileSpec in their status.

The controller ensures that NamespacedCloudProfiles in-use remain present in the system until the last referring Shoot is deleted by adding a finalizer that is only released when there is no Shoot referencing the NamespacedCloudProfile anymore.

ControllerDeployment Controller

Extensions are registered in the garden cluster via ControllerRegistration and deployment of respective extensions are specified via ControllerDeployment. For more info refer to Registering Extension Controllers.

This controller ensures that ControllerDeployment in-use always exists until the last ControllerRegistration referencing them gets deleted. The controller adds a finalizer which is only released when there is no ControllerRegistration referencing the ControllerDeployment anymore.

ControllerRegistration Controller

The ControllerRegistration controller makes sure that the required Gardener Extensions specified by the ControllerRegistration resources are present in the seed clusters.

It also takes care of the creation and deletion of ControllerInstallation objects for a given seed cluster.

The controller has three reconciliation loops.

“Main” Reconciler

This reconciliation loop watches the Seed objects and determines which ControllerRegistrations are required for them and reconciles the corresponding ControllerInstallation resources to reach the determined state.

To begin with, it computes the kind/type combinations of extensions required for the seed.

For this, the controller examines a live list of ControllerRegistrations, ControllerInstallations, BackupBuckets, BackupEntrys, Shoots, and Secrets from the garden cluster.

For example, it examines the shoots running on the seed and deducts the kind/type, like Infrastructure/gcp.

The seed (seed.spec.provider.type) and DNS (seed.spec.dns.provider.type) provider types are considered when calculating the list of required ControllerRegistrations, as well.

It also decides whether they should always be deployed based on the .spec.deployment.policy.

For the configuration options, please see this section.

Based on these required combinations, each of them are mapped to ControllerRegistration objects and then to their corresponding ControllerInstallation objects (if existing).

The controller then creates or updates the required ControllerInstallation objects for the given seed.

It also deletes every existing ControllerInstallation whose referenced ControllerRegistration is not part of the required list.

For example, if the shoots in the seed are no longer using the DNS provider aws-route53, then the controller proceeds to delete the respective ControllerInstallation object.

"ControllerRegistration Finalizer" Reconciler

This reconciliation loop watches the ControllerRegistration resource and adds finalizers to it when they are created.

In case a deletion request comes in for the resource, i.e., if a .metadata.deletionTimestamp is set, it actively scans for a ControllerInstallation resource using this ControllerRegistration, and decides whether the deletion can be allowed.

In case no related ControllerInstallation is present, it removes the finalizer and marks it for deletion.

"Seed Finalizer" Reconciler

This loop also watches the Seed object and adds finalizers to it at creation.

If a .metadata.deletionTimestamp is set for the seed, then the controller checks for existing ControllerInstallation objects which reference this seed.

If no such objects exist, then it removes the finalizer and allows the deletion.

“Extension ClusterRole” Reconciler

This reconciler watches two resources in the garden cluster:

ClusterRoles labelled withauthorization.gardener.cloud/custom-extensions-permissions=trueServiceAccounts in seed namespaces matching the selector provided via theauthorization.gardener.cloud/extensions-serviceaccount-selectorannotation of suchClusterRoles.

Its core task is to maintain a ClusterRoleBinding resource referencing the respective ClusterRole.

This gets bound to all ServiceAccounts in seed namespaces whose labels match the selector provided via the authorization.gardener.cloud/extensions-serviceaccount-selector annotation of such ClusterRoles.

You can read more about the purpose of this reconciler in this document.

CredentialsBinding Controller

CredentialsBindings reference Secrets, WorkloadIdentitys and Quotas and are themselves referenced by Shoots.

The controller adds finalizers to the referenced objects to ensure they don’t get deleted while still being referenced.

Similarly, to ensure that CredentialsBindings in-use are always present in the system until the last referring Shoot gets deleted, the controller adds a finalizer which is only released when there is no Shoot referencing the CredentialsBinding anymore.

Referenced Secrets and WorkloadIdentitys will also be labeled with provider.shoot.gardener.cloud/<type>=true, where <type> is the value of the .provider.type of the CredentialsBinding.

Also, all referenced Secrets and WorkloadIdentitys, as well as Quotas, will be labeled with reference.gardener.cloud/credentialsbinding=true to allow for easily filtering for objects referenced by CredentialsBindings.

Event Controller

With the Gardener Event Controller, you can prolong the lifespan of events related to Shoot clusters. This is an optional controller which will become active once you provide the below mentioned configuration.

All events in K8s are deleted after a configurable time-to-live (controlled via a kube-apiserver argument called --event-ttl (defaulting to 1 hour)).

The need to prolong the time-to-live for Shoot cluster events frequently arises when debugging customer issues on live systems.

This controller leaves events involving Shoots untouched, while deleting all other events after a configured time.

In order to activate it, provide the following configuration:

concurrentSyncs: The amount of goroutines scheduled for reconciling events.ttlNonShootEvents: When an event reaches this time-to-live it gets deleted unless it is a Shoot-related event (defaults to1h, equivalent to theevent-ttldefault).

⚠️ In addition, you should also configure the

--event-ttlfor the kube-apiserver to define an upper-limit of how long Shoot-related events should be stored. The--event-ttlshould be larger than thettlNonShootEventsor this controller will have no effect.

ExposureClass Controller

ExposureClass abstracts the ability to expose a Shoot clusters control plane in certain network environments (e.g. corporate networks, DMZ, internet) on all Seeds or a subset of the Seeds. For more information, see ExposureClasses.

Consequently, to ensure that ExposureClasses in-use are always present in the system until the last referring Shoot gets deleted, the controller adds a finalizer which is only released when there is no Shoot referencing the ExposureClass anymore.

ManagedSeedSet Controller

ManagedSeedSet objects maintain a stable set of replicas of ManagedSeeds, i.e. they guarantee the availability of a specified number of identical ManagedSeeds on an equal number of identical Shoots.

The ManagedSeedSet controller creates and deletes ManagedSeeds and Shoots in response to changes to the replicas and selector fields. For more information, refer to the ManagedSeedSet proposal document.

- The reconciler first gets all the replicas of the given

ManagedSeedSetin theManagedSeedSet’s namespace and with the matching selector. Each replica is a struct that contains aManagedSeed, its correspondingSeedandShootobjects. - Then the pending replica is retrieved, if it exists.

- Next it determines the ready, postponed, and deletable replicas.

- A replica is considered

readywhen aSeedowned by aManagedSeedhas been registered either directly or by deployinggardenletinto aShoot, theSeedisReadyand theShoot’s status isHealthy. - If a replica is not ready and it is not pending, i.e. it is not specified in the

ManagedSeed’sstatus.pendingReplicafield, then it is added to thepostponedreplicas. - A replica is deletable if it has no scheduled

Shoots and the replica’sShootandManagedSeeddo not have theseedmanagement.gardener.cloud/protect-from-deletionannotation.

- A replica is considered

- Finally, it checks the actual and target replica counts. If the actual count is less than the target count, the controller scales up the replicas by creating new replicas to match the desired target count. If the actual count is more than the target, the controller deletes replicas to match the desired count. Before scale-out or scale-in, the controller first reconciles the pending replica (there can always only be one) and makes sure the replica is ready before moving on to the next one.

Scale-out(actual count < target count)- During the scale-out phase, the controller first creates the

Shootobject from theManagedSeedSet’sspec.shootTemplatefield and adds the replica to thestatus.pendingReplicaof theManagedSeedSet. - For the subsequent reconciliation steps, the controller makes sure that the pending replica is ready before proceeding to the next replica. Once the

Shootis created successfully, theManagedSeedobject is created from theManagedSeedSet’sspec.template. TheManagedSeedobject is reconciled by theManagedSeedcontroller and aSeedobject is created for the replica. Once the replica’sSeedbecomes ready and theShootbecomes healthy, the replica also becomes ready.

- During the scale-out phase, the controller first creates the

Scale-in(actual count > target count)- During the scale-in phase, the controller first determines the replica that can be deleted. From the deletable replicas, it chooses the one with the lowest priority and deletes it. Priority is determined in the following order:

- First, compare replica statuses. Replicas with “less advanced” status are considered lower priority. For example, a replica with

StatusShootReconcilingstatus has a lower value than a replica withStatusShootReconciledstatus. Hence, in this case, a replica with aStatusShootReconcilingstatus will have lower priority and will be considered for deletion. - Then, the replicas are compared with the readiness of their

Seeds. Replicas with non-readySeeds are considered lower priority. - Then, the replicas are compared with the health statuses of their

Shoots. Replicas with “worse” statuses are considered lower priority. - Finally, the replica ordinals are compared. Replicas with lower ordinals are considered lower priority.

- First, compare replica statuses. Replicas with “less advanced” status are considered lower priority. For example, a replica with

- During the scale-in phase, the controller first determines the replica that can be deleted. From the deletable replicas, it chooses the one with the lowest priority and deletes it. Priority is determined in the following order:

Quota Controller

Quota object limits the resources consumed by shoot clusters either per provider secret or per project/namespace.

Consequently, to ensure that Quotas in-use are always present in the system until the last SecretBinding or CredentialsBinding that references them gets deleted, the controller adds a finalizer which is only released when there is no SecretBinding or CredentialsBinding referencing the Quota anymore.

Project Controller

There are multiple controllers responsible for different aspects of Project objects.

Please also refer to the Project documentation.

“Main” Reconciler

This reconciler manages a dedicated Namespace for each Project.

The namespace name can either be specified explicitly in .spec.namespace (must be prefixed with garden-) or it will be determined by the controller.

If .spec.namespace is set, it tries to create it. If it already exists, it tries to adopt it.

This will only succeed if the Namespace was previously labeled with gardener.cloud/role=project and project.gardener.cloud/name=<project-name>.

This is to prevent end-users from being able to adopt arbitrary namespaces and escalate their privileges, e.g. the kube-system namespace.

After the namespace was created/adopted, the controller creates several ClusterRoles and ClusterRoleBindings that allow the project members to access related resources based on their roles.

These RBAC resources are prefixed with gardener.cloud:system:project{-member,-viewer}:<project-name>.

Gardener administrators and extension developers can define their own roles. For more information, see Extending Project Roles for more information.

In addition, operators can configure the Project controller to maintain a default ResourceQuota for project namespaces.

Quotas can especially limit the creation of user facing resources, e.g. Shoots, SecretBindings, CredentialsBinding, Secrets and thus protect the garden cluster from massive resource exhaustion but also enable operators to align quotas with respective enterprise policies.

⚠️ Gardener itself is not exempted from configured quotas. For example, Gardener creates

Secretsfor every shoot cluster in the project namespace and at the same time increases the available quota count. Please mind this additional resource consumption.

The controller configuration provides a template section controllers.project.quotas where such a ResourceQuota (see the example below) can be deposited.

controllers:

project:

quotas:

- config:

apiVersion: v1

kind: ResourceQuota

spec:

hard:

count/shoots.core.gardener.cloud: "100"

count/secretbindings.core.gardener.cloud: "10"

count/credentialsbindings.security.gardener.cloud: "10"

count/secrets: "800"

projectSelector: {}

The Project controller takes the specified config and creates a ResourceQuota with the name gardener in the project namespace.

If a ResourceQuota resource with the name gardener already exists, the controller will only update fields in spec.hard which are unavailable at that time.

This is done to configure a default Quota in all projects but to allow manual quota increases as the projects’ demands increase.

spec.hard fields in the ResourceQuota object that are not present in the configuration are removed from the object.

Labels and annotations on the ResourceQuota config get merged with the respective fields on existing ResourceQuotas.

An optional projectSelector narrows down the amount of projects that are equipped with the given config.

If multiple configs match for a project, then only the first match in the list is applied to the project namespace.

The .status.phase of the Project resources is set to Ready or Failed by the reconciler to indicate whether the reconciliation loop was performed successfully.

Also, it generates Events to provide further information about its operations.

When a Project is marked for deletion, the controller ensures that there are no Shoots left in the project namespace.

Once all Shoots are gone, the Namespace and Project are released.

“Stale Projects” Reconciler

As Gardener is a large-scale Kubernetes as a Service, it is designed for being used by a large amount of end-users.

Over time, it is likely to happen that some of the hundreds or thousands of Project resources are no longer actively used.

Gardener offers the “stale projects” reconciler which will take care of identifying such stale projects, marking them with a “warning”, and eventually deleting them after a certain time period. This reconciler is enabled by default and works as follows:

- Projects are considered as “stale”/not actively used when all of the following conditions apply: The namespace associated with the

Projectdoes not have any…Shootresources.BackupEntryresources.Secretresources that are referenced by aSecretBindingor aCredentialsBindingthat is in use by aShoot(not necessarily in the same namespace).WorkloadIdentityresources that are referenced by aCredentialsBindingthat is in use by aShoot(not necessarily in the same namespace).Quotaresources that are referenced by aSecretBindingor aCredentialsBindingthat is in use by aShoot(not necessarily in the same namespace).- The time period when the project was used for the last time (

status.lastActivityTimestamp) is longer than the configuredminimumLifetimeDays

If a project is considered “stale”, then its .status.staleSinceTimestamp will be set to the time when it was first detected to be stale.

If it gets actively used again, this timestamp will be removed.

After some time, the .status.staleAutoDeleteTimestamp will be set to a timestamp after which Gardener will auto-delete the Project resource if it still is not actively used.

The component configuration of the gardener-controller-manager offers to configure the following options:

minimumLifetimeDays: Don’t consider newly createdProjects as “stale” too early to give people/end-users some time to onboard and get familiar with the system. The “stale project” reconciler won’t set any timestamp forProjects younger thanminimumLifetimeDays. When you change this value, then projects marked as “stale” may be no longer marked as “stale” in case they are young enough, or vice versa.staleGracePeriodDays: Don’t compute auto-delete timestamps for staleProjects that are unused for less thanstaleGracePeriodDays. This is to not unnecessarily make people/end-users nervous “just because” they haven’t actively used theirProjectfor a given amount of time. When you change this value, then already assigned auto-delete timestamps may be removed if the new grace period is not yet exceeded.staleExpirationTimeDays: Expiration time after which staleProjects are finally auto-deleted (after.status.staleSinceTimestamp). If this value is changed and an auto-delete timestamp got already assigned to the projects, then the new value will only take effect if it’s increased. Hence, decreasing thestaleExpirationTimeDayswill not decrease already assigned auto-delete timestamps.

Gardener administrators/operators can exclude specific

Projects from the stale check by annotating the relatedNamespaceresource withproject.gardener.cloud/skip-stale-check=true.

“Activity” Reconciler

Since the other two reconcilers are unable to actively monitor the relevant objects that are used in a Project (Shoot, Secret, etc.), there could be a situation where the user creates and deletes objects in a short period of time. In that case, the Stale Project Reconciler could not see that there was any activity on that project and it will still mark it as a Stale, even though it is actively used.

The Project Activity Reconciler is implemented to take care of such cases. An event handler will notify the reconciler for any activity and then it will update the status.lastActivityTimestamp. This update will also trigger the Stale Project Reconciler.

SecretBinding Controller

SecretBindings reference Secrets and Quotas and are themselves referenced by Shoots.

The controller adds finalizers to the referenced objects to ensure they don’t get deleted while still being referenced.

Similarly, to ensure that SecretBindings in-use are always present in the system until the last referring Shoot gets deleted, the controller adds a finalizer which is only released when there is no Shoot referencing the SecretBinding anymore.

Referenced Secrets will also be labeled with provider.shoot.gardener.cloud/<type>=true, where <type> is the value of the .provider.type of the SecretBinding.

Also, all referenced Secrets, as well as Quotas, will be labeled with reference.gardener.cloud/secretbinding=true to allow for easily filtering for objects referenced by SecretBindings.

Seed Controller

The Seed controller in the gardener-controller-manager reconciles Seed objects with the help of the following reconcilers.

“Main” Reconciler

This reconciliation loop takes care of seed related operations in the garden cluster. When a new Seed object is created,

the reconciler creates a new Namespace in the garden cluster seed-<seed-name>. Namespaces dedicated to single

seed clusters allow us to segregate access permissions i.e., a gardenlet must not have permissions to access objects in

all Namespaces in the garden cluster.

There are objects in a Garden environment which are created once by the operator e.g., default domain secret,

alerting credentials, and are required for operations happening in the gardenlet. Therefore, we not only need a seed specific

Namespace but also a copy of these “shared” objects.

The “main” reconciler takes care about this replication:

| Kind | Namespace | Label Selector |

|---|---|---|

| Secret | garden | gardener.cloud/role |

“Backup Buckets Check” Reconciler

Every time a BackupBucket object is created or updated, the referenced Seed object is enqueued for reconciliation.

It’s the reconciler’s task to check the status subresource of all existing BackupBuckets that reference this Seed.

If at least one BackupBucket has .status.lastError != nil, the BackupBucketsReady condition on the Seed will be set to False, and consequently the Seed is considered as NotReady.

If the SeedBackupBucketsCheckControllerConfiguration (which is part of gardener-controller-managers component configuration) contains a conditionThreshold for the BackupBucketsReady, the condition will instead first be set to Progressing and eventually to False once the conditionThreshold expires. See the example config file for details.

Once the BackupBucket is healthy again, the seed will be re-queued and the condition will turn true.

“Extensions Check” Reconciler

This reconciler reconciles Seed objects and checks whether all ControllerInstallations referencing them are in a healthy state.

Concretely, all three conditions Valid, Installed, and Healthy must have status True and the Progressing condition must have status False.

Based on this check, it maintains the ExtensionsReady condition in the respective Seed’s .status.conditions list.

“Lifecycle” Reconciler

The “Lifecycle” reconciler processes Seed objects which are enqueued every 10 seconds in order to check if the responsible

gardenlet is still responding and operable. Therefore, it checks renewals via Lease objects of the seed in the garden cluster

which are renewed regularly by the gardenlet.

In case a Lease is not renewed for the configured amount in config.controllers.seed.monitorPeriod.duration:

- The reconciler assumes that the

gardenletstopped operating and updates theGardenletReadycondition toUnknown. - Additionally, the conditions and constraints of all

Shootresources scheduled on the affected seed are set toUnknownas well, because a strikinggardenletwon’t be able to maintain these conditions any more. - If the gardenlet’s client certificate has expired (identified based on the

.status.clientCertificateExpirationTimestampfield in theSeedresource) and if it is managed by aManagedSeed, then this will be triggered for a reconciliation. This will trigger the bootstrapping process again and allows gardenlets to obtain a fresh client certificate.

“Reference” Reconciler

Seed objects may specify references to other objects in the garden namespace in the garden cluster which are required for certain features.

For example, operators can configure additional data for extensions via .spec.resources[].

Such objects need a special protection against deletion requests as long as they are still being referenced by one or multiple seeds.

Therefore, this reconciler checks Seeds for referenced objects and adds the finalizer gardener.cloud/reference-protection to their .metadata.finalizers list.

The reconciled Seed also gets this finalizer to enable a proper garbage collection in case the gardener-controller-manager is offline at the moment of an incoming deletion request.

When an object is not actively referenced anymore because the Seed specification has changed or all related seeds were deleted (are in deletion), the controller will remove the added finalizer again so that the object can safely be deleted or garbage collected.

This reconciler inspects the following references:

Secrets andConfigMaps from.spec.resources[]

The checks naturally grow with the number of references that are added to the Seed specification.

Shoot Controller

“Conditions” Reconciler

In case the reconciled Shoot is registered via a ManagedSeed as a seed cluster, this reconciler merges the conditions in the respective Seed’s .status.conditions into the .status.conditions of the Shoot.

This is to provide a holistic view on the status of the registered seed cluster by just looking at the Shoot resource.

“Hibernation” Reconciler

This reconciler is responsible for hibernating or awakening shoot clusters based on the schedules defined in their .spec.hibernation.schedules.

It ignores failed Shoots and those marked for deletion.

“Maintenance” Reconciler

This reconciler is responsible for maintaining shoot clusters based on the time window defined in their .spec.maintenance.timeWindow.

It might auto-update the Kubernetes version or the operating system versions specified in the worker pools (.spec.provider.workers).

It could also add some operation or task annotations. For more information, see Shoot Maintenance.

“Quota” Reconciler