
Deployment

1 - Authentication Gardener Control Plane

Authentication of Gardener Control Plane Components Against the Garden Cluster

Note: This document refers to Gardener’s API server, admission controller, controller manager, and scheduler components. The term “Gardener control plane component” stands for any of them.

There are several authentication possibilities depending on whether or not the concept of Virtual Garden is used.

Virtual Garden is not used, i.e., the runtime Garden cluster is also the target Garden cluster.

Automounted Service Account Token

The easiest way to deploy a Gardener control plane component is to not provide a kubeconfig at all. This way in-cluster configuration and an automounted service account token will be used. The drawback of this approach is that the automounted token will not be automatically rotated.

Service Account Token Volume Projection

Another solution is to use Service Account Token Volume Projection combined with a kubeconfig referencing a token file (see the example below).

    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        certificate-authority-data: <CA-DATA>
        server: https://default.kubernetes.svc.cluster.local
      name: garden
    contexts:
    - context:
        cluster: garden
        user: garden
      name: garden
    current-context: garden
    users:
    - name: garden
      user:
        tokenFile: /var/run/secrets/projected/serviceaccount/token

This will allow for automatic rotation of the service account token by the kubelet. The configuration can be achieved by setting both .Values.global.<GardenerControlPlaneComponent>.serviceAccountTokenVolumeProjection.enabled: true and .Values.global.<GardenerControlPlaneComponent>.kubeconfig in the respective chart’s values.yaml file.

Virtual Garden is used, i.e., the runtime Garden cluster is different from the target Garden cluster.

Service Account

The easiest way to set up the authentication is to create a service account in the target cluster and bind the respective roles to it. Then use the generated service account token to craft a kubeconfig, which will be used by the workload in the runtime cluster. This approach does not provide a solution for the rotation of the service account token. However, this setup can be achieved by setting .Values.global.deployment.virtualGarden.enabled: true and following these steps:

- Deploy the application part of the charts in the target cluster.
- Get the service account token and craft the kubeconfig.
- Set the crafted kubeconfig and deploy the runtime part of the charts in the runtime cluster.
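The “craft the kubeconfig” step above can be sketched as follows. This is only an illustration: the helper name build_token_kubeconfig, the entry name virtual-garden, and all argument values are placeholders chosen for the example and are not part of the Gardener charts. The result is printed as JSON, which is also valid YAML.

```python
import json


def build_token_kubeconfig(server, ca_data, token, name="virtual-garden"):
    """Build a kubeconfig structure that authenticates with a static bearer token."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [
            {"name": name, "cluster": {"server": server, "certificate-authority-data": ca_data}}
        ],
        "contexts": [{"name": name, "context": {"cluster": name, "user": name}}],
        "current-context": name,
        "users": [{"name": name, "user": {"token": token}}],
    }


# Placeholder values; replace them with the data of your target cluster.
kubeconfig = build_token_kubeconfig(
    server="https://virtual-garden.api",
    ca_data="<CA-DATA>",
    token="<service-account-token>",
)
print(json.dumps(kubeconfig, indent=2))
```

Because the token is embedded statically, this sketch shares the drawback named above: nothing rotates the token automatically.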

Client Certificate

Another solution is to bind the roles in the target cluster to a User subject instead of a service account and use a client certificate for authentication. This approach does not provide a solution for the client certificate rotation. However, this setup can be achieved by setting both .Values.global.deployment.virtualGarden.enabled: true and .Values.global.deployment.virtualGarden.<GardenerControlPlaneComponent>.user.name, then following these steps:

- Generate a client certificate for the target cluster for the respective user.
- Deploy the application part of the charts in the target cluster.
- Craft a kubeconfig using the already generated client certificate.
- Set the crafted kubeconfig and deploy the runtime part of the charts in the runtime cluster.

Projected Service Account Token

This approach requires an already deployed and configured oidc-webhook-authenticator (OWA) for the target cluster. Also, the runtime cluster should be registered as a trusted identity provider in the target cluster. Then, projected service account tokens from the runtime cluster can be used to authenticate against the target cluster. The needed steps are as follows:

- Deploy OWA and establish the needed trust.
- Set .Values.global.deployment.virtualGarden.enabled: true and .Values.global.deployment.virtualGarden.<GardenerControlPlaneComponent>.user.name.
  Note: username value will depend on the trust configuration, e.g., <prefix>:system:serviceaccount:<namespace>:<serviceaccount>
- Set .Values.global.<GardenerControlPlaneComponent>.serviceAccountTokenVolumeProjection.enabled: true and .Values.global.<GardenerControlPlaneComponent>.serviceAccountTokenVolumeProjection.audience.
  Note: audience value will depend on the trust configuration, e.g., <client-id-from-trust-config>.
- Craft a kubeconfig (see the example below).
- Deploy the application part of the charts in the target cluster.
- Deploy the runtime part of the charts in the runtime cluster.

    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        certificate-authority-data: <CA-DATA>
        server: https://virtual-garden.api
      name: virtual-garden
    contexts:
    - context:
        cluster: virtual-garden
        user: virtual-garden
      name: virtual-garden
    current-context: virtual-garden
    users:
    - name: virtual-garden
      user:
        tokenFile: /var/run/secrets/projected/serviceaccount/token
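The user name format mentioned in the steps above can be composed as in the following sketch. The function name projected_token_username and the example values are hypothetical; the actual prefix depends entirely on your trust configuration.

```python
def projected_token_username(prefix, namespace, service_account):
    """Compose <prefix>:system:serviceaccount:<namespace>:<serviceaccount>."""
    parts = (("prefix", prefix), ("namespace", namespace), ("service_account", service_account))
    for label, value in parts:
        if not value:
            raise ValueError(f"{label} must not be empty")
    return f"{prefix}:system:serviceaccount:{namespace}:{service_account}"


# Hypothetical values for illustration only.
print(projected_token_username("runtime", "garden", "gardener-apiserver"))
```

The resulting string is what would go into .Values.global.deployment.virtualGarden.<GardenerControlPlaneComponent>.user.name.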

2 - Configuring Logging

Configuring the Logging Stack via gardenlet Configurations

Enable the Logging

To install the Gardener logging stack, the logging.enabled option has to be set to true in the gardenlet configuration:

    logging:
      enabled: true

From now on, each Seed is going to have a logging stack which will collect logs from all pods and some systemd services. Logs related to Shoots with the testing purpose are dropped in the fluent-bit output plugin. Shoots with a purpose other than testing have the same type of log aggregator (but a different instance) as the Seed. The logs can be viewed in Plutono: in the garden namespace for the Seed components, and in the respective shoot control plane namespaces for the Shoots.

Enable Logs from the Shoot’s Node systemd Services

The logs from the systemd services on each node can be retrieved by enabling the logging.shootNodeLogging option in the gardenlet configuration:

    logging:
      enabled: true
      shootNodeLogging:
        shootPurposes:
        - "evaluation"
        - "development"

Under the shootPurposes section, list all the shoot purposes for which the Shoot node logging feature should be enabled. Specifying the testing purpose has no effect because this purpose prevents the logging stack installation.
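The rule described above can be expressed as a small predicate. This is a sketch of the documented behavior only, not gardenlet’s actual implementation; the function name node_logging_enabled is made up for the example.

```python
def node_logging_enabled(shoot_purpose, configured_purposes):
    """Return True if node logging would apply to a Shoot with the given purpose."""
    # The testing purpose prevents the logging stack installation altogether,
    # so listing it under shootPurposes has no effect.
    if shoot_purpose == "testing":
        return False
    return shoot_purpose in configured_purposes


configured = ["evaluation", "testing"]
for purpose in ("evaluation", "testing", "production"):
    print(purpose, node_logging_enabled(purpose, configured))
```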

Logs can be viewed in the operator Plutono!

The dedicated labels are unit, syslog_identifier, and nodename in the Explore menu.

Configuring Central Vali Storage Capacity

By default, the central Vali has 100Gi of storage capacity.

To overwrite the current central Vali storage capacity, the logging.vali.garden.storage setting in the gardenlet’s component configuration should be altered.

If you need to increase it, you can do so without losing the current data by specifying a higher capacity. By doing so, the Vali’s PersistentVolume capacity will be increased instead of deleting the current PV.

However, if you specify less capacity, then the PersistentVolume will be deleted and with it the logs, too.

    logging:
      enabled: true
      vali:
        garden:
          storage: "200Gi"
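Since lowering the capacity deletes the PersistentVolume and the logs with it, it can be worth comparing the old and new values before changing the setting. The following sketch does that under simplifying assumptions: it only understands plain integers and the binary suffixes Ki, Mi, Gi, and Ti, and the names parse_quantity and resize_effect are invented for this example. Treating an equal value as "unchanged" is also an assumption.

```python
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(quantity):
    """Convert a quantity such as "100Gi" into bytes (binary suffixes only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def resize_effect(current, desired):
    """Classify a storage change by its documented effect on the existing data."""
    old, new = parse_quantity(current), parse_quantity(desired)
    if new > old:
        return "expand"      # the PersistentVolume is enlarged, data is kept
    if new < old:
        return "recreate"    # the PersistentVolume is deleted, logs are lost
    return "unchanged"


print(resize_effect("100Gi", "200Gi"))
```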

3 - Deploy Gardenlet

Deploying Gardenlets

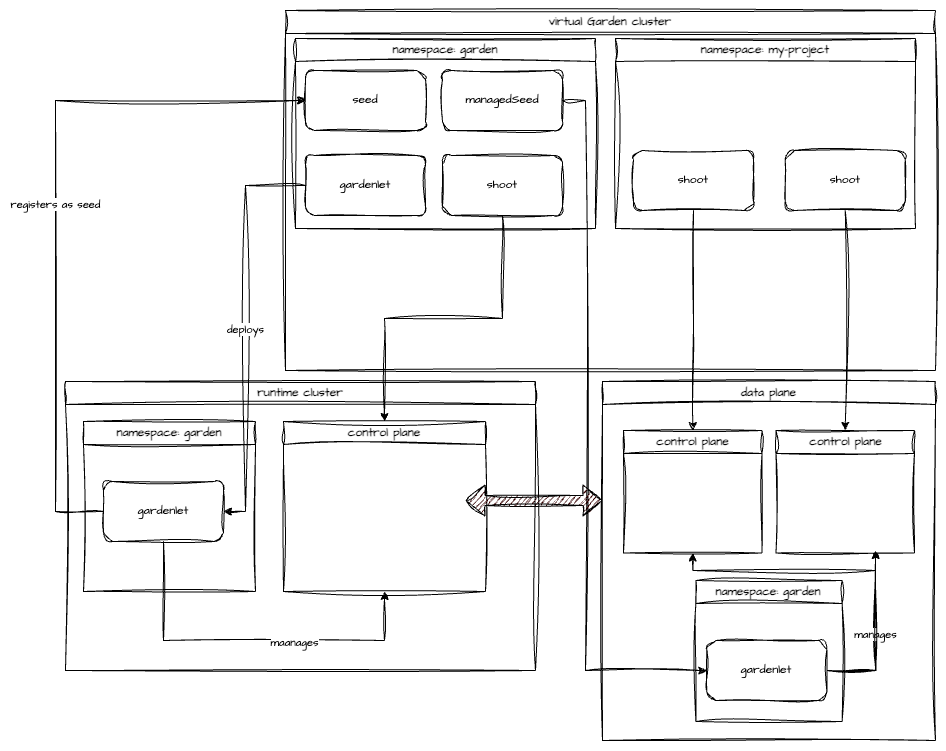

Gardenlets act as decentralized agents to manage the shoot clusters of a seed cluster.

Procedure

After you have deployed the Gardener control plane, you need one or more seed clusters in order to be able to create shoot clusters.

You can either register an existing cluster as “seed” (this could also be the cluster in which the control plane runs), or you can create new clusters (typically shoots, i.e., this approach registers at least one first initial seed) and then register them as “seeds”.

The following sections describe the scenarios.

Register A First Seed Cluster

If you have not registered a seed cluster yet (thus, you need to deploy a first, so-called “unmanaged seed”), your approach depends on how you deployed the Gardener control plane.

Gardener Control Plane Deployed Via gardener/controlplane Helm chart

You can follow Deploy a gardenlet Manually.

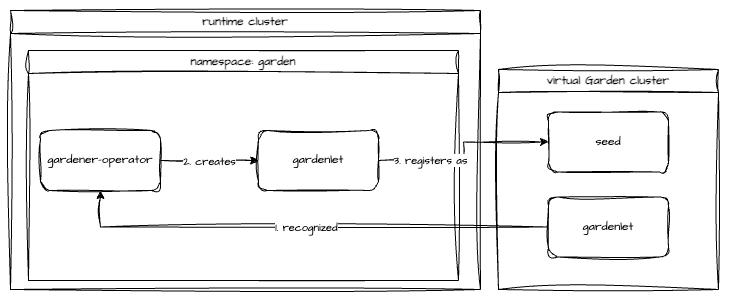

Gardener Control Plane Deployed Via gardener-operator

- If you want to register the same cluster in which gardener-operator runs, or if you want to register another cluster that is reachable (network-wise) for gardener-operator, you can follow Deploy gardenlet via gardener-operator.
- If you want to register a cluster that is not reachable (network-wise) (e.g., because it runs behind a firewall), you can follow Deploy a gardenlet Manually.

Register Further Seed Clusters

If you already have a seed cluster, and you want to deploy further seed clusters (so-called “managed seeds”), you can follow Deploy a gardenlet Automatically.

4 - Deploy Gardenlet Automatically

Deploy a gardenlet Automatically

The gardenlet can automatically deploy itself into shoot clusters, and register them as seed clusters. These clusters are called “managed seeds” (aka “shooted seeds”). This procedure is the preferred way to add additional seed clusters, because shoot clusters already come with production-grade qualities that are also demanded for seed clusters.

Prerequisites

The only prerequisite is to register an initial cluster as a seed cluster that already has a deployed gardenlet (for available options see Deploying Gardenlets).

Tip

The initial seed cluster can be the garden cluster itself, but for better separation of concerns, it is recommended to only register other clusters as seeds.

Auto-Deployment of Gardenlets into Shoot Clusters

For better scalability of your Gardener landscape (e.g., when the total number of Shoots grows), you usually need more seed clusters, which you can create as follows:

- Use the initial seed cluster (“unmanaged seed”) to create shoot clusters that you later register as seed clusters.
- The gardenlet deployed in the initial cluster can deploy itself into the shoot clusters (which eventually registers them as seeds) if ManagedSeed resources are created.

The advantage of this approach is that there’s only one initial gardenlet installation required. Every other managed seed cluster gets an automatically deployed gardenlet.


5 - Deploy Gardenlet Manually

Deploy a gardenlet Manually

Manually deploying a gardenlet is usually only required if the Kubernetes cluster to be registered as a seed cluster is managed via third-party tooling (i.e., the Kubernetes cluster is not a shoot cluster, so Deploy a gardenlet Automatically cannot be used).

In this case, gardenlet needs to be deployed manually, meaning that its Helm chart must be installed.

Tip

Once you’ve deployed a gardenlet manually, you can deploy new gardenlets automatically. The manually deployed gardenlet is then used as a template for the new gardenlets. For more information, see Deploy a gardenlet Automatically.

Prerequisites

Kubernetes Cluster that Should Be Registered as a Seed Cluster

- Verify that the cluster has a supported Kubernetes version.
- Determine the nodes, pods, and services CIDR of the cluster. You need to configure this information in the Seed configuration. Gardener uses this information to check that the shoot cluster isn’t created with overlapping CIDR ranges.
- Every seed cluster needs an Ingress controller which distributes external requests to internal components like Plutono and Prometheus. For this, configure the following lines in your Seed resource:

      spec:
        dns:
          provider:
            type: aws-route53
            secretRef:
              name: ingress-secret
              namespace: garden
        ingress:
          domain: ingress.my-seed.example.com
          controller:
            kind: nginx
            providerConfig: <some-optional-provider-specific-config-for-the-ingressController>
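Before writing the CIDR ranges from the prerequisites into the Seed configuration, you can check locally that none of them overlap. The sketch below uses Python’s standard ipaddress module; the function name overlapping_cidrs and the dictionary keys are assumptions for this example, and the check is independent of the validation Gardener performs itself.

```python
import ipaddress
from itertools import combinations


def overlapping_cidrs(cidrs):
    """Return all pairs of names whose CIDR ranges overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


# Example ranges; replace them with the values of your own cluster.
ranges = {
    "nodes": "10.240.0.0/16",
    "pods": "100.244.0.0/16",
    "services": "100.32.0.0/13",
    "shootDefaults.pods": "100.96.0.0/11",
    "shootDefaults.services": "100.64.0.0/13",
}
print(overlapping_cidrs(ranges))
```

An empty list means that no two of the given ranges overlap.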

Procedure Overview

Create a Bootstrap Token Secret in the kube-system Namespace of the Garden Cluster

The gardenlet needs to talk to the Gardener API server residing in the garden cluster.

Use gardenlet’s ability to request a signed certificate for the garden cluster by leveraging Kubernetes Certificate Signing Requests. The gardenlet performs a TLS bootstrapping process that is similar to the Kubelet TLS Bootstrapping. Make sure that the API server of the garden cluster has bootstrap token authentication enabled.

The client credentials required for the gardenlet’s TLS bootstrapping process need to be either token or certificate (OIDC isn’t supported) and have permissions to create a Certificate Signing Request (CSR).

It’s recommended to use bootstrap tokens due to their desirable security properties (such as a limited token lifetime).

Therefore, first create a bootstrap token secret for the garden cluster:

    apiVersion: v1
    kind: Secret
    metadata:
      # Name MUST be of form "bootstrap-token-<token id>"
      name: bootstrap-token-07401b
      namespace: kube-system
    # Type MUST be 'bootstrap.kubernetes.io/token'
    type: bootstrap.kubernetes.io/token
    stringData:
      # Human readable description. Optional.
      description: "Token to be used by the gardenlet for Seed `sweet-seed`."
      # Token ID and secret. Required.
      token-id: 07401b # 6 characters
      token-secret: f395accd246ae52d # 16 characters
      # Expiration. Optional.
      # expiration: 2017-03-10T03:22:11Z
      # Allowed usages.
      usage-bootstrap-authentication: "true"
      usage-bootstrap-signing: "true"

When you later prepare the gardenlet Helm chart, a kubeconfig based on this token is shared with the gardenlet upon deployment.
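The bootstrap token that ends up in the kubeconfig is the token ID and the token secret joined by a dot. The following sketch validates both parts against the lengths given in the secret above (6 and 16 characters, lowercase letters and digits as Kubernetes bootstrap tokens require) and derives the secret name. The function names are hypothetical helpers for this example.

```python
import re

TOKEN_ID_RE = re.compile(r"[a-z0-9]{6}")
TOKEN_SECRET_RE = re.compile(r"[a-z0-9]{16}")


def bootstrap_token(token_id, token_secret):
    """Return "<token-id>.<token-secret>" after validating both parts."""
    if not TOKEN_ID_RE.fullmatch(token_id):
        raise ValueError("token-id must be 6 characters of [a-z0-9]")
    if not TOKEN_SECRET_RE.fullmatch(token_secret):
        raise ValueError("token-secret must be 16 characters of [a-z0-9]")
    return f"{token_id}.{token_secret}"


def bootstrap_secret_name(token_id):
    """Return the mandatory secret name for a given token ID."""
    return f"bootstrap-token-{token_id}"


print(bootstrap_secret_name("07401b"))
print(bootstrap_token("07401b", "f395accd246ae52d"))
```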

Prepare the gardenlet Helm Chart

This section only describes the minimal configuration, using the global configuration values of the gardenlet Helm chart. For an overview of all values, see the configuration values. We refer to the global configuration values as gardenlet configuration in the following procedure.

1. Create a gardenlet configuration gardenlet-values.yaml based on this template.

2. Create a bootstrap kubeconfig based on the bootstrap token created in the garden cluster. Replace the <bootstrap-token> with token-id.token-secret (from our previous example: 07401b.f395accd246ae52d) from the bootstrap token secret.

       apiVersion: v1
       kind: Config
       current-context: gardenlet-bootstrap@default
       clusters:
       - cluster:
           certificate-authority-data: <ca-of-garden-cluster>
           server: https://<endpoint-of-garden-cluster>
         name: default
       contexts:
       - context:
           cluster: default
           user: gardenlet-bootstrap
         name: gardenlet-bootstrap@default
       users:
       - name: gardenlet-bootstrap
         user:
           token: <bootstrap-token>

3. In the gardenClientConnection.bootstrapKubeconfig section of your gardenlet configuration, provide the bootstrap kubeconfig together with a name and namespace to the gardenlet Helm chart.

       gardenClientConnection:
         bootstrapKubeconfig:
           name: gardenlet-kubeconfig-bootstrap
           namespace: garden
           kubeconfig: |
             <bootstrap-kubeconfig>  # will be base64 encoded by helm

   The bootstrap kubeconfig is stored in the specified secret.

4. In the gardenClientConnection.kubeconfigSecret section of your gardenlet configuration, define a name and a namespace where the gardenlet stores the real kubeconfig that it creates during the bootstrap process. If the secret doesn’t exist, the gardenlet creates it for you.

       gardenClientConnection:
         kubeconfigSecret:
           name: gardenlet-kubeconfig
           namespace: garden

Updating the Garden Cluster CA

The kubeconfig created by the gardenlet in step 4 will not be recreated as long as it exists, even if a new bootstrap kubeconfig is provided.

To enable rotation of the garden cluster CA certificate, a new bundle can be provided via the gardenClientConnection.gardenClusterCACert field.

If the provided bundle differs from the one currently in the gardenlet’s kubeconfig secret, the secret will be updated.

To remove the CA completely (e.g. when switching to a publicly trusted endpoint), this field can be set to either none or null.

Prepare Seed Specification

When gardenlet starts, it tries to register a Seed resource in the garden cluster based on the specification provided in seedConfig in its configuration.

This procedure doesn’t describe all the possible configurations for the Seed resource. For more information, see:

1. Supply the Seed resource in the seedConfig section of your gardenlet configuration gardenlet-values.yaml.

2. Add the seedConfig to your gardenlet configuration gardenlet-values.yaml. The field seedConfig.spec.provider.type specifies the infrastructure provider type (for example, aws) of the seed cluster. For all supported infrastructure providers, see Known Extension Implementations.

       # ...
       seedConfig:
         metadata:
           name: sweet-seed
           labels:
             environment: evaluation
           annotations:
             custom.gardener.cloud/option: special
         spec:
           dns:
             provider:
               type: <provider>
               secretRef:
                 name: ingress-secret
                 namespace: garden
           ingress: # see prerequisites
             domain: ingress.dev.my-seed.example.com
             controller:
               kind: nginx
           networks: # see prerequisites
             nodes: 10.240.0.0/16
             pods: 100.244.0.0/16
             services: 100.32.0.0/13
             shootDefaults: # optional: non-overlapping default CIDRs for shoot clusters of that Seed
               pods: 100.96.0.0/11
               services: 100.64.0.0/13
           provider:
             region: eu-west-1
             type: <provider>

Apart from the seed’s name, seedConfig.metadata can optionally contain labels and annotations.

gardenlet will set the labels of the registered Seed object to the labels given in the seedConfig plus gardener.cloud/role=seed.

Any custom labels on the Seed object will be removed on the next restart of gardenlet.

If a label is removed from the seedConfig it is removed from the Seed object as well.

In contrast to labels, annotations in the seedConfig are added to existing annotations on the Seed object.

Thus, custom annotations that are added to the Seed object during runtime are not removed by gardenlet on restarts.

Furthermore, if an annotation is removed from the seedConfig, gardenlet does not remove it from the Seed object.
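The different treatment of labels and annotations can be summarized in a short sketch. It models only the behavior described above and is not gardenlet’s actual code; the function names are invented, and the choice that a seedConfig annotation wins over an existing annotation with the same key is an assumption.

```python
def reconcile_labels(existing, configured):
    """Labels are rebuilt from the seedConfig; custom labels on the Seed are dropped."""
    # `existing` is intentionally ignored to mirror the documented overwrite.
    labels = dict(configured)
    labels["gardener.cloud/role"] = "seed"
    return labels


def reconcile_annotations(existing, configured):
    """Annotations from the seedConfig are added; existing ones are never removed."""
    merged = dict(existing)
    merged.update(configured)  # assumption: the seedConfig value wins on conflicts
    return merged


seed_labels = {"environment": "evaluation", "custom": "added-at-runtime"}
seed_annotations = {"note": "added-at-runtime"}
print(reconcile_labels(seed_labels, {"environment": "evaluation"}))
print(reconcile_annotations(seed_annotations, {"custom.gardener.cloud/option": "special"}))
```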

Optional: Enable HA Mode

You may consider running gardenlet with multiple replicas, especially if the seed cluster is configured to host HA shoot control planes.

Therefore, the following Helm chart values define the degree of high availability you want to achieve for the gardenlet deployment.

    replicaCount: 2 # or more if a higher failure tolerance is required.
    failureToleranceType: zone # One of `zone` or `node` - defines how replicas are spread.

Optional: Enable Backup and Restore

The seed cluster can be set up with backup and restore for the main etcds of shoot clusters.

Gardener uses etcd-backup-restore that integrates with different storage providers to store the shoot cluster’s main etcd backups.

Make sure to obtain client credentials that have sufficient permissions with the chosen storage provider.

Create a secret in the garden cluster with client credentials for the storage provider. The format of the secret is cloud provider specific and can be found in the repository of the respective Gardener extension. For example, the secret for AWS S3 can be found in the AWS provider extension (30-etcd-backup-secret.yaml).

    apiVersion: v1
    kind: Secret
    metadata:
      name: sweet-seed-backup
      namespace: garden
    type: Opaque
    data:
      # client credentials format is provider specific

Configure the Seed resource in the seedConfig section of your gardenlet configuration to use backup and restore:

    # ...
    seedConfig:
      metadata:
        name: sweet-seed
      spec:
        backup:
          provider: <provider>
          credentialsRef:
            apiVersion: v1
            kind: Secret
            name: sweet-seed-backup
            namespace: garden

Optional: Enable Self-Upgrades

To relieve you of the recurring task of deploying gardenlet’s Helm chart whenever you want to upgrade its version, gardenlet supports self-upgrades.

The way this works is that it pulls information (its configuration and deployment values) from a seedmanagement.gardener.cloud/v1alpha1.Gardenlet resource in the garden cluster.

This resource must be in the garden namespace and must have the same name as the Seed the gardenlet is responsible for.

For more information, see this section.

In order to make gardenlet automatically create a corresponding seedmanagement.gardener.cloud/v1alpha1.Gardenlet resource, you must provide

    selfUpgrade:
      deployment:
        helm:
          ociRepository:
            ref: <url-to-oci-repository-containing-gardenlet-helm-chart>

in your gardenlet-values.yaml file.

Please replace the ref placeholder with the URL to the OCI repository containing the gardenlet Helm chart you are installing.

Note

If you don’t configure this selfUpgrade section in the initial deployment, you can also do it later, or you directly create the corresponding seedmanagement.gardener.cloud/v1alpha1.Gardenlet resource in the garden cluster.

Deploy the gardenlet

The gardenlet-values.yaml looks something like this (with backup for shoot clusters enabled):

    # <default config>
    # ...
    config:
      gardenClientConnection:
        # ...
        bootstrapKubeconfig:
          name: gardenlet-bootstrap-kubeconfig
          namespace: garden
          kubeconfig: |
            apiVersion: v1
            clusters:
            - cluster:
                certificate-authority-data: <dummy>
                server: <my-garden-cluster-endpoint>
              name: my-kubernetes-cluster
            # ...
        kubeconfigSecret:
          name: gardenlet-kubeconfig
          namespace: garden
      # ...
      # <default config>
      # ...
      seedConfig:
        metadata:
          name: sweet-seed
        spec:
          dns:
            provider:
              type: <provider>
              secretRef:
                name: ingress-secret
                namespace: garden
          ingress: # see prerequisites
            domain: ingress.dev.my-seed.example.com
            controller:
              kind: nginx
          networks:
            nodes: 10.240.0.0/16
            pods: 100.244.0.0/16
            services: 100.32.0.0/13
            shootDefaults:
              pods: 100.96.0.0/11
              services: 100.64.0.0/13
          provider:
            region: eu-west-1
            type: <provider>
          backup:
            provider: <provider>
            credentialsRef:
              apiVersion: v1
              kind: Secret
              name: sweet-seed-backup
              namespace: garden

Deploy the gardenlet Helm chart to the Kubernetes cluster:

    helm install gardenlet charts/gardener/gardenlet \
      --namespace garden \
      -f gardenlet-values.yaml \
      --wait

This Helm chart creates:

- A service account gardenlet that the gardenlet can use to talk to the Seed API server.
- RBAC roles for the service account (full admin rights at the moment).
- The secret (garden/gardenlet-bootstrap-kubeconfig) containing the bootstrap kubeconfig.
- The gardenlet deployment in the garden namespace.

Check that the gardenlet Is Successfully Deployed

1. Check that the gardenlet’s certificate bootstrap was successful.

   Check if the secret gardenlet-kubeconfig in the namespace garden in the seed cluster is created and contains a kubeconfig with a valid certificate.

   1. Get the kubeconfig from the created secret.

          $ kubectl -n garden get secret gardenlet-kubeconfig -o json | jq -r .data.kubeconfig | base64 -d

   2. Test against the garden cluster and verify it’s working.

   3. Extract the client-certificate-data from the user gardenlet.

   4. View the certificate:

          $ openssl x509 -in ./gardenlet-cert -noout -text

2. Check that the bootstrap secret gardenlet-bootstrap-kubeconfig has been deleted from the seed cluster in namespace garden.

3. Check that the seed cluster is registered and READY in the garden cluster.

   Check that the seed cluster sweet-seed exists and all conditions indicate that it’s available. If so, the gardenlet is sending regular heartbeats and the seed bootstrapping was successful.

   Check that the conditions on the Seed resource look similar to the following:

       $ kubectl get seed sweet-seed -o json | jq .status.conditions
       [
         {
           "lastTransitionTime": "2020-07-17T09:17:29Z",
           "lastUpdateTime": "2020-07-17T09:17:29Z",
           "message": "Gardenlet is posting ready status.",
           "reason": "GardenletReady",
           "status": "True",
           "type": "GardenletReady"
         },
         {
           "lastTransitionTime": "2020-07-17T09:17:49Z",
           "lastUpdateTime": "2020-07-17T09:53:17Z",
           "message": "Backup Buckets are available.",
           "reason": "BackupBucketsAvailable",
           "status": "True",
           "type": "BackupBucketsReady"
         }
       ]
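If you prefer to evaluate the conditions programmatically rather than by eye, a check along the following lines may help. It is a sketch that works on the JSON printed by the kubectl command above; the function name not_ready_conditions is made up, and the embedded sample is shortened from the example output.

```python
import json


def not_ready_conditions(conditions):
    """Return the types of all conditions whose status is not "True"."""
    return [c["type"] for c in conditions if c.get("status") != "True"]


# Shortened sample of `kubectl get seed sweet-seed -o json | jq .status.conditions`.
sample = json.loads("""
[
  {"type": "GardenletReady", "status": "True", "reason": "GardenletReady"},
  {"type": "BackupBucketsReady", "status": "True", "reason": "BackupBucketsAvailable"}
]
""")

failing = not_ready_conditions(sample)
print("seed looks available" if not failing else f"not ready: {failing}")
```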

Self Upgrades

To keep the gardenlets in such “unmanaged seeds” up-to-date (i.e., in seeds that are not shoot clusters), their Helm chart must be deployed regularly. This requires network connectivity to such clusters, which can be challenging if they reside behind a firewall or in restricted environments. It is much simpler if gardenlet keeps itself up-to-date, based on configuration read from the garden cluster. This approach greatly reduces operational complexity.

gardenlet runs a controller which watches for seedmanagement.gardener.cloud/v1alpha1.Gardenlet resources in the garden cluster in the garden namespace having the same name as the Seed the gardenlet is responsible for.

Such resources contain its component configuration and deployment values.

Most notably, a URL to an OCI repository containing gardenlet’s Helm chart is included.

An example Gardenlet resource looks like this:

    apiVersion: seedmanagement.gardener.cloud/v1alpha1
    kind: Gardenlet
    metadata:
      name: local
      namespace: garden
    spec:
      deployment:
        replicaCount: 1
        revisionHistoryLimit: 2
        helm:
          ociRepository:
            ref: <url-to-gardenlet-chart-repository>:v1.97.0
      config:
        apiVersion: gardenlet.config.gardener.cloud/v1alpha1
        kind: GardenletConfiguration
        gardenClientConnection:
          kubeconfigSecret:
            name: gardenlet-kubeconfig
            namespace: garden
        controllers:
          shoot:
            reconcileInMaintenanceOnly: true
            respectSyncPeriodOverwrite: true
          shootState:
            concurrentSyncs: 0
        featureGates:
          DefaultSeccompProfile: true
        logging:
          enabled: true
          vali:
            enabled: true
          shootNodeLogging:
            shootPurposes:
            - infrastructure
            - production
            - development
            - evaluation
        seedConfig:
          apiVersion: core.gardener.cloud/v1beta1
          kind: Seed
          metadata:
            labels:
              base: kind
          spec:
            backup:
              provider: local
              region: local
              credentialsRef:
                apiVersion: v1
                kind: Secret
                name: backup-local
                namespace: garden
            dns:
              provider:
                secretRef:
                  name: internal-domain-internal-local-gardener-cloud
                  namespace: garden
                type: local
            ingress:
              controller:
                kind: nginx
              domain: ingress.local.seed.local.gardener.cloud
            networks:
              nodes: 172.18.0.0/16
              pods: 10.1.0.0/16
              services: 10.2.0.0/16
              shootDefaults:
                pods: 10.3.0.0/16
                services: 10.4.0.0/16
            provider:
              region: local
              type: local
              zones:
              - "0"
            settings:
              excessCapacityReservation:
                enabled: false
              scheduling:
                visible: true
              verticalPodAutoscaler:
                enabled: true

On reconciliation, gardenlet downloads the Helm chart, renders it with the provided values, and then applies it to its own cluster. Hence, in order to keep a gardenlet up-to-date, it is enough to update the tag/digest of the OCI repository ref for the Helm chart:

    spec:
      deployment:
        helm:
          ociRepository:
            ref: <url-to-gardenlet-chart-repository>:v1.97.0

This way, network connectivity to the cluster in which gardenlet runs is not required at all (at least for deployment purposes).

When you delete this resource, nothing happens: gardenlet remains running with the configuration as before.

However, self-upgrades are obviously not possible anymore.

In order to upgrade it, you have to either recreate the Gardenlet object, or redeploy the Helm chart.
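Updating the tag of the OCI repository ref, as described above, is a plain string operation. The following sketch shows one way to do it while leaving a registry port untouched. The function name with_tag and the registry URL are hypothetical; a digest, if present, is dropped in favor of the new tag.

```python
def with_tag(ref, new_tag):
    """Return the OCI reference with its tag replaced (or added)."""
    base = ref.split("@", 1)[0]          # drop a trailing digest, if any
    last_slash = base.rfind("/")
    last_colon = base.rfind(":")
    if last_colon > last_slash:          # a colon after the last slash is a tag separator
        base = base[:last_colon]
    return f"{base}:{new_tag}"


# Hypothetical repository URL for illustration only.
print(with_tag("registry.example.com:5000/charts/gardenlet:v1.97.0", "v1.98.0"))
```

The new value would then be written to .spec.deployment.helm.ociRepository.ref of the Gardenlet resource.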


6 - Deploy Gardenlet Via Operator

Deploy a gardenlet Via gardener-operator

The gardenlet can automatically be deployed by gardener-operator into existing Kubernetes clusters in order to register them as seeds.

Prerequisites

Using this method only works when gardener-operator is managing the garden cluster.

If you have used the gardener/controlplane Helm chart for the deployment of the Gardener control plane, please refer to this document.

Tip

The initial seed cluster can be the garden cluster itself, but for better separation of concerns, it is recommended to only register other clusters as seeds.

Deployment of gardenlets

Using this method, gardener-operator is only taking care of the very first deployment of gardenlet.

Once running, the gardenlet leverages the self-upgrade strategy in order to keep itself up-to-date.

Concretely, gardener-operator only acts when there is no respective Seed resource yet.

In order to request a gardenlet deployment, create the following resource in the (virtual) garden cluster:

    apiVersion: seedmanagement.gardener.cloud/v1alpha1
    kind: Gardenlet
    metadata:
      name: local
      namespace: garden
    spec:
      deployment:
        replicaCount: 1
        revisionHistoryLimit: 2
        helm:
          ociRepository:
            ref: <url-to-gardenlet-chart-repository>:v1.97.0
      config:
        apiVersion: gardenlet.config.gardener.cloud/v1alpha1
        kind: GardenletConfiguration
        controllers:
          shoot:
            reconcileInMaintenanceOnly: true
            respectSyncPeriodOverwrite: true
          shootState:
            concurrentSyncs: 0
        logging:
          enabled: true
          vali:
            enabled: true
          shootNodeLogging:
            shootPurposes:
            - infrastructure
            - production
            - development
            - evaluation
        seedConfig:
          apiVersion: core.gardener.cloud/v1beta1
          kind: Seed
          metadata:
            labels:
              base: kind
          spec:
            backup:
              provider: local
              region: local
              credentialsRef:
                apiVersion: v1
                kind: Secret
                name: backup-local
                namespace: garden
            dns:
              provider:
                secretRef:
                  name: internal-domain-internal-local-gardener-cloud
                  namespace: garden
                type: local
            ingress:
              controller:
                kind: nginx
              domain: ingress.local.seed.local.gardener.cloud
            networks:
              nodes: 172.18.0.0/16
              pods: 10.1.0.0/16
              services: 10.2.0.0/16
              shootDefaults:
                pods: 10.3.0.0/16
                services: 10.4.0.0/16
            provider:
              region: local
              type: local
              zones:
              - "0"
            settings:
              excessCapacityReservation:
                enabled: false
              scheduling:
                visible: true
              verticalPodAutoscaler:
                enabled: true

This causes gardener-operator to deploy gardenlet to the same cluster where it is running.

Once it comes up, gardenlet will create a Seed resource with the same name and use the Gardenlet resource for self-upgrades (see this document).

Remote Clusters

If you want gardener-operator to deploy gardenlet into some other cluster, create a kubeconfig Secret and reference it in the Gardenlet resource:

    apiVersion: v1
    kind: Secret
    metadata:
      name: remote-cluster-kubeconfig
      namespace: garden
    type: Opaque
    data:
      kubeconfig: base64(kubeconfig-to-remote-cluster)
    ---
    apiVersion: seedmanagement.gardener.cloud/v1alpha1
    kind: Gardenlet
    metadata:
      name: local
      namespace: garden
    spec:
      kubeconfigSecretRef:
        name: remote-cluster-kubeconfig
      # ...

Important

After successful deployment of gardenlet, gardener-operator will delete the remote-cluster-kubeconfig Secret and set .spec.kubeconfigSecretRef to nil. This is because the kubeconfig will never be needed again (gardener-operator is only responsible for initial deployment, and gardenlet updates itself with an in-cluster kubeconfig). In case your landscape is managed via a GitOps approach, you might want to reflect this change in your repository.

Forceful Re-Deployment

In certain scenarios, it might be necessary to forcefully re-deploy the gardenlet.

For example, in case the gardenlet client certificate has expired or is “lost”, or the gardenlet Deployment has been “accidentally” (😉) deleted from the seed cluster.

You can trigger the forceful re-deployment by annotating the Gardenlet with

gardener.cloud/operation=force-redeploy

Tip

Do not forget to create the kubeconfig Secret and re-add the .spec.kubeconfigSecretRef to the Gardenlet specification if this is a remote cluster.

gardener-operator will remove the operation annotation after it’s done.

Just like after the initial deployment, it’ll also delete the kubeconfig Secret and set .spec.kubeconfigSecretRef to nil, see above.

Configuring the connection to garden cluster

The garden cluster connection of your seeds is configured automatically by gardener-operator.

You could also specify the gardenClusterAddress and gardenClusterCACert in the Gardenlet resource manually, but this is not recommended.

If gardenClusterAddress is unset, gardener-operator will determine the address automatically based on the Garden resource.

It is set to "api." + garden.spec.virtualCluster.dns.domains[0] which should cover most use cases since this is the immutable address of the garden cluster.

If the runtime cluster is used as a seed cluster and IstioTLSTermination feature is not active, gardenlet overwrites the address with the internal service address of the garden cluster at runtime.

This happens for this single seed cluster only, so any managed seed running on this seed cluster will still use the default address of the garden cluster.
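The default address rule quoted above can be written out as a short sketch. It operates on a Garden resource loaded into a dictionary; the function name default_garden_cluster_address is invented, and accepting a domain entry either as a plain string or as a mapping with a name key is an assumption made so the example does not depend on one particular API version.

```python
def default_garden_cluster_address(garden):
    """Return "api." + the first domain of garden.spec.virtualCluster.dns.domains."""
    first = garden["spec"]["virtualCluster"]["dns"]["domains"][0]
    # Assumption: an entry is either a plain string or a mapping with a "name" key.
    domain = first["name"] if isinstance(first, dict) else first
    return "api." + domain


# Hypothetical Garden resource, reduced to the relevant fields.
garden = {"spec": {"virtualCluster": {"dns": {"domains": ["garden.example.com"]}}}}
print(default_garden_cluster_address(garden))
```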

gardenClusterCACert is deprecated and should not be set. In this case, gardenlet will update the garden cluster CA certificate automatically from the garden cluster.

If a seed managed by a Gardenlet resource permanently loses access to the garden cluster for some reason, you can re-establish the connection by using the Forceful Re-Deployment feature.

7 - Feature Gates

Feature Gates in Gardener

This page contains an overview of the various feature gates an administrator can specify on different Gardener components.

Overview

Feature gates are a set of key=value pairs that describe Gardener features. You can turn these features on or off using the component configuration file for a specific component.
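
To make the key=value idea concrete, here is a minimal Python sketch that turns a list of such pairs into a mapping of feature name to boolean. The comma-separated input string and the function name are assumptions made for illustration only; the component configuration file itself may express the same pairs in a different notation.

```python
def parse_feature_gates(spec: str) -> dict[str, bool]:
    """Parse "Name=true,Other=false" into {"Name": True, "Other": False}."""
    gates = {}
    for pair in spec.split(","):
        pair = pair.strip()
        if not pair:
            continue  # tolerate empty segments such as a trailing comma
        name, sep, value = pair.partition("=")
        value = value.strip().lower()
        if not sep or value not in ("true", "false"):
            raise ValueError(f"invalid feature gate entry: {pair!r}")
        gates[name.strip()] = value == "true"
    return gates


print(parse_feature_gates("DefaultSeccompProfile=true, NewWorkerPoolHash=false"))
```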

Each Gardener component lets you enable or disable a set of feature gates that are relevant to that component. For example, this is the configuration of the gardenlet component.

The following tables are a summary of the feature gates that you can set on different Gardener components.

- The “Since” column contains the Gardener release when a feature is introduced or its release stage is changed.

- The “Until” column, if not empty, contains the last Gardener release in which you can still use a feature gate.

- If a feature is in the Alpha or Beta state, you can find the feature listed in the Alpha/Beta feature gate table.

- If a feature is stable, you can find all stages for that feature listed in the Graduated/Deprecated feature gate table.

- The Graduated/Deprecated feature gate table also lists deprecated and withdrawn features.
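
The “Since” and “Until” columns can be read as an inclusive release range per row. The following Python sketch shows one way to look up the row that applies to a given Gardener release. The type and function names are hypothetical, and the rows are copied from the UseNamespacedCloudProfile entries of the Alpha/Beta table. Releases are compared as integer tuples because a plain string comparison would sort 1.112 before 1.92.

```python
from typing import NamedTuple, Optional


class GateRow(NamedTuple):
    default: Optional[bool]  # None for a "Removed" row, which has no default
    stage: str
    since: str
    until: Optional[str]  # None when the "Until" column is empty


def version_key(version: str) -> tuple[int, ...]:
    """Turn "1.112" into (1, 112) so that 1.92 sorts before 1.112."""
    return tuple(int(part) for part in version.split("."))


def row_for_release(rows: list[GateRow], release: str) -> Optional[GateRow]:
    """Return the row whose Since/Until range contains the release, if any."""
    wanted = version_key(release)
    for row in rows:
        if version_key(row.since) > wanted:
            continue
        if row.until is None or wanted <= version_key(row.until):
            return row
    return None


use_namespaced_cloud_profile = [
    GateRow(False, "Alpha", "1.92", "1.111"),
    GateRow(True, "Beta", "1.112", None),
]

print(row_for_release(use_namespaced_cloud_profile, "1.100").stage)  # Alpha
print(row_for_release(use_namespaced_cloud_profile, "1.112").stage)  # Beta
print(row_for_release(use_namespaced_cloud_profile, "1.91"))         # None
```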

Feature Gates for Alpha or Beta Features

| Feature | Default | Stage | Since | Until |
|---|---|---|---|---|
| DefaultSeccompProfile | false | Alpha | 1.54 | |
| UseNamespacedCloudProfile | false | Alpha | 1.92 | 1.111 |
| UseNamespacedCloudProfile | true | Beta | 1.112 | |
| ShootCredentialsBinding | false | Alpha | 1.98 | 1.106 |
| ShootCredentialsBinding | true | Beta | 1.107 | |
| NewWorkerPoolHash | false | Alpha | 1.98 | |
| CredentialsRotationWithoutWorkersRollout | false | Alpha | 1.112 | 1.120 |
| CredentialsRotationWithoutWorkersRollout | true | Beta | 1.121 | |
| InPlaceNodeUpdates | false | Alpha | 1.113 | |
| IstioTLSTermination | false | Alpha | 1.114 | |
| CloudProfileCapabilities | false | Alpha | 1.117 | |
| DoNotCopyBackupCredentials | false | Alpha | 1.121 | 1.122 |
| DoNotCopyBackupCredentials | true | Beta | 1.123 | |

Feature Gates for Graduated or Deprecated Features

| Feature | Default | Stage | Since | Until |
|---|---|---|---|---|
| NodeLocalDNS | false | Alpha | 1.7 | 1.25 |
| NodeLocalDNS | | Removed | 1.26 | |
| KonnectivityTunnel | false | Alpha | 1.6 | 1.26 |
| KonnectivityTunnel | | Removed | 1.27 | |
| MountHostCADirectories | false | Alpha | 1.11 | 1.25 |
| MountHostCADirectories | true | Beta | 1.26 | 1.27 |
| MountHostCADirectories | true | GA | 1.27 | 1.29 |
| MountHostCADirectories | | Removed | 1.30 | |
| DisallowKubeconfigRotationForShootInDeletion | false | Alpha | 1.28 | 1.31 |
| DisallowKubeconfigRotationForShootInDeletion | true | Beta | 1.32 | 1.35 |
| DisallowKubeconfigRotationForShootInDeletion | true | GA | 1.36 | 1.37 |
| DisallowKubeconfigRotationForShootInDeletion | | Removed | 1.38 | |
| Logging | false | Alpha | 0.13 | 1.40 |
| Logging | | Removed | 1.41 | |
| AdminKubeconfigRequest | false | Alpha | 1.24 | 1.38 |
| AdminKubeconfigRequest | true | Beta | 1.39 | 1.41 |
| AdminKubeconfigRequest | true | GA | 1.42 | 1.49 |
| AdminKubeconfigRequest | | Removed | 1.50 | |
| UseDNSRecords | false | Alpha | 1.27 | 1.38 |
| UseDNSRecords | true | Beta | 1.39 | 1.43 |
| UseDNSRecords | true | GA | 1.44 | 1.49 |
| UseDNSRecords | | Removed | 1.50 | |
| CachedRuntimeClients | false | Alpha | 1.7 | 1.33 |
| CachedRuntimeClients | true | Beta | 1.34 | 1.44 |
| CachedRuntimeClients | true | GA | 1.45 | 1.49 |
| CachedRuntimeClients | | Removed | 1.50 | |
| DenyInvalidExtensionResources | false | Alpha | 1.31 | 1.41 |
| DenyInvalidExtensionResources | true | Beta | 1.42 | 1.44 |
| DenyInvalidExtensionResources | true | GA | 1.45 | 1.49 |
| DenyInvalidExtensionResources | | Removed | 1.50 | |
| RotateSSHKeypairOnMaintenance | false | Alpha | 1.28 | 1.44 |
| RotateSSHKeypairOnMaintenance | true | Beta | 1.45 | 1.47 |
| RotateSSHKeypairOnMaintenance (deprecated) | false | Beta | 1.48 | 1.50 |
| RotateSSHKeypairOnMaintenance (deprecated) | | Removed | 1.51 | |
| ShootForceDeletion | false | Alpha | 1.81 | 1.90 |
| ShootForceDeletion | true | Beta | 1.91 | 1.110 |
| ShootForceDeletion | true | GA | 1.111 | 1.119 |
| ShootForceDeletion | | Removed | 1.120 | |
| ShootMaxTokenExpirationOverwrite | false | Alpha | 1.43 | 1.44 |
| ShootMaxTokenExpirationOverwrite | true | Beta | 1.45 | 1.47 |
| ShootMaxTokenExpirationOverwrite | true | GA | 1.48 | 1.50 |
| ShootMaxTokenExpirationOverwrite | | Removed | 1.51 | |
| ShootMaxTokenExpirationValidation | false | Alpha | 1.43 | 1.45 |
| ShootMaxTokenExpirationValidation | true | Beta | 1.46 | 1.47 |
| ShootMaxTokenExpirationValidation | true | GA | 1.48 | 1.50 |
| ShootMaxTokenExpirationValidation | | Removed | 1.51 | |
| WorkerPoolKubernetesVersion | false | Alpha | 1.35 | 1.45 |
| WorkerPoolKubernetesVersion | true | Beta | 1.46 | 1.49 |
| WorkerPoolKubernetesVersion | true | GA | 1.50 | 1.51 |
| WorkerPoolKubernetesVersion | | Removed | 1.52 | |
| DisableDNSProviderManagement | false | Alpha | 1.41 | 1.49 |
| DisableDNSProviderManagement | true | Beta | 1.50 | 1.51 |
| DisableDNSProviderManagement | true | GA | 1.52 | 1.59 |
| DisableDNSProviderManagement | | Removed | 1.60 | |
| SecretBindingProviderValidation | false | Alpha | 1.38 | 1.50 |
| SecretBindingProviderValidation | true | Beta | 1.51 | 1.52 |
| SecretBindingProviderValidation | true | GA | 1.53 | 1.54 |
| SecretBindingProviderValidation | | Removed | 1.55 | |
| SeedKubeScheduler | false | Alpha | 1.15 | 1.54 |
| SeedKubeScheduler | false | Deprecated | 1.55 | 1.60 |
| SeedKubeScheduler | | Removed | 1.61 | |
| ShootCARotation | false | Alpha | 1.42 | 1.50 |
| ShootCARotation | true | Beta | 1.51 | 1.56 |
| ShootCARotation | true | GA | 1.57 | 1.59 |
| ShootCARotation | | Removed | 1.60 | |
| ShootSARotation | false | Alpha | 1.48 | 1.50 |
| ShootSARotation | true | Beta | 1.51 | 1.56 |
| ShootSARotation | true | GA | 1.57 | 1.59 |
| ShootSARotation | | Removed | 1.60 | |
| ReversedVPN | false | Alpha | 1.22 | 1.41 |
| ReversedVPN | true | Beta | 1.42 | 1.62 |
| ReversedVPN | true | GA | 1.63 | 1.69 |
| ReversedVPN | | Removed | 1.70 | |
| ForceRestore | | Removed | 1.66 | |
| SeedChange | false | Alpha | 1.12 | 1.52 |
| SeedChange | true | Beta | 1.53 | 1.68 |
| SeedChange | true | GA | 1.69 | 1.72 |
| SeedChange | | Removed | 1.73 | |
| CopyEtcdBackupsDuringControlPlaneMigration | false | Alpha | 1.37 | 1.52 |
| CopyEtcdBackupsDuringControlPlaneMigration | true | Beta | 1.53 | 1.68 |
| CopyEtcdBackupsDuringControlPlaneMigration | true | GA | 1.69 | 1.72 |
| CopyEtcdBackupsDuringControlPlaneMigration | | Removed | 1.73 | |
| ManagedIstio | false | Alpha | 1.5 | 1.18 |
| ManagedIstio | true | Beta | 1.19 | 1.47 |
| ManagedIstio | true | Deprecated | 1.48 | 1.69 |
| ManagedIstio | | Removed | 1.70 | |
| APIServerSNI | false | Alpha | 1.7 | 1.18 |
| APIServerSNI | true | Beta | 1.19 | 1.47 |
| APIServerSNI | true | Deprecated | 1.48 | 1.72 |
| APIServerSNI | | Removed | 1.73 | |
| HAControlPlanes | false | Alpha | 1.49 | 1.70 |
| HAControlPlanes | true | Beta | 1.71 | 1.72 |
| HAControlPlanes | true | GA | 1.73 | 1.73 |
| HAControlPlanes | | Removed | 1.74 | |
| FullNetworkPoliciesInRuntimeCluster | false | Alpha | 1.66 | 1.70 |
| FullNetworkPoliciesInRuntimeCluster | true | Beta | 1.71 | 1.72 |
| FullNetworkPoliciesInRuntimeCluster | true | GA | 1.73 | 1.73 |
| FullNetworkPoliciesInRuntimeCluster | | Removed | 1.74 | |
| DisableScalingClassesForShoots | false | Alpha | 1.73 | 1.78 |
| DisableScalingClassesForShoots | true | Beta | 1.79 | 1.80 |
| DisableScalingClassesForShoots | true | GA | 1.81 | 1.81 |
| DisableScalingClassesForShoots | | Removed | 1.82 | |
| ContainerdRegistryHostsDir | false | Alpha | 1.77 | 1.85 |
| ContainerdRegistryHostsDir | true | Beta | 1.86 | 1.86 |
| ContainerdRegistryHostsDir | true | GA | 1.87 | 1.87 |
| ContainerdRegistryHostsDir | | Removed | 1.88 | |
| WorkerlessShoots | false | Alpha | 1.70 | 1.78 |
| WorkerlessShoots | true | Beta | 1.79 | 1.85 |
| WorkerlessShoots | true | GA | 1.86 | 1.87 |
| WorkerlessShoots | | Removed | 1.88 | |
| MachineControllerManagerDeployment | false | Alpha | 1.73 | 1.80 |
| MachineControllerManagerDeployment | true | Beta | 1.81 | 1.81 |
| MachineControllerManagerDeployment | true | GA | 1.82 | 1.91 |
| MachineControllerManagerDeployment | | Removed | 1.92 | |
| APIServerFastRollout | true | Beta | 1.82 | 1.89 |
| APIServerFastRollout | true | GA | 1.90 | 1.91 |
| APIServerFastRollout | | Removed | 1.92 | |
| UseGardenerNodeAgent | false | Alpha | 1.82 | 1.88 |
| UseGardenerNodeAgent | true | Beta | 1.89 | 1.89 |
| UseGardenerNodeAgent | true | GA | 1.90 | 1.91 |
| UseGardenerNodeAgent | | Removed | 1.92 | |
| CoreDNSQueryRewriting | false | Alpha | 1.55 | 1.95 |
| CoreDNSQueryRewriting | true | Beta | 1.96 | 1.96 |
| CoreDNSQueryRewriting | true | GA | 1.97 | 1.100 |
| CoreDNSQueryRewriting | | Removed | 1.101 | |
| MutableShootSpecNetworkingNodes | false | Alpha | 1.64 | 1.95 |
| MutableShootSpecNetworkingNodes | true | Beta | 1.96 | 1.96 |
| MutableShootSpecNetworkingNodes | true | GA | 1.97 | 1.100 |
| MutableShootSpecNetworkingNodes | | Removed | 1.101 | |
| VPAForETCD | false | Alpha | 1.94 | 1.96 |
| VPAForETCD | true | Beta | 1.97 | 1.104 |
| VPAForETCD | true | GA | 1.105 | 1.108 |
| VPAForETCD | | Removed | 1.109 | |
| VPAAndHPAForAPIServer | false | Alpha | 1.95 | 1.100 |
| VPAAndHPAForAPIServer | true | Beta | 1.101 | 1.104 |
| VPAAndHPAForAPIServer | true | GA | 1.105 | 1.108 |
| VPAAndHPAForAPIServer | | Removed | 1.109 | |
| HVPA | false | Alpha | 0.31 | 1.105 |
| HVPA | false | Deprecated | 1.106 | 1.108 |
| HVPA | | Removed | 1.109 | |
| HVPAForShootedSeed | false | Alpha | 0.32 | 1.105 |
| HVPAForShootedSeed | false | Deprecated | 1.106 | 1.108 |
| HVPAForShootedSeed | | Removed | 1.109 | |
| IPv6SingleStack | false | Alpha | 1.63 | 1.106 |
| IPv6SingleStack | | Removed | 1.107 | |
| ShootManagedIssuer | false | Alpha | 1.93 | 1.110 |
| ShootManagedIssuer | | Removed | 1.111 | |
| NewVPN | false | Alpha | 1.104 | 1.114 |
| NewVPN | true | Beta | 1.115 | 1.115 |
| NewVPN | true | GA | 1.116 | |
| RemoveAPIServerProxyLegacyPort | false | Alpha | 1.113 | 1.118 |
| RemoveAPIServerProxyLegacyPort | true | Beta | 1.119 | 1.121 |
| RemoveAPIServerProxyLegacyPort | true | GA | 1.122 | 1.122 |
| RemoveAPIServerProxyLegacyPort | | Removed | 1.123 | |
| NodeAgentAuthorizer | false | Alpha | 1.109 | 1.115 |
| NodeAgentAuthorizer | true | Beta | 1.116 | 1.122 |
| NodeAgentAuthorizer | true | GA | 1.123 | |

Using a Feature

A feature can be in Alpha, Beta or GA stage. An Alpha feature means:

- Disabled by default.

- Might be buggy. Enabling the feature may expose bugs.

- Support for the feature may be dropped at any time without notice.

- The API may change in incompatible ways in a later software release without notice.

- Recommended for use only in short-lived testing clusters, due to increased risk of bugs and lack of long-term support.

A Beta feature means:

- Enabled by default.

- The feature is well tested. Enabling the feature is considered safe.

- Support for the overall feature will not be dropped, though details may change.

- The schema and/or semantics of objects may change in incompatible ways in a subsequent beta or stable release. When this happens, we will provide instructions for migrating to the next version. This may require deleting, editing, and re-creating API objects. The editing process may require some thought. This may require downtime for applications that rely on the feature.

- Recommended for only non-critical uses because of potential for incompatible changes in subsequent releases.

Please do try Beta features and give feedback on them! After they exit beta, it may not be practical for us to make more changes.

A General Availability (GA) feature is also referred to as a stable feature. It means:

- The feature is always enabled; you cannot disable it.

- The corresponding feature gate is no longer needed.

- Stable versions of features will appear in released software for many subsequent versions.

List of Feature Gates

Note: All feature gates that are relevant for gardenlet are also relevant for gardenadm.

| Feature | Relevant Components | Description |
|---|---|---|
| DefaultSeccompProfile | gardenlet, gardener-operator | Enables the defaulting of the seccomp profile for Gardener managed workload in the garden or seed to RuntimeDefault. |
| UseNamespacedCloudProfile | gardener-apiserver | Enables usage of NamespacedCloudProfiles in Shoots. |
| ShootManagedIssuer | gardenlet | Enables the shoot managed issuer functionality described in GEP 24. |
| ShootCredentialsBinding | gardener-apiserver | Enables usage of CredentialsBindingName in Shoots. |
| NewWorkerPoolHash | gardenlet | Enables usage of the new worker pool hash calculation. The new calculation supports rolling worker pools if kubeReserved, systemReserved, evictionHard or cpuManagerPolicy in the kubelet configuration are changed. All provider extensions must be upgraded to support this feature first. Existing worker pools are not immediately migrated to the new hash variant, since this would trigger the replacement of all nodes. The migration happens when a rolling update is triggered according to the old or new hash version calculation. |
| NewVPN | gardenlet | Enables usage of the new implementation of the VPN (go rewrite) using an IPv6 transfer network. |
| NodeAgentAuthorizer | gardenlet, gardener-node-agent | Enables authorization of gardener-node-agent to kube-apiserver of shoot clusters using an authorization webhook. It restricts the permissions of each gardener-node-agent instance to the objects belonging to its own node only. |
| CredentialsRotationWithoutWorkersRollout | gardener-apiserver | Enables starting the credentials rotation without immediately causing a rolling update of all worker nodes. Instead, the rolling update can be triggered manually by the user at a later point in time at their convenience. This should only be enabled when all deployed provider extensions vendor at least gardener/gardener@v1.111. |
| InPlaceNodeUpdates | gardener-apiserver | Enables setting the update strategy of worker pools to AutoInPlaceUpdate or ManualInPlaceUpdate in the Shoot API. |
| IstioTLSTermination | gardenlet, gardener-operator | Enables TLS termination for the Istio Ingress Gateway instead of TLS termination at the kube-apiserver. It allows load-balancing of requests to the kube-apiserver on request level instead of connection level. |
| CloudProfileCapabilities | gardener-apiserver | Enables the usage of capabilities in the CloudProfile. Capabilities are used to create a relation between machineTypes and machineImages. This allows validating the worker groups of a shoot, ensuring that the selected image and machine combination will boot up successfully. Capabilities are also used to determine valid upgrade paths during automated maintenance operations. |
| DoNotCopyBackupCredentials | gardenlet | Disables the copying of Shoot infrastructure credentials as backup credentials when the Shoot is used as a ManagedSeed. Operators are responsible for providing the credentials for backup explicitly. Credentials that were already copied will be labeled with secret.backup.gardener.cloud/status=previously-managed and would have to be cleaned up by operators. |

8 - Getting Started Locally

Deploying Gardener Locally

This document will walk you through deploying Gardener on your local machine. If you encounter difficulties, please open an issue so that we can make this process easier.

Overview

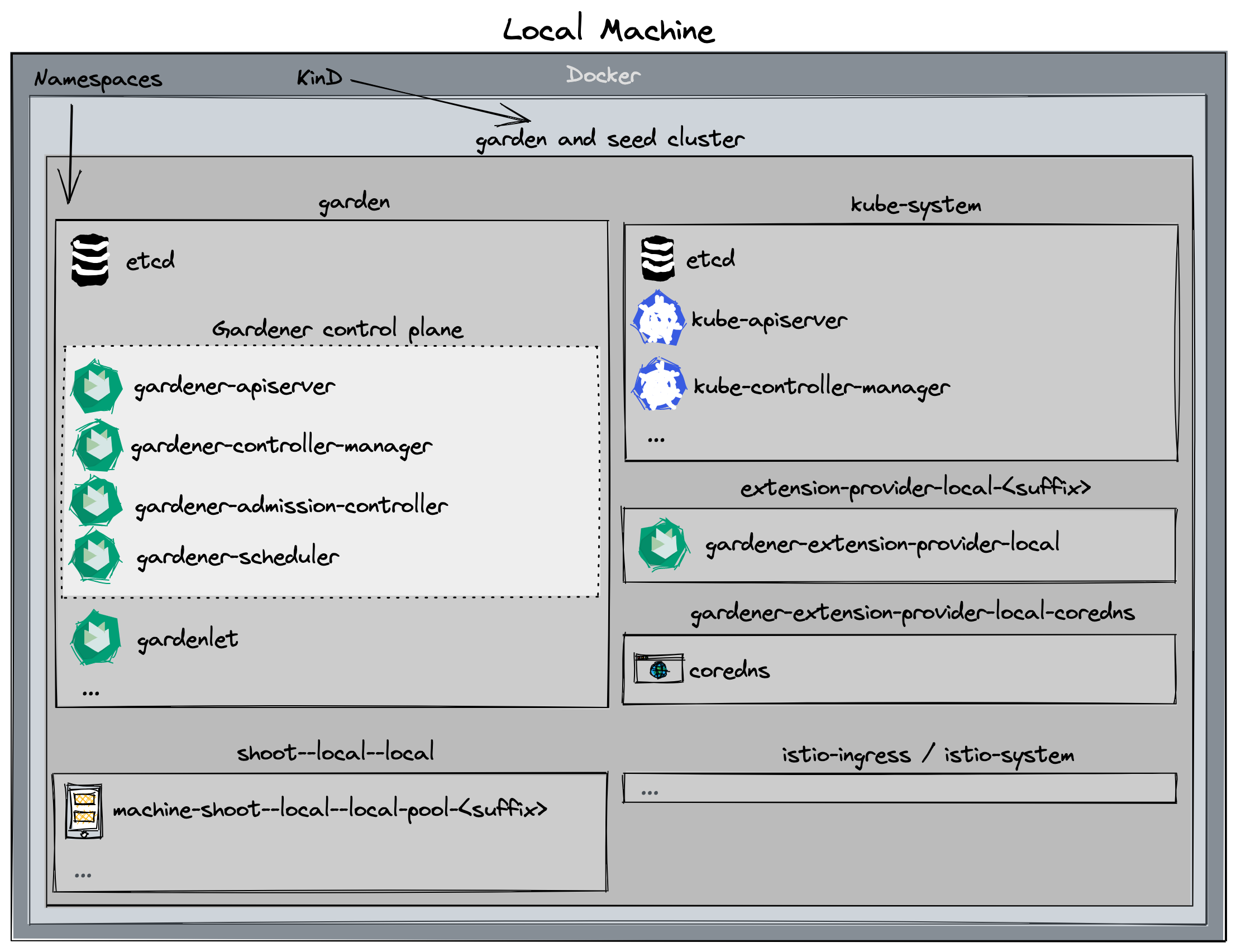

Gardener runs in any Kubernetes cluster. In this guide, we will start a KinD cluster which is used as both garden and seed cluster (please refer to the architecture overview) for simplicity.

Based on Skaffold, the container images for all required components will be built and deployed into the cluster (via their Helm charts).

Alternatives

When deploying Gardener on your local machine you might face several limitations:

- Your machine doesn’t have enough compute resources (see prerequisites) for hosting a second seed cluster or multiple shoot clusters.

- Testing Gardener’s IPv6 features requires a Linux machine and native IPv6 connectivity to the internet, but you’re on macOS or don’t have IPv6 connectivity in your office environment or via your home ISP.

In these cases, you might want to check out one of the following options that run the setup described in this guide elsewhere for circumventing these limitations:

- remote local setup: deploy on a remote pod for more compute resources

- dev box on Google Cloud: deploy on a Google Cloud machine for more compute resources and/or simple IPv4/IPv6 dual-stack networking

Prerequisites

- Make sure that you have followed the Local Setup guide up until the Get the sources step.

- Make sure your Docker daemon is up-to-date, up and running, and has enough resources (at least 8 CPUs and 8Gi memory; see here how to configure the resources for Docker for Mac).

  Please note that 8 CPUs / 8Gi memory might not be enough for more than two Shoot clusters, i.e., you might need to increase these values if you want to run additional Shoots. If you plan on following the optional steps to create a second seed cluster, the required resources will be higher: at least 10 CPUs and 18Gi memory. Additionally, please configure at least 120Gi of disk size for the Docker daemon.

  Tip: You can check disk usage with docker system df and clean up unused data with docker system prune -a.

Setting Up the KinD Cluster (Garden and Seed)

make kind-up

If you want to set up an IPv6 KinD cluster, use make kind-up IPFAMILY=ipv6 instead.

This command sets up a new KinD cluster named gardener-local and stores the kubeconfig in the ./example/gardener-local/kind/local/kubeconfig file.

It might be helpful to copy this file to $HOME/.kube/config, since you will need to target this KinD cluster multiple times. Alternatively, make sure to set your KUBECONFIG environment variable to ./example/gardener-local/kind/local/kubeconfig for all future steps via export KUBECONFIG=$PWD/example/gardener-local/kind/local/kubeconfig.

All following steps assume that you are using this kubeconfig.

Additionally, this command also deploys a local container registry to the cluster, as well as a few registry mirrors, that are set up as a pull-through cache for all upstream registries Gardener uses by default. This is done to speed up image pulls across local clusters.

You will need to add 127.0.0.1 garden.local.gardener.cloud to your /etc/hosts.

The local registry can now be accessed either via localhost:5001 or garden.local.gardener.cloud:5001 for pushing and pulling.

The storage directories of the registries are mounted to the host machine under dev/local-registry.

With this, mirrored images don’t have to be pulled again after recreating the cluster.

The command also deploys a default calico installation as the cluster’s CNI implementation with NetworkPolicy support (the default kindnet CNI doesn’t provide NetworkPolicy support).

Furthermore, it deploys the metrics-server in order to support HPA and VPA on the seed cluster.

Setting Up IPv6 Single-Stack Networking (optional)

First, ensure that your /etc/hosts file contains an entry resolving garden.local.gardener.cloud to the IPv6 loopback address:

::1 garden.local.gardener.cloud

Typically, only ip6-localhost is mapped to ::1 on Linux machines.

However, we need garden.local.gardener.cloud to resolve to both 127.0.0.1 and ::1 so that we can talk to our registry via a single address (garden.local.gardener.cloud:5001).

Next, we need to configure NAT for outgoing traffic from the kind network to the internet.

After executing make kind-up IPFAMILY=ipv6, execute the following command to set up the corresponding iptables rules:

ip6tables -t nat -A POSTROUTING -o $(ip route show default | awk '{print $5}') -s fd00:10::/64 -j MASQUERADE

Setting Up Gardener

make gardener-up

If you want to set up an IPv6-ready Gardener, use make gardener-up IPFAMILY=ipv6 instead.

This will first build the base images (which might take a bit if you do it for the first time). Afterwards, the Gardener resources will be deployed into the cluster.

Developing Gardener

make gardener-dev

This is similar to make gardener-up but additionally starts a skaffold dev loop.

After the initial deployment, skaffold starts watching source files.

Once it has detected changes, press any key to trigger a new build and deployment of the changed components.

Tip: you can set the SKAFFOLD_MODULE environment variable to select specific modules of the skaffold configuration (see skaffold.yaml) that skaffold should watch, build, and deploy.

This significantly reduces turnaround times during development.

For example, if you want to develop changes to gardenlet:

# initial deployment of all components

make gardener-up

# start iterating on gardenlet without deploying other components

make gardener-dev SKAFFOLD_MODULE=gardenlet

Debugging Gardener

make gardener-debug

This uses the skaffold debugging features. In the Gardener case, Go debugging using Delve is the most relevant use case. Please see the skaffold debugging documentation for how to set up your IDE accordingly, or check the examples below (GoLand, VS Code).

The SKAFFOLD_MODULE environment variable works the same way as described for Developing Gardener. However, skaffold does not watch for changes when debugging, to avoid interrupting your debugging session.

For example, if you want to debug gardenlet:

# initial deployment of all components

make gardener-up

# start debugging gardenlet without deploying other components

make gardener-debug SKAFFOLD_MODULE=gardenlet

In the debugging flow, skaffold builds your container images, reconfigures your pods and creates port forwardings for the Delve debugging ports to your localhost.

The default port is 56268. If you debug multiple pods at the same time, the port of the second pod will be forwarded to 56269 and so on.

Please check your console output for the concrete port-forwarding on your machine.

Note: Resuming or stopping only a single goroutine (Go Issue 25578, 31132) is currently not supported, so the action will cause all the goroutines to get activated or paused. (vscode-go wiki)

This means that when a goroutine of gardenlet (or any other gardener-core component you try to debug) is paused on a breakpoint, all the other goroutines are paused as well. Hence, when the whole gardenlet process is paused, it cannot renew its lease and cannot respond to the liveness and readiness probes. Skaffold automatically increases timeoutSeconds of liveness and readiness probes to 600. Nevertheless, we still observed pods being killed after a while during debugging.

Thus, leader election, health and readiness checks for gardener-admission-controller, gardener-apiserver, gardener-controller-manager, gardener-scheduler, gardenlet and operator are disabled when debugging.

If you have similar problems with other components which are not deployed by skaffold, you could temporarily turn off the leader election and disable liveness and readiness probes there too.

Debugging in GoLand

- Edit your Run/Debug Configurations.

- Add a new Go Remote configuration.

- Set the port to 56268 (or any increment of it when debugging multiple components).

- Recommended: Change the behavior of On disconnect to Leave it running.

Debugging in VS Code

- Create or edit your .vscode/launch.json configuration.

- Add the following configuration:

{

"name": "go remote",

"type": "go",

"request": "attach",

"mode": "remote",

"port": 56268, // or any increment of it when debugging multiple components

"host": "127.0.0.1"

}

Since the ko builder is used in Skaffold to build the images, it’s not necessary to specify the cwd and remotePath options as they match the workspace folder (ref).
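
When several components are debugged at once, one attach configuration per forwarded port is needed. The following Python sketch generates such configurations by incrementing the default port. The function name and the component names are illustrative, and the sketch assumes that ports are handed out in the order the components are listed, which may not match your machine, so compare the result with the port-forwarding lines in your console output.

```python
import json

DEFAULT_DELVE_PORT = 56268  # default port named in this guide


def attach_configurations(components: list[str]) -> list[dict]:
    """Build one VS Code attach configuration per component, incrementing the port."""
    configs = []
    for offset, component in enumerate(components):
        configs.append({
            "name": f"go remote ({component})",
            "type": "go",
            "request": "attach",
            "mode": "remote",
            # Assumption: the n-th listed component gets the n-th forwarded port.
            "port": DEFAULT_DELVE_PORT + offset,
            "host": "127.0.0.1",
        })
    return configs


launch = {
    "version": "0.2.0",
    "configurations": attach_configurations(["gardenlet", "gardener-scheduler"]),
}
print(json.dumps(launch, indent=2))
```

The printed JSON can be pasted into .vscode/launch.json after the ports have been checked.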

Creating a Shoot Cluster

You can wait for the Seed to be ready by running:

./hack/usage/wait-for.sh seed local GardenletReady SeedSystemComponentsHealthy ExtensionsReady

Alternatively, you can run kubectl get seed local and wait for the STATUS to indicate readiness:

NAME STATUS PROVIDER REGION AGE VERSION K8S VERSION

local Ready local local 4m42s vX.Y.Z-dev v1.28.1

In order to create a first shoot cluster, just run:

kubectl apply -f example/provider-local/shoot.yaml

You can wait for the Shoot to be ready by running:

NAMESPACE=garden-local ./hack/usage/wait-for.sh shoot local APIServerAvailable ControlPlaneHealthy ObservabilityComponentsHealthy EveryNodeReady SystemComponentsHealthy

Alternatively, you can run kubectl -n garden-local get shoot local and wait for the LAST OPERATION to reach 100%:

NAME CLOUDPROFILE PROVIDER REGION K8S VERSION HIBERNATION LAST OPERATION STATUS AGE

local local local local 1.28.1 Awake Create Processing (43%) healthy 94s

If you don’t need any worker pools, you can create a workerless Shoot by running:

kubectl apply -f example/provider-local/shoot-workerless.yaml

(Optional): You could also execute a simple e2e test (creating and deleting a shoot) by running:

make test-e2e-local-simple KUBECONFIG="$PWD/example/gardener-local/kind/local/kubeconfig"

Accessing the Shoot Cluster

⚠️ Please note that in this setup, shoot clusters are not accessible by default when you download the kubeconfig and try to communicate with them.

The reason is that your host most probably cannot resolve the DNS names of the clusters since the provider-local extension runs inside the KinD cluster (for more details, see DNSRecord).

Hence, if you want to access the shoot cluster, you have to run the following command which will extend your /etc/hosts file with the required information to make the DNS names resolvable:

cat <<EOF | sudo tee -a /etc/hosts

# Begin of Gardener local setup section

# Shoot API server domains

172.18.255.1 api.local.local.external.local.gardener.cloud

172.18.255.1 api.local.local.internal.local.gardener.cloud

# Ingress

172.18.255.1 p-seed.ingress.local.seed.local.gardener.cloud

172.18.255.1 g-seed.ingress.local.seed.local.gardener.cloud

172.18.255.1 gu-local--local.ingress.local.seed.local.gardener.cloud

172.18.255.1 p-local--local.ingress.local.seed.local.gardener.cloud

172.18.255.1 v-local--local.ingress.local.seed.local.gardener.cloud

# E2E tests

172.18.255.1 api.e2e-managedseed.garden.external.local.gardener.cloud

172.18.255.1 api.e2e-managedseed.garden.internal.local.gardener.cloud

172.18.255.1 api.e2e-hib.local.external.local.gardener.cloud

172.18.255.1 api.e2e-hib.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-hib-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-hib-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-unpriv.local.external.local.gardener.cloud

172.18.255.1 api.e2e-unpriv.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-wake-up.local.external.local.gardener.cloud

172.18.255.1 api.e2e-wake-up.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-wake-up-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-wake-up-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-wake-up-ncp.local.external.local.gardener.cloud

172.18.255.1 api.e2e-wake-up-ncp.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-migrate.local.external.local.gardener.cloud

172.18.255.1 api.e2e-migrate.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-migrate-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-migrate-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-mgr-hib.local.external.local.gardener.cloud

172.18.255.1 api.e2e-mgr-hib.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-rotate.local.external.local.gardener.cloud

172.18.255.1 api.e2e-rotate.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-rotate-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-rotate-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-rot-noroll.local.external.local.gardener.cloud

172.18.255.1 api.e2e-rot-noroll.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-rot-ip.local.external.local.gardener.cloud

172.18.255.1 api.e2e-rot-ip.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-rot-nr-ip.local.external.local.gardener.cloud

172.18.255.1 api.e2e-rot-nr-ip.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-default.local.external.local.gardener.cloud

172.18.255.1 api.e2e-default.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-default-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-default-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-default-ip.local.external.local.gardener.cloud

172.18.255.1 api.e2e-default-ip.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-force-delete.local.external.local.gardener.cloud

172.18.255.1 api.e2e-force-delete.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-fd-hib.local.external.local.gardener.cloud

172.18.255.1 api.e2e-fd-hib.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-upd-node.local.external.local.gardener.cloud

172.18.255.1 api.e2e-upd-node.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-upd-node-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-upd-node-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-upgrade.local.external.local.gardener.cloud

172.18.255.1 api.e2e-upgrade.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-upgrade-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-upgrade-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-upg-hib.local.external.local.gardener.cloud

172.18.255.1 api.e2e-upg-hib.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-upg-hib-wl.local.external.local.gardener.cloud

172.18.255.1 api.e2e-upg-hib-wl.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-auth-one.local.external.local.gardener.cloud

172.18.255.1 api.e2e-auth-one.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-auth-two.local.external.local.gardener.cloud

172.18.255.1 api.e2e-auth-two.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-layer4-lb.local.internal.local.gardener.cloud

172.18.255.1 api.e2e-layer4-lb.local.external.local.gardener.cloud

172.18.255.1 gu-local--e2e-rotate.ingress.local.seed.local.gardener.cloud

172.18.255.1 gu-local--e2e-rotate-wl.ingress.local.seed.local.gardener.cloud

172.18.255.1 gu-local--e2e-rot-noroll.ingress.local.seed.local.gardener.cloud

172.18.255.1 gu-local--e2e-rot-ip.ingress.local.seed.local.gardener.cloud

172.18.255.1 gu-local--e2e-rot-nr-ip.ingress.local.seed.local.gardener.cloud

# End of Gardener local setup section

EOF
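
If you create shoots with other names, similar entries are needed for them. The following Python sketch builds the two API server lines for a shoot. The naming pattern api.<shoot>.<project>.<external|internal>.local.gardener.cloud is inferred from the entries above rather than taken from a specification, and the function name is hypothetical, so treat the output as a starting point.

```python
LOAD_BALANCER_IP = "172.18.255.1"  # address used by every entry in the block above


def shoot_api_hosts(shoot: str, project: str) -> list[str]:
    """Build the external and internal API server /etc/hosts lines for one shoot."""
    return [
        # Assumed pattern, inferred from the existing entries.
        f"{LOAD_BALANCER_IP} api.{shoot}.{project}.{scope}.local.gardener.cloud"
        for scope in ("external", "internal")
    ]


for line in shoot_api_hosts("local", "local"):
    print(line)
```

For the shoot named local in the project local, this reproduces the first two entries of the block above.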

To access the Shoot, you can acquire a kubeconfig by using the shoots/adminkubeconfig subresource.

For convenience, a helper script is provided in the hack directory. By default, the script generates a kubeconfig for a Shoot named “local” in the garden-local namespace that is valid for one hour.

./hack/usage/generate-admin-kubeconf.sh > admin-kubeconf.yaml

If you want to change the default namespace or shoot name, you can do so by passing different values as arguments.

./hack/usage/generate-admin-kubeconf.sh --namespace <namespace> --shoot-name <shootname> > admin-kubeconf.yaml

To access an Ingress resource from the Seed, use the Ingress host with port 8448 (https://<ingress-host>:8448, for example https://gu-local--local.ingress.local.seed.local.gardener.cloud:8448).

(Optional): Setting Up a Second Seed Cluster

There are cases where you would want to create a second seed cluster in your local setup, for example, if you want to test the control plane migration feature. The following steps describe how to do that.

Start by setting up the second KinD cluster:

make kind2-up

This command sets up a new KinD cluster named gardener-local2 and stores its kubeconfig in the ./example/gardener-local/kind/local2/kubeconfig file.

It adds another IP address (172.18.255.2) to your loopback device which is necessary for you to reach the new cluster locally.

In order to deploy required resources in the KinD cluster that you just created, run:

make gardenlet-kind2-up

The following steps assume that you are using the kubeconfig that points to the gardener-local cluster (first KinD cluster): export KUBECONFIG=$PWD/example/gardener-local/kind/local/kubeconfig.

You can wait for the local2 Seed to be ready by running:

./hack/usage/wait-for.sh seed local2 GardenletReady SeedSystemComponentsHealthy ExtensionsReady

Alternatively, you can run kubectl get seed local2 and wait for the STATUS to indicate readiness:

NAME STATUS PROVIDER REGION AGE VERSION K8S VERSION

local2 Ready local local 4m42s vX.Y.Z-dev v1.25.1
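If you prefer to script the wait yourself, the readiness check can be approximated by parsing that table output. This is a minimal sketch and not part of the Gardener repository: the function name seed_is_ready is hypothetical, and it assumes the column layout shown above (NAME first, STATUS second).

```shell
# Hypothetical helper (not part of the Gardener repository).
# Reads `kubectl get seed` table output on stdin and succeeds only if
# the seed named in $1 is listed with STATUS "Ready".
seed_is_ready() {
  awk -v seed="$1" '
    NR > 1 && $1 == seed && $2 == "Ready" { ready = 1 }
    END { exit !ready }
  '
}

# Example polling loop (commented out because it needs a running setup):
# until kubectl get seed local2 | seed_is_ready local2; do sleep 5; done
```

The provided wait-for.sh script checks individual conditions and is the more precise option; the sketch only mirrors what you would otherwise read off the STATUS column by eye.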

If you want to perform control plane migration, you can follow the steps outlined in Control Plane Migration to migrate the shoot cluster to the second seed you just created.

Deleting the Shoot Cluster

./hack/usage/delete shoot local garden-local

(Optional): Tear Down the Second Seed Cluster

make kind2-down

On macOS, if you want to remove the additional IP address from your loopback device, run the following command:

sudo ip addr del 172.18.255.2 dev lo0

Tear Down the Gardener Environment

make kind-down

Alternative Way to Set Up Garden and Seed Leveraging gardener-operator

Instead of starting Garden and Seed via make kind-up gardener-up, you can also use gardener-operator to create your local dev landscape.

In this setup, the virtual garden cluster has its own load balancer, so you have to create a separate DNS entry for it in your /etc/hosts:

cat <<EOF | sudo tee -a /etc/hosts

# Begin of Gardener Operator local setup section

172.18.255.3 api.virtual-garden.local.gardener.cloud

172.18.255.3 plutono-garden.ingress.runtime-garden.local.gardener.cloud

# End of Gardener Operator local setup section

EOF
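Because tee -a appends unconditionally, running the command twice duplicates the entries. A minimal sketch of a guard follows. The helper name hosts_has_entry is hypothetical, and the check only looks for the host name, not for the IP address it maps to.

```shell
# Hypothetical helper: succeeds if a non-comment line of the hosts file
# in $1 lists the exact host name given in $2.
hosts_has_entry() {
  awk -v name="$2" '
    /^[[:space:]]*#/ { next }                       # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
    END { exit !found }
  ' "$1"
}

# Example (commented out because it inspects the real /etc/hosts):
# hosts_has_entry /etc/hosts api.virtual-garden.local.gardener.cloud \
#   || echo "entry missing, append the section shown above"
```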

You can bring up gardener-operator with this command:

make kind-operator-multi-zone-up operator-up

Afterwards, you can create your local Garden and install gardenlet into the KinD cluster with this command:

make operator-seed-up

You can find the kubeconfig for the KinD cluster at ./example/gardener-local/kind/operator/kubeconfig.

The one for the virtual garden is accessible at ./dev-setup/kubeconfigs/virtual-garden/kubeconfig.

Important

When you create non-HA shoot clusters (i.e., Shoots with .spec.controlPlane.highAvailability.failureTolerance != zone), then they are not exposed via 172.18.255.1 (ref). Instead, you need to find out under which Istio instance they got exposed, and put the corresponding IP address into your /etc/hosts file:

# replace <shoot-namespace> with your shoot namespace (e.g., `shoot--foo--bar`):

kubectl -n "$(kubectl -n <shoot-namespace> get gateway kube-apiserver -o jsonpath={.spec.selector.istio} | sed 's/.*--/istio-ingress--/')" get svc istio-ingressgateway -o jsonpath={.status.loadBalancer.ingress..ip}

When the shoot cluster is HA (i.e., .spec.controlPlane.highAvailability.failureTolerance == zone), then you can access it via 172.18.255.1.
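The sed expression in the command above rewrites the value of the gateway's istio selector label into the name of the namespace that hosts the matching istio-ingressgateway service. The following sketch shows that step in isolation. The label value ingressgateway--zone--0 is a sample chosen for illustration (check your own gateway for the real value), and istio_namespace_for is a hypothetical wrapper name.

```shell
# Hypothetical wrapper around the sed expression from the command above.
# Everything up to and including the last "--" is replaced by
# "istio-ingress--", so only the trailing suffix of the label survives.
istio_namespace_for() {
  printf '%s\n' "$1" | sed 's/.*--/istio-ingress--/'
}

# Sample label value (an assumption, not read from a real cluster):
istio_namespace_for 'ingressgateway--zone--0'   # prints: istio-ingress--0
```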

Similar to the section Developing Gardener, it is also possible to run a Skaffold development loop using:

make operator-seed-dev

ℹ️ Please note that in this setup Skaffold is only watching for changes in the following components:

- gardenlet

- gardenlet/chart

- gardener-resource-manager

- gardener-node-agent

Finally, please use this command to tear down your environment:

make kind-operator-multi-zone-down

This setup supports creating shoots and managed seeds the same way as explained in the previous chapters. However, the development loop has limitations and the debugging setup is not working yet.

Remote Local Setup

Just as Prow executes the KinD-based e2e tests in a K8s pod, it is possible to interactively run this KinD-based Gardener development environment, aka “local setup”, in a “remote” K8s pod.

k apply -f docs/deployment/content/remote-local-setup.yaml

k exec -it remote-local-setup-0 -- sh

tmux a

Caveats

Please refer to the TMUX documentation for working effectively inside the remote-local-setup pod.

To access Plutono, Prometheus or other components in a browser, two port forwards are needed:

The port forward from the laptop to the pod:

k port-forward remote-local-setup-0 3000

The port forward in the remote-local-setup pod to the respective component:

k port-forward -n shoot--local--local deployment/plutono 3000


9 - Getting Started Locally With Extensions

Deploying Gardener Locally and Enabling Provider-Extensions

This document will walk you through deploying Gardener on your local machine and bootstrapping your own seed clusters on an existing Kubernetes cluster. It is intended for running your local Gardener developments on real infrastructure. For running Gardener entirely locally, please check the getting started locally documentation. If you encounter difficulties, please open an issue so that we can make this process easier.

Overview

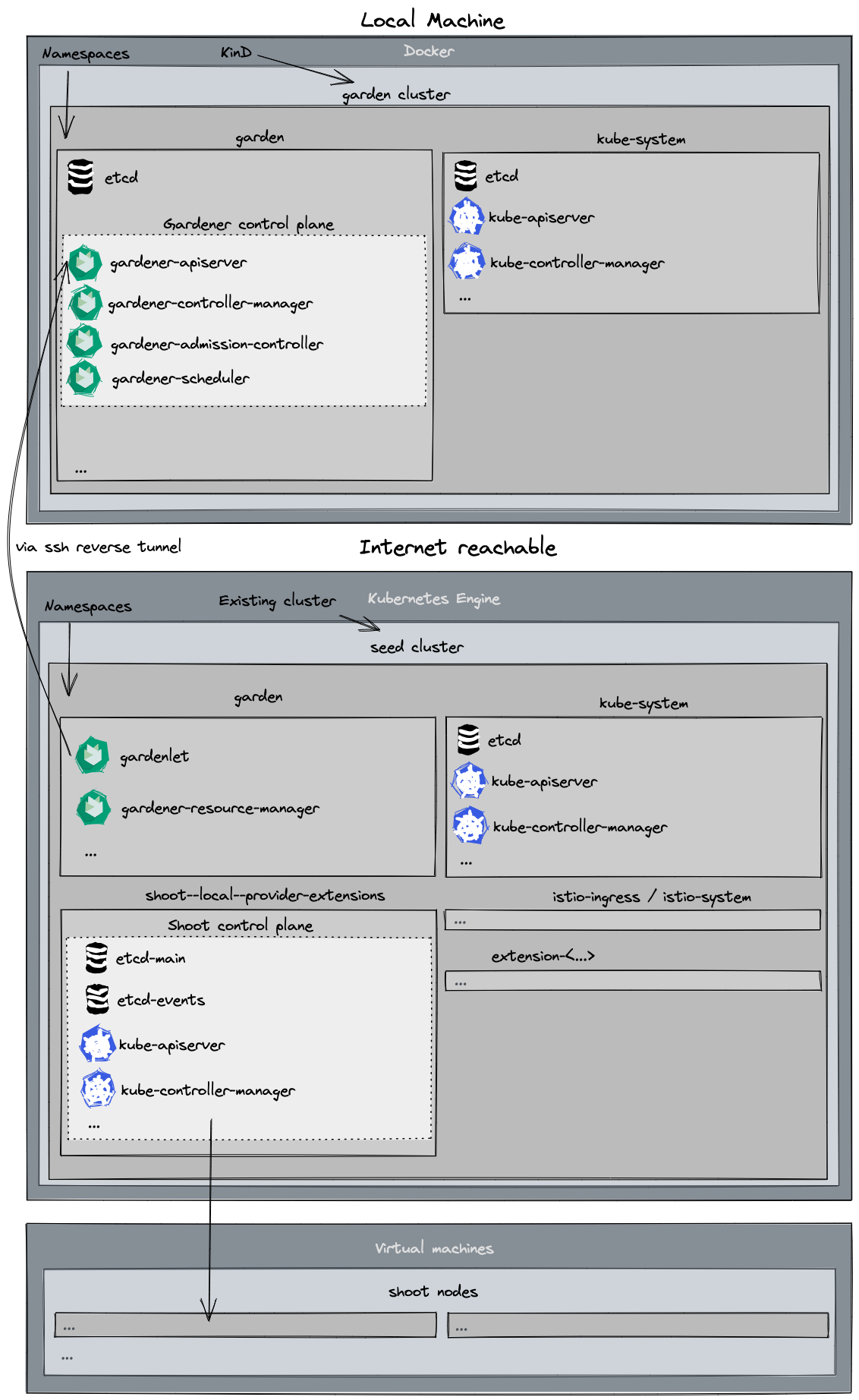

Gardener runs in any Kubernetes cluster. In this guide, we will start a KinD cluster which is used as the garden cluster. Any Kubernetes cluster can be used as a seed cluster in order to support provider extensions (please refer to the architecture overview). So far, this guide has been tested with Kubernetes clusters provided by Gardener, AWS, Azure, and GCP as seeds.

Based on Skaffold, the container images for all required components will be built and deployed into the clusters (via their Helm charts).

Prerequisites

- Make sure that you have followed the Local Setup guide up until the Get the sources step.

- Make sure your Docker daemon is up-to-date, up and running and has enough resources (at least 8 CPUs and 8Gi memory; see the Docker documentation for how to configure the resources for Docker for Mac).

  Additionally, please configure at least 120Gi of disk size for the Docker daemon.

  Tip: You can clean up unused data with docker system df and docker system prune -a.

- Make sure that you have access to a Kubernetes cluster you can use as a seed cluster in this setup.

  - The seed cluster requires at least 16 CPUs in total to run one shoot cluster.

  - You could use any Kubernetes cluster for your seed cluster. However, using a Gardener shoot cluster for your seed simplifies some configuration steps.

  - When bootstrapping gardenlet to the cluster, your new seed will have the same provider type as the shoot cluster you use - an AWS shoot will become an AWS seed, a GCP shoot will become a GCP seed, etc. (only relevant when using a Gardener shoot as seed).
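The Docker resource requirement can be checked from the command line. The sketch below is an illustration under stated assumptions: docker_resources_ok is a hypothetical helper, the thresholds are the ones named in the list above, and the inputs are expected to come from docker info (NCPU and MemTotal, the latter in bytes). Docker may report slightly less memory than you configured, so treat a narrow miss as a hint rather than a hard failure.

```shell
# Thresholds from the prerequisites: 8 CPUs and 8Gi of memory.
MIN_CPUS=8
MIN_MEM_BYTES=$((8 * 1024 * 1024 * 1024))

# Hypothetical helper: $1 = CPU count, $2 = memory in bytes.
# Succeeds only if both values meet the thresholds above.
docker_resources_ok() {
  [ "$1" -ge "$MIN_CPUS" ] && [ "$2" -ge "$MIN_MEM_BYTES" ]
}

# Example (commented out because it needs a running Docker daemon):
# docker_resources_ok "$(docker info --format '{{.NCPU}}')" \
#                     "$(docker info --format '{{.MemTotal}}')" \
#   || echo "Docker has fewer resources than recommended"
```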

Provide Infrastructure Credentials and Configuration

As this setup is running on a real infrastructure, you have to provide credentials for DNS, the infrastructure, and the kubeconfig for the Kubernetes cluster you want to use as seed.

There are .gitignore entries for all files and directories which include credentials. Nevertheless, please double check and make sure that credentials are not committed to the version control system.

DNS

The Gardener control plane requires DNS for default and internal domains. Thus, you have to configure a valid DNS provider for your setup.

Please maintain your DNS provider configuration and credentials at ./example/provider-extensions/garden/controlplane/domain-secrets.yaml.

You can find a template for the file at ./example/provider-extensions/garden/controlplane/domain-secrets.yaml.tmpl.

Infrastructure

Infrastructure secrets and the corresponding secret bindings should be maintained at:

- ./example/provider-extensions/garden/project/credentials/infrastructure-secrets.yaml

- ./example/provider-extensions/garden/project/credentials/secretbindings.yaml

There are templates with .tmpl suffixes for the files in the same folder.

Projects

The projects and the namespaces associated with them should be maintained at ./example/provider-extensions/garden/project/project.yaml.

You can find a template for the file at ./example/provider-extensions/garden/project/project.yaml.tmpl.
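Since the DNS, infrastructure, and project files all come with .tmpl templates, a starting point can be created by copying each template once and then editing the copy. The following is a sketch only: copy_from_template is a hypothetical helper, not a script from the repository, and it deliberately never overwrites a file you have already edited.

```shell
# Hypothetical helper: create $1 from "$1.tmpl" unless $1 already exists.
copy_from_template() {
  if [ -e "$1" ]; then
    echo "keeping existing $1"
  elif [ -e "$1.tmpl" ]; then
    cp "$1.tmpl" "$1" && echo "created $1"
  else
    echo "no template found for $1" >&2
    return 1
  fi
}

# Example (commented out; run from the repository root):
# copy_from_template ./example/provider-extensions/garden/project/project.yaml
```

The copied files still contain placeholder values, so they need to be filled in with your own credentials and settings before use.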

Seed Cluster Preparation

The kubeconfig of the Kubernetes cluster you would like to use as seed should be placed at ./example/provider-extensions/seed/kubeconfig.

Additionally, please maintain the configuration of your seed in ./example/provider-extensions/gardenlet/values.yaml. It is automatically copied from values.yaml.tmpl in the same directory when you run make gardener-extensions-up for the first time. It also includes explanations of the properties you should set.

Using a Gardener Shoot cluster as seed simplifies the process, because some configuration options can be taken from shoot-info and creating DNS entries and TLS certificates is automated.

However, you can also use other Kubernetes clusters for your seed and configure these things manually. Please configure the options of ./example/provider-extensions/gardenlet/values.yaml upfront. For configuring DNS and TLS certificates, make gardener-extensions-up, which is explained later, will pause and tell you what to do.

External Controllers

You might plan to deploy and register external controllers for networking, operating system, providers, etc. Please put ControllerDeployments and ControllerRegistrations into the ./example/provider-extensions/garden/controllerregistrations directory. The whole content of this folder will be applied to your KinD cluster.

CloudProfiles

There are no demo CloudProfiles yet. Thus, please copy CloudProfiles from another landscape to the ./example/provider-extensions/garden/cloudprofiles directory or create your own CloudProfiles based on the gardener examples. Please check the GitHub repository of your desired provider-extension. Most of them include example CloudProfiles. All files you place in this folder will be applied to your KinD cluster.

Setting Up the KinD Cluster

make kind-extensions-up

This command sets up a new KinD cluster named gardener-extensions and stores the kubeconfig in the ./example/gardener-local/kind/extensions/kubeconfig file.

It might be helpful to copy this file to