Extensions

Extensibility Overview

Initially, everything was developed in-tree in the Gardener project. All cloud providers and the configuration for all the supported operating systems were released together with the Gardener core itself.

But as the project grew, it got more and more difficult to add new providers and maintain the existing code base.

As a consequence and in order to become agile and flexible again, we proposed GEP-1 (Gardener Enhancement Proposal) and later gardener/gardener#9635 as an enhancement.

The document describes an out-of-tree extension architecture that keeps the Gardener core logic independent of provider-specific knowledge (similar to what Kubernetes has achieved with out-of-tree cloud providers or with CSI volume plugins).

Basic Concepts

Gardener components run in the garden and seed clusters, implementing the core logic for garden, seed, and shoot cluster reconciliation and deletion.

Extensions are Kubernetes controllers themselves (like Gardener) and run in the garden runtime and seed clusters.

As usual, we try to use Kubernetes wherever applicable.

We rely on Kubernetes extension concepts in order to enable extensibility for Gardener.

Building Blocks

Extensions consist of the following building blocks:

- A Helm chart as the vehicle to generally deploy extension controllers to Kubernetes clusters

- Extension controllers that reconcile objects of the API group extensions.gardener.cloud. These controllers take over outsourced tasks, like creating the shoot infrastructure or deploying components to the control-plane. Optionally, extensions can bring their own webhooks to mutate resources deployed by Gardener.

- Optionally, a Helm chart with an admission component inside. The admission controller runs in the garden runtime cluster and validates extension-specific settings of the Shoot (given in providerConfig fields). See admission for more details.

Registration

Before an extension can be used, it needs to be made known to the system. The gardener-operator automates much of the registration process, making Extension resources (group operator.gardener.cloud) the preferred method for registering extensions. For more information, see the Registration documentation.

Practically, many extensions provide basic example manifests to start with the registration in their example directory (example1, example2).

Kinds and Types

Extensions are defined by their Kinds (defined by Gardener - see resources) and Types.

For example, the following is an extension resource of Kind Infrastructure and Type local, which means we need a Gardener extension local that reconciles Infrastructure resources.

apiVersion: extensions.gardener.cloud/v1alpha1
kind: Infrastructure
metadata:
  name: infrastructure
  namespace: shoot--core--aws-01
spec:
  type: local
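The Kind/Type pairing can be sketched in a few lines of Go. This is a minimal illustration only, not Gardener's actual dispatch code: the ExtensionResource struct and the Responsible function are hypothetical names introduced here, and a real controller would typically express the same check as a watch predicate.

```go
package main

import "fmt"

// ExtensionResource is a hypothetical, trimmed-down view of an object in the
// extensions.gardener.cloud API group: only the kind and .spec.type matter here.
type ExtensionResource struct {
	Kind string
	Type string
}

// Responsible reports whether a controller that handles the given kind and
// type should reconcile the resource. Both values have to match.
func Responsible(res ExtensionResource, kind, extensionType string) bool {
	return res.Kind == kind && res.Type == extensionType
}

func main() {
	infra := ExtensionResource{Kind: "Infrastructure", Type: "local"}

	// The "local" extension picks the resource up, an "aws" extension ignores it.
	fmt.Println(Responsible(infra, "Infrastructure", "local"))
	fmt.Println(Responsible(infra, "Infrastructure", "aws"))
}
```

Several extensions can therefore watch the same Kind in the same cluster without interfering, as long as each one only acts on its own Type.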

Classes

A Gardener landscape consists of various cluster types which extension controllers may consider during reconciliation.

The .spec.class field identifies the different deployment cases.

Garden

Extension controllers serve the garden (run in garden runtime), e.g., installing certificates for API and ingress endpoints.

In the course of the Garden reconciliation, the gardener-operator creates BackupBucket, DNSRecord and Extension resources (group extensions.gardener.cloud) which triggers the responsible extension controllers to reconcile them.

Seed

Extension controllers serve the seed (run in seed), e.g., requesting a wildcard certificate for the seed’s ingress domain.

In the course of the Seed reconciliation, the gardenlet creates DNSRecord and Extension resources (group extensions.gardener.cloud) which triggers the responsible extension controllers to reconcile them.

Shoot

Extension controllers serve the shoot (run in seed), e.g., deploying a certificate controller into the control-plane namespace.

In the course of the Shoot reconciliation, the gardenlet creates various extension resources (group extensions.gardener.cloud) which triggers the responsible extension controllers to reconcile them.

gardenlet Reconciliation Walkthrough

Resources of group extensions.gardener.cloud are always created by Gardener itself, either in the garden runtime or in the seed cluster.

To get a better understanding of how the concept works, we will walk through the reconciliation process of a Shoot resource in the seed cluster.

During the shoot reconciliation process, Gardener will write CRDs into the seed cluster that are watched and managed by the extension controllers. They will reconcile (based on the .spec) and report whether everything went well or errors occurred in the CRD’s .status field.

Gardener keeps deploying the provider-independent control plane components (etcd, kube-apiserver, etc.). However, some of these components might still need a little customization by providers, e.g., additional configuration, flags, etc. In this case, the extension controllers register webhooks in order to manipulate the manifests.

Example 1:

Gardener creates a new AWS shoot cluster and requires the preparation of infrastructure in order to proceed (networks, security groups, etc.).

It writes the following CRD into the seed cluster:

apiVersion: extensions.gardener.cloud/v1alpha1
kind: Infrastructure
metadata:
  name: infrastructure
  namespace: shoot--core--aws-01
spec:
  type: aws
  providerConfig:
    apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1
    kind: InfrastructureConfig
    networks:
      vpc:
        cidr: 10.250.0.0/16
      internal:
      - 10.250.112.0/22
      public:
      - 10.250.96.0/22
      workers:
      - 10.250.0.0/19
    zones:
    - eu-west-1a
  dns:
    apiserver: api.aws-01.core.example.com
  region: eu-west-1
  secretRef:
    name: my-aws-credentials
  sshPublicKey: |
    base64(key)

Please note that the .spec.providerConfig is a raw blob and not evaluated or known in any way by Gardener.

Instead, it was specified by the user (in the Shoot resource) and just “forwarded” to the extension controller.

Only the AWS controller understands this configuration and will now start provisioning/reconciling the infrastructure.

It reports in the .status field the result:

status:
  observedGeneration: ...
  state: ...
  lastError: ...
  lastOperation: ...
  providerStatus:
    apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1
    kind: InfrastructureStatus
    vpc:
      id: vpc-1234
      subnets:
      - id: subnet-acbd1234
        name: workers
        zone: eu-west-1
      securityGroups:
      - id: sg-xyz12345
        name: workers
    iam:
      nodesRoleARN: <some-arn>
      instanceProfileName: foo
    ec2:
      keyName: bar

Gardener waits until the .status.lastOperation / .status.lastError indicates that the operation reached a final state and either continues with the next step, or stops and reports the potential error.

The extension-specific output in .status.providerStatus is - similar to .spec.providerConfig - not evaluated, and simply forwarded to CRDs in subsequent steps.
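The waiting step can be pictured as a small status check that is polled until it reports a final state. The following Go sketch is an assumption-laden illustration, not Gardener's implementation: the Status, LastOperation, and LastError types are simplified stand-ins, and the state names "Succeeded", "Error", and "Failed" are assumed here for the purpose of the example.

```go
package main

import (
	"errors"
	"fmt"
)

// Simplified stand-ins for the status fields shown above (hypothetical types).
type LastOperation struct{ State string }
type LastError struct{ Description string }

type Status struct {
	ObservedGeneration int64
	LastOperation      *LastOperation
	LastError          *LastError
}

// CheckStatus reports whether the extension reached a final state for the
// given object generation and, if that state is a failure, returns the error.
func CheckStatus(generation int64, s Status) (done bool, err error) {
	// The extension has not looked at the latest spec yet: keep waiting.
	if s.ObservedGeneration != generation || s.LastOperation == nil {
		return false, nil
	}
	switch s.LastOperation.State {
	case "Succeeded": // assumed name of the success state
		return true, nil
	case "Error", "Failed": // assumed names of the failure states
		msg := "extension reported an error without details"
		if s.LastError != nil {
			msg = s.LastError.Description
		}
		return true, errors.New(msg)
	default:
		// Still in progress.
		return false, nil
	}
}

func main() {
	s := Status{ObservedGeneration: 2, LastOperation: &LastOperation{State: "Succeeded"}}
	done, err := CheckStatus(2, s)
	fmt.Println(done, err)
}
```

Comparing observedGeneration with the object's generation matters in such a check, because a status left over from an earlier reconciliation must not be mistaken for the result of the current one.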

Example 2:

Gardener deploys the control plane components into the seed cluster, e.g., the kube-controller-manager deployment with the following flags:

apiVersion: apps/v1
kind: Deployment
...
spec:
  template:
    spec:
      containers:
      - command:
        - /usr/local/bin/kube-controller-manager
        - --allocate-node-cidrs=true
        - --attach-detach-reconcile-sync-period=1m0s
        - --controllers=*,bootstrapsigner,tokencleaner
        - --cluster-cidr=100.96.0.0/11
        - --cluster-name=shoot--core--aws-01
        - --cluster-signing-cert-file=/srv/kubernetes/ca/ca.crt
        - --cluster-signing-key-file=/srv/kubernetes/ca/ca.key
        - --concurrent-deployment-syncs=10
        - --concurrent-replicaset-syncs=10
        ...

The AWS controller requires some additional flags in order to make the cluster functional.

It needs to provide a Kubernetes cloud-config and also some cloud-specific flags.

Consequently, it registers a MutatingWebhookConfiguration on Deployments and adds these flags to the container:

- --cloud-provider=external

- --external-cloud-volume-plugin=aws

- --cloud-config=/etc/kubernetes/cloudprovider/cloudprovider.conf

Of course, it would have needed to create a ConfigMap containing the cloud config and to add the proper volume and volumeMounts to the manifest as well.

(Please note for this special example: The Kubernetes community is also working on making the kube-controller-manager provider-independent.

However, there will most probably be still components other than the kube-controller-manager which need to be adapted by extensions.)
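The core of such a mutation is small: it sets a flag on the container command and must be safe to run repeatedly, because webhooks are invoked on every create and update. The Go sketch below shows only that idea on a plain string slice. EnsureFlag is a hypothetical helper written for this illustration and is not claimed to be part of any Gardener library.

```go
package main

import (
	"fmt"
	"strings"
)

// EnsureFlag sets flag (for example "--cloud-provider") to value in the given
// command line. An existing occurrence is overwritten in place, otherwise the
// flag is appended, so calling it twice does not duplicate the flag.
func EnsureFlag(command []string, flag, value string) []string {
	prefix := flag + "="
	for i, arg := range command {
		if strings.HasPrefix(arg, prefix) {
			command[i] = prefix + value
			return command
		}
	}
	return append(command, prefix+value)
}

func main() {
	cmd := []string{"/usr/local/bin/kube-controller-manager", "--allocate-node-cidrs=true"}

	cmd = EnsureFlag(cmd, "--cloud-provider", "external")
	cmd = EnsureFlag(cmd, "--cloud-provider", "external") // second call changes nothing

	fmt.Println(strings.Join(cmd, " "))
}
```

In a real webhook, the same logic would be applied to the command field of the targeted container in the decoded Deployment before the patched object is returned.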

If you are interested in writing an extension, or generally in digging deeper to find out the nitty-gritty details of the extension concepts, please read GEP-1.

We are truly looking forward to your feedback!

Known Extensions

We track all extensions of Gardener in the known Gardener Extensions List repo.

1 - Access to the Garden Cluster for Extensions


Gardener offers different means to provide or equip registered extensions with a kubeconfig which may be used to connect to the garden cluster.

Admission Controllers

For extensions with an admission controller deployment, gardener-operator injects a token-based kubeconfig as a volume and volume mount.

The token is valid for 12h, automatically renewed, and associated with a dedicated ServiceAccount in the garden cluster.

The path to this kubeconfig is revealed under the GARDEN_KUBECONFIG environment variable, also added to the pod spec(s).

Extensions on Seed Clusters

Extensions that are installed on seed clusters via a ControllerInstallation can request gardenlet to inject a kubeconfig and a token for the garden cluster.

In order to do so, injectGardenKubeconfig must be set to true in the referenced ControllerDeployment.

If injection should still be disabled for an individual workload resource (Deployment, StatefulSet, etc.), that resource must be labeled with extensions.gardener.cloud/inject-garden-kubeconfig=false.

When enabled, extensions can then simply read the kubeconfig file specified by the GARDEN_KUBECONFIG environment variable to create a garden cluster client.

With this, they use a short-lived token (valid for 12h) associated with a dedicated ServiceAccount in the seed-<seed-name> namespace to securely access the garden cluster.

The used ServiceAccounts are granted permissions in the garden cluster similar to gardenlet clients.

Background

Historically, gardenlet has been the only component running in the seed cluster that has access to both the seed cluster and the garden cluster.

Accordingly, extensions running on the seed cluster didn’t have access to the garden cluster.

Starting from Gardener v1.74.0, there is a new mechanism for components running on seed clusters to get access to the garden cluster.

For this, gardenlet runs an instance of the TokenRequestor for requesting tokens that can be used to communicate with the garden cluster.

Using Gardenlet-Managed Garden Access

By default, extensions are equipped with secure access to the garden cluster using a dedicated ServiceAccount without requiring any additional action.

They can simply read the file specified by the GARDEN_KUBECONFIG environment variable and construct a garden client with it.

When installing a ControllerInstallation, gardenlet creates two secrets in the installation’s namespace: a generic garden kubeconfig (generic-garden-kubeconfig-<hash>) and a garden access secret (garden-access-extension).

Note that the ServiceAccount created based on this access secret will be created in the respective seed-* namespace in the garden cluster and labelled with controllerregistration.core.gardener.cloud/name=<name>.

Additionally, gardenlet injects volume, volumeMounts, and two environment variables into all (init) containers in all objects in the apps and batch API groups:

- GARDEN_KUBECONFIG: points to the path where the generic garden kubeconfig is mounted.

- SEED_NAME: set to the name of the Seed where the extension is installed. This is useful for restricting watches in the garden cluster to relevant objects.

If an object already contains the GARDEN_KUBECONFIG environment variable, it is not overwritten and injection of volume and volumeMounts is skipped.

For example, a Deployment deployed via a ControllerInstallation will be mutated as follows:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gardener-extension-provider-local
  annotations:
    reference.resources.gardener.cloud/secret-795f7ca6: garden-access-extension
    reference.resources.gardener.cloud/secret-d5f5a834: generic-garden-kubeconfig-81fb3a88
spec:
  template:
    metadata:
      annotations:
        reference.resources.gardener.cloud/secret-795f7ca6: garden-access-extension
        reference.resources.gardener.cloud/secret-d5f5a834: generic-garden-kubeconfig-81fb3a88
    spec:
      containers:
      - name: gardener-extension-provider-local
        env:
        - name: GARDEN_KUBECONFIG
          value: /var/run/secrets/gardener.cloud/garden/generic-kubeconfig/kubeconfig
        - name: SEED_NAME
          value: local
        volumeMounts:
        - mountPath: /var/run/secrets/gardener.cloud/garden/generic-kubeconfig
          name: garden-kubeconfig
          readOnly: true
      volumes:
      - name: garden-kubeconfig
        projected:
          defaultMode: 420
          sources:
          - secret:
              items:
              - key: kubeconfig
                path: kubeconfig
              name: generic-garden-kubeconfig-81fb3a88
              optional: false
          - secret:
              items:
              - key: token
                path: token
              name: garden-access-extension
              optional: false

The generic garden kubeconfig will look like this:

apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority-data: LS0t...
    server: https://garden.local.gardener.cloud:6443
  name: garden
contexts:
- context:
    cluster: garden
    user: extension
  name: garden
current-context: garden
users:
- name: extension
  user:
    tokenFile: /var/run/secrets/gardener.cloud/garden/generic-kubeconfig/token
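On the extension side, consuming the injected access starts with resolving the GARDEN_KUBECONFIG environment variable. The Go sketch below uses only the standard library and stops at reading the file. GardenKubeconfigPath is a hypothetical helper for this illustration, and how the path is turned into a client afterwards depends on the client library the extension uses.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// GardenKubeconfigPath returns the kubeconfig path announced via the
// GARDEN_KUBECONFIG environment variable. The lookup function is passed in
// (normally os.Getenv) so that the helper can be exercised without touching
// the process environment.
func GardenKubeconfigPath(getenv func(string) string) (string, error) {
	path := getenv("GARDEN_KUBECONFIG")
	if path == "" {
		return "", errors.New("GARDEN_KUBECONFIG is not set, garden access was not injected")
	}
	return path, nil
}

func main() {
	path, err := GardenKubeconfigPath(os.Getenv)
	if err != nil {
		fmt.Println("no garden access:", err)
		return
	}

	// The kubeconfig references the token via tokenFile, so only the
	// kubeconfig itself has to be loaded here.
	data, err := os.ReadFile(path)
	if err != nil {
		fmt.Println("cannot read garden kubeconfig:", err)
		return
	}
	fmt.Printf("read %d bytes from %s\n", len(data), path)
}
```

Because the kubeconfig points to the token through tokenFile rather than embedding it, a client that re-reads that file can pick up renewed tokens without the extension having to reload the kubeconfig.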

Manually Requesting a Token for the Garden Cluster

Seed components that need to communicate with the garden cluster can request a token in the garden cluster by creating a garden access secret.

This secret has to be labelled with resources.gardener.cloud/purpose=token-requestor and resources.gardener.cloud/class=garden, e.g.:

apiVersion: v1
kind: Secret
metadata:
  name: garden-access-example
  namespace: example
  labels:
    resources.gardener.cloud/purpose: token-requestor
    resources.gardener.cloud/class: garden
  annotations:
    serviceaccount.resources.gardener.cloud/name: example
type: Opaque

This will instruct gardenlet to create a new ServiceAccount named example in its own seed-<seed-name> namespace in the garden cluster, request a token for it, and populate the token in the secret’s data under the token key.

Permissions in the Garden Cluster

Both the SeedAuthorizer and the SeedRestriction plugin handle extensions clients and generally grant the same permissions in the garden cluster to them as to gardenlet clients.

With this, extensions are restricted to work with objects in the garden cluster that are related to the seed they are running on, just like gardenlet.

Note that if the plugins are not enabled, extension clients are only granted read access to global resources like CloudProfiles (this is granted to all authenticated users).

There are a few exceptions to the granted permissions as documented here.

Additional Permissions

If an extension needs access to additional resources in the garden cluster (e.g., extension-specific custom resources), permissions need to be granted via the usual RBAC means.

Let’s consider the following example: An extension requires the privileges to create authorization.k8s.io/v1.SubjectAccessReviews (which is not covered by the “default” permissions mentioned above).

This requires a human Gardener operator to create a ClusterRole in the garden cluster with the needed rules:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: extension-create-subjectaccessreviews
  annotations:
    authorization.gardener.cloud/extensions-serviceaccount-selector: '{"matchLabels":{"controllerregistration.core.gardener.cloud/name":"<extension-name>"}}'
  labels:
    authorization.gardener.cloud/custom-extensions-permissions: "true"
rules:
- apiGroups:
  - authorization.k8s.io
  resources:
  - subjectaccessreviews
  verbs:
  - create

Note the annotation authorization.gardener.cloud/extensions-serviceaccount-selector, which contains a label selector for ServiceAccounts.

A controller that is part of gardener-controller-manager takes care of maintaining the respective ClusterRoleBinding resources.

It binds all ServiceAccounts in the seed namespaces in the garden cluster (i.e., all extension clients) whose labels match.

You can read more about this controller here.
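How the selector annotation decides which ServiceAccounts get bound can be sketched with the standard library alone. This is an illustration under simplifying assumptions, not the controller's code: the LabelSelector type below only models matchLabels (a full Kubernetes label selector also supports matchExpressions), and SelectorMatches is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// LabelSelector models only the matchLabels part of a Kubernetes label
// selector, which is all the example annotation above uses.
type LabelSelector struct {
	MatchLabels map[string]string `json:"matchLabels"`
}

// SelectorMatches parses the JSON value of the selector annotation and
// reports whether all of its matchLabels are present on the given labels.
func SelectorMatches(annotation string, labels map[string]string) (bool, error) {
	var selector LabelSelector
	if err := json.Unmarshal([]byte(annotation), &selector); err != nil {
		return false, fmt.Errorf("invalid selector annotation: %w", err)
	}
	for key, want := range selector.MatchLabels {
		if got, ok := labels[key]; !ok || got != want {
			return false, nil
		}
	}
	return true, nil
}

func main() {
	annotation := `{"matchLabels":{"controllerregistration.core.gardener.cloud/name":"provider-foo"}}`
	serviceAccountLabels := map[string]string{
		"controllerregistration.core.gardener.cloud/name": "provider-foo",
	}

	matches, err := SelectorMatches(annotation, serviceAccountLabels)
	fmt.Println(matches, err)
}
```

Since the annotation value is JSON inside a YAML string, a missing quote or brace makes the whole selector unparsable, which is worth checking before applying the ClusterRole.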

Custom Permissions

If an extension wants to create a dedicated ServiceAccount for accessing the garden cluster without automatically inheriting all permissions of the gardenlet, it first needs to create a garden access secret in its extension namespace in the seed cluster:

apiVersion: v1
kind: Secret
metadata:
  name: my-custom-component
  namespace: <extension-namespace>
  labels:
    resources.gardener.cloud/purpose: token-requestor
    resources.gardener.cloud/class: garden
  annotations:
    serviceaccount.resources.gardener.cloud/name: my-custom-component-extension-foo
    serviceaccount.resources.gardener.cloud/labels: '{"foo":"bar"}'
type: Opaque

❗️️Do not prefix the service account name with extension- to prevent inheriting the gardenlet permissions! It is still recommended to add the extension name (e.g., as a suffix) for easier identification where this ServiceAccount comes from.

Next, you can follow the same approach described above.

However, the authorization.gardener.cloud/extensions-serviceaccount-selector annotation should not contain controllerregistration.core.gardener.cloud/name=<extension-name> but rather custom labels, e.g. foo=bar.

This way, the created ServiceAccount will only get the permissions of above ClusterRole and nothing else.

Renewing All Garden Access Secrets

Operators can trigger an automatic renewal of all garden access secrets in a given Seed and their requested ServiceAccount tokens, e.g., when rotating the garden cluster’s ServiceAccount signing key.

For this, the Seed has to be annotated with gardener.cloud/operation=renew-garden-access-secrets.

2 - CA Rotation

CA Rotation in Extensions

GEP-18 proposes adding support for automated rotation of Shoot cluster certificate authorities (CAs).

This document outlines all the requirements that Gardener extensions need to fulfill in order to support the CA rotation feature.

Requirements for Shoot Cluster CA Rotation

- Extensions must not rely on static CA Secret names managed by the gardenlet, because their names are changing during CA rotation.

- Extensions cannot issue or use client certificates for authenticating against shoot API servers. Instead, they should use short-lived auto-rotated ServiceAccount tokens via gardener-resource-manager’s TokenRequestor. Also see Conventions and TokenRequestor documents.

- Extensions need to generate dedicated CAs for signing server certificates (e.g. cloud-controller-manager). There should be one CA per controller and purpose in order to bind the lifecycle to the reconciliation cycle of the respective object for which it is created.

- CAs managed by extensions should be rotated in lock-step with the shoot cluster CA. When the user triggers a rotation, the gardenlet writes phase and initiation time to Shoot.status.credentials.rotation.certificateAuthorities.{phase,lastInitiationTime}. See GEP-18 for a detailed description on what needs to happen in each phase. Extensions can retrieve this information from Cluster.shoot.status.

Utilities for Secrets Management

In order to fulfill the requirements listed above, extension controllers can reuse the SecretsManager that the gardenlet uses to manage all shoot cluster CAs, certificates, and other secrets as well.

It implements the core logic for managing secrets that need to be rotated, auto-renewed, etc.

Additionally, there are utilities for reusing SecretsManager in extension controllers.

They already implement the above requirements based on the Cluster resource and allow focusing on the extension controllers’ business logic.

For example, a simple SecretsManager usage in an extension controller could look like this:

const (
	// identity for SecretsManager instance in ControlPlane controller
	identity = "provider-foo-controlplane"
	// secret config name of the dedicated CA
	caControlPlaneName = "ca-provider-foo-controlplane"
)

func Reconcile() {
	var (
		cluster *extensionscontroller.Cluster
		client  client.Client

		// define wanted secrets with options
		secretConfigs = []extensionssecretsmanager.SecretConfigWithOptions{
			{
				// dedicated CA for ControlPlane controller
				Config: &secretutils.CertificateSecretConfig{
					Name:       caControlPlaneName,
					CommonName: "ca-provider-foo-controlplane",
					CertType:   secretutils.CACert,
				},
				// persist CA so that it gets restored on control plane migration
				Options: []secretsmanager.GenerateOption{secretsmanager.Persist()},
			},
			{
				// server cert for control plane component
				Config: &secretutils.CertificateSecretConfig{
					Name:       "cloud-controller-manager",
					CommonName: "cloud-controller-manager",
					DNSNames:   kutil.DNSNamesForService("cloud-controller-manager", namespace),
					CertType:   secretutils.ServerCert,
				},
				// sign with our dedicated CA
				Options: []secretsmanager.GenerateOption{secretsmanager.SignedByCA(caControlPlaneName)},
			},
		}
	)

	// initialize SecretsManager based on Cluster object
	sm, err := extensionssecretsmanager.SecretsManagerForCluster(ctx, logger.WithName("secretsmanager"), clock.RealClock{}, client, cluster, identity, secretConfigs)

	// generate all wanted secrets (first CAs, then the rest)
	secrets, err := extensionssecretsmanager.GenerateAllSecrets(ctx, sm, secretConfigs)

	// cleanup any secrets that are not needed any more (e.g. after rotation)
	err = sm.Cleanup(ctx)
}

Please pay attention to the following points:

- There should be one SecretsManager identity per controller in order to prevent conflicts between different instances. E.g., there should be different identities for the Infrastructure, Worker, and ControlPlane controllers, etc.

- All other points in Reusing the SecretsManager in Other Components.

3 - Cluster

Cluster Resource

As part of the extensibility epic, a lot of responsibility that was previously taken over by Gardener directly has now been shifted to extension controllers running in the seed clusters.

These extensions often serve a well-defined purpose (e.g., the management of DNS records, infrastructure).

We have introduced a couple of extension CRDs in the seeds whose specification is written by Gardener, and which are acted upon by the extensions.

However, the extensions sometimes require more information that is not directly part of the specification.

One example of that is the GCP infrastructure controller which needs to know the shoot’s pod and service network.

Another example is the Azure infrastructure controller which requires some information out of the CloudProfile resource.

The problem is that Gardener does not know which extension requires which information so that it can write it into their specific CRDs.

In order to deal with this problem we have introduced the Cluster extension resource.

This CRD is written into the seeds, however, it does not contain a status, so it is not expected that something acts upon it.

Instead, you can treat it like a ConfigMap which contains data that might be interesting for you.

In the context of Gardener, seeds, shoots, and extensibility, the Cluster resource contains the CloudProfile, Seed, and Shoot manifests.

Extension controllers can take whatever information they want out of it that might help completing their individual tasks.

---

apiVersion: extensions.gardener.cloud/v1alpha1
kind: Cluster
metadata:
  name: shoot--foo--bar
spec:
  cloudProfile:
    apiVersion: core.gardener.cloud/v1beta1
    kind: CloudProfile
    ...
  seed:
    apiVersion: core.gardener.cloud/v1beta1
    kind: Seed
    ...
  shoot:
    apiVersion: core.gardener.cloud/v1beta1
    kind: Shoot
    ...

The resource is written by Gardener before it starts the reconciliation flow of the shoot.

⚠️ All Gardener components use the core.gardener.cloud/v1beta1 version, i.e., the Cluster resource will contain the objects in this version.

There are some fields in the Shoot specification that might be interesting to take into account.

- .spec.hibernation.enabled={true,false}: Extension controllers might want to behave differently depending on whether the shoot is hibernated (for example, they might want to scale down their control plane components).

- .status.lastOperation.state=Failed: If Gardener sets the shoot’s last operation state to Failed, it means that Gardener won’t automatically retry to finish the reconciliation/deletion flow because an error occurred that could not be resolved within the last 24h (default). In this case, end-users are expected to manually re-trigger the reconciliation flow in case they want Gardener to try again. Extension controllers are expected to follow the same principle. This means they have to read the shoot state out of the Cluster resource.
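Reading these two fields out of the Cluster resource could look roughly like the following Go sketch. It assumes that the raw JSON of the embedded Shoot manifest is already at hand and decodes only the fields discussed above. The shootView type and the Inspect function are hypothetical names for this illustration, and a real extension would more likely decode into the full core.gardener.cloud/v1beta1 Shoot type.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// shootView declares only the parts of the Shoot manifest that the extension
// cares about; all other fields are ignored by the JSON decoder.
type shootView struct {
	Spec struct {
		Hibernation *struct {
			Enabled *bool `json:"enabled"`
		} `json:"hibernation"`
	} `json:"spec"`
	Status struct {
		LastOperation *struct {
			State string `json:"state"`
		} `json:"lastOperation"`
	} `json:"status"`
}

// Inspect reports whether the shoot is hibernated and whether its last
// operation ended in the Failed state.
func Inspect(rawShoot []byte) (hibernated, failed bool, err error) {
	var shoot shootView
	if err := json.Unmarshal(rawShoot, &shoot); err != nil {
		return false, false, err
	}
	h := shoot.Spec.Hibernation
	hibernated = h != nil && h.Enabled != nil && *h.Enabled
	op := shoot.Status.LastOperation
	failed = op != nil && op.State == "Failed"
	return hibernated, failed, nil
}

func main() {
	raw := []byte(`{"spec":{"hibernation":{"enabled":true}},"status":{"lastOperation":{"state":"Failed"}}}`)

	hibernated, failed, err := Inspect(raw)
	fmt.Println(hibernated, failed, err)
}
```

An extension controller could use the first value to scale its components down and the second to skip automatic retries until the reconciliation is re-triggered.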

Extension Resources Not Associated with a Shoot

In some cases, Gardener may create extension resources that are not associated with a shoot, but are needed to support some functionality internal to Gardener. Such resources will be created in the garden namespace of a seed cluster.

For example, if the managed ingress controller is active on the seed, Gardener will create DNSRecord resource(s) in the garden namespace of the seed cluster for the ingress DNS record.

Extension controllers that may be expected to reconcile extension resources in the garden namespace should make sure that they can tolerate the absence of a cluster resource. This means that they should not attempt to read the cluster resource in such cases, or if they do they should ignore the “not found” error.
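Tolerating a missing Cluster resource boils down to treating "not found" as a valid outcome instead of a failure. The Go sketch below shows the pattern with the standard library only. ErrNotFound and the getter function are hypothetical stand-ins for the error and the read call of whichever Kubernetes client the extension uses.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrNotFound is a stand-in for the "not found" error of a Kubernetes client.
var ErrNotFound = errors.New("cluster resource not found")

// LookupCluster calls the given getter and returns its result. A "not found"
// error is swallowed and reported as a nil cluster, so that callers can go on
// without shoot-specific data. All other errors are passed through.
func LookupCluster(get func(name string) (map[string]string, error), name string) (map[string]string, error) {
	cluster, err := get(name)
	if errors.Is(err, ErrNotFound) {
		return nil, nil
	}
	return cluster, err
}

func main() {
	// A getter that behaves like the garden namespace: no Cluster resource exists.
	missing := func(string) (map[string]string, error) { return nil, ErrNotFound }

	cluster, err := LookupCluster(missing, "garden")
	fmt.Println(cluster == nil, err)
}
```

Callers then have to handle the nil case explicitly, for example by skipping any logic that depends on the Shoot, Seed, or CloudProfile manifests.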


4 - ControlPlane Webhooks

ControlPlane Customization Webhooks

Gardener creates the Shoot controlplane in several steps of the Shoot flow. At different points of this flow, it:

- Deploys standard controlplane components such as kube-apiserver, kube-controller-manager, and kube-scheduler by creating the corresponding deployments, services, and other resources in the Shoot namespace.

- Initiates the deployment of custom controlplane components by ControlPlane controllers by creating a ControlPlane resource in the Shoot namespace.

In order to apply any provider-specific changes to the configuration provided by Gardener for the standard controlplane components, cloud extension providers can install mutating admission webhooks for the resources created by Gardener in the Shoot namespace.

What needs to be implemented to support a new cloud provider?

In order to support a new cloud provider, you should install “controlplane” mutating webhooks for any of the following resources:

- Deployment with name kube-apiserver, kube-controller-manager, or kube-scheduler

- Service with name kube-apiserver

- OperatingSystemConfig with any name, and purpose reconcile

See Contract Specification for more details on the contract that Gardener and webhooks should adhere to regarding the content of the above resources.

You can install 2 different kinds of controlplane webhooks:

- Shoot, or controlplane webhooks apply changes needed by the Shoot cloud provider, for example the --cloud-provider command line flag of kube-apiserver and kube-controller-manager. Such webhooks should only operate on Shoot namespaces labeled with shoot.gardener.cloud/provider=<provider>.

- Seed, or seedprovider webhooks apply changes needed by the Seed cloud provider, for example adapting the storage class and capacity on Etcd objects. Such webhooks should only operate on Shoot namespaces labeled with seed.gardener.cloud/provider=<provider>.

The labels shoot.gardener.cloud/provider and seed.gardener.cloud/provider are added by Gardener when it creates the Shoot namespace.

The resources mutated by the “controlplane” mutating webhooks are labeled with provider.extensions.gardener.cloud/mutated-by-controlplane-webhook: true by gardenlet. The provider extensions can add an object selector to their “controlplane” mutating webhooks to not intercept requests for unrelated objects.

Contract Specification

This section specifies the contract that Gardener and webhooks should adhere to in order to ensure smooth interoperability. Note that this contract can’t be specified formally and is therefore easy to violate, especially by Gardener. The Gardener team will nevertheless do its best to adhere to this contract in the future and to ensure via additional measures (tests, validations) that it’s not unintentionally broken. If it needs to be changed intentionally, this can only happen after proper communication has taken place to ensure that the affected provider webhooks could be adapted to work with the new version of the contract.

Note: The contract described below may not necessarily be what Gardener does currently (as of May 2019). Rather, it reflects the target state after changes for Gardener extensibility have been introduced.

kube-apiserver

To deploy kube-apiserver, Gardener shall create a deployment and a service both named kube-apiserver in the Shoot namespace. They can be mutated by webhooks to apply any provider-specific changes to the standard configuration provided by Gardener.

The pod template of the kube-apiserver deployment shall contain a container named kube-apiserver.

The command field of the kube-apiserver container shall contain the kube-apiserver command line. It shall contain a number of provider-independent flags that should be ignored by webhooks, such as:

- admission plugins (--enable-admission-plugins, --disable-admission-plugins)

- secure communications (--etcd-cafile, --etcd-certfile, --etcd-keyfile, …)

- audit log (--audit-log-*)

- ports (--secure-port)

The kube-apiserver command line shall not contain any provider-specific flags, such as:

- --cloud-provider

- --cloud-config

These flags can be added by webhooks if needed.

The kube-apiserver command line may contain a number of additional provider-independent flags. In general, webhooks should ignore these unless they are known to interfere with the desired kube-apiserver behavior for the specific provider. Among the flags to be considered are:

- --endpoint-reconciler-type

- --advertise-address

- --feature-gates

Gardener uses SNI to expose the apiserver. In this case, Gardener expects that the --endpoint-reconciler-type and --advertise-address flags of the kube-apiserver’s Deployment are not modified.

The --enable-admission-plugins flag may contain admission plugins that are not compatible with CSI plugins such as PersistentVolumeLabel. Webhooks should therefore ensure that such admission plugins are either explicitly enabled (if CSI plugins are not used) or disabled (otherwise).
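For the CSI case, a webhook could move an incompatible plugin from the enabled to the disabled list. The Go sketch below works on a plain command slice and is only one possible way to do this. DisableAdmissionPlugin and its two list helpers are hypothetical functions written for this illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// removeFromList drops item from a comma-separated list.
func removeFromList(list, item string) string {
	var kept []string
	for _, entry := range strings.Split(list, ",") {
		if entry != "" && entry != item {
			kept = append(kept, entry)
		}
	}
	return strings.Join(kept, ",")
}

// addToList appends item to a comma-separated list unless it is already there.
func addToList(list, item string) string {
	for _, entry := range strings.Split(list, ",") {
		if entry == item {
			return list
		}
	}
	if list == "" {
		return item
	}
	return list + "," + item
}

// DisableAdmissionPlugin removes plugin from --enable-admission-plugins and
// makes sure it is listed in --disable-admission-plugins, adding that flag if
// the command does not contain it yet.
func DisableAdmissionPlugin(command []string, plugin string) []string {
	const enable, disable = "--enable-admission-plugins=", "--disable-admission-plugins="
	out := make([]string, 0, len(command)+1)
	foundDisable := false
	for _, arg := range command {
		switch {
		case strings.HasPrefix(arg, enable):
			arg = enable + removeFromList(strings.TrimPrefix(arg, enable), plugin)
		case strings.HasPrefix(arg, disable):
			arg = disable + addToList(strings.TrimPrefix(arg, disable), plugin)
			foundDisable = true
		}
		out = append(out, arg)
	}
	if !foundDisable {
		out = append(out, disable+plugin)
	}
	return out
}

func main() {
	cmd := []string{"kube-apiserver", "--enable-admission-plugins=NodeRestriction,PersistentVolumeLabel"}
	fmt.Println(DisableAdmissionPlugin(cmd, "PersistentVolumeLabel"))
}
```

Note that this sketch leaves an empty --enable-admission-plugins= flag behind if the removed plugin was the only enabled one. A production webhook would probably drop the flag in that case.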

The env field of the kube-apiserver container shall not contain any provider-specific environment variables (so it will be empty). If any provider-specific environment variables are needed, they should be added by webhooks.

The volumes field of the pod template of the kube-apiserver deployment, and respectively the volumeMounts field of the kube-apiserver container shall not contain any provider-specific Secret or ConfigMap resources. If such resources should be mounted as volumes, this should be done by webhooks.

The kube-apiserver Service will be of type ClusterIP. In this case, Gardener expects that for this Service no mutations happen.

kube-controller-manager

To deploy kube-controller-manager, Gardener shall create a deployment named kube-controller-manager in the Shoot namespace. It can be mutated by webhooks to apply any provider-specific changes to the standard configuration provided by Gardener.

The pod template of the kube-controller-manager deployment shall contain a container named kube-controller-manager.

The command field of the kube-controller-manager container shall contain the kube-controller-manager command line. It shall contain a number of provider-independent flags that should be ignored by webhooks, such as:

- --kubeconfig, --authentication-kubeconfig, --authorization-kubeconfig

- --leader-elect

- secure communications (--tls-cert-file, --tls-private-key-file, …)

- cluster CIDR and identity (--cluster-cidr, --cluster-name)

- sync settings (--concurrent-deployment-syncs, --concurrent-replicaset-syncs)

- horizontal pod autoscaler (--horizontal-pod-autoscaler-*)

- ports (--port, --secure-port)

The kube-controller-manager command line shall not contain any provider-specific flags, such as:

- --cloud-provider

- --cloud-config

- --configure-cloud-routes

- --external-cloud-volume-plugin

These flags can be added by webhooks if needed.
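As an illustration of how a webhook might add such a flag, the following sketch ensures that a flag with a given prefix is present exactly once in a command line. It uses only the standard library; the helper name ensureFlag and the value external are assumptions made for this example and are not taken from Gardener.

```go
package main

import (
	"fmt"
	"strings"
)

// ensureFlag returns a copy of command in which the first argument starting
// with prefix is replaced by prefix+value. Further occurrences are dropped,
// and the flag is appended if it was absent.
func ensureFlag(command []string, prefix, value string) []string {
	out := make([]string, 0, len(command)+1)
	replaced := false
	for _, arg := range command {
		if strings.HasPrefix(arg, prefix) {
			if !replaced {
				out = append(out, prefix+value)
				replaced = true
			}
			continue // drop duplicates of the same flag
		}
		out = append(out, arg)
	}
	if !replaced {
		out = append(out, prefix+value)
	}
	return out
}

func main() {
	cmd := []string{"/usr/local/bin/kube-controller-manager", "--leader-elect=true"}
	cmd = ensureFlag(cmd, "--cloud-provider=", "external")
	cmd = ensureFlag(cmd, "--cloud-provider=", "external") // calling it again changes nothing
	fmt.Println(cmd)
}
```

Because webhooks are invoked on every update of the Deployment, the mutation has to be idempotent, which is why the helper replaces an existing flag rather than appending blindly.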

The kube-controller-manager command line may contain a number of additional provider-independent flags. In general, webhooks should ignore these unless they are known to interfere with the desired kube-controller-manager behavior for the specific provider. Among the flags to be considered are:

The env field of the kube-controller-manager container shall not contain any provider-specific environment variables (so it will be empty). If any provider-specific environment variables are needed, they should be added by webhooks.

The volumes field of the pod template of the kube-controller-manager deployment, and respectively the volumeMounts field of the kube-controller-manager container shall not contain any provider-specific Secret or ConfigMap resources. If such resources should be mounted as volumes, this should be done by webhooks.

kube-scheduler

To deploy kube-scheduler, Gardener shall create a deployment named kube-scheduler in the Shoot namespace. It can be mutated by webhooks to apply any provider-specific changes to the standard configuration provided by Gardener.

The pod template of the kube-scheduler deployment shall contain a container named kube-scheduler.

The command field of the kube-scheduler container shall contain the kube-scheduler command line. It shall contain a number of provider-independent flags that should be ignored by webhooks, such as:

- --config

- --authentication-kubeconfig, --authorization-kubeconfig

- secure communications (--tls-cert-file, --tls-private-key-file, …)

- ports (--port, --secure-port)

The kube-scheduler command line may contain additional provider-independent flags. In general, webhooks should ignore these unless they are known to interfere with the desired kube-scheduler behavior for the specific provider. Among the flags to be considered are:

The kube-scheduler command line can’t contain provider-specific flags, and it makes no sense to specify provider-specific environment variables or mount provider-specific Secret or ConfigMap resources as volumes.

etcd-main and etcd-events

To deploy etcd, Gardener shall create two Etcd resources named etcd-main and etcd-events in the Shoot namespace. They can be mutated by webhooks to apply any provider-specific changes to the standard configuration provided by Gardener.

Gardener shall configure the Etcd resource completely to set up an etcd cluster which uses the default storage class of the seed cluster.

cloud-controller-manager

Gardener shall not deploy a cloud-controller-manager. If it is needed, it should be added by a ControlPlane controller.

CSI Controllers

Gardener shall not deploy a CSI controller. If it is needed, it should be added by a ControlPlane controller.

kubelet

To specify the kubelet configuration, Gardener shall create an OperatingSystemConfig resource with any name and purpose reconcile in the Shoot namespace. It can therefore also be mutated by webhooks to apply any provider-specific changes to the standard configuration provided by Gardener. Gardener may write multiple such resources with different type to the same Shoot namespace if multiple OSs are used.

The OSC resource shall contain a unit named kubelet.service, containing the corresponding systemd unit configuration file. The [Service] section of this file shall contain a single ExecStart option having the kubelet command line as its value.

The OSC resource shall contain a file with path /var/lib/kubelet/config/kubelet, which contains a KubeletConfiguration resource in YAML format. Most of the flags that can be specified in the kubelet command line can alternatively be specified as options in this configuration as well.

The kubelet command line shall contain a number of provider-independent flags that should be ignored by webhooks, such as:

- --config

- --bootstrap-kubeconfig, --kubeconfig

- --network-plugin (and, if it equals cni, also --cni-bin-dir and --cni-conf-dir)

- --node-labels

The kubelet command line shall not contain any provider-specific flags, such as:

- --cloud-provider

- --cloud-config

- --provider-id

These flags can be added by webhooks if needed.

The kubelet command line / configuration may contain a number of additional provider-independent flags / options. In general, webhooks should ignore these unless they are known to interfere with the desired kubelet behavior for the specific provider. Among the flags / options to be considered are:

- --enable-controller-attach-detach (enableControllerAttachDetach) - should be set to true if CSI plugins are used, but in general can also be ignored since its default value is also true, and this should work both with and without CSI plugins.

- --feature-gates (featureGates) - should contain a list of specific feature gates if CSI plugins are used. If CSI plugins are not used, the corresponding feature gates can be ignored since enabling them should not harm in any way.

5 - Conventions

General Conventions

All the extensions that are registered to Gardener are deployed to the garden runtime and seed clusters on which they are required (also see extension registration documentation).

Some of these extensions might need to create global resources in the seed (e.g., ClusterRoles). Because it cannot be checked or validated upfront that two extensions don’t use the same names, it’s important to have a naming scheme that avoids conflicts.

Consequently, this page should help answer some general questions that might come up when developing an extension.

Extension Classes

Each extension resource has a .spec.class field that is used to distinguish between different instances of the same extension type.

For extensions configured in Shoots, the class is named shoot (or left unspecified for backwards compatibility); for Seeds, the class is named seed.

Extension controllers ought to use the class field for event filtering (see HasClass Predicate) and during reconciliation.
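The class comparison, including the backwards-compatible treatment of an unset class, could look like the minimal sketch below. It is a standalone illustration using only the standard library; the function hasClass here is a hypothetical helper and not the HasClass predicate from the Gardener extension library.

```go
package main

import "fmt"

const (
	classShoot = "shoot"
	classSeed  = "seed"
)

// hasClass reports whether an extension resource with the given .spec.class
// value belongs to the wanted class. An unset or empty class counts as "shoot".
func hasClass(specClass *string, want string) bool {
	class := classShoot
	if specClass != nil && *specClass != "" {
		class = *specClass
	}
	return class == want
}

func main() {
	seed := classSeed
	fmt.Println(hasClass(nil, classShoot))   // unset class is treated as shoot
	fmt.Println(hasClass(&seed, classShoot)) // seed-class resources are filtered out
	fmt.Println(hasClass(&seed, classSeed))
}
```

A controller responsible for shoot extensions would drop events for which such a check returns false before they reach the reconciler.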

PriorityClasses

Extensions are not supposed to create and use self-defined PriorityClasses.

Instead, they can and should rely on well-known PriorityClasses managed by gardenlet.

High Availability of Deployed Components

Extensions might deploy components via Deployments, StatefulSets, etc., as part of the shoot control plane, or the seed or shoot system components.

In case a seed or shoot cluster is highly available, there are various failure tolerance types. For more information, see Highly Available Shoot Control Plane.

Accordingly, the replicas, topologySpreadConstraints or affinity settings of the deployed components might need to be adapted.

Instead of doing this one-by-one for each and every component, extensions can rely on a mutating webhook provided by Gardener.

Please refer to High Availability of Deployed Components for details.

To reduce costs and to improve the network traffic latency in multi-zone clusters, extensions can make a Service topology-aware.

Please refer to this document for details.

Is there a naming scheme for (global) resources?

As there is no formal process to validate non-existence of conflicts between two extensions, please follow these naming schemes when creating resources (especially, when creating global resources, but it’s in general a good idea for most created resources):

The resource name should be prefixed with extensions.gardener.cloud:<extension-type>-<extension-name>:<resource-name>, for example:

- extensions.gardener.cloud:provider-aws:some-controller-manager

- extensions.gardener.cloud:extension-certificate-service:cert-broker
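A small helper can keep such names consistent across an extension. The following is a minimal sketch; the function name globalResourceName is hypothetical.

```go
package main

import "fmt"

// globalResourceName builds a name following the scheme
// extensions.gardener.cloud:<extension-type>-<extension-name>:<resource-name>.
func globalResourceName(extensionType, extensionName, resourceName string) string {
	return fmt.Sprintf("extensions.gardener.cloud:%s-%s:%s", extensionType, extensionName, resourceName)
}

func main() {
	fmt.Println(globalResourceName("provider", "aws", "some-controller-manager"))
	fmt.Println(globalResourceName("extension", "certificate-service", "cert-broker"))
}
```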

How to create resources in the shoot cluster?

Some extensions might not only create resources in the seed cluster itself but also in the shoot cluster. Usually, every extension comes with a ServiceAccount and the required RBAC permissions when it gets installed to the seed.

However, extensions do not automatically receive credentials for the shoot cluster.

Extensions are supposed to use ManagedResources to manage resources in shoot clusters.

gardenlet deploys gardener-resource-manager instances into all shoot control planes, that will reconcile ManagedResources without a specified class (spec.class=null) in shoot clusters. Mind that Gardener acts on ManagedResources with the origin=gardener label. In order to prevent unwanted behavior, extensions should omit the origin label or provide their own unique value for it when creating such resources.

If you need to deploy a non-DaemonSet resource, Gardener automatically ensures that it only runs on nodes that are allowed to host system components and extensions. For more information, see System Components Webhook.

How to create kubeconfigs for the shoot cluster?

Historically, Gardener extensions used to generate kubeconfigs with client certificates for components they deploy into the shoot control plane.

For this, they reused the shoot cluster CA secret (ca) to issue new client certificates.

With gardener/gardener#4661 we moved away from using client certificates in favor of short-lived, auto-rotated ServiceAccount tokens. These tokens are managed by gardener-resource-manager’s TokenRequestor.

Extensions are supposed to reuse this mechanism for requesting tokens and a generic-token-kubeconfig for authenticating against shoot clusters.

With GEP-18 (Shoot cluster CA rotation), a dedicated CA will be used for signing client certificates (gardener/gardener#5779) which will be rotated when triggered by the shoot owner.

With this, extensions cannot reuse the ca secret anymore to issue client certificates.

Hence, extensions must switch to short-lived ServiceAccount tokens in order to support the CA rotation feature.

The generic-token-kubeconfig secret contains the CA bundle for establishing trust to shoot API servers. However, as the secret is immutable, its name changes with the rotation of the cluster CA.

Extensions need to look up the generic-token-kubeconfig.secret.gardener.cloud/name annotation on the respective Cluster object in order to determine which secret contains the current CA bundle.

The helper function extensionscontroller.GenericTokenKubeconfigSecretNameFromCluster can be used for this task.
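Conceptually, the lookup boils down to reading one annotation. The sketch below shows this on a plain annotation map using only the standard library. It is not the implementation of the helper named above; the function name, the error handling for a missing annotation, and the secret name in main are assumptions made for illustration.

```go
package main

import (
	"errors"
	"fmt"
)

const genericTokenKubeconfigAnnotation = "generic-token-kubeconfig.secret.gardener.cloud/name"

// secretNameFromAnnotations returns the name of the secret that currently holds
// the generic token kubeconfig, as announced via the Cluster annotations.
func secretNameFromAnnotations(annotations map[string]string) (string, error) {
	name, ok := annotations[genericTokenKubeconfigAnnotation]
	if !ok || name == "" {
		return "", errors.New("annotation " + genericTokenKubeconfigAnnotation + " is not set")
	}
	return name, nil
}

func main() {
	// Hypothetical annotation value; the real secret name changes with each CA rotation.
	annotations := map[string]string{genericTokenKubeconfigAnnotation: "generic-token-kubeconfig-a1b2c3"}
	name, err := secretNameFromAnnotations(annotations)
	fmt.Println(name, err)
}
```

Resolving the name on every reconciliation, rather than caching it, ensures that components pick up the new secret after a CA rotation.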

You can take a look at CA Rotation in Extensions for more details on the CA rotation feature in regard to extensions.

How to create certificates for the shoot cluster?

Gardener creates several certificate authorities (CA) that are used to create server certificates for various components.

For example, the shoot’s etcd has its own CA, the kube-aggregator has its own CA as well, and both are different to the actual cluster’s CA.

With GEP-18 (Shoot cluster CA rotation), extensions are required to do the same and generate dedicated CAs for their components (e.g. for signing a server certificate for cloud-controller-manager). They must not depend on the CA secrets managed by gardenlet.

Please see CA Rotation in Extensions for the exact requirements that extensions need to fulfill in order to support the CA rotation feature.

How to enforce a Pod Security Standard for extension namespaces?

The pod-security.kubernetes.io/enforce namespace label enforces the Pod Security Standards.

You can set the pod-security.kubernetes.io/enforce label for the extension namespace by adding the security.gardener.cloud/pod-security-enforce annotation to your ControllerRegistration. The value of the annotation is used as the value of the pod-security.kubernetes.io/enforce label. It is advised to set the annotation to the most restrictive Pod Security Standard that your extension pods comply with.

If you are using the ./hack/generate-controller-registration.sh script to generate your ControllerRegistration, you can use the -e, --pod-security-enforce option to set the security.gardener.cloud/pod-security-enforce annotation. If the option is not set, it defaults to baseline.

6 - Force Deletion

Force Deletion

From v1.81, Gardener supports Shoot Force Deletion. All extension controllers should also properly support it. This document outlines some important points that extension maintainers should keep in mind to support force deletion in their extensions.

Overall Principles

The following principles should always be upheld:

- All resources pertaining to the extension and managed by it should be appropriately handled and cleaned up by the extension when force deletion is initiated.

Implementation Details

ForceDelete Actuator Methods

Most extension controller implementations follow a common pattern where a generic Reconciler implementation delegates to an Actuator interface that contains the methods Reconcile, Delete, Migrate and Restore provided by the extension. A new method, ForceDelete, has been added to all such Actuator interfaces; see the infrastructure Actuator interface as an example. The generic reconcilers call this method if the Shoot has the annotation confirmation.gardener.cloud/force-deletion=true. Thus, the extension controller should implement it to forcefully delete resources if it is not possible to delete them gracefully. If graceful deletion is possible, ForceDelete can simply call the Delete method.
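The dispatch described here can be sketched as follows. The Actuator interface below is a reduced, hypothetical stand-in: the real Gardener interfaces contain more methods, and their methods take additional arguments. The function deleteExtension only mimics the decision made by a generic reconciler and is not Gardener code.

```go
package main

import "fmt"

const forceDeletionAnnotation = "confirmation.gardener.cloud/force-deletion"

// Actuator is a simplified stand-in for the deletion-related part of an
// extension actuator.
type Actuator interface {
	Delete() error
	ForceDelete() error
}

// gracefulActuator models an extension whose resources can always be deleted
// gracefully, so ForceDelete simply delegates to Delete.
type gracefulActuator struct {
	deleteCalls int
}

func (a *gracefulActuator) Delete() error {
	a.deleteCalls++
	return nil
}

func (a *gracefulActuator) ForceDelete() error {
	return a.Delete()
}

// deleteExtension calls ForceDelete if the Shoot carries the force-deletion
// annotation with value "true", and Delete otherwise.
func deleteExtension(a Actuator, shootAnnotations map[string]string) (forced bool, err error) {
	if shootAnnotations[forceDeletionAnnotation] == "true" {
		return true, a.ForceDelete()
	}
	return false, a.Delete()
}

func main() {
	a := &gracefulActuator{}
	forced, err := deleteExtension(a, map[string]string{forceDeletionAnnotation: "true"})
	fmt.Println(forced, err, a.deleteCalls)
}
```

An extension that manages third-party infrastructure would replace the delegation in ForceDelete with logic that removes finalizers or skips unreachable resources.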

Extension Controllers Based on Generic Actuators

In practice, the implementation of many extension controllers (for example, the controlplane and worker controllers in most provider extensions) is based on a generic Actuator implementation that only delegates to extension methods for behavior that is truly provider-specific. In all such cases, the ForceDelete method has already been implemented in a way that should suit most extensions. If it doesn’t suit your extension, then the ForceDelete method needs to be overridden; see the Azure controlplane controller as an example.

Extension Controllers Not Based on Generic Actuators

The implementation of some extension controllers (for example, the infrastructure controllers in all provider extensions) is not based on a generic Actuator implementation. Such extension controllers must always provide a proper implementation of the ForceDelete method according to the above guidelines; see the AWS infrastructure controller as an example. In practice, this might result in code duplication between the different extensions, since the ForceDelete code is usually not OS-specific.

Some General Implementation Examples

- If the extension deploys only resources in the shoot cluster not backed by infrastructure in third-party systems, then performing the regular deletion code (actuator.Delete) will suffice in the majority of cases (e.g., https://github.com/gardener/gardener-extension-shoot-networking-filter/blob/1d95a483d803874e8aa3b1de89431e221a7d574e/pkg/controller/lifecycle/actuator.go#L175-L178).

- If the extension deploys resources which are backed by infrastructure in third-party systems:

  - If the resource is in the Seed cluster, the extension should remove the finalizers and delete the resource. This is needed especially if the resource is a custom resource since gardenlet will not be aware of this resource and cannot take action.

  - If the resource is in the Shoot and if it’s deployed by a ManagedResource, then gardenlet will take care to forcefully delete it in a later step of force-deletion. If the resource is not deployed via a ManagedResource, then it wouldn’t block the deletion flow anyway since it is in the Shoot cluster. In both cases, the extension controller can ignore the resource and return nil.

7 - Healthcheck Library

Health Check Library

Goal

Typically, an extension reconciles a specific resource (Custom Resource Definitions (CRDs)) and creates / modifies resources in the cluster (via helm, managed resources, kubectl, …).

We call these API Objects ‘dependent objects’ - as they are bound to the lifecycle of the extension.

The goal of this library is to enable extensions to set up health checks for their ‘dependent objects’ with minimal effort.

Usage

The library provides a generic controller with the ability to register any resource that satisfies the extension object interface.

An example is the Worker CRD.

Health check functions for commonly used dependent objects can be reused and registered with the controller, such as:

- Deployment

- DaemonSet

- StatefulSet

- ManagedResource (Gardener specific)

See the below example taken from the provider-aws.

    health.DefaultRegisterExtensionForHealthCheck(
        aws.Type,
        extensionsv1alpha1.SchemeGroupVersion.WithKind(extensionsv1alpha1.WorkerResource),
        func() runtime.Object { return &extensionsv1alpha1.Worker{} },
        mgr,  // controller runtime manager
        opts, // options for the health check controller
        nil,  // custom predicates
        map[extensionshealthcheckcontroller.HealthCheck]string{
            general.CheckManagedResource(genericactuator.McmShootResourceName): string(gardencorev1beta1.ShootSystemComponentsHealthy),
            general.CheckSeedDeployment(aws.MachineControllerManagerName):      string(gardencorev1beta1.ShootEveryNodeReady),
            worker.SufficientNodesAvailable():                                  string(gardencorev1beta1.ShootEveryNodeReady),
        })

This creates a health check controller that reconciles the extensions.gardener.cloud/v1alpha1.Worker resource with the spec.type ‘aws’.

Three health check functions are registered that are executed during reconciliation.

Each health check is mapped to a single HealthConditionType that results in conditions with the same condition.type (see below).

To contribute to the Shoot’s health, the following conditions can be used: SystemComponentsHealthy, EveryNodeReady, ControlPlaneHealthy, ObservabilityComponentsHealthy. For workerless Shoots, the EveryNodeReady condition is not present, so it can’t be used.

Gardener (gardenlet) checks each extension for conditions matching these types.

However, extensions are free to choose any HealthConditionType.

For more information, see Contributing to Shoot Health Status Conditions.

A health check has to satisfy the below interface.

You can find implementation examples in the healthcheck folder.

    type HealthCheck interface {
        // Check is the function that executes the actual health check
        Check(context.Context, types.NamespacedName) (*SingleCheckResult, error)
        // InjectSeedClient injects the seed client
        InjectSeedClient(client.Client)
        // InjectShootClient injects the shoot client
        InjectShootClient(client.Client)
        // SetLoggerSuffix injects the logger
        SetLoggerSuffix(string, string)
        // DeepCopy clones the healthCheck
        DeepCopy() HealthCheck
    }

The health check controller regularly (default: 30s) reconciles the extension resource and executes the registered health checks for the dependent objects.

As a result, the controller writes condition(s) to the status of the extension containing the health check result.

In our example, two checks are mapped to ShootEveryNodeReady and one to ShootSystemComponentsHealthy, leading to conditions with two distinct HealthConditionTypes (condition.type):

    status:
      conditions:
        - lastTransitionTime: "20XX-10-28T08:17:21Z"
          lastUpdateTime: "20XX-11-28T08:17:21Z"
          message: (1/1) Health checks successful
          reason: HealthCheckSuccessful
          status: "True"
          type: SystemComponentsHealthy
        - lastTransitionTime: "20XX-10-28T08:17:21Z"
          lastUpdateTime: "20XX-11-28T08:17:21Z"
          message: (2/2) Health checks successful
          reason: HealthCheckSuccessful
          status: "True"
          type: EveryNodeReady

Please note that there are four statuses: True, False, Unknown, and Progressing.

- True should be used for successful health checks.

- False should be used for unsuccessful/failing health checks.

- Unknown should be used when there was an error trying to determine the health status.

- Progressing should be used to indicate that the health status did not succeed but for expected reasons (e.g., a cluster scale up/down could make the standard health check fail because something is wrong with the Machines, however, it’s actually an expected situation and known to be completed within a few minutes).

Health checks that report Progressing should also provide a timeout, after which this “progressing situation” is expected to be completed.

The health check library will automatically transition the status to False if the timeout was exceeded.
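The timeout rule can be pictured with the following minimal sketch. It is not the library's implementation; the function name and the way the elapsed time is passed in are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"time"
)

const (
	statusTrue        = "True"
	statusFalse       = "False"
	statusUnknown     = "Unknown"
	statusProgressing = "Progressing"
)

// effectiveStatus turns Progressing into False once the check has been
// progressing for longer than timeout. All other statuses pass through unchanged.
func effectiveStatus(status string, progressingFor, timeout time.Duration) string {
	if status == statusProgressing && progressingFor > timeout {
		return statusFalse
	}
	return status
}

func main() {
	timeout := 5 * time.Minute // example timeout reported by a health check
	fmt.Println(effectiveStatus(statusProgressing, 2*time.Minute, timeout))
	fmt.Println(effectiveStatus(statusProgressing, 7*time.Minute, timeout))
	fmt.Println(effectiveStatus(statusTrue, 7*time.Minute, timeout))
}
```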

Additional Considerations

It is up to the extension to decide how to conduct health checks, though it is recommended to make use of the built-in health check functionality of ManagedResources for trivial checks.

By deploying the dependent resources via managed resources, the gardener-resource-manager conducts basic checks for different API objects out-of-the-box (e.g., Deployments, DaemonSets, …) and writes health conditions.

By default, Gardener performs health checks for all the ManagedResources created in the shoot namespaces.

Their status will be aggregated to the Shoot conditions according to the following rules:

- Health checks of ManagedResource with .spec.class=nil are aggregated to the SystemComponentsHealthy condition

- Health checks of ManagedResource with .spec.class!=nil are aggregated to the ControlPlaneHealthy condition unless the ManagedResource is labeled with care.gardener.cloud/condition-type=<other-condition-type>. In such case, it is aggregated to the <other-condition-type>.
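Expressed as code, the two rules above amount to the following decision. This is a minimal sketch that follows the rules exactly as stated; the function name is hypothetical and the code is not taken from gardenlet.

```go
package main

import "fmt"

const conditionTypeLabel = "care.gardener.cloud/condition-type"

// shootConditionType returns the Shoot condition to which the health of a
// ManagedResource with the given .spec.class and labels is aggregated.
func shootConditionType(class *string, labels map[string]string) string {
	if class == nil {
		return "SystemComponentsHealthy"
	}
	if conditionType := labels[conditionTypeLabel]; conditionType != "" {
		return conditionType
	}
	return "ControlPlaneHealthy"
}

func main() {
	class := "seed"
	fmt.Println(shootConditionType(nil, nil))
	fmt.Println(shootConditionType(&class, nil))
	fmt.Println(shootConditionType(&class, map[string]string{conditionTypeLabel: "ObservabilityComponentsHealthy"}))
}
```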

More sophisticated health checks should be implemented by the extension controller itself (implementing the HealthCheck interface).

8 - Heartbeat

Heartbeat Controller

The heartbeat controller renews a dedicated Lease object named gardener-extension-heartbeat every 30 seconds by default. This Lease is used for heartbeats similar to how gardenlet uses Lease objects for seed heartbeats (see gardenlet heartbeats).

The gardener-extension-heartbeat Lease can be checked by other controllers to verify that the corresponding extension controller is still running. Currently, gardenlet checks this Lease when performing shoot health checks and expects to find the Lease inside the namespace where the extension controller is deployed by the corresponding ControllerInstallation. For each extension resource deployed in the Shoot control plane, gardenlet finds the corresponding gardener-extension-heartbeat Lease resource and checks whether the Lease’s .spec.renewTime is older than the allowed threshold for stale extension health checks - in this case, gardenlet considers the health check report for an extension resource as “outdated” and reflects this in the Shoot status.
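The staleness check itself is a simple time comparison, sketched below with the standard library only. The lease type is a reduced stand-in for a Kubernetes Lease, and the threshold value in main is an assumption for illustration, not gardenlet's actual setting.

```go
package main

import (
	"fmt"
	"time"
)

// lease is a reduced, hypothetical stand-in that only carries the field
// relevant for the heartbeat check.
type lease struct {
	RenewTime time.Time // corresponds to .spec.renewTime
}

// heartbeatOutdated reports whether the lease was last renewed more than
// threshold before now.
func heartbeatOutdated(l lease, now time.Time, threshold time.Duration) bool {
	return now.Sub(l.RenewTime) > threshold
}

func main() {
	now := time.Now()
	threshold := 2 * time.Minute // assumed value, for illustration only

	fresh := lease{RenewTime: now.Add(-30 * time.Second)}
	stale := lease{RenewTime: now.Add(-10 * time.Minute)}
	fmt.Println(heartbeatOutdated(fresh, now, threshold), heartbeatOutdated(stale, now, threshold))
}
```

Passing now as a parameter instead of calling time.Now inside the function keeps the check deterministic in tests.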

9 - Logging And Monitoring

Logging and Monitoring for Extensions

Gardener provides an integrated logging and monitoring stack for alerting, monitoring, and troubleshooting of its managed components by operators or end users. For further information on how to make use of it in these roles, refer to the corresponding guides for exploring logs and for monitoring with Plutono.

The components that constitute the logging and monitoring stack are managed by Gardener. By default, it deploys Prometheus and Alertmanager (managed via prometheus-operator), and Plutono into the garden namespace of all seed clusters. If logging is enabled in the gardenlet configuration (logging.enabled), it will deploy fluent-operator and Vali in the garden namespace too.

Each shoot namespace hosts managed logging and monitoring components. As part of the shoot reconciliation flow, Gardener deploys a shoot-specific Prometheus, blackbox-exporter, Plutono, and, if configured, an Alertmanager into the shoot namespace, next to the other control plane components. If the logging is enabled in the gardenlet configuration (logging.enabled) and the shoot purpose is not testing, it deploys a shoot-specific Vali in the shoot namespace too.

The logging and monitoring stack is extensible by configuration. Gardener extensions can take advantage of that and contribute monitoring configurations encoded in ConfigMaps for their own, specific dashboards, alerts and other supported assets and integrate with it. As with other Gardener resources, they will be continuously reconciled. The extensions can also directly deploy fluent-operator custom resources, which will be created in the seed cluster and plugged into the fluent-bit instance.

This guide is about the roles and extensibility options of the logging and monitoring stack components, and how to integrate extensions with monitoring and logging.

Monitoring

Seed Cluster

Cache Prometheus

The central Prometheus instance in the garden namespace (called “cache Prometheus”) fetches metrics and data from all seed cluster nodes and all seed cluster pods.

It uses the federation concept to allow the shoot-specific instances to scrape only the metrics for the pods of the control plane they are responsible for.

This mechanism allows scraping the metrics for the nodes/pods once for the whole cluster and distributing them afterwards.

For more details, continue reading here.

Typically, this is not necessary, but in case an extension wants to extend the configuration for this cache Prometheus, they can create the prometheus-operator’s custom resources and label them with prometheus=cache, for example:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        prometheus: cache
      name: cache-my-component
      namespace: garden
    spec:
      selector:
        matchLabels:
          app: my-component
      endpoints:
      - metricRelabelings:
        - action: keep
          regex: ^(metric1|metric2|...)$
          sourceLabels:
          - __name__
        port: metrics

Seed Prometheus

Another Prometheus instance in the garden namespace (called “seed Prometheus”) fetches metrics and data from seed system components, kubelets, cAdvisors, and extensions.

If you want your extension pods to be scraped then they must be annotated with prometheus.io/scrape=true and prometheus.io/port=<metrics-port>.

For more details, continue reading here.

Typically, this is not necessary, but in case an extension wants to extend the configuration for this seed Prometheus, they can create the prometheus-operator’s custom resources and label them with prometheus=seed, for example:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        prometheus: seed
      name: seed-my-component
      namespace: garden
    spec:
      selector:
        matchLabels:
          app: my-component
      endpoints:
      - metricRelabelings:
        - action: keep
          regex: ^(metric1|metric2|...)$
          sourceLabels:
          - __name__
        port: metrics

Aggregate Prometheus

Another Prometheus instance in the garden namespace (called “aggregate Prometheus”) stores pre-aggregated data from the cache Prometheus and shoot Prometheus.

An ingress exposes this Prometheus instance allowing it to be scraped from another cluster.

For more details, continue reading here.

Typically, this is not necessary, but in case an extension wants to extend the configuration for this aggregate Prometheus, they can create the prometheus-operator’s custom resources and label them with prometheus=aggregate, for example:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        prometheus: aggregate
      name: aggregate-my-component
      namespace: garden
    spec:
      selector:
        matchLabels:
          app: my-component
      endpoints:
      - metricRelabelings:
        - action: keep
          regex: ^(metric1|metric2|...)$
          sourceLabels:
          - __name__
        port: metrics

Plutono

A Plutono instance is deployed by gardenlet into the seed cluster’s garden namespace for visualizing monitoring metrics and logs via dashboards.

In order to provide custom dashboards, create a ConfigMap in the garden namespace labelled with dashboard.monitoring.gardener.cloud/seed=true that contains the respective JSON documents, for example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        dashboard.monitoring.gardener.cloud/seed: "true"
      name: extension-foo-my-custom-dashboard
      namespace: garden
    data:
      my-custom-dashboard.json: <dashboard-JSON-document>

Shoot Cluster

Shoot Prometheus

The shoot-specific metrics are then made available to operators and users in the shoot Plutono, using the shoot Prometheus as data source.

Extension controllers might deploy components as part of their reconciliation next to the shoot’s control plane.

Examples for this would be a cloud-controller-manager or CSI controller deployments. Extensions that want to have their managed control plane components integrated with monitoring can contribute their per-shoot configuration for scraping Prometheus metrics, Alertmanager alerts or Plutono dashboards.

Extensions Monitoring Integration

In case an extension wants to extend the configuration for the shoot Prometheus, they can create the prometheus-operator’s custom resources and label them with prometheus=shoot.

ServiceMonitor

When the component runs in the seed cluster (e.g., as part of the shoot control plane), ServiceMonitor resources should be used:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        prometheus: shoot
      name: shoot-my-controlplane-component
      namespace: shoot--foo--bar
    spec:
      selector:
        matchLabels:
          app: my-component
      endpoints:
      - metricRelabelings:
        - action: keep
          regex: ^(metric1|metric2|...)$
          sourceLabels:
          - __name__
        port: metrics

In case HTTPS scheme is used, the CA certificate should be provided like this:

    spec:
      scheme: HTTPS
      tlsConfig:
        ca:
          secret:
            name: <name-of-ca-bundle-secret>
            key: bundle.crt

In case the component requires credentials when contacting its metrics endpoint, provide them like this:

    spec:
      authorization:
        credentials:
          name: <name-of-secret-containing-credentials>
          key: <data-key-in-secret>

If the component delegates authorization to the kube-apiserver of the shoot cluster, you can use the shoot-access-prometheus-shoot secret:

    spec:
      authorization:
        credentials:
          name: shoot-access-prometheus-shoot
          key: token
      # in case the component's server certificate is signed by the cluster CA:
      scheme: HTTPS
      tlsConfig:
        ca:
          secret:
            name: <name-of-ca-bundle-secret>
            key: bundle.crt

ScrapeConfigs

If the component runs in the shoot cluster itself, metrics are scraped via the kube-apiserver proxy.

In this case, Prometheus needs to authenticate itself with the API server.

This can be done like this:

    apiVersion: monitoring.coreos.com/v1alpha1
    kind: ScrapeConfig
    metadata:
      labels:
        prometheus: shoot
      name: shoot-my-cluster-component
      namespace: shoot--foo--bar
    spec:
      authorization:
        credentials:
          name: shoot-access-prometheus-shoot
          key: token
      scheme: HTTPS
      tlsConfig:
        ca:
          secret:
            name: <name-of-ca-bundle-secret>
            key: bundle.crt
      kubernetesSDConfigs:
      - apiServer: https://kube-apiserver
        authorization:
          credentials:
            name: shoot-access-prometheus-shoot
            key: token
        followRedirects: true
        namespaces:
          names:
          - kube-system
        role: endpoints
        tlsConfig:
          ca:
            secret:
              name: <name-of-ca-bundle-secret>
              key: bundle.crt
          cert: {}
      metricRelabelings:
      - sourceLabels:
        - __name__
        action: keep
        regex: ^(metric1|metric2)$
      - sourceLabels:
        - namespace
        action: keep
        regex: kube-system
      relabelings:
      - action: replace
        replacement: my-cluster-component
        targetLabel: job
      - sourceLabels: [__meta_kubernetes_service_name, __meta_kubernetes_pod_container_port_name]
        separator: ;
        regex: my-component-service;metrics
        replacement: $1
        action: keep
      - sourceLabels: [__meta_kubernetes_endpoint_node_name]
        separator: ;
        regex: (.*)
        targetLabel: node
        replacement: $1
        action: replace
      - sourceLabels: [__meta_kubernetes_pod_name]
        separator: ;
        regex: (.*)
        targetLabel: pod
        replacement: $1
        action: replace
      - targetLabel: __address__
        replacement: kube-apiserver:443
      - sourceLabels: [__meta_kubernetes_pod_name, __meta_kubernetes_pod_container_port_number]
        separator: ;
        regex: (.+);(.+)
        targetLabel: __metrics_path__
        replacement: /api/v1/namespaces/kube-system/pods/${1}:${2}/proxy/metrics
        action: replace

Developers can make use of the pkg/component/observability/monitoring/prometheus/shoot.ClusterComponentScrapeConfigSpec function in order to generate a ScrapeConfig like above.

PrometheusRule

Similar to ServiceMonitors, PrometheusRules can be created with the prometheus=shoot label:

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      labels:
        prometheus: shoot
      name: shoot-my-component
      namespace: shoot--foo--bar
    spec:
      groups:
      - name: my.rules
        rules:
        # ...

Plutono Dashboards

A Plutono instance is deployed by gardenlet into the shoot cluster’s namespace for visualizing monitoring metrics and logs via dashboards.

In order to provide custom dashboards, create a ConfigMap in the shoot cluster’s namespace labelled with dashboard.monitoring.gardener.cloud/shoot=true that contains the respective JSON documents, for example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      labels:
        dashboard.monitoring.gardener.cloud/shoot: "true"
      name: extension-foo-my-custom-dashboard
      namespace: shoot--project--name
    data:
      my-custom-dashboard.json: <dashboard-JSON-document>

Logging

In Kubernetes clusters, container logs are not persistent: they are lost when a container is stopped or destroyed. Gardener addresses this problem for the components hosted in a seed cluster with its own managed logging solution. It is integrated with the Gardener monitoring stack so that all troubleshooting context is available in one place.

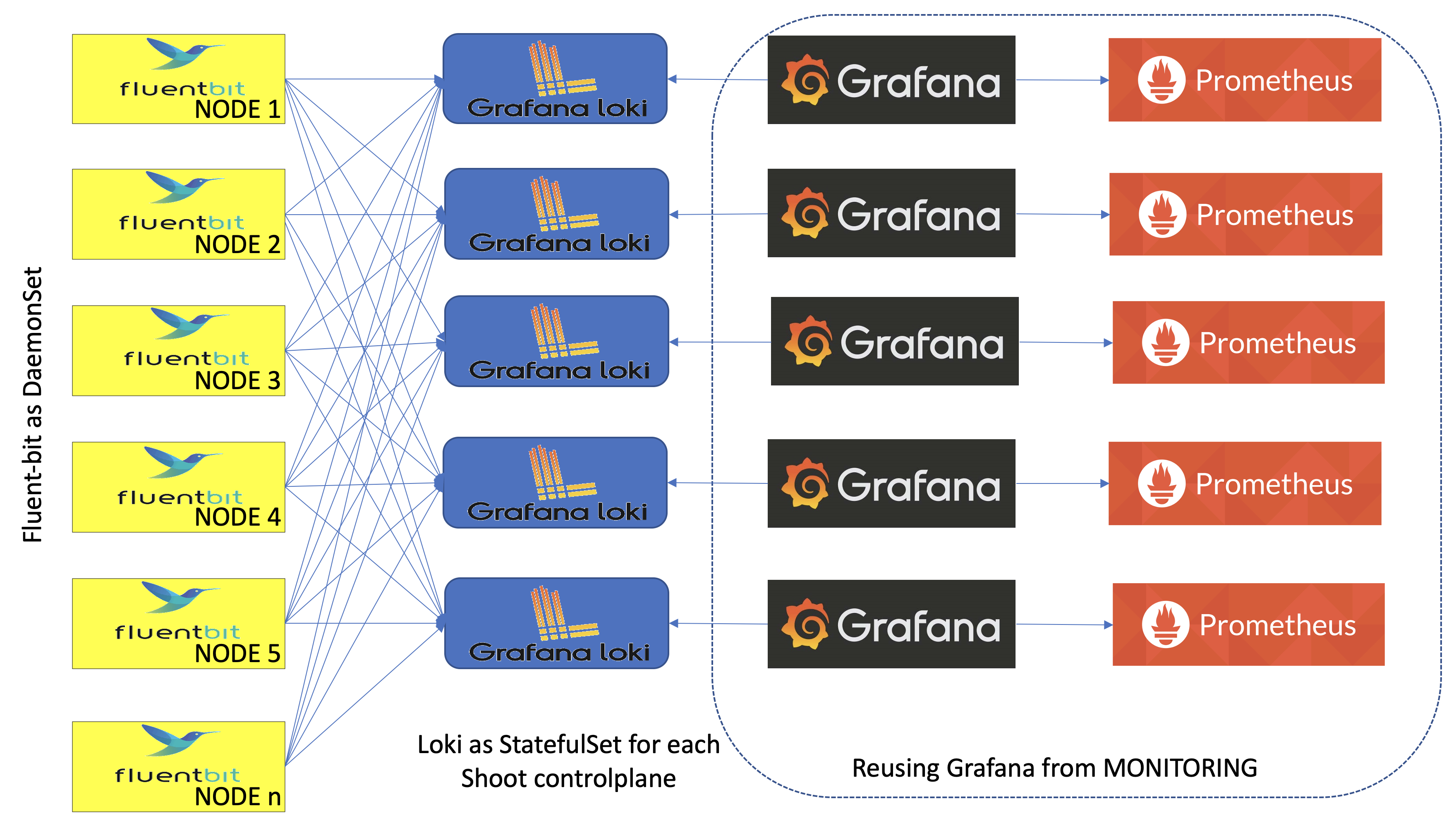

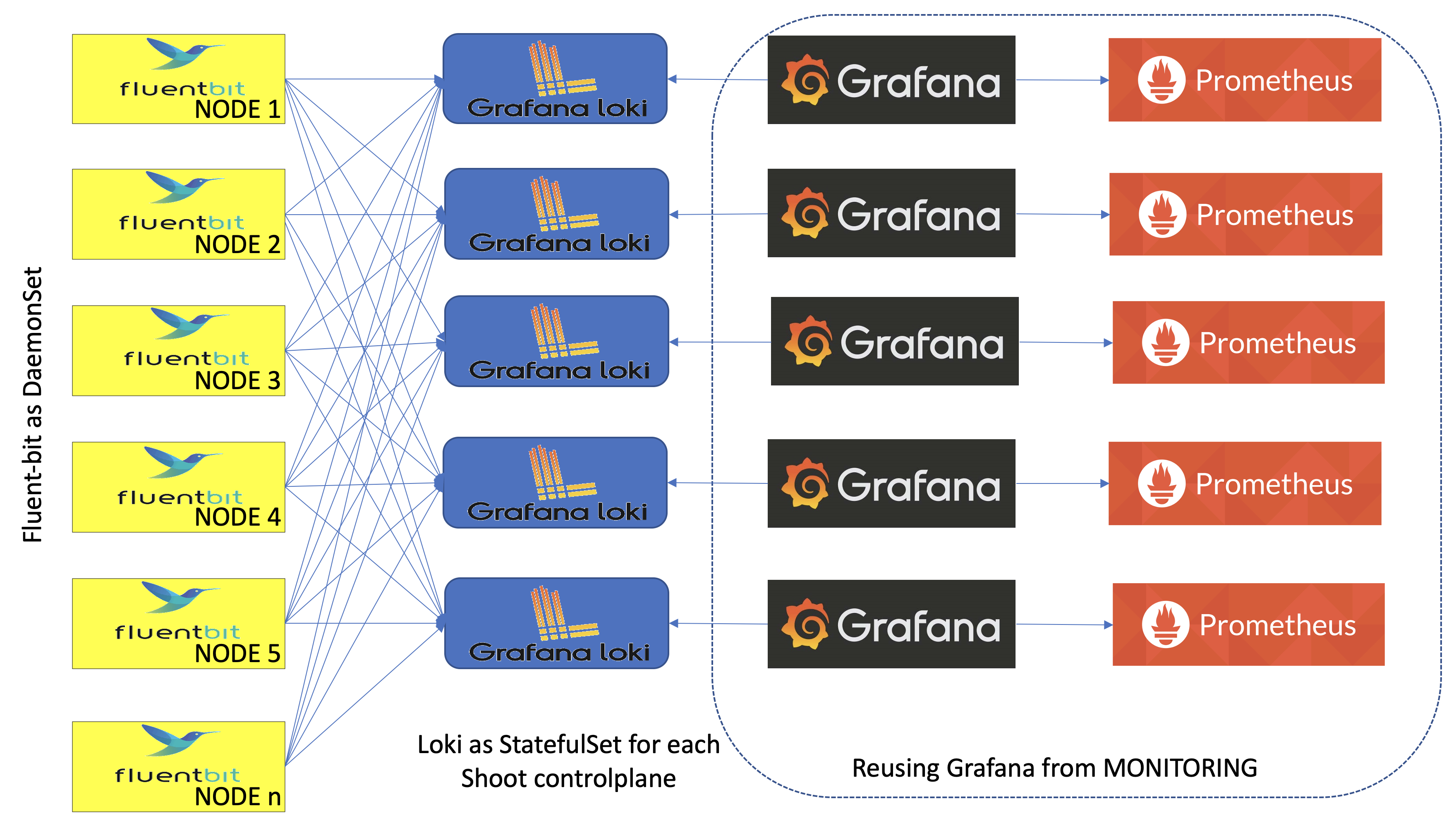

Gardener logging consists of components in three roles: log collectors and forwarders, log persistency, and exploration/consumption interfaces. All of them live in the seed clusters in multiple instances:

- Logs are persisted by Vali instances deployed as StatefulSets: one per shoot namespace, if logging is enabled in the gardenlet configuration (logging.enabled) and the shoot purpose is not testing, and one in the garden namespace. The shoot instances store logs from the control plane components hosted there. The garden Vali instance is responsible for logs from the rest of the seed namespaces: kube-system, garden, extension-*, and others.

- Fluent-bit DaemonSets deployed by the fluent-operator on each seed node collect logs from it. A custom plugin distributes the collected log messages to the Vali instances that they are intended for. This allows fetching the logs once for the whole cluster and distributing them afterwards.

- Plutono is the UI component used to explore monitoring and log data together for easier troubleshooting and in context. Plutono instances are configured to use the corresponding Vali instances, sharing the same namespace as data providers. There is one Plutono Deployment in the garden namespace and one Deployment per shoot namespace (exposed to the end users and to the operators).
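As a minimal sketch of the condition mentioned in the list above, logging could be switched on in the gardenlet configuration as shown here. Only the logging.enabled field is taken from the text; the apiVersion is an assumption based on the gardenlet component configuration, and all other fields are omitted.

```yaml
# Sketch of a gardenlet configuration fragment; all other fields are omitted.
apiVersion: gardenlet.config.gardener.cloud/v1alpha1  # assumed API version
kind: GardenletConfiguration
logging:
  enabled: true  # prerequisite for the per-shoot Vali instances described above
```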

Logs can be produced from various sources, such as containers or systemd, and in different formats. The fluent-bit design supports a configurable data pipeline to address that problem. Gardener provides such configuration for logs produced by all its core managed components as ClusterFilters and ClusterParsers. Extensions can contribute their own specific configurations as fluent-operator custom resources, too. See, for example, the logging configuration for the Gardener AWS provider extension.

Fluent-bit Log Parsers and Filters

To integrate with Gardener logging, extensions can and should specify how fluent-bit will handle the logs produced by the managed components that they contribute to Gardener. Normally, that requires configuring a parser for the specific logging format, if none of the available parsers is applicable, and a filter defining how to apply it. For a complete reference of the configuration options, refer to fluent-bit’s documentation.

To contribute its own configuration to the data pipelines of the fluent-bit agents, an extension must deploy a fluent-operator custom resource labeled with fluentbit.gardener/type: seed in the seed cluster.

Note: Take care to provide the correct data pipeline elements in the corresponding fields and not to mix them.

Example: Logging configuration for provider-specific cloud-controller-manager deployed into shoot namespaces that reuses the kube-apiserver-parser defined in logging.go to parse the component logs:

    apiVersion: fluentbit.fluent.io/v1alpha2
    kind: ClusterFilter
    metadata:
      labels:
        fluentbit.gardener/type: "seed"
      name: cloud-controller-manager-aws-cloud-controller-manager
    spec:
      filters:
      - parser:
          keyName: log
          parser: kube-apiserver-parser
          reserveData: true
      match: kubernetes.*cloud-controller-manager*aws-cloud-controller-manager*

Further details on how to define and use parsers, with examples, can be found in the following guide.
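If none of the existing parsers fits, an extension could ship its own ClusterParser alongside the filter. The following is only a sketch: the resource name, the time key, and the time format are hypothetical and would have to be adapted to the actual log format of the component.

```yaml
apiVersion: fluentbit.fluent.io/v1alpha2
kind: ClusterParser
metadata:
  labels:
    fluentbit.gardener/type: "seed"    # same label as required for filters
  name: myextension-json-parser        # hypothetical; must be unique cluster-wide
spec:
  json:
    timeKey: ts                        # hypothetical field carrying the timestamp
    timeFormat: "%Y-%m-%dT%H:%M:%S%z"  # assumed timestamp layout
```

A ClusterFilter would then reference this parser by name (parser: myextension-json-parser), in the same way the example above references kube-apiserver-parser.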

Plutono

The two types of Plutono instances found in a seed cluster are configured to expose logs of different origin in their dashboards:

- Garden Plutono dashboards expose logs from non-shoot namespaces of the seed clusters

- Shoot Plutono dashboards expose logs from the shoot cluster namespace where they belong

  - Kube Apiserver

  - Kube Controller Manager

  - Kube Scheduler

  - Cluster Autoscaler

  - VPA components

  - Kubernetes Pods

If the type of logs exposed in the Plutono instances needs to be changed, it is necessary to update the corresponding instance dashboard configurations.

Tips

- Be careful to create ClusterFilters and ClusterParsers with unique names because they are not namespaced. We use pod_name for pods with one container and pod_name--container_name for pods with multiple containers.

- Be careful to match exactly the log names that you need for a particular parser in your filters configuration. The regular expression you supply will match names in the form kubernetes.pod_name.<metadata>.container_name. If there are extensions with the same container and pod names, they will all match the same parser in a filter. That may be the desired effect if they all share the same log format, but it is a problem if they don’t. To solve it, either the pod or container names must be unique, and the regular expression in the filter has to match that unique pattern. A recommended approach is to prefix containers with the extension name and tune the regular expression to match it. For example, using myextension-container as the container name and the regular expression kubernetes.mypod.*myextension-container will guarantee a match of the right log name. Make sure that the regular expression does not match more than you expect. For example, kubernetes.systemd.*systemd.* will match both systemd-service and systemd-monitor-service. You will want to be as specific as possible.

- It’s a good idea to put the logging configuration into the Helm chart that also deploys the extension controller, while the monitoring configuration can be part of the Helm chart/deployment routine that deploys the component managed by the controller.

- For monitoring to work in the Gardener context, scrape targets need to be labelled appropriately, see NetworkPolicys In Garden, Seed, Shoot Clusters for details.

References and Additional Resources

10 - Managedresources

Deploy Resources to the Shoot Cluster

We have introduced a component called gardener-resource-manager that is deployed as part of every shoot control plane in the seed.

One of its tasks is to manage custom resources called ManagedResources.

Managed resources contain Kubernetes resources that shall be created, reconciled, updated, and deleted by the gardener-resource-manager.

Extension controllers may create these ManagedResources in the shoot namespace if they need to create any resource in the shoot cluster itself, for example RBAC roles (or anything else).

Please take a look at the respective documentation.
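As a rough sketch of what such an extension controller might create, the manifests below pair a Secret that carries the resources to deploy with a ManagedResource that references it. The names (extension-foo-shoot-rbac, extension-foo) and the RBAC rules are hypothetical. It is also an assumption here that a ManagedResource without a resource class, created in the shoot namespace, is applied to the shoot cluster; the referenced documentation is authoritative.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: extension-foo-shoot-rbac       # hypothetical name
  namespace: shoot--foo--bar
type: Opaque
stringData:
  # Each key holds one or more manifests that should end up in the shoot cluster.
  clusterrole.yaml: |
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: extension-foo              # hypothetical role
    rules:
    - apiGroups: [""]
      resources: ["nodes"]
      verbs: ["get", "list", "watch"]
---
apiVersion: resources.gardener.cloud/v1alpha1
kind: ManagedResource
metadata:
  name: extension-foo-shoot-rbac       # hypothetical name
  namespace: shoot--foo--bar
spec:
  secretRefs:
  - name: extension-foo-shoot-rbac     # the Secret defined above
```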

11 - Migration

Control Plane Migration

Control Plane Migration is a Gardener feature that was implemented as proposed in GEP-7 Shoot Control Plane Migration. It should be properly supported by all extension controllers. This document outlines some important points that extension maintainers should keep in mind to properly support migration in their extensions.

Overall Principles

The following principles should always be upheld:

- All state maintained by the extension that is external to the seed cluster, for example infrastructure resources in a cloud provider, DNS entries, etc., should be kept during the migration. No such state should be deleted and then recreated, as this might disrupt the availability of the shoot cluster.

- All Kubernetes resources maintained by the extension in the shoot cluster itself should also be kept during the migration. No such resources should be deleted and then recreated.

Migrate and Restore Operations

Two new operations have been introduced in Gardener. They can be specified as values of the gardener.cloud/operation annotation on an extension resource to indicate that an operation different from a normal reconcile should be performed by the corresponding extension controller:

- The migrate operation is used to ask the extension controller in the source seed to stop reconciling extension resources (in case they are requeued due to errors) and perform cleanup activities, if such are required. These cleanup activities might involve removing finalizers on resources in the shoot namespace that have been previously created by the extension controller and deleting them without actually deleting any resources external to the seed cluster. This is also the last opportunity for extensions to persist their state into the .status.state field of the reconciled extension resource before it is restored in the new destination seed cluster.

- The restore operation is used to ask the extension controller in the destination seed to restore any state saved in the extension resource status, before performing the actual reconciliation.

Unlike the reconcile operation, extension controllers must remove the gardener.cloud/operation annotation at the end of a successful reconciliation when the current operation is migrate or restore, not at the beginning of a reconciliation.
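For illustration, an extension resource that is about to be migrated might look roughly like the sketch below. The Infrastructure kind is just one example of an extension resource; the resource name, the provider type, and the state placeholder are hypothetical, and the remaining spec fields are omitted.

```yaml
apiVersion: extensions.gardener.cloud/v1alpha1
kind: Infrastructure
metadata:
  name: infrastructure                 # hypothetical name
  namespace: shoot--foo--bar
  annotations:
    # "migrate" in the source seed; "restore" in the destination seed.
    # The controller removes the annotation only after the operation succeeded.
    gardener.cloud/operation: migrate
spec:
  type: foo                            # hypothetical provider type
  # ... remaining spec fields omitted
status:
  state: <extension-specific-state>    # persisted during migrate, read during restore
```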

Cleaning-Up Source Seed Resources

All resources in the source seed that have been created by an extension controller, for example secrets, config maps, managed resources, etc., should be properly cleaned up by the extension controller when the current operation is migrate. As mentioned above, such resources should be deleted without actually deleting any resources external to the seed cluster.

There is one exception to this: Secrets labeled with persist=true created via the secrets manager. They should be kept (i.e., the Cleanup function of secrets manager should not be called) and will be garbage collected automatically at the end of the migrate operation. This ensures that they can be properly persisted in the ShootState resource and get restored on the new destination seed cluster.