Features

1 - Hibernation

Some clusters need to be up all the time - typically, they host some kind of production workload. Others might be used for development or testing during business hours only. Keeping the latter up and running around the clock is a waste of money. Gardener can help you here with its “hibernation” feature. Essentially, hibernation means shutting down all components of a cluster.

How Hibernation Works

The hibernation flow for a shoot attempts to reduce the consumed resources as much as possible. Hence, everything that is not state-related is decommissioned.

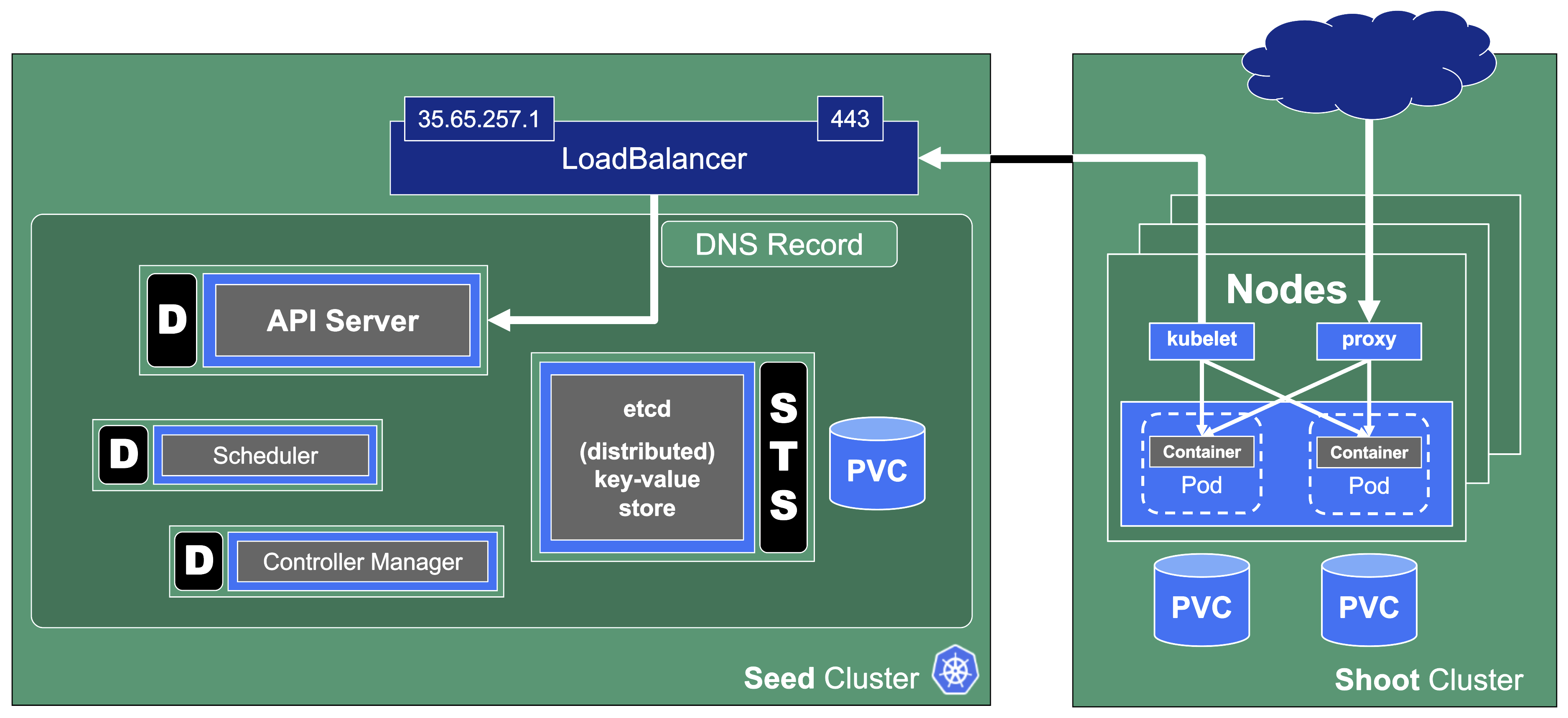

Data Plane

All nodes will be drained and the VMs will be deleted. As a result, all pods will be “stuck” in a Pending state since no new nodes are added. PVCs / PVs holding data are not deleted.

Services of type LoadBalancer will keep their external IP addresses.

Control Plane

All components will be scaled down and no pods will remain running. ETCD data is kept safe on the disk.

The DNS records routing traffic for the API server are also destroyed. Trying to connect to a hibernated cluster via kubectl will result in a DNS lookup failure / no-such-host message.

When waking up a cluster, all control plane components will be scaled up again and the DNS records will be re-created. Nodes will be created again and pods scheduled to run on them.

How to Configure / Trigger Hibernation

The easiest way to configure hibernation schedules is via the dashboard. Of course, this is reflected in the shoot’s spec and can also be maintained there. Before a cluster is hibernated, constraints in the shoot’s status will be evaluated. There might be conditions (mostly revolving around mutating / validating webhooks) that would block a successful wake-up. In such a case, the constraint will block hibernation in the first place.

To wake up or hibernate a shoot immediately, you can use the dashboard or apply a patch directly to the shoot’s spec.
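The direct patch mentioned above could look roughly like the following. This is a minimal Python sketch, assuming that the hibernation flag lives at `spec.hibernation.enabled` in the shoot’s spec (please verify the field against the Shoot API reference of your Gardener version). The helper names `hibernation_patch` and `kubectl_patch_command`, as well as the namespace and shoot name in the example, are hypothetical. The sketch only builds the command and does not run it.

```python
import json
import shlex


def hibernation_patch(enabled: bool) -> str:
    """Return a JSON merge patch that hibernates (True) or wakes up (False) a shoot."""
    # Assumption: the flag lives at spec.hibernation.enabled.
    return json.dumps({"spec": {"hibernation": {"enabled": enabled}}})


def kubectl_patch_command(namespace: str, shoot: str, enabled: bool) -> str:
    """Build (but do not execute) the kubectl command that applies the patch."""
    args = [
        "kubectl", "-n", namespace, "patch", "shoot", shoot,
        "--type", "merge", "-p", hibernation_patch(enabled),
    ]
    # shlex.quote makes the JSON payload safe to paste into a shell.
    return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    # Hypothetical namespace and shoot name.
    print(kubectl_patch_command("garden-dev", "my-shoot", True))
```

Passing `False` instead of `True` yields the corresponding wake-up command.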

2 - Workerless Shoots

Controlplane as a Service

Sometimes, there may be use cases for Kubernetes clusters that don’t require pods but only features of the control plane. Gardener can create so-called “workerless” shoots, which are exactly that: Kubernetes clusters without nodes (and without any controllers related to them).

In a scenario where you already have multiple clusters, you can use a workerless shoot for orchestration (leases) or to factor out components that require many CRDs.

As part of the control plane, the following components are deployed in the seed cluster for a workerless shoot:

- etcds

- kube-apiserver

- kube-controller-manager

- gardener-resource-manager

- Logging and monitoring components

- Extension components (to find out if they support workerless shoots, see the Extensions documentation)

3 - Credential Rotation

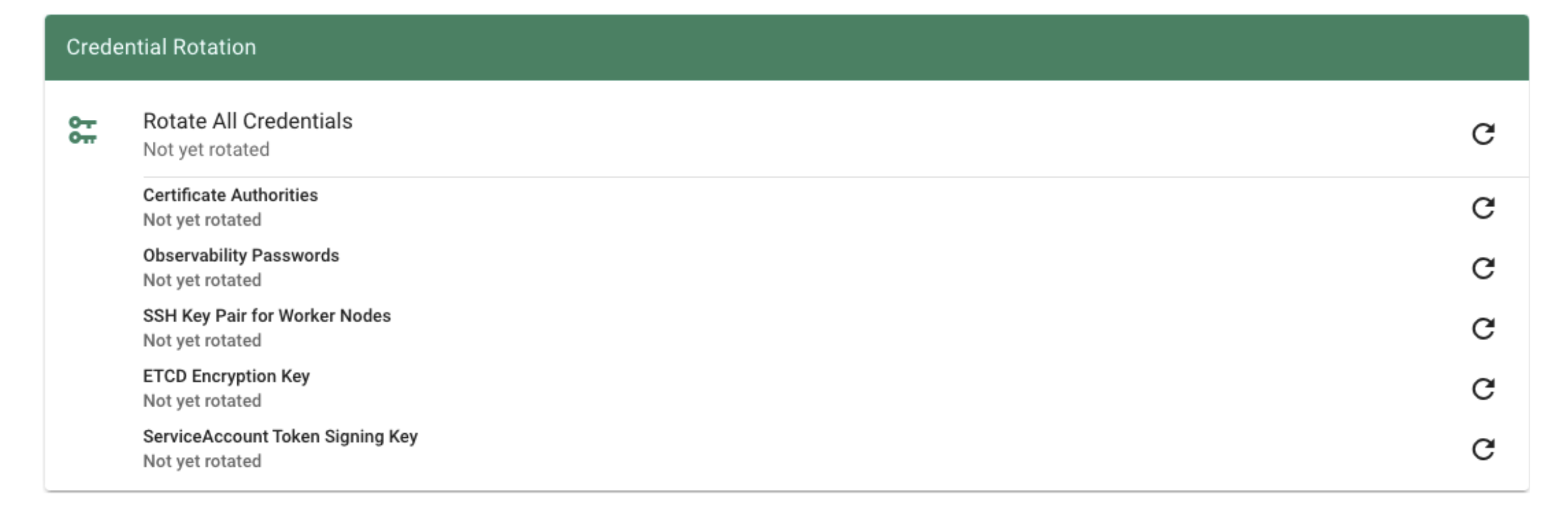

Keys

There are plenty of keys in Gardener. The ETCD needs one to store resources like secrets encrypted at rest. Gardener generates certificate authorities (CAs) to ensure secured communication between the various components and actors, and service account tokens are signed with a dedicated key. There is also an SSH key pair to allow debugging of nodes, and the observability stack has its own passwords, too.

All of these keys share a common property: they are managed by Gardener. Rotating them, however, is potentially very disruptive. Hence, Gardener does not do it automatically, but offers you means to perform these tasks easily. For a single cluster, you may conveniently use the dashboard.

Where possible, the rotation happens in two phases - Preparing and Completing.

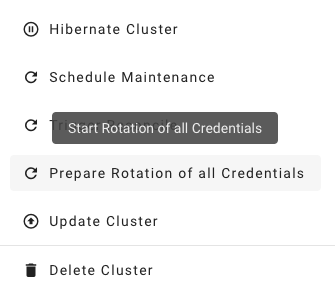

Prepare Rotation of All Credentials

The Preparing phase introduces new keys while the old ones are still valid. Users can safely exchange keys / CA bundles wherever they are used. It is possible to start the preparation by annotating the shoot resource accordingly:

kubectl -n <shoot-namespace> annotate shoot <shoot-name> gardener.cloud/operation=rotate-credentials-start

Complete Rotation of All Credentials

Afterward, the Completing phase will invalidate the old keys / CA bundles. Annotate the shoot resource accordingly:

kubectl -n <shoot-namespace> annotate shoot <shoot-name> gardener.cloud/operation=rotate-credentials-complete

Rotation Phases

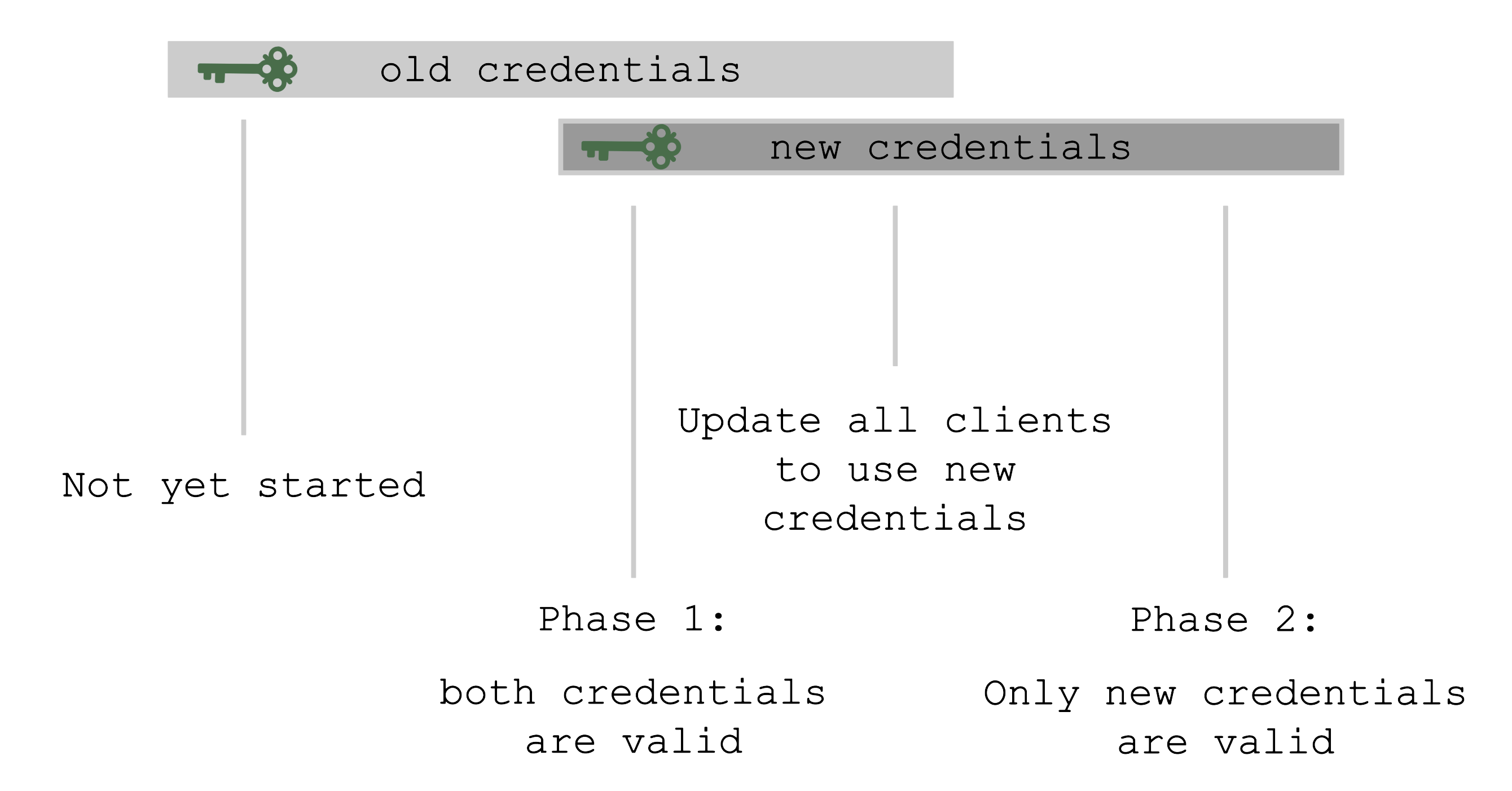

At the beginning, only the old set of credentials exists. By triggering the rotation, new credentials are created in the Preparing phase and both sets are valid. Now, all clients have to update and start using the new credentials. Only afterward is it safe to trigger the Completing phase, which invalidates the old credentials.
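The phases described above can be modeled as a small state machine. The following is an illustrative Python sketch and not Gardener’s implementation: the `Phase` enum, the labels `"old"` and `"new"`, and both helper functions are hypothetical names that only mirror the description in this section.

```python
from enum import Enum
from typing import Dict, Set


class Phase(Enum):
    INITIAL = "initial"        # before any rotation was triggered
    PREPARING = "preparing"    # after rotate-credentials-start
    COMPLETING = "completing"  # after rotate-credentials-complete


def valid_credentials(phase: Phase) -> Set[str]:
    """Return which credential sets are valid in the given phase."""
    if phase is Phase.INITIAL:
        return {"old"}
    if phase is Phase.PREPARING:
        # Both sets are valid so that clients can switch without disruption.
        return {"old", "new"}
    return {"new"}


def safe_to_complete(clients: Dict[str, str]) -> bool:
    """Completing is only safe once every client uses the new credentials."""
    return all(in_use == "new" for in_use in clients.values())


if __name__ == "__main__":
    clients = {"ci-pipeline": "new", "admin-laptop": "old"}
    print(valid_credentials(Phase.PREPARING))
    print(safe_to_complete(clients))
```

In this model, a client that still uses the old set keeps working during Preparing but would lose access as soon as Completing is triggered.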

The shoot’s status will always show the current status / phase of the rotation.

For more information, see Credentials Rotation for Shoot Clusters.

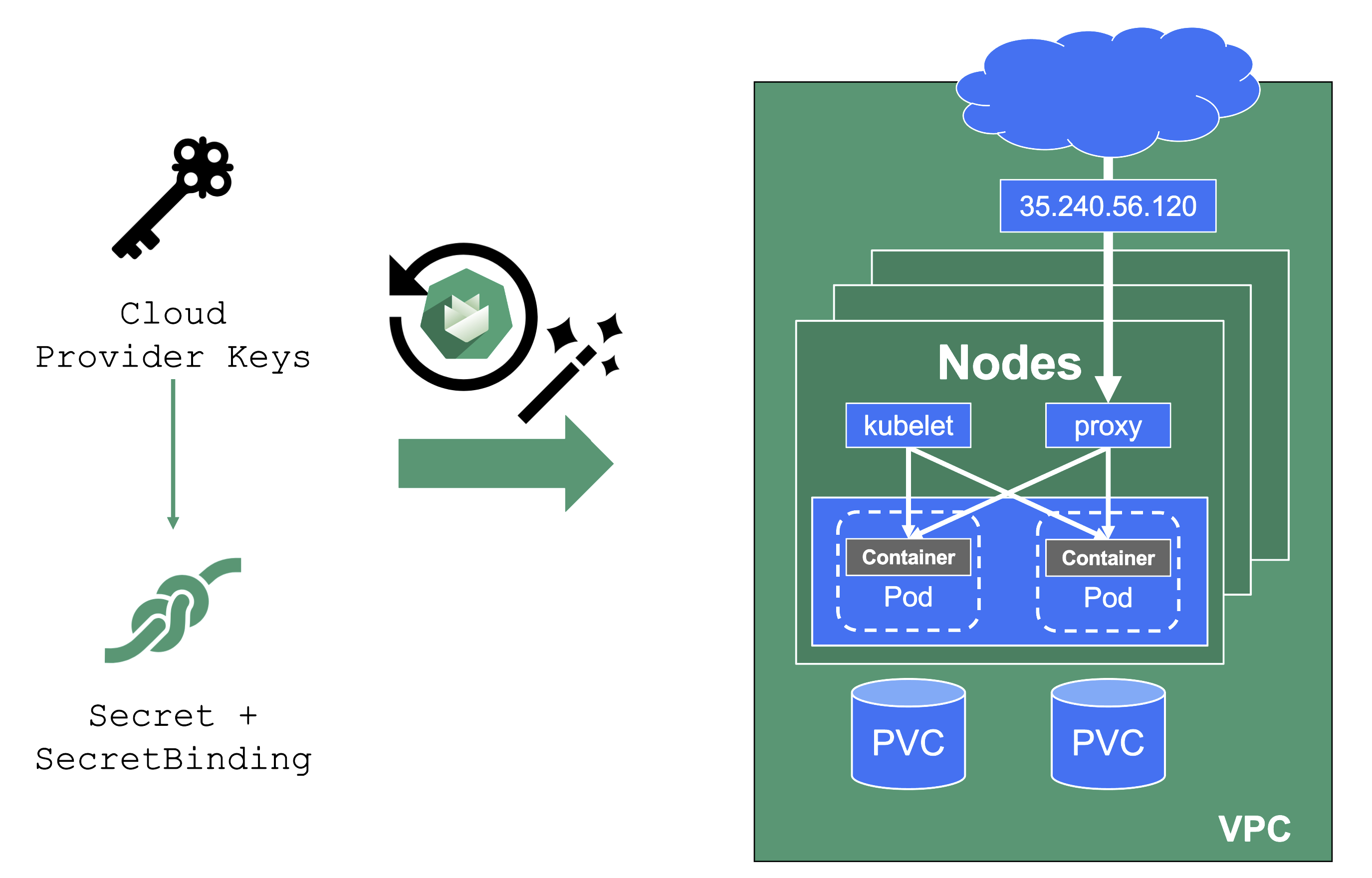

User-Provided Credentials

You grant Gardener permissions to create resources by handing over cloud provider keys. These keys are stored in a secret that the shoot references via a SecretBinding. Gardener uses the keys to create the network for the cluster resources, routes, VMs, disks, and IP addresses.

When you rotate credentials, the new keys have to be stored in the same secret and the shoot needs to reconcile successfully to ensure the replication to every controller. Afterward, the old keys can be deleted safely from Gardener’s perspective.

While the reconciliation can be triggered manually, there is no need for it (if you’re not in a hurry). Each shoot reconciles once within 24h and the new keys will be picked up during the next maintenance window.

Note

It is not possible to move a shoot to a different infrastructure account (at all!).

4 - External DNS Management

When you deploy to Kubernetes, there is no native management of external DNS. Instead, the cloud-controller-manager requests (mostly IPv4) addresses for every service of type LoadBalancer. Of course, the Ingress resource helps here, but how is the external DNS entry for the ingress controller managed?

Essentially, some sort of automation for DNS management is missing.

Automating DNS Management

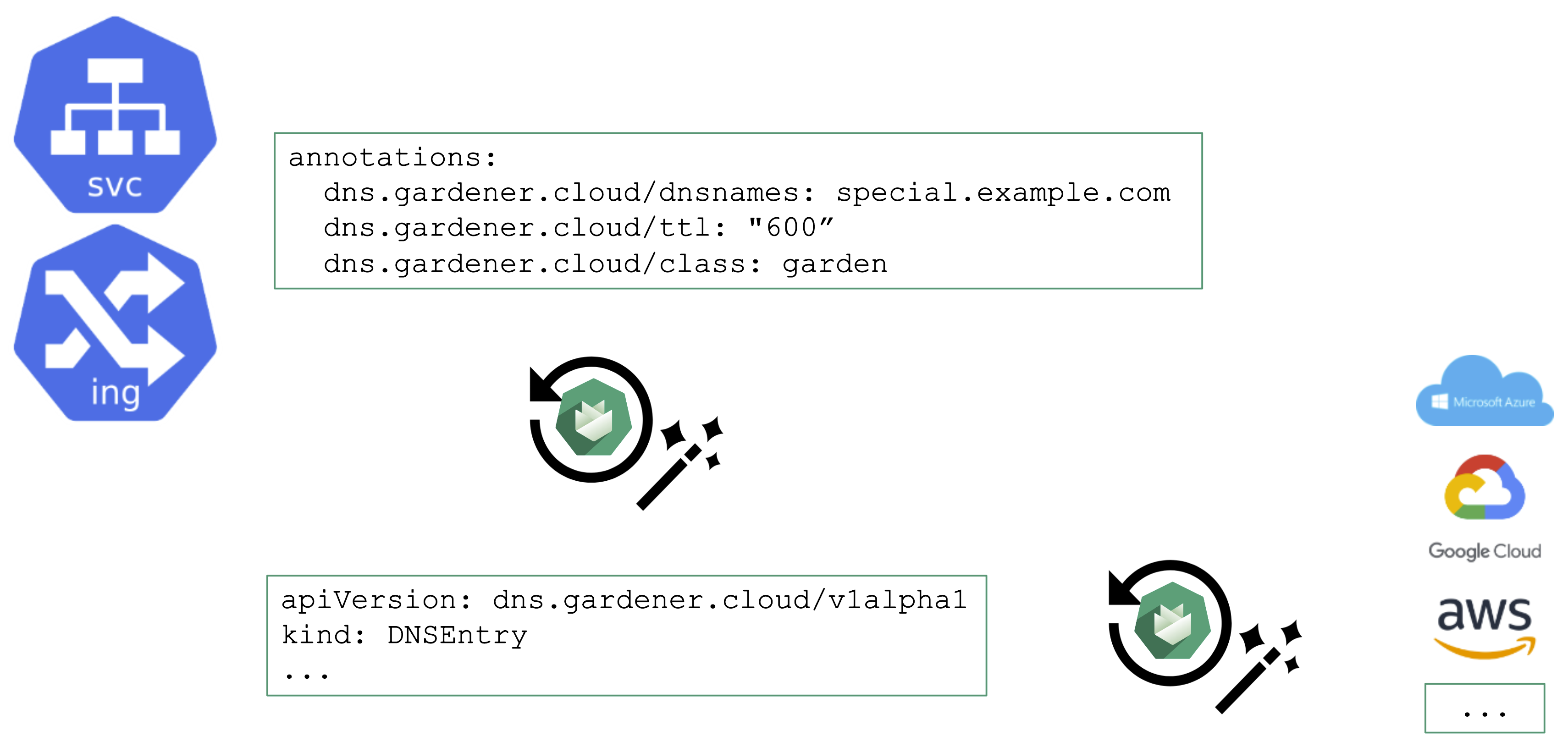

From a user’s perspective, it is desirable to work with already known resources and concepts. Hence, the DNS management offered by Gardener plugs seamlessly into Kubernetes resources and you do not need to “leave” the context of the shoot cluster.

To request the creation or update of a DNS record, a Service or Ingress resource is annotated accordingly. The shoot-dns-service extension (if configured) will pick up the request, create a DNSEntry resource, and reconcile it so that an actual DNS record is created at a configured DNS provider. Gardener supports the following providers:

- aws-route53

- azure-dns

- azure-private-dns

- google-clouddns

- openstack-designate

- alicloud-dns

- cloudflare-dns

For more information, see DNS Names.
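As a rough illustration of such an annotation request, the following Python sketch adds DNS annotations to a Service manifest represented as a plain dictionary. The annotation keys `dns.gardener.cloud/class`, `dns.gardener.cloud/dnsnames`, and `dns.gardener.cloud/ttl` are assumptions here and should be checked against the shoot-dns-service documentation. The function name and the example domain are hypothetical.

```python
import copy
from typing import List


def annotate_for_dns(service: dict, dns_names: List[str], ttl_seconds: int = 600) -> dict:
    """Return a copy of a Service manifest annotated to request DNS records."""
    annotated = copy.deepcopy(service)  # leave the caller's manifest untouched
    annotations = annotated.setdefault("metadata", {}).setdefault("annotations", {})
    # Assumed annotation keys; verify them against the extension's documentation.
    annotations["dns.gardener.cloud/class"] = "garden"
    annotations["dns.gardener.cloud/dnsnames"] = ",".join(dns_names)
    annotations["dns.gardener.cloud/ttl"] = str(ttl_seconds)
    return annotated


if __name__ == "__main__":
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "web"},
        "spec": {"type": "LoadBalancer", "ports": [{"port": 443}]},
    }
    # Hypothetical domain name.
    print(annotate_for_dns(service, ["web.example.com"])["metadata"]["annotations"])
```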

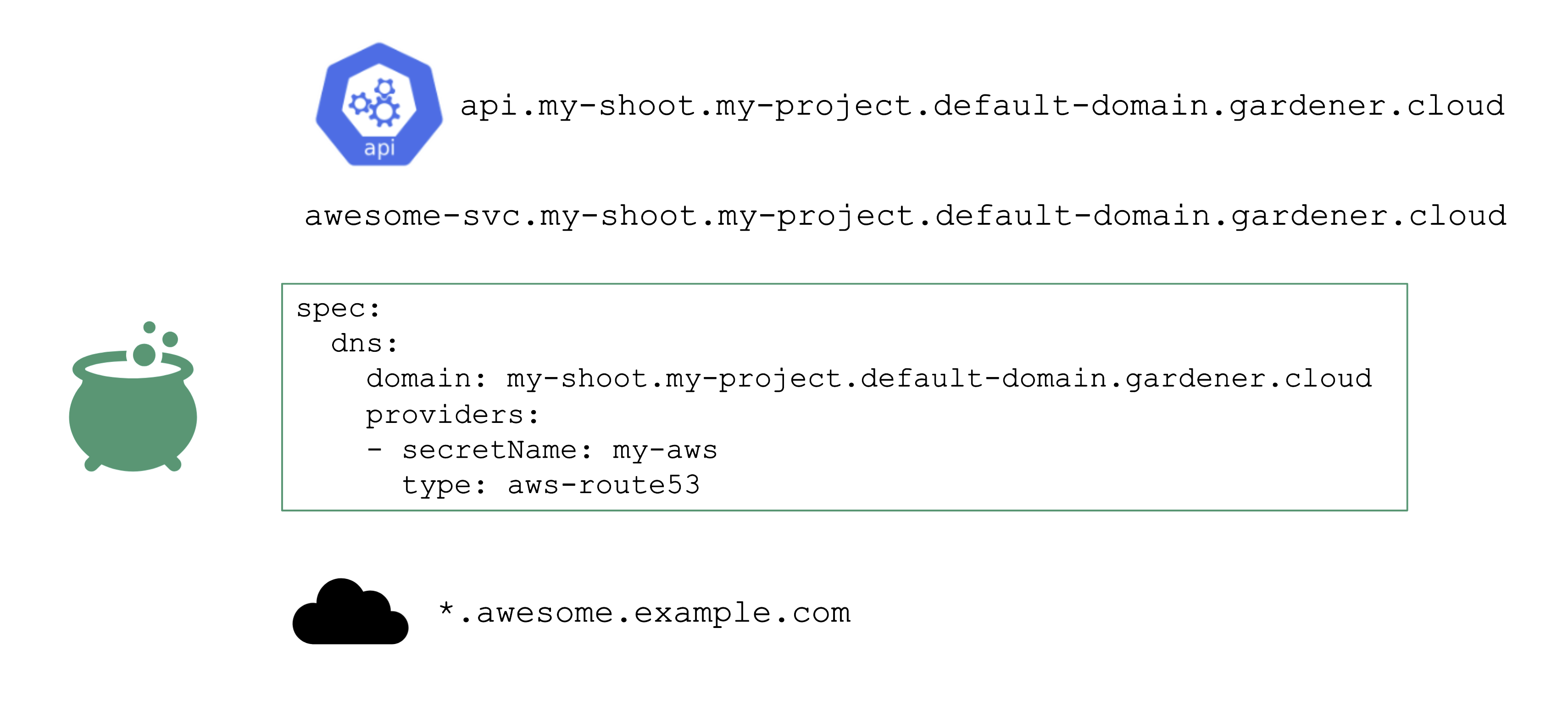

DNS Provider

For the above to work, we need some ingredients. Primarily, this is implemented via a so-called DNSProvider. Every shoot has a default provider that is used to set up the API server’s public DNS record. It can be used to request sub-domains as well.

In addition, a shoot can reference credentials to a DNS provider. Those can be used to manage custom domains.

Please have a look at the documentation for further details.

5 - Certificate Management

For proper consumption, any service should present a TLS certificate to its consumers. However, self-signed certificates are not fit for this purpose - the certificate should be signed by a CA trusted by an application’s userbase. Luckily, issuers like Let’s Encrypt and others help here by offering a signing service that issues certificates based on the ACME challenge (Automatic Certificate Management Environment).

There are plenty of tools you can use to perform the challenge. For Kubernetes, cert-manager certainly is the most common one; however, its configuration is rather cumbersome and error-prone. So let’s see how a Gardener extension can help here.

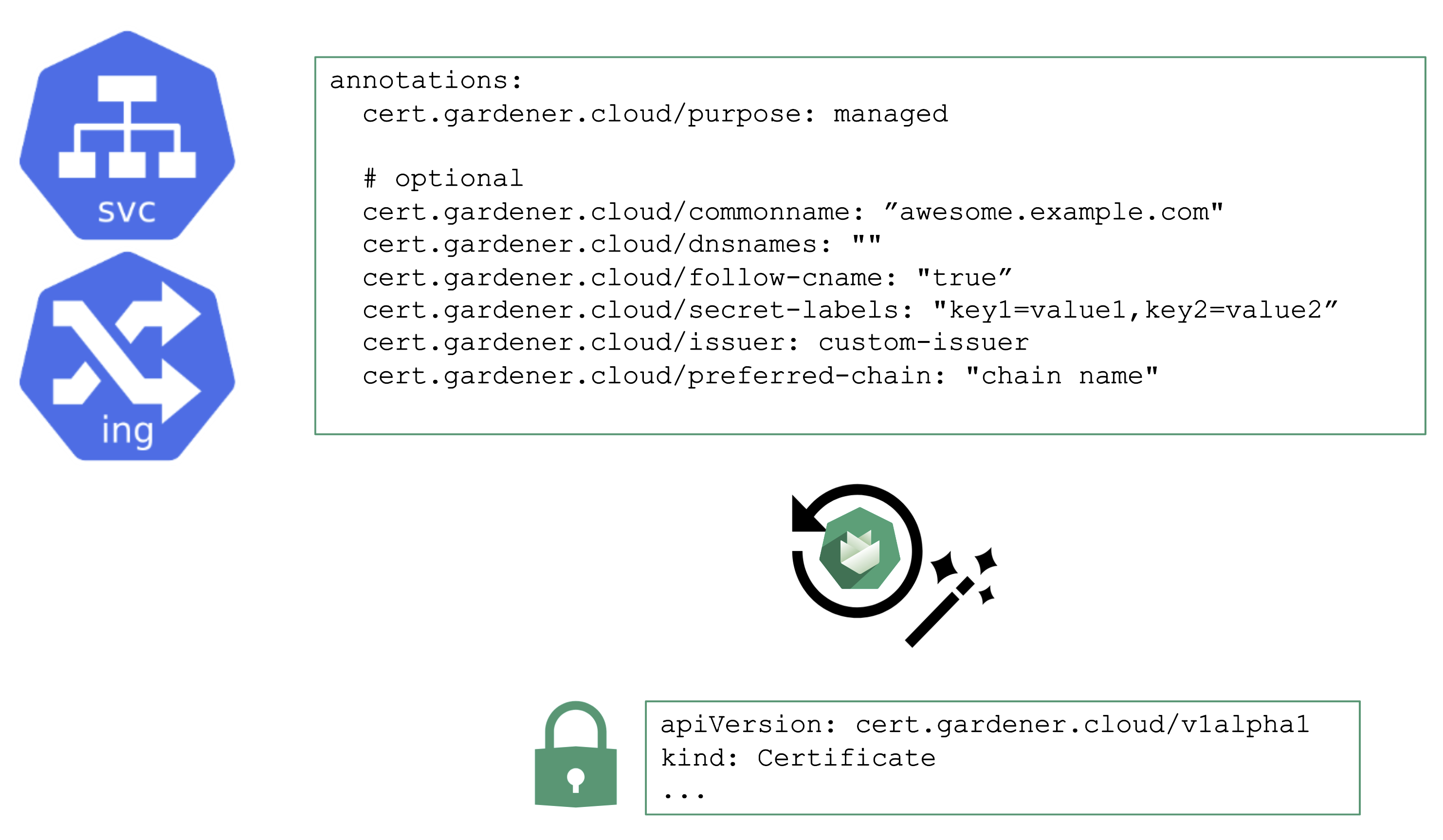

Manage Certificates with Gardener

You may annotate a Service or Ingress resource to trigger the cert-manager to request a certificate from any configured issuer (e.g. Let’s Encrypt) and perform the challenge. A Gardener operator can add a default issuer for convenience.

With the DNS extension discussed previously, setting up the DNS TXT record for the ACME challenge is fairly easy. The requested certificate can be customized by means of several other annotations known to the controller. Most notably, it is possible to specify SANs via cert.gardener.cloud/dnsnames to accommodate domain names that have more than 64 characters (the limit for the CN field).
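The 64-character limit can be handled mechanically when building the annotations. The following Python sketch shows one possible approach: names that fit into the CN field may be used as common name, while all other names are requested as SANs. Only `cert.gardener.cloud/dnsnames` is taken from the text above; the keys `cert.gardener.cloud/purpose` and `cert.gardener.cloud/commonname` are assumptions to be verified against the cert-management repository, and the function name is hypothetical.

```python
from typing import Dict, List

CN_MAX_LENGTH = 64  # limit for the CN field


def certificate_annotations(domains: List[str]) -> Dict[str, str]:
    """Build annotations that request a certificate for the given domain names."""
    if not domains:
        raise ValueError("at least one domain name is required")
    annotations = {
        # Assumption: this annotation opts the resource in to certificate management.
        "cert.gardener.cloud/purpose": "managed",
    }
    fits_cn = [d for d in domains if len(d) <= CN_MAX_LENGTH]
    if fits_cn:
        # Assumption: a common name can be requested via this annotation.
        annotations["cert.gardener.cloud/commonname"] = fits_cn[0]
    # Every name that is not used as common name is requested as a SAN.
    sans = [d for d in domains if not fits_cn or d != fits_cn[0]]
    if sans:
        annotations["cert.gardener.cloud/dnsnames"] = ",".join(sans)
    return annotations


if __name__ == "__main__":
    long_name = "a" * 61 + ".example.com"  # 73 characters, too long for the CN field
    print(certificate_annotations([long_name, "short.example.com"]))
```

If no name fits into the CN field, the sketch omits the common name entirely and requests all names as SANs.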

The user’s request for a certificate manifests as a certificate resource. The status, issuer, and other properties can be checked there.

Once successful, the resulting certificate will be stored in a secret and is ready for usage.

With additional configuration, it is also possible to define custom issuers of certificates.

For more information, see the Manage certificates with Gardener for public domain topic and the cert-management repository.

6 - Vertical Pod Autoscaler

When a pod’s CPU or memory consumption grows, it will hit a limit eventually. Either the pod has resource limits specified or the node will run short of resources. In both cases, the workload might be throttled or even terminated. When this happens, it is often desirable to increase the requests or limits. To do this autonomously within certain boundaries is the goal of the Vertical Pod Autoscaler project.

Since it is not part of the standard Kubernetes API, you have to install the CRDs and controller manually. With Gardener, you can simply flip the switch in the shoot’s spec and start creating your VPA objects.

Please be aware that VPA and HPA operate in similar domains and might interfere.

A controller & CRDs for vertical pod auto-scaling can be activated via the shoot’s spec.
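Once the feature is active (assumed here to be the `spec.kubernetes.verticalPodAutoscaler.enabled` field in the shoot’s spec; please verify it against the Shoot API reference), a VPA object can be created like any other resource. The following Python sketch builds such an object for a Deployment and prints it as JSON, which `kubectl apply -f -` accepts as well. The function name, the target name `web`, and all resource boundaries are hypothetical examples.

```python
import json


def vpa_manifest(deployment: str, namespace: str = "default") -> dict:
    """Build a VerticalPodAutoscaler object that targets a Deployment."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{deployment}-vpa", "namespace": namespace},
        "spec": {
            "targetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            # "Auto" lets the VPA apply its recommendations on its own.
            "updatePolicy": {"updateMode": "Auto"},
            "resourcePolicy": {
                "containerPolicies": [
                    {
                        "containerName": "*",
                        # Illustrative boundaries; pick values that fit your workload.
                        "minAllowed": {"cpu": "50m", "memory": "64Mi"},
                        "maxAllowed": {"cpu": "2", "memory": "2Gi"},
                    }
                ]
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(vpa_manifest("web"), indent=2))
```

The `minAllowed` and `maxAllowed` values are the “certain boundaries” within which the autoscaler may adjust the requests.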

7 - Cluster Autoscaler

Obtaining Additional Nodes

The scheduler will assign pods to nodes, as long as they have capacity (CPU, memory, Pod limit, # attachable disks, …). But what happens when all nodes are fully utilized and the scheduler does not find any suitable target?

Option 1: Evict other pods based on priority. However, this has the downside that other workloads with lower priority might become unschedulable.

Option 2: Add more nodes. There is an upstream Cluster Autoscaler project that does exactly this. It simulates the scheduling and reacts to events of pods not being schedulable. Gardener has forked it to make it work with the machine-controller-manager abstraction of how node (groups) are defined in Gardener. The cluster autoscaler respects the limits (min / max) of any worker pool in a shoot’s spec. It can also scale down nodes based on utilization thresholds. For more details, see the autoscaler documentation.

Scaling by Priority

For clusters with more than one node pool, the cluster autoscaler has to decide which group to scale up. By default, it randomly picks one of the available / applicable groups. However, this behavior is customizable by the use of so-called expanders.

This section will focus on the priority-based expander.

Each worker pool gets a priority and the cluster autoscaler will scale up the one with the highest priority until it reaches its limit.
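The selection rule can be sketched in a few lines of Python. This is a simplified model under stated assumptions and not the autoscaler’s actual code: the `WorkerPool` type and its fields are hypothetical, a higher number is assumed to mean a higher priority, and ties are resolved by taking the first candidate.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WorkerPool:
    name: str
    priority: int  # assumption: a higher value means a higher priority
    current: int   # number of nodes currently in the pool
    maximum: int   # upper limit from the shoot's spec


def pick_pool_to_scale_up(pools: List[WorkerPool]) -> Optional[WorkerPool]:
    """Pick the highest-priority pool that has not reached its maximum yet."""
    candidates = [pool for pool in pools if pool.current < pool.maximum]
    if not candidates:
        return None  # every pool is at its limit, nothing can be scaled up
    # max() returns the first candidate in case of equal priorities.
    return max(candidates, key=lambda pool: pool.priority)


if __name__ == "__main__":
    pools = [
        WorkerPool("spot", priority=50, current=3, maximum=3),
        WorkerPool("on-demand", priority=10, current=1, maximum=5),
    ]
    chosen = pick_pool_to_scale_up(pools)
    print(chosen.name if chosen else "no pool available")
```

In the example, the `spot` pool has the higher priority but is already at its limit, so the sketch falls back to `on-demand`.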

To get more information on the current status of the autoscaler, you can check a “status” configmap in the kube-system namespace with the following command:

kubectl get cm -n kube-system cluster-autoscaler-status -oyaml

To obtain information about the decision making, you can check the logs of the cluster-autoscaler pod by using the shoot’s monitoring stack.

For more information, see the cluster-autoscaler FAQ and the Priority based expander for cluster-autoscaler topic.