
Guides

1 - Set Up Client Tools

1.1 - Fun with kubectl Aliases

Speed up Your Terminal Workflow

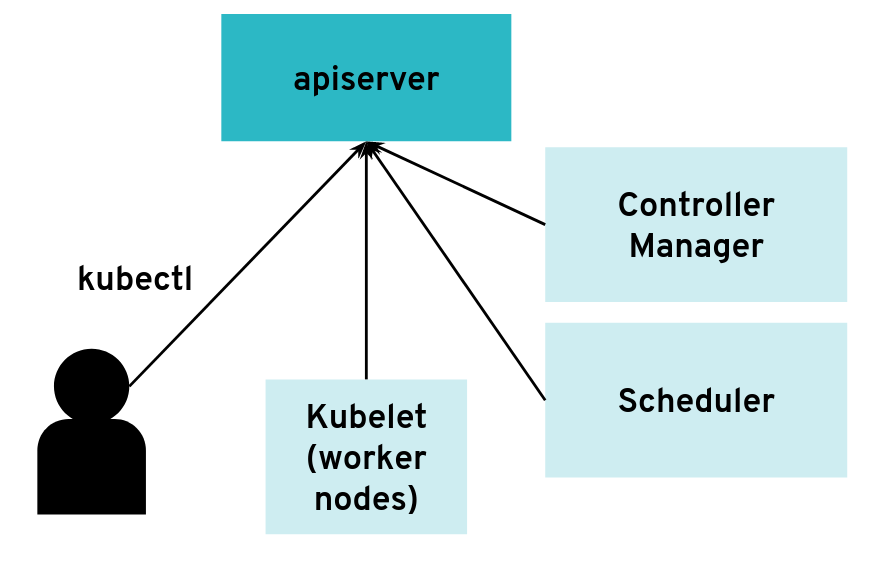

Use the Kubernetes command-line tool, kubectl, to deploy and manage applications on Kubernetes. Using kubectl, you can inspect cluster resources, as well as create, delete, and update components.

You will probably run more than a hundred kubectl commands on some days, so it pays to speed up your terminal workflow with some shortcuts. Of course, there are good shortcuts and bad shortcuts (lazy coding, lack of security review, etc.), but let’s stick with the positives and talk about a good shortcut: bash aliases in your .profile.

What are those mysterious .profile and .bash_profile files you’ve heard about?

Note

The contents of a .profile file are executed on every login of the owner of the file.

What’s the .bash_profile then? It serves the same purpose, but under a different name that only bash looks for. When bash starts as a login shell (for example, in a terminal on OS X), it looks for /etc/profile and loads it if it exists. Then it looks for ~/.bash_profile, ~/.bash_login, and finally ~/.profile, and loads the first one of these it finds.
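The lookup order described above can be sketched in a few lines. This is only an illustration of the "first file found wins" rule, not how bash is implemented; `firstExistingProfile` and its `exists` callback are hypothetical names introduced for this example.

```typescript
// Hypothetical helper: returns the first candidate for which `exists` reports true.
function firstExistingProfile(
  exists: (path: string) => boolean,
  candidates: string[] = ["~/.bash_profile", "~/.bash_login", "~/.profile"],
): string | undefined {
  return candidates.find((path) => exists(path));
}

// Example: only ~/.bash_login and ~/.profile exist, so ~/.bash_login is loaded
// and ~/.profile is ignored.
const present = new Set(["~/.bash_login", "~/.profile"]);
console.log(firstExistingProfile((path) => present.has(path))); // ~/.bash_login
```

A practical consequence: if you keep your aliases in ~/.profile and later create a ~/.bash_profile, bash stops reading ~/.profile unless the new file sources it.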

Populating the .profile File

Here are the time savers that belong in your shell profile:

# time saver number one: shortcut for kubectl
alias k="kubectl"

# start a shell in a temporary pod and delete the pod after leaving
alias ksh="kubectl run busybox -i --tty --image=busybox --restart=Never --rm -- sh"

# same as above, but opens ash (busybox does not ship bash)
alias kbash="kubectl run busybox -i --tty --image=busybox --restart=Never --rm -- ash"

# activate/export the kubeconfig.yaml in the current working directory
# (single quotes defer the evaluation of $(pwd) until the alias is used)
alias kexport='export KUBECONFIG="$(pwd)/kubeconfig.yaml"'

# usage: kurl http://your-svc.namespace.cluster.local
# we need our very own image for this... never trust an unknown image
alias kurl="docker run --rm byrnedo/alpine-curl"

All the kubectl tab completions still work fine with these aliases, so you’re not losing that speed.

Note

If the approach above does not work for you, add the following lines to your ~/.bashrc instead:

# time saver number one: shortcut for kubectl
alias k="kubectl"

# enable kubectl completion and register it for the k alias as well
source <(kubectl completion bash)
complete -o default -F __start_kubectl k

1.2 - Kubeconfig Context as bash Prompt

Overview

Use the Kubernetes command-line tool, kubectl, to deploy and manage applications on Kubernetes. Using kubectl, you can inspect cluster resources, as well as create, delete, and update components.

By default, the kubectl configuration is located at ~/.kube/config.

Let us suppose that you have two clusters, one for development work and one for scratch work.

How can you switch between them easily without always copying the configuration in use to the default location?

Export the KUBECONFIG Environment Variable

bash$ export KUBECONFIG=<PATH-TO-MY-CONFIG>/kubeconfig-dev.yaml

How to determine which cluster is used by the kubectl command?

Determine Active Cluster

bash$ kubectl cluster-info

Kubernetes master is running at https://api.dev.garden.shoot.canary.k8s-hana.ondemand.com

KubeDNS is running at https://api.dev.garden.shoot.canary.k8s-hana.ondemand.com/api/v1/proxy/namespaces/kube-system/services/kube-dns

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

bash$

Display Cluster in the bash - Linux and Alike

This tip comes from Stack Overflow and is worth adding here.

Edit your ~/.bash_profile and add the following code snippet to show the current K8s context in the shell’s prompt:

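prompt_k8s(){
  # ask kubectl for the active context; discard errors (e.g. no kubeconfig found)
  k8s_current_context=$(kubectl config current-context 2> /dev/null)
  # print the context only if kubectl succeeded
  if [[ $? -eq 0 ]] ; then echo -e "(${k8s_current_context}) "; fi
}

# single quotes make bash re-evaluate prompt_k8s each time the prompt is drawn
PS1+='$(prompt_k8s)'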

After this, your bash command prompt contains the active KUBECONFIG context and you always know which cluster is active, for example development or production.

For example:

bash$ export KUBECONFIG=/Users/d023280/Documents/workspace/gardener-ui/kubeconfig_gardendev.yaml

bash (garden_dev)$

Note the (garden_dev) prefix in the bash command prompt.

This helps immensely to avoid thoughtless mistakes.

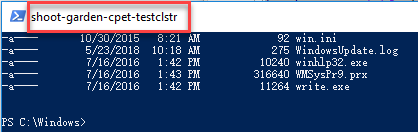

Display Cluster in the PowerShell - Windows

Display the current K8s cluster in the title of the PowerShell window.

Create a profile file for your shell under %UserProfile%\Documents\WindowsPowerShell\Microsoft.PowerShell_profile.ps1.

Copy the following code to Microsoft.PowerShell_profile.ps1:

function prompt_k8s {
    $k8s_current_context = (kubectl config current-context) | Out-String
    if($?) {
        return $k8s_current_context
    } else {
        return "No K8s context found"
    }
}

$host.ui.rawui.WindowTitle = prompt_k8s

If you want to switch to a different cluster, set KUBECONFIG to the new value and re-run the file Microsoft.PowerShell_profile.ps1.

1.3 - Organizing Access Using kubeconfig Files

Overview

The kubectl command-line tool uses kubeconfig files to find the information it needs to choose a cluster and communicate with the API server of a cluster.

Problem

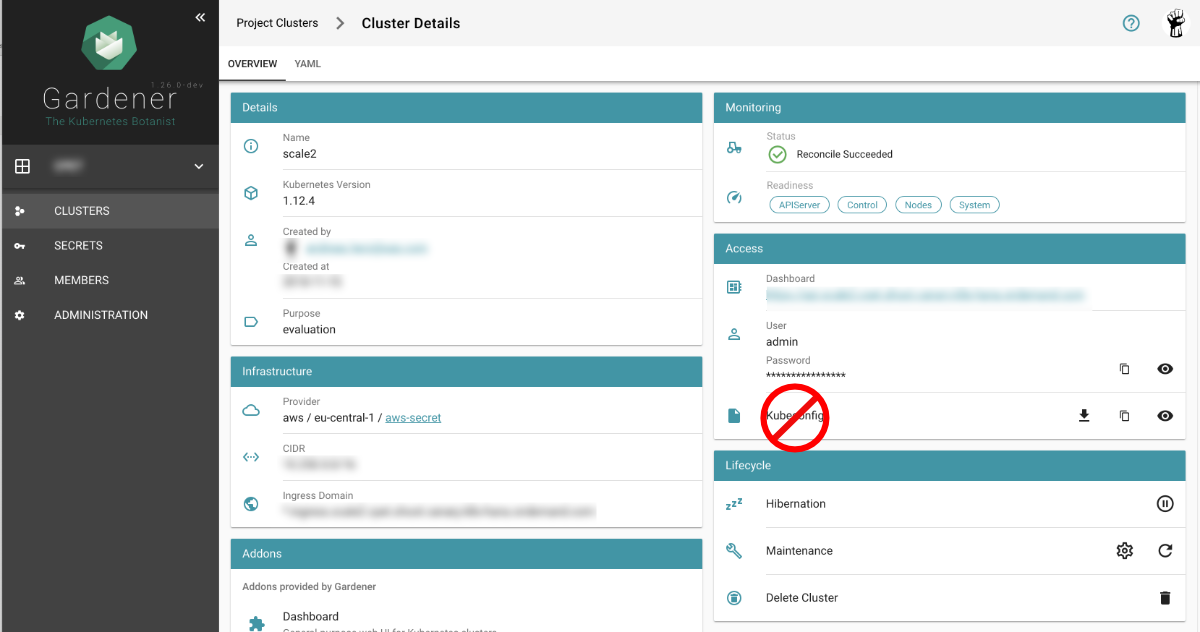

If you’ve become aware of a security breach that affects you, you may want to revoke or cycle credentials in case anything was leaked. However, this is not possible with the initial or master kubeconfig from your cluster.

Pitfall

Never distribute the kubeconfig, which you can download directly within the Gardener dashboard, for a productive cluster.

Create a Custom kubeconfig File for Each User

Create a separate kubeconfig for each user. One of the big advantages of this approach is that you can revoke each kubeconfig individually and control the permissions better. You can also restrict access to a single namespace.

The following script creates a new ServiceAccount with read privileges in the whole cluster (Secrets are excluded).

To run the script, Deno, a secure TypeScript runtime, must be installed.

#!/usr/bin/env -S deno run --allow-run

/*
 * This script creates a Kubernetes ServiceAccount and the other required resources and prints a KUBECONFIG to the console.
 * Depending on your requirements, you might want to change the clusterRoleBindingTemplate() function.
 *
 * To execute this script, you need to install Deno https://deno.land/ (TypeScript & JavaScript runtime).
 * It's a single executable binary for the major OSs from the original author of Node.js.
 * Note: Deno.run is deprecated in newer Deno releases in favor of Deno.Command.
 * example: deno run --allow-run kubeconfig-for-custom-user.ts d00001
 * example: deno run --allow-run kubeconfig-for-custom-user.ts d00001 --delete
 *
 * known issue: the shebang works under Linux but not under the Windows Subsystem for Linux
 */

const KUBECTL = "/usr/local/bin/kubectl" // or
// const KUBECTL = "C:\\Program Files\\Docker\\Docker\\resources\\bin\\kubectl.exe"

const serviceAccName = Deno.args[0]
const deleteIt = Deno.args[1]

if (serviceAccName == undefined || serviceAccName == "--delete") {
  console.log("please provide username as an argument, for example: deno run --allow-run kubeconfig-for-custom-user.ts USER_NAME [--delete]")
  Deno.exit(1)
}

if (deleteIt == "--delete") {
  // Await every call, otherwise the script exits before kubectl has finished.
  // `kubectl delete` does not print JSON, so its output is not parsed.
  await exec([KUBECTL, "delete", "serviceaccount", serviceAccName, "--ignore-not-found"], undefined, false)
  await exec([KUBECTL, "delete", "secret", `${serviceAccName}-secret`, "--ignore-not-found"], undefined, false)
  await exec([KUBECTL, "delete", "clusterrolebinding", `view-${serviceAccName}-global`, "--ignore-not-found"], undefined, false)
  Deno.exit(0)
}

await exec([KUBECTL, "create", "serviceaccount", serviceAccName, "-o", "json"])
await exec([KUBECTL, "create", "-o", "json", "-f", "-"], secretYamlTemplate())

const secret = await exec([KUBECTL, "get", "secret", `${serviceAccName}-secret`, "-o", "json"])
const caCRT = secret.data["ca.crt"]
const userToken = atob(secret.data["token"]) // decode base64

const kubeConfig = await exec([KUBECTL, "config", "view", "--minify", "-o", "json"])
const clusterApi = kubeConfig.clusters[0].cluster.server
const clusterName = kubeConfig.clusters[0].name

await exec([KUBECTL, "create", "-o", "json", "-f", "-"], clusterRoleBindingTemplate())

console.log(kubeConfigTemplate(caCRT, userToken, clusterApi, clusterName, serviceAccName + "-" + clusterName))

// Runs a command, optionally feeds stdInput to it, and returns its output
// (parsed as JSON unless parseJson is false). Throws if the command fails.
async function exec(args: string[], stdInput?: string, parseJson = true): Promise<any> {
  console.log("# " + args.join(" "))
  const p = Deno.run({ cmd: args, stdout: "piped", stderr: "piped", stdin: "piped" })
  if (stdInput != undefined) {
    await p.stdin.write(new TextEncoder().encode(stdInput))
  }
  p.stdin.close()
  // Read both pipes while waiting for the exit status, so that a full pipe cannot block the command.
  const [status, output, stderrOutput] = await Promise.all([p.status(), p.output(), p.stderrOutput()])
  if (status.code !== 0) {
    // Surface the kubectl error instead of silently returning an empty result.
    throw new Error(new TextDecoder().decode(stderrOutput))
  }
  const text = new TextDecoder().decode(output)
  return parseJson ? JSON.parse(text) : text
}

function clusterRoleBindingTemplate() {
  return `
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: view-${serviceAccName}-global
subjects:
- kind: ServiceAccount
  name: ${serviceAccName}
  namespace: default
roleRef:
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
`
}

function secretYamlTemplate() {
  return `
apiVersion: v1
kind: Secret
metadata:
  name: ${serviceAccName}-secret
  annotations:
    kubernetes.io/service-account.name: ${serviceAccName}
type: kubernetes.io/service-account-token`
}

function kubeConfigTemplate(certificateAuthority: string, token: string, clusterApi: string, clusterName: string, username: string) {
  return `
## KUBECONFIG generated on ${new Date()}
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: ${certificateAuthority}
    server: ${clusterApi}
  name: ${clusterName}
contexts:
- context:
    cluster: ${clusterName}
    user: ${username}
  name: ${clusterName}
current-context: ${clusterName}
kind: Config
preferences: {}
users:
- name: ${username}
  user:
    token: ${token}
`
}

If edit or admin rights are to be assigned, adapt the roleRef section of the ClusterRoleBinding to one of the roles listed below.

Furthermore, you can restrict access to a single namespace by creating only a RoleBinding within the desired namespace instead of a ClusterRoleBinding.

| Default ClusterRole | Default ClusterRoleBinding | Description |
|---|---|---|
| cluster-admin | system:masters group | Allows super-user access to perform any action on any resource. When used in a ClusterRoleBinding, it gives full control over every resource in the cluster and in all namespaces. When used in a RoleBinding, it gives full control over every resource in the rolebinding’s namespace, including the namespace itself. |
| admin | None | Allows admin access, intended to be granted within a namespace using a RoleBinding. If used in a RoleBinding, allows read/write access to most resources in a namespace, including the ability to create roles and rolebindings within the namespace. It does not allow write access to resource quota or to the namespace itself. |
| edit | None | Allows read/write access to most objects in a namespace. It does not allow viewing or modifying roles or rolebindings. |
| view | None | Allows read-only access to see most objects in a namespace. It does not allow viewing roles or rolebindings. It does not allow viewing secrets, since those are escalating. |
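To restrict a user to a single namespace, the script could use a RoleBinding template in place of the ClusterRoleBinding one. The following is a minimal sketch under assumptions: `roleBindingTemplate`, its parameters, and the binding name are hypothetical and not part of the original script, and the ServiceAccount is assumed to live in the `default` namespace as in the script above.

```typescript
// Hypothetical counterpart to clusterRoleBindingTemplate(): binds the ServiceAccount
// to one of the default ClusterRoles, but only within a single namespace.
function roleBindingTemplate(
  serviceAccName: string,
  namespace: string,
  role: "view" | "edit" | "admin" = "view",
): string {
  return `
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ${role}-${serviceAccName}
  namespace: ${namespace}
subjects:
- kind: ServiceAccount
  name: ${serviceAccName}
  namespace: default
roleRef:
  kind: ClusterRole
  name: ${role}
  apiGroup: rbac.authorization.k8s.io
`;
}

// Example: edit rights for user d00001, limited to the (made-up) namespace team-a.
console.log(roleBindingTemplate("d00001", "team-a", "edit"));
```

A RoleBinding may reference a ClusterRole; the permissions then apply only inside the namespace of the RoleBinding.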

2 - High Availability

2.1 - Best Practices

Implementing High Availability and Tolerating Zone Outages

Developing highly available workload that can tolerate a zone outage is no trivial task. You will find here various recommendations to get closer to that goal. While many recommendations are general enough, the examples are specific in how to achieve this in a Gardener-managed cluster and where/how to tweak the different control plane components. If you do not use Gardener, it may be still a worthwhile read.

First however, what is a zone outage? It sounds like a clear-cut “thing”, but it isn’t. There are many things that can go haywire. Here are some examples:

- Elevated cloud provider API error rates for individual or multiple services

- Network bandwidth reduced or latency increased, usually also affecting storage subsystems as they are network attached

- No networking at all, no DNS, machines shutting down or restarting, …

- Functional issues, of either the entire service (e.g. all block device operations) or only parts of it (e.g. LB listener registration)

- All services down, temporarily or permanently (the proverbial burning down data center 🔥)

This and everything in between make it hard to prepare for such events, but you can still do a lot. The most important recommendation is to not target specific issues exclusively - tomorrow another service will fail in an unanticipated way. Also, focus more on meaningful availability than on internal signals (useful, but not as relevant as the former). Always prefer automation over manual intervention (e.g. leader election is a pretty robust mechanism, auto-scaling may be required as well, etc.).

Also remember that HA is costly - you need to balance it against the cost of an outage as silly as this may sound, e.g. running all this excess capacity “just in case” vs. “going down” vs. a risk-based approach in between where you have means that will kick in, but they are not guaranteed to work (e.g. if the cloud provider is out of resource capacity). Maybe some of your components must run at the highest possible availability level, but others not - that’s a decision only you can make.

Control Plane

The Kubernetes cluster control plane is managed by Gardener (as pods in separate infrastructure clusters to which you have no direct access) and can be set up with no failure tolerance (control plane pods will be recreated best-effort when resources are available) or one of the failure tolerance types node or zone.

Strictly speaking, static workload does not depend on the (high) availability of the control plane. However, static workload doesn’t rhyme with Cloud and Kubernetes. It also means that when you possibly need it the most, e.g. during a zone outage, critical self-healing or auto-scaling functionality won’t be available to you and your workload if your control plane is down as well. That’s why, even though the resource consumption is significantly higher, we generally recommend using the failure tolerance type zone for the control planes of productive clusters, at least in all regions that have 3+ zones. Regions that have only 1 or 2 zones don’t support the failure tolerance type zone. There, your second-best option is the failure tolerance type node, which means a zone outage can still take down your control plane, but individual node outages won’t.

In the Shoot resource, this is all you need to add:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  controlPlane:
    highAvailability:
      failureTolerance:
        type: zone # valid values are `node` and `zone` (only available if your control plane resides in a region with 3+ zones)

This setting will scale out all control plane components for a Gardener cluster as necessary, so that no single zone outage can take down the control plane for longer than the few seconds it takes for the fail-over to happen (e.g. lease expiration and new leader election or readiness probe failure and endpoint removal). Components run highly available in either active-active (servers) or active-passive (controllers) mode at all times. The persistence (ETCD), which is consensus-based, will tolerate the loss of one zone and still maintain quorum and therefore remain operational. These are all patterns that we will revisit below for your own workload.

Worker Pools

Now that you have configured your Kubernetes cluster control plane in HA, i.e. spread it across multiple zones, you need to do the same for your own workload, but in order to do so, you need to spread your nodes across multiple zones first.

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  provider:
    workers:
    - name: ...
      minimum: 6
      maximum: 60
      zones:
      - ...

Prefer regions with at least 2, better 3+ zones and list the zones in the zones section for each of your worker pools. Whether you need 2 or 3 zones at a minimum depends on your fail-over concept:

- Consensus-based software components (like ETCD) depend on maintaining a quorum of (n/2)+1, so you need at least 3 zones to tolerate the outage of 1 zone.

- Primary/Secondary-based software components need just 2 zones to tolerate the outage of 1 zone.

- Then there are software components that can scale out horizontally. They are probably fine with 2 zones, but you also need to think about the load-shift and that the remaining zone must then pick up the work of the unhealthy zone. With 2 zones, the remaining zone must cope with an increase of 100% load. With 3 zones, the remaining zones must only cope with an increase of 50% load (per zone).
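The numbers in the list above follow from simple arithmetic, sketched below. The function names are made up for this illustration, the quorum uses integer division, and the load calculation assumes that the load was spread evenly across all zones before the outage.

```typescript
// Votes a consensus-based component needs to stay operational: floor(n/2) + 1.
function quorum(members: number): number {
  return Math.floor(members / 2) + 1;
}

// Number of members (here: one per zone) that may fail without losing quorum.
function toleratedFailures(members: number): number {
  return members - quorum(members);
}

// Relative load increase for each remaining zone when one of `zones` zones fails.
function loadIncreasePerZone(zones: number): number {
  if (zones < 2) throw new Error("need at least 2 zones to shift load");
  return 1 / (zones - 1);
}

for (const zones of [2, 3]) {
  console.log(
    `${zones} zones: quorum=${quorum(zones)}, tolerated failures=${toleratedFailures(zones)}, ` +
      `load increase=${Math.round(loadIncreasePerZone(zones) * 100)}%`,
  );
}
```

With 2 zones, a consensus-based component tolerates no zone failure at all, which is why it needs at least 3 zones.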

In general, the question is also whether you have the fail-over capacity already up and running or not. If not, i.e. you depend on re-scheduling to a healthy zone or auto-scaling, be aware that during a zone outage, you will see a resource crunch in the healthy zones. If you have no automation, i.e. only human operators (a.k.a. “red button approach”), you probably will not get the machines you need and even with automation, it may be tricky. But holding the capacity available at all times is costly. In the end, that’s a decision only you can make. If you made that decision, please adapt the minimum, maximum, maxSurge and maxUnavailable settings for your worker pools accordingly (visit this section for more information).

Also, consider fall-back worker pools (with different/alternative machine types) and cluster autoscaler expanders using a priority-based strategy.

Gardener-managed clusters deploy the cluster autoscaler or CA for short and you can tweak the general CA knobs for Gardener-managed clusters like this:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  kubernetes:
    clusterAutoscaler:
      expander: "least-waste"
      scanInterval: 10s
      scaleDownDelayAfterAdd: 60m
      scaleDownDelayAfterDelete: 0s
      scaleDownDelayAfterFailure: 3m
      scaleDownUnneededTime: 30m
      scaleDownUtilizationThreshold: 0.5

In addition to that, it is also possible to configure the cluster-autoscaler priority expander by adding priorities to the worker groups like this:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  provider:
    workers:
    - name: worker1
      priority: 40 # priority of this worker group
      machine:
        type: local
    - name: worker2
      machine:
        type: local

When at least one worker group has a priority defined, the respective ConfigMap that the cluster-autoscaler uses will be generated and deployed.

When only some of the worker groups have priorities configured, the priority of the remaining worker groups defaults to 0.
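The defaulting rule can be sketched as follows. This is an illustration of the behavior described above, not Gardener's implementation; the `WorkerGroup` type and both function names are assumptions made for this example.

```typescript
// Hypothetical, reduced view of a worker group: only the fields needed here.
interface WorkerGroup {
  name: string;
  priority?: number;
}

// The priority ConfigMap is only needed if at least one group sets a priority.
function needsPriorityConfig(workers: WorkerGroup[]): boolean {
  return workers.some((worker) => worker.priority !== undefined);
}

// Groups worker names by priority; groups without a priority default to 0.
function groupByPriority(workers: WorkerGroup[]): Map<number, string[]> {
  const byPriority = new Map<number, string[]>();
  for (const worker of workers) {
    const priority = worker.priority ?? 0;
    byPriority.set(priority, [...(byPriority.get(priority) ?? []), worker.name]);
  }
  return byPriority;
}

// Mirrors the Shoot example: worker1 has priority 40, worker2 has none.
const workerGroups: WorkerGroup[] = [{ name: "worker1", priority: 40 }, { name: "worker2" }];
console.log(needsPriorityConfig(workerGroups)); // true
console.log(groupByPriority(workerGroups)); // worker1 under 40, worker2 under 0
```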

If you want to be ready for a sudden spike or have some buffer in general, over-provision nodes by means of “placeholder” pods with low priority and appropriate resource requests. This way, they will demand nodes to be provisioned for them, but if any pod comes up with a regular/higher priority, the low priority pods will be evicted to make space for the more important ones. Strictly speaking, this is not related to HA, but it may be important to keep this in mind as you generally want critical components to be rescheduled as fast as possible and if there is no node available, it may take 3 minutes or longer to do so (depending on the cloud provider). Besides, not only zones can fail, but also individual nodes.

Replicas (Horizontal Scaling)

Now let’s talk about your workload. In most cases, this will mean to run multiple replicas. If you cannot do that (a.k.a. you have a singleton), that’s a bad situation to be in. Maybe you can run a spare (secondary) as backup? If you cannot, you depend on quick detection and rescheduling of your singleton (more on that below).

Obviously, things get messier with persistence. If you have persistence, you should ideally replicate your data, i.e. let your spare (secondary) “follow” your main (primary). If your software doesn’t support that, you have to deploy other means, e.g. volume snapshotting or side-backups (specific to the software you deploy; keep the backups regional, so that you can switch to another zone at all times). If you have to do those, your HA scenario becomes more a DR scenario and terms like RPO and RTO become relevant to you:

- Recovery Point Objective (RPO): Potential data loss, i.e. how much data will you lose at most (time between backups)

- Recovery Time Objective (RTO): Time until recovery, i.e. how long does it take you to be operational again (time to restore)

Also, keep in mind that your persistent volumes are usually zonal, i.e. once you have a volume in one zone, it’s bound to that zone and you cannot bring up your pod in another zone without first recreating the volume yourself (Kubernetes won’t help you here directly).

Anyway, best avoid that, if you can (from technical and cost perspective). The best solution (and also the most costly one) is to run multiple replicas in multiple zones and keep your data replicated at all times, so that your RPO is always 0 (best). That’s what we do for Gardener-managed cluster HA control planes (ETCD) as any data loss may be disastrous and lead to orphaned resources (in addition, we deploy side cars that do side-backups for disaster recovery, with full and incremental snapshots with an RPO of 5m).

So, how do you run multiple replicas? That’s the easiest part in Kubernetes and the two most important resources, Deployment and StatefulSet, support that out of the box:

apiVersion: apps/v1
kind: Deployment | StatefulSet
spec:
  replicas: ...

The problem comes with the number of replicas. It’s easy only if the number is static, e.g. 2 for active-active/passive or 3 for consensus-based software components, but what about software components that can scale out horizontally? Here you usually do not set the number of replicas statically, but make use of the horizontal pod autoscaler or HPA for short (built-in; part of the kube-controller-manager). There are also other options like the cluster proportional autoscaler, but while the former works based on metrics, the latter is more a guesstimate approach that derives the number of replicas from the number of nodes/cores in a cluster. Sometimes useful, but often blind to the actual demand.

So, HPA it is then for most of the cases. However, what is the resource (e.g. CPU or memory) that drives the number of desired replicas? Again, this is up to you, but CPU or memory are not always the best choices. In some cases, custom metrics may be more appropriate, e.g. requests per second (this was also the case for us).

You will have to create specific HorizontalPodAutoscaler resources for your scale target and can tweak the general HPA knobs for Gardener-managed clusters like this:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  kubernetes:
    kubeControllerManager:
      horizontalPodAutoscaler:
        syncPeriod: 15s
        tolerance: 0.1
        downscaleStabilization: 5m0s
        initialReadinessDelay: 30s
        cpuInitializationPeriod: 5m0s
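To get a feeling for the tolerance knob, here is a simplified sketch of the replica calculation as documented for the Kubernetes HPA: the desired count is the current count scaled by the ratio of current to target metric, and no scaling happens while that ratio stays within the tolerance around 1.0. The function name and parameters are chosen for this example; the real controller additionally accounts for not-ready pods, missing metrics, and stabilization windows.

```typescript
// Simplified HPA calculation: ceil(currentReplicas * currentMetric / targetMetric),
// skipped while the ratio is within `tolerance` of 1.0.
function desiredReplicas(
  currentReplicas: number,
  currentMetric: number,
  targetMetric: number,
  tolerance = 0.1,
): number {
  const ratio = currentMetric / targetMetric;
  if (Math.abs(ratio - 1) <= tolerance) return currentReplicas;
  return Math.ceil(currentReplicas * ratio);
}

console.log(desiredReplicas(4, 90, 60)); // 6: 50% above target, scale out
console.log(desiredReplicas(4, 63, 60)); // 4: within the 10% tolerance, no change
console.log(desiredReplicas(6, 30, 60)); // 3: half the target, scale in
```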

Resources (Vertical Scaling)

While it is important to set a sufficient number of replicas, it is also important to give the pods sufficient resources (CPU and memory). This is especially true when you think about HA. When a zone goes down, you might need to get up replacement pods, if you don’t have them running already to take over the load from the impacted zone. Likewise, e.g. with active-active software components, you can expect the remaining pods to receive more load. If you cannot scale them out horizontally to serve the load, you will probably need to scale them out (or rather up) vertically. This is done by the vertical pod autoscaler or VPA for short (not built-in; part of the kubernetes/autoscaler repository).

A few caveats though:

- You cannot use HPA and VPA on the same metrics as they would influence each other, which would lead to pod thrashing (more replicas require fewer resources; fewer resources require more replicas)

- Scaling horizontally doesn’t cause downtimes (at least not when out-scaling and only one replica is affected when in-scaling), but scaling vertically does (if the pod runs OOM anyway, but also when new recommendations are applied, resource requests for existing pods may be changed, which causes the pods to be rescheduled). Although the discussion has been going on for a very long time, changing resources in-place is not supported yet (see KEP 1287, implementation in Kubernetes, implementation in VPA).

VPA is a useful tool and Gardener-managed clusters deploy a VPA by default for you (HPA is supported anyway as it’s built into the kube-controller-manager). You will have to create specific VerticalPodAutoscaler resources for your scale target and can tweak the general VPA knobs for Gardener-managed clusters like this:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  kubernetes:
    verticalPodAutoscaler:
      enabled: true
      evictAfterOOMThreshold: 10m0s
      evictionRateBurst: 1
      evictionRateLimit: -1
      evictionTolerance: 0.5
      recommendationMarginFraction: 0.15
      updaterInterval: 1m0s
      recommenderInterval: 1m0s

While horizontal pod autoscaling is relatively straight-forward, it takes a long time to master vertical pod autoscaling. We saw performance issues, hard-coded behavior (on OOM, memory is bumped by +20% and it may take a few iterations to reach a good level), unintended pod disruptions by applying new resource requests (after 12h all targeted pods will receive new requests even though individually they would be fine without, which also drives active-passive resource consumption up), difficulties to deal with spiky workload in general (due to the algorithmic approach it takes), recommended requests may exceed node capacity, limit scaling is proportional and therefore often questionable, and more. VPA is a double-edged sword: useful and necessary, but not easy to handle.
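Two of the behaviors mentioned above can be made tangible with a little arithmetic. The sketch below only illustrates the description in this section (proportional limit scaling and the +20% memory bump after an OOM); it is not taken from the VPA code, and the function names are invented for this example.

```typescript
// Limit that keeps the original limit-to-request ratio after a new request is applied.
function proportionalLimit(oldRequest: number, oldLimit: number, newRequest: number): number {
  if (oldRequest <= 0) throw new Error("oldRequest must be positive");
  return newRequest * (oldLimit / oldRequest);
}

// Number of +20% bumps until `current` covers `needed` (same unit for both, e.g. Mi).
function oomBumpsNeeded(current: number, needed: number, bumpFactor = 1.2): number {
  if (current <= 0 || bumpFactor <= 1) throw new Error("current must be > 0 and bumpFactor > 1");
  let bumps = 0;
  for (let value = current; value < needed; value *= bumpFactor) bumps++;
  return bumps;
}

// A limit of twice the request stays at twice the request: 2 -> 4.
console.log(proportionalLimit(1, 2, 2)); // 4
// 500 -> 600 -> 720 -> 864: three OOM iterations to cover a need of 800.
console.log(oomBumpsNeeded(500, 800)); // 3
```

The second example shows why it may take a few iterations, and therefore a few pod restarts, to reach a good memory level.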

For the Gardener-managed components, we mostly removed limits. Why?

- CPU limits have almost always only downsides. They cause needless CPU throttling, which is not even easily visible. CPU requests turn into cpu shares, so if the node has capacity, the pod may consume the freely available CPU, but not if you have set limits, which curtail the pod by means of cpu quota. There are only certain scenarios in which they may make sense, e.g. if you set requests=limits and thereby define a pod with guaranteed QoS, which influences your cgroup placement. However, that is difficult to do for the components you implement yourself and practically impossible for the components you just consume, because what’s the correct value for requests/limits and will it hold true also if the load increases and what happens if a zone goes down or with the next update/version of this component? If anything, CPU limits caused outages, not helped prevent them.

- As for memory limits, they are slightly more useful, because CPU is compressible and memory is not, so if one pod runs berserk, it may take others down (with CPU, cpu shares make it as fair as possible), depending on which OOM killer strikes (a complicated topic by itself). You don’t want the operating system OOM killer to strike as the result is unpredictable. Better, it’s the cgroup OOM killer or even the kubelet’s eviction, if the consumption is slow enough as it takes priorities into consideration even. If your component is critical and a singleton (e.g. node daemon set pods), you are better off also without memory limits, because letting the pod go OOM because of artificial/wrong memory limits can mean that the node becomes unusable. Hence, such components are better run with no or only a very high memory limit, so that you can catch the occasional memory leak (bug) eventually. Under normal operation, if you cannot decide on a true upper limit, it is better to have no limits than to cause endless outages through them, or outages when you need the pods the most (during a zone outage) where all your assumptions went out of the window.

The downside of having poor or no limits and poor or no requests is that nodes may “die” more often. Contrary to expectation, even a managed service is not responsible for, and cannot guarantee, the health of a node under all circumstances, since the end user defines what is run on the nodes (shared responsibility). If the workload exhausts any resource, it will be the end of the node, e.g. by compressing the CPU too much (so that the kubelet fails to do its work), exhausting the main memory too fast, disk space, file handles, or any other resource.

The kubelet allows for explicit reservation of resources for operating system daemons (system-reserved) and Kubernetes daemons (kube-reserved) that are subtracted from the actual node resources and become the allocatable node resources for your workload/pods. All managed services configure these settings “by rule of thumb” (a balancing act), but cannot guarantee that the values won’t waste resources or always will be sufficient. You will have to fine-tune them eventually and adapt them to your needs. In addition, you can configure soft and hard eviction thresholds to give the kubelet some headroom to evict “greedy” pods in a controlled way. These settings can be configured for Gardener-managed clusters like this:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  kubernetes:
    kubelet:
      kubeReserved: # explicit resource reservation for Kubernetes daemons
        cpu: 100m
        memory: 1Gi
        ephemeralStorage: 1Gi
        pid: 1000
      evictionSoft: # soft, i.e. graceful eviction (used if the node is about to run out of resources, avoiding hard evictions)
        memoryAvailable: 200Mi
        imageFSAvailable: 10%
        imageFSInodesFree: 10%
        nodeFSAvailable: 10%
        nodeFSInodesFree: 10%
      evictionSoftGracePeriod: # caps pod's `terminationGracePeriodSeconds` value during soft evictions (specific grace periods)
        memoryAvailable: 1m30s
        imageFSAvailable: 1m30s
        imageFSInodesFree: 1m30s
        nodeFSAvailable: 1m30s
        nodeFSInodesFree: 1m30s
      evictionHard: # hard, i.e. immediate eviction (used if the node is out of resources, preventing the OS from running out of resources and failing processes indiscriminately)
        memoryAvailable: 100Mi
        imageFSAvailable: 5%
        imageFSInodesFree: 5%
        nodeFSAvailable: 5%
        nodeFSInodesFree: 5%
      evictionMinimumReclaim: # additional resources to reclaim after hitting the hard eviction thresholds to not hit the same thresholds soon after again
        memoryAvailable: 0Mi
        imageFSAvailable: 0Mi
        imageFSInodesFree: 0Mi
        nodeFSAvailable: 0Mi
        nodeFSInodesFree: 0Mi
      evictionMaxPodGracePeriod: 90 # caps pod's `terminationGracePeriodSeconds` value during soft evictions (general grace periods)
      evictionPressureTransitionPeriod: 5m0s # stabilization time window to avoid flapping of node eviction state

You can tweak these settings also individually per worker pool (spec.provider.workers.kubernetes.kubelet...), which makes sense especially with different machine types (and also workload that you may want to schedule there).
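How the reservations reduce what is left for your pods can be sketched as a simple subtraction. The sketch follows the rule from the Kubernetes documentation that allocatable resources are the node capacity minus kube-reserved, system-reserved, and the hard eviction threshold; the type, the function name, and the 16 GiB node in the example are assumptions made for illustration.

```typescript
// Memory figures of a node in Mi (hypothetical, simplified model).
interface NodeMemoryMi {
  capacity: number;
  kubeReserved: number;
  systemReserved: number;
  evictionHard: number;
}

// Allocatable memory = capacity - kube-reserved - system-reserved - hard eviction threshold.
function allocatableMemoryMi(node: NodeMemoryMi): number {
  const allocatable = node.capacity - node.kubeReserved - node.systemReserved - node.evictionHard;
  return Math.max(0, allocatable);
}

// A 16 GiB node with the memory values from the Shoot example (1Gi kube-reserved,
// 100Mi hard eviction threshold) and no system-reserved memory.
const exampleNode: NodeMemoryMi = { capacity: 16384, kubeReserved: 1024, systemReserved: 0, evictionHard: 100 };
console.log(allocatableMemoryMi(exampleNode)); // 15260
```

On small machine types the same absolute reservations take a much larger share of the node, which is one reason to tune them per worker pool.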

Physical memory is not compressible, but you can overcome this issue to some degree (alpha since Kubernetes v1.22 in combination with the feature gate NodeSwap on the kubelet) with swap memory. You can read more in this introductory blog and the docs. If you choose to use it (still only alpha at the time of this writing), you may also want to consider the risks associated with swap memory:

- Reduced performance predictability

- Reduced performance up to page thrashing

- Reduced security as secrets, normally held only in memory, could be swapped out to disk

That said, the various options mentioned above are only remotely related to HA and will not be further explored throughout this document, but just to remind you: if a zone goes down, load patterns will shift, existing pods will probably receive more load and will require more resources (especially because it is often practically impossible to set “proper” resource requests, which drive node allocation - limits are always ignored by the scheduler) or more pods will/must be placed on the existing and/or new nodes and then these settings, which are generally critical (especially if you switch on bin-packing for Gardener-managed clusters as a cost saving measure), will become even more critical during a zone outage.

Probes

Before we go down the rabbit hole even further and talk about how to spread your replicas, we need to talk about probes first, as they will become relevant later. Kubernetes supports three kinds of probes: startup, liveness, and readiness probes. If you are a visual thinker, also check out this slide deck by Tim Hockin (Kubernetes networking SIG chair).

Basically, the startupProbe and the livenessProbe help you restart the container if it’s unhealthy for whatever reason, by letting the kubelet, which orchestrates your containers on a node, know that it’s unhealthy. The former is a special case of the latter and is only applied at the startup of your container, in case you need to handle the startup phase differently (e.g. with very slow starting containers) from the rest of the container’s lifetime.

Now, the readinessProbe helps you manage the ready status of your container and thereby of your pod (any container that is not ready turns the pod not ready). This in turn has an impact on endpoints and pod disruption budgets:

- If the pod is not ready, the endpoint will be removed and the pod will not receive traffic anymore

- If the pod is not ready, it counts against the pod disruption budget, and if the budget is exceeded, no further voluntary pod disruptions will be permitted for the remaining ready pods (e.g. no eviction and no voluntary horizontal or vertical scaling; if the pod runs on a node that is being drained or is about to be drained, draining will be paused until the max drain timeout passes)

As you can see, all of these probes are (also) related to HA (mostly the readinessProbe, but depending on your workload, you can also incorporate the livenessProbe and startupProbe into your HA strategy). If Kubernetes doesn’t know about the individual status of your container/pod, it won’t do anything for you (right away). That said, something may still happen later and indirectly via the node status, which can also be ready or not ready and which influences the pods and the load balancer listener registration (a not ready node will not receive cluster traffic anymore). However, this process is global to the worker pool, reacts with a delay, and doesn’t discriminate between the containers/pods on a node.

In addition, Kubernetes also offers pod readiness gates to amend your pod readiness with additional custom conditions (normally, only the sum of the container readiness matters, but pod readiness gates additionally count into the overall pod readiness). This may be useful if you want to block (by means of pod disruption budgets that we will talk about next) the roll-out of your workload/nodes in case some (possibly external) condition fails.
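To make the three probe types described above more concrete, here is a minimal sketch that builds a container spec fragment as a plain Python dictionary and prints it as JSON (kubectl accepts JSON manifests as well as YAML). The container name, image, paths, port, and all timing values are illustrative assumptions, not recommendations. The helper only approximates how long a probe may keep failing before the kubelet reacts.

```python
import json


def probe(path, period_seconds, failure_threshold):
    """Build an HTTP probe; path and port 8080 are hypothetical."""
    return {
        "httpGet": {"path": path, "port": 8080},
        "periodSeconds": period_seconds,
        "failureThreshold": failure_threshold,
    }


def failure_budget_seconds(p):
    """Approximate time a probe may keep failing before the kubelet acts."""
    return p["periodSeconds"] * p["failureThreshold"]


container = {
    "name": "app",                          # hypothetical name
    "image": "example.invalid/app:1.0",     # hypothetical image
    # Generous budget for a slow start; liveness/readiness wait until it passes.
    "startupProbe": probe("/healthz", 10, 30),
    # Failing liveness restarts the container.
    "livenessProbe": probe("/healthz", 10, 3),
    # Failing readiness removes the pod from the endpoints and counts
    # against the pod disruption budget.
    "readinessProbe": probe("/ready", 5, 2),
}

print(json.dumps(container, indent=2))
for name in ("startupProbe", "livenessProbe", "readinessProbe"):
    print(name, failure_budget_seconds(container[name]), "seconds")
```

With these assumed values, the container gets up to roughly 300 seconds to start, while a running container is restarted after about 30 seconds of failed liveness checks and taken out of the endpoints after about 10 seconds of failed readiness checks.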

Pod Disruption Budgets

Among the most important resources that help you on your way to HA are pod disruption budgets, or PDBs for short. They tell Kubernetes how to deal with voluntary pod disruptions, e.g. during the deployment of your workload, when the nodes are rolled, or just in general when a pod shall be evicted/terminated. Basically, if the budget is reached, they block all voluntary pod disruptions (at least for a while, until possibly other timeouts act or things happen that leave Kubernetes no choice anymore, e.g. the node is forcefully terminated). You should always define them for your workload.

It is very important to note that they are based on the readinessProbe, i.e. even if all of your replicas are alive, but not enough of them are ready, this blocks voluntary pod disruptions, so they are very critical and useful. Here is an example (you can specify either minAvailable or maxUnavailable, in absolute numbers or as a percentage):

apiVersion: policy/v1
kind: PodDisruptionBudget
spec:
  maxUnavailable: 1
  selector:
    matchLabels:
      ...

And please do not specify a PDB with maxUnavailable being 0 or similar. That’s pointless, even detrimental, as it then blocks even useful operations and always forces the hard timeouts, which are less graceful, and it doesn’t make sense in the context of HA. You cannot “force” HA by preventing voluntary pod disruptions; you must work with the pod disruptions in a resilient way. Besides, PDBs are really only about voluntary pod disruptions - something bad can happen to a node/pod at any time and PDBs won’t make this reality go away for you.

PDBs will not always work as expected and can also get in your way, e.g. if the PDB is violated or would be violated, it may possibly block whatever you are trying to do to salvage the situation, e.g. drain a node or deploy a patch version (if the PDB is or would be violated, not even unhealthy pods would be evicted as they could theoretically become healthy again, which Kubernetes doesn’t know). In order to overcome this issue, it is now possible (alpha since Kubernetes v1.26 in combination with the feature gate PDBUnhealthyPodEvictionPolicy on the API server, beta and enabled by default since Kubernetes v1.27) to configure the so-called unhealthy pod eviction policy. The default is still IfHealthyBudget as a change in default would have changed the behavior (as described above), but you can now also set AlwaysAllow at the PDB (spec.unhealthyPodEvictionPolicy). For more information, please check out this discussion, the PR and this document and balance the pros and cons for yourself. In short, the new AlwaysAllow option is probably the better choice in most of the cases while IfHealthyBudget is useful only if you have frequent temporary transitions or for special cases where you have already implemented controllers that depend on the old behavior.
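The budget arithmetic and the effect of the unhealthy pod eviction policy can be sketched in a few lines of Python. This is a simplified model of the documented semantics, not the actual controller code: it handles only an absolute maxUnavailable, and the function names are hypothetical.

```python
def disruptions_allowed(expected_pods, ready_pods, max_unavailable):
    """Voluntary disruptions that a PDB with an absolute maxUnavailable still permits."""
    desired_healthy = max(expected_pods - max_unavailable, 0)
    return max(ready_pods - desired_healthy, 0)


def may_evict(pod_ready, expected_pods, ready_pods, max_unavailable,
              policy="IfHealthyBudget"):
    """Decide whether evicting one running pod is permitted (simplified model)."""
    desired_healthy = max(expected_pods - max_unavailable, 0)
    if not pod_ready:
        if policy == "AlwaysAllow":
            # Unready pods are treated as already disrupted.
            return True
        # IfHealthyBudget: unready pods may only go while the budget is intact.
        return ready_pods >= desired_healthy
    # Ready pods always need remaining budget, regardless of the policy.
    return disruptions_allowed(expected_pods, ready_pods, max_unavailable) > 0


# 3 replicas, maxUnavailable: 1
print(disruptions_allowed(3, 3, 1))  # all ready: one disruption allowed
print(disruptions_allowed(3, 2, 1))  # one pod unready: budget already used up
print(disruptions_allowed(3, 3, 0))  # maxUnavailable: 0 never allows anything
print(may_evict(False, 3, 1, 1, "IfHealthyBudget"))  # stuck unready pod stays
print(may_evict(False, 3, 1, 1, "AlwaysAllow"))      # stuck unready pod may go
```

The last two calls show the situation described above: with two of three replicas unready, the default policy blocks the eviction even of the unhealthy pods, while AlwaysAllow lets them be evicted so that a node drain can proceed.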

Pod Topology Spread Constraints

Pod topology spread constraints or PTSC for short (no official abbreviation exists, but we will use this in the following) are enormously helpful to distribute your replicas across multiple zones, nodes, or any other user-defined topology domain. They complement and improve on pod (anti-)affinities that still exist and can be used in combination.

PTSCs are an improvement because they allow for maxSkew and minDomains. You can steer the “level of tolerated imbalance” with maxSkew, e.g. you probably want that to be at least 1, so that you can perform a rolling update, but this all depends on your deployment (maxUnavailable and maxSurge), etc. Stateful sets are a bit different (maxUnavailable) as they are bound to volumes and depend on them, so there usually cannot be 2 pods requiring the same volume. minDomains is a hint that tells the scheduler how far to spread. For example, if all nodes in one zone disappear because of a zone outage, it may “appear” as if there are only 2 zones in a 3-zone cluster and the scheduling decisions may end up wrong, so a minDomains of 3 will tell the scheduler to spread to 3 zones before adding another replica in one zone. Be careful with this setting, as it also means that if one zone is down, the “spread” is already at least 1 if pods run in the other zones. The setting is useful where you have exactly as many replicas as you have zones and you do not want any imbalance. Imbalance is critical because if you end up with one, nobody is going to do the (active) re-balancing for you (unless you deploy and configure additional non-standard components such as the descheduler). So, for instance, if you have something like a DBMS that you want to spread across 2 zones (active-passive) or 3 zones (consensus-based), you should specify a minDomains of 2 or 3, respectively, to force your replicas into at least that many zones before adding more replicas to another zone (if supported).

Anyway, PTSCs are critical to have, but not perfect. We saw (unsurprisingly, because that’s how the scheduler works) that the scheduler may block the deployment of new pods because it takes its decisions pod-by-pod (see for instance #109364).
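The interplay of maxSkew and minDomains during a zone outage can be illustrated with a small Python model. It is a sketch of the documented rule for hard constraints (whenUnsatisfiable: DoNotSchedule), where the skew of a candidate zone is its pod count after placement minus the global minimum, and the global minimum is treated as 0 while fewer than minDomains zones are visible. The function name is hypothetical and the model ignores node selectors, taints, and all other scheduling factors.

```python
def placement_allowed(pods_per_zone, zone, max_skew, min_domains=None):
    """Check one candidate zone against a hard topology spread constraint.

    pods_per_zone maps every zone the scheduler currently sees to its
    number of matching pods.
    """
    global_min = min(pods_per_zone.values())
    if min_domains is not None and len(pods_per_zone) < min_domains:
        # Fewer zones visible than expected: pretend an empty zone exists.
        global_min = 0
    skew = pods_per_zone[zone] + 1 - global_min
    return skew <= max_skew


# 3-zone cluster, zone "c" is down, so the scheduler only sees "a" and "b".
visible = {"a": 1, "b": 1}

# Without minDomains, the scheduler happily stacks a second pod into zone "a".
print(placement_allowed(visible, "a", max_skew=1))
# With minDomains: 3, the same placement is rejected and the pod stays pending.
print(placement_allowed(visible, "a", max_skew=1, min_domains=3))
```

This is the trade-off mentioned above: minDomains prevents imbalance that nobody would correct later, but while a zone is missing it also keeps additional replicas pending instead of placing them in the healthy zones.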

Pod Affinities and Anti-Affinities

As said, you can combine PTSCs with pod affinities and/or anti-affinities. Especially inter-pod (anti-)affinities may be helpful to place pods apart, e.g. because they are fall-backs for each other or you do not want multiple potentially resource-hungry “best-effort” or “burstable” pods side-by-side (noisy neighbor problem), or together, e.g. because they form a unit and you want to reduce the failure domain, reduce the network latency, and reduce the costs.

Topology Aware Hints

While topology aware hints are not directly related to HA, they are very relevant in the HA context. Spreading your workload across multiple zones may increase network latency and cost significantly if the traffic is not shaped. Topology aware hints (beta since Kubernetes v1.23, replacing the now deprecated topology aware traffic routing with topology keys) help to route the traffic within the originating zone, if possible. Basically, they tell kube-proxy how to set up your routing information, so that clients can talk to endpoints that are located within the same zone.

Be aware, however, that there are some limitations. Those are called safeguards, and if they strike, the hints are off and traffic is routed randomly again. Especially controversial is the balancing limitation, as it rests on the assumption that the load that hits an endpoint is determined by the allocatable CPUs in that topology zone, but that’s not always, if even often, the case (see for instance #113731 and #110714). So, this limitation hits far too often and your hints are off, but then again, it’s about network latency and cost optimization first, so it’s better than nothing.

Networking

We have talked about networking only to some small degree so far (readiness probes, pod disruption budgets, topology aware hints). The most important component is probably your ingress load balancer - everything else is managed by Kubernetes. AWS, Azure, GCP, and also OpenStack offer multi-zonal load balancers, so make use of them. In Azure and GCP, LBs are regional whereas in AWS and OpenStack, they need to be bound to a zone, which the cloud-controller-manager does by observing the zone labels at the nodes (please note that this behavior is not always working as expected, see #570 where the AWS cloud-controller-manager is not readjusting to newly observed zones).

Please be reminded that even if you use a service mesh like Istio, the off-the-shelf installation/configuration usually does not come with production-grade settings (to simplify first-time installation and improve first-time user experience) and you will have to fine-tune your installation/configuration, much like the rest of your workload.

Relevant Cluster Settings

The following is a summary of the more relevant settings you may want to tune for Gardener-managed clusters:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  controlPlane:
    highAvailability:
      failureTolerance:
        type: zone # valid values are `node` and `zone` (only available if your control plane resides in a region with 3+ zones)
  kubernetes:
    kubeAPIServer:
      defaultNotReadyTolerationSeconds: 300
      defaultUnreachableTolerationSeconds: 300
    kubelet:
      ...
    kubeScheduler:
      featureGates:
        MinDomainsInPodTopologySpread: true
    kubeControllerManager:
      nodeMonitorGracePeriod: 40s
      horizontalPodAutoscaler:
        syncPeriod: 15s
        tolerance: 0.1
        downscaleStabilization: 5m0s
        initialReadinessDelay: 30s
        cpuInitializationPeriod: 5m0s
    verticalPodAutoscaler:
      enabled: true
      evictAfterOOMThreshold: 10m0s
      evictionRateBurst: 1
      evictionRateLimit: -1
      evictionTolerance: 0.5
      recommendationMarginFraction: 0.15
      updaterInterval: 1m0s
      recommenderInterval: 1m0s
    clusterAutoscaler:
      expander: "least-waste"
      scanInterval: 10s
      scaleDownDelayAfterAdd: 60m
      scaleDownDelayAfterDelete: 0s
      scaleDownDelayAfterFailure: 3m
      scaleDownUnneededTime: 30m
      scaleDownUtilizationThreshold: 0.5
  provider:
    workers:
    - name: ...
      minimum: 6
      maximum: 60
      maxSurge: 3
      maxUnavailable: 0
      zones:
      - ... # list of zones you want your worker pool nodes to be spread across, see above
      kubernetes:
        kubelet:
          ... # similar to `kubelet` above (cluster-wide settings), but here per worker pool (pool-specific settings), see above
      machineControllerManager: # optional, it allows to configure the machine-controller settings.
        machineCreationTimeout: 20m
        machineHealthTimeout: 10m
        machineDrainTimeout: 60h
  systemComponents:
    coreDNS:
      autoscaling:
        mode: horizontal # valid values are `horizontal` (driven by CPU load) and `cluster-proportional` (driven by number of nodes/cores)

On spec.controlPlane.highAvailability.failureTolerance.type

If set, determines the degree of failure tolerance for your control plane. zone is preferred, but only available if your control plane resides in a region with 3+ zones. See above and the docs.

On spec.kubernetes.kubeAPIServer.defaultUnreachableTolerationSeconds and defaultNotReadyTolerationSeconds

This is a very interesting API server setting that lets Kubernetes decide how fast to evict pods from nodes whose status condition of type Ready is either Unknown (node status unknown, a.k.a. unreachable) or False (kubelet not ready) (see node status conditions; please note that kubectl shows both values as NotReady, which is a somewhat “simplified” visualization).

You can also override the cluster-wide API server settings individually per pod:

spec:
  tolerations:
  - key: "node.kubernetes.io/unreachable"
    operator: "Exists"
    effect: "NoExecute"
    tolerationSeconds: 0
  - key: "node.kubernetes.io/not-ready"
    operator: "Exists"
    effect: "NoExecute"
    tolerationSeconds: 0

This will evict pods on unreachable or not-ready nodes immediately, but be cautious: 0 is very aggressive and may lead to unnecessary disruptions. Again, you must decide for your own workload and balance out the pros and cons (e.g. long startup time).

Please note, these settings replace spec.kubernetes.kubeControllerManager.podEvictionTimeout that was deprecated with Kubernetes v1.26 (and acted as an upper bound).

On spec.kubernetes.kubeScheduler.featureGates.MinDomainsInPodTopologySpread

This feature gate must be enabled for minDomains to work with PTSCs (beta since Kubernetes v1.25, but off by default). See above and the docs. minDomains tells the scheduler how many topology domains to expect (= zones in the context of this document).

On spec.kubernetes.kubeControllerManager.nodeMonitorGracePeriod

This is another very interesting kube-controller-manager setting that can help you speed up or slow down how fast a node shall be considered Unknown (node status unknown, a.k.a. unreachable) when the kubelet is not updating its status anymore (see node status conditions), which affects eviction (see spec.kubernetes.kubeAPIServer.defaultUnreachableTolerationSeconds and defaultNotReadyTolerationSeconds above). The shorter the time window, the faster Kubernetes will act, but the higher the chance of flapping behavior and pod thrashing, so you may want to balance that out according to your needs, otherwise stick to the default, which is a reasonable compromise.
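To get a feeling for how nodeMonitorGracePeriod and the toleration seconds add up, the following Python sketch estimates the time between a kubelet going silent and the eviction of its pods. It is a rough lower bound under the assumption that the two periods simply run one after the other; controller sync intervals and pod termination grace periods are ignored, and the helper names are hypothetical.

```python
import re

_UNIT_SECONDS = {"h": 3600, "m": 60, "s": 1}


def parse_duration(text):
    """Parse durations such as '40s', '5m0s', or '60h' into seconds."""
    parts = re.findall(r"(\d+)([hms])", text)
    if not parts or "".join(n + u for n, u in parts) != text:
        raise ValueError(f"unsupported duration: {text!r}")
    return sum(int(n) * _UNIT_SECONDS[u] for n, u in parts)


def seconds_until_eviction(node_monitor_grace_period, toleration_seconds):
    """Node turns Unknown after the grace period, pods go after the toleration."""
    return parse_duration(node_monitor_grace_period) + toleration_seconds


# Values from the Shoot example above: 40s grace period, 300s toleration.
print(seconds_until_eviction("40s", 300))
# Same cluster, but a pod that overrides the toleration with 0 seconds.
print(seconds_until_eviction("40s", 0))
```

With the values shown in this document, pods on an unreachable node are evicted after roughly 340 seconds, or after roughly 40 seconds if the pod sets tolerationSeconds to 0.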

On spec.kubernetes.kubeControllerManager.horizontalPodAutoscaler...

This configures horizontal pod autoscaling in Gardener-managed clusters. See above and the docs for the detailed fields.

On spec.kubernetes.verticalPodAutoscaler...

This configures vertical pod autoscaling in Gardener-managed clusters. See above and the docs for the detailed fields.

On spec.kubernetes.clusterAutoscaler...

This configures node auto-scaling in Gardener-managed clusters. See above and the docs for the detailed fields, especially about expanders, which may become life-saving in case of a zone outage when a resource crunch is setting in and everybody rushes to get machines in the healthy zones.

In case of a zone outage, it is critical to understand how the cluster autoscaler will put a worker pool in one zone into “back-off” and what the consequences for your workload will be. Unfortunately, the official cluster autoscaler documentation does not explain these details, but you can find hints in the source code:

If a node fails to come up, the node group (worker pool in that zone) will go into “back-off”, at first 5m, then exponentially longer until the maximum of 30m is reached. The “back-off” is reset after 3 hours. This in turn means that nodes must first be considered Unknown, which happens when spec.kubernetes.kubeControllerManager.nodeMonitorGracePeriod lapses (e.g. at the beginning of a zone outage). Then one of two things must happen: either the nodes remain in this state until spec.provider.workers.machineControllerManager.machineHealthTimeout lapses and they are recreated, which will fail in the unhealthy zone, or spec.kubernetes.kubeAPIServer.defaultUnreachableTolerationSeconds lapses and the pods are evicted (usually faster than node replacements, depending on your configuration). The eviction will trigger the cluster autoscaler to create more capacity, but very likely in the same zone, as it tries to balance its node groups at first, which will fail in the unhealthy zone. The node provisioning will be considered failed only when maxNodeProvisionTime lapses (usually close to spec.provider.workers.machineControllerManager.machineCreationTimeout), and only then will the cluster autoscaler put the node group into “back-off” and not retry for 5m (at first, and then exponentially longer). Only then can you expect new node capacity to be brought up somewhere else.

During the time of ongoing node provisioning (before a node group goes into “back-off”), the cluster autoscaler may have “virtually scheduled” pending pods onto those new upcoming nodes and will not reevaluate these pods anymore unless the node provisioning fails (which will fail during a zone outage, but the cluster autoscaler cannot know that and will therefore reevaluate its decision only after it has given up on the new nodes).

It’s critical to keep that in mind and account for it. If you already have capacity up and running, the reaction time is usually much faster with leases (whatever you set) or endpoints (spec.kubernetes.kubeControllerManager.nodeMonitorGracePeriod), but if you depend on new/fresh capacity, the above should inform you how long you will have to wait for it and for how long pods might be pending (because capacity is generally missing and pending pods may have been “virtually scheduled” to new nodes that won’t come up until the node group eventually goes into “back-off” and nodes in the healthy zones come up).
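The timings described above can be put into numbers with a short Python sketch. It assumes that “exponentially longer” means doubling (the text above only states the 5m start and the 30m cap) and that the individual periods run strictly one after the other, so the result is a rough lower bound rather than a prediction. The function names are hypothetical.

```python
from itertools import islice


def backoff_minutes(initial=5, maximum=30, factor=2):
    """Yield successive back-off durations (in minutes) for repeated failures.

    The doubling factor is an assumption; initial and maximum are the
    values given in the text.
    """
    duration = initial
    while True:
        yield duration
        duration = min(duration * factor, maximum)


def seconds_until_capacity_elsewhere(node_monitor_grace_s,
                                     unreachable_toleration_s,
                                     max_node_provision_s):
    """Lower bound until the autoscaler gives up on the unhealthy zone.

    Nodes turn Unknown, pods get evicted and become pending, then the
    autoscaler waits for nodes that never come up.
    """
    return node_monitor_grace_s + unreachable_toleration_s + max_node_provision_s


print(list(islice(backoff_minutes(), 5)))
# 40s grace period, 300s toleration, 20m provisioning timeout (values from above)
print(seconds_until_capacity_elsewhere(40, 300, 20 * 60))
```

With the example values from this document, pods that depend on fresh capacity would be pending for at least about 25 minutes before the cluster autoscaler starts looking at other zones, which is why already running spare capacity reacts so much faster.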

On spec.provider.workers.minimum, maximum, maxSurge, maxUnavailable, zones, and machineControllerManager

Each worker pool in Gardener may be configured differently. Among many other settings like machine type, root disk, Kubernetes version, kubelet settings, and many more you can also specify the lower and upper bound for the number of machines (minimum and maximum), how many machines may be added additionally during a rolling update (maxSurge) and how many machines may be in termination/recreation during a rolling update (maxUnavailable), and of course across how many zones the nodes shall be spread (zones).

Gardener divides minimum, maximum, maxSurge, maxUnavailable values by the number of zones specified for this worker pool. This fact must be considered when you plan the sizing of your worker pools.

Example:

provider:
  workers:
  - name: ...
    minimum: 6
    maximum: 60
    maxSurge: 3
    maxUnavailable: 0
    zones: ["a", "b", "c"]

- The resulting MachineDeployments per zone will get minimum: 2, maximum: 20, maxSurge: 1, maxUnavailable: 0.

- If another zone is added, all values will be divided by 4, resulting in:

  - Fewer workers per zone.

  - ⚠️ One MachineDeployment with maxSurge: 0, i.e. there will be a replacement of nodes without rolling updates.
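The division can be reproduced with a few lines of Python. The sketch assumes that a remainder is handed out one by one to the first zones, which matches the outcome described above (with 4 zones, exactly one MachineDeployment ends up with maxSurge: 0), but it is not taken from the Gardener source code, and the function name is hypothetical.

```python
def distribute(value, zones):
    """Split a worker pool value across zones; first zones get the remainder."""
    base, remainder = divmod(value, zones)
    return [base + (1 if index < remainder else 0) for index in range(zones)]


pool = {"minimum": 6, "maximum": 60, "maxSurge": 3, "maxUnavailable": 0}

for zones in (3, 4):
    print(f"{zones} zones:")
    for field, value in pool.items():
        print(f"  {field}: {distribute(value, zones)}")
```

Running this for 3 and 4 zones shows why adding a zone deserves a second look at the pool sizing: minimum and maxSurge no longer divide evenly, so the zones are sized differently and one of them loses its surge capacity.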

Also interesting is the configuration of Gardener’s machine-controller-manager, or MCM for short, which provisions, monitors, terminates, replaces, or updates the machines that back your nodes:

- The shorter machineCreationTimeout is, the faster MCM will retry to create a machine/node, if the process is stuck on cloud provider side. It is set to useful/practical timeouts for the different cloud providers and you probably don’t want to change those (in the context of HA at least). Please align with the cluster autoscaler’s maxNodeProvisionTime.

- The shorter machineHealthTimeout is, the faster MCM will replace machines/nodes in case the kubelet isn’t reporting back, which translates to Unknown, or reports back with NotReady, or the node-problem-detector that Gardener deploys for you reports a non-recoverable issue/condition (e.g. read-only file system). If it is too short however, you risk node and pod thrashing, so be careful.

- The shorter machineDrainTimeout is, the faster you can get rid of machines/nodes that MCM decided to remove, but this puts a cap on the grace periods and PDBs. They are respected up until the drain timeout lapses - then the machine/node will be forcefully terminated, whether or not the pods are still in termination or not even terminated because of PDBs. Those PDBs will then be violated, so be careful here as well. Please align with the cluster autoscaler’s maxGracefulTerminationSeconds.

Especially the last two settings may help you recover faster from cloud provider issues.

On spec.systemComponents.coreDNS.autoscaling

DNS is critical, in general and also within a Kubernetes cluster. Gardener-managed clusters deploy CoreDNS, a graduated CNCF project. Gardener supports 2 auto-scaling modes for it, horizontal (using HPA based on CPU) and cluster-proportional (using the cluster proportional autoscaler that scales the number of pods based on the number of nodes/cores, not to be confused with the cluster autoscaler that scales nodes based on their utilization). Check out the docs, especially the trade-offs that explain why you would choose one over the other (cluster-proportional gives you more configuration options, if CPU-based horizontal scaling is insufficient for your needs). Consider also Gardener’s node-local DNS feature to decouple you further from the DNS pods and stabilize DNS. Again, that’s not strictly related to HA, but may become important during a zone outage, when load patterns shift and pods start to initialize/resolve DNS records more frequently in bulk.

More Caveats

Unfortunately, there are a few more things of note when it comes to HA in a Kubernetes cluster that may be “surprising” and hard to mitigate:

- If the kubelet restarts, it will report all pods as NotReady on startup until it reruns its probes (#100277), which leads to temporary endpoint and load balancer target removal (#102367). This topic is somewhat controversial. Gardener uses rolling updates and a jitter to spread necessary kubelet restarts as well as possible.

- If a kube-proxy pod on a node turns NotReady, all load balancer traffic to all pods (on this node) under services with externalTrafficPolicy local will cease as the load balancer will then take this node out of serving. This topic is somewhat controversial as well. So, please remember that externalTrafficPolicy local not only has the disadvantage of imbalanced traffic spreading, but also a dependency on the kube-proxy pod that may and will be unavailable during updates. Gardener uses rolling updates to spread necessary kube-proxy updates as well as possible.

These are just a few additional considerations. They may or may not affect you, but other intricacies may. It’s a reminder to be watchful as Kubernetes may have one or two relevant quirks that you need to consider (and will probably only find out over time and with extensive testing).

Meaningful Availability

Finally, let’s go back to where we started. We recommended measuring meaningful availability. For instance, in Gardener, we do not trust only internal signals, but also track whether Gardener or the control planes that it manages are externally available through the external DNS records and load balancers, SNI-routing Istio gateways, etc. (the same path all users must take). It makes a huge difference whether the API server’s internal readiness probe passes or the user can actually reach the API server and it does what it’s supposed to do. Most likely, you will be in a similar spot and can do the same.

What you do with these signals is another matter. Maybe there are some actionable metrics and you can trigger some active fail-over, maybe you can only use them to improve your HA setup altogether. In our case, we also use them to deploy mitigations, e.g. via our dependency-watchdog that watches, for instance, Gardener-managed API servers and shuts down components like the controller managers to avert cascading knock-on effects (e.g. a melt-down if the kubelets cannot reach the API server, but the controller managers can and start taking down nodes and pods).

Either way, understanding how users perceive your service is key to the improvement process as a whole. Even if you are not struck by a zone outage, the measures above and tracking the meaningful availability will help you improve your service.

Thank you for your interest.

2.2 - Chaos Engineering

Overview

Gardener provides chaostoolkit modules to simulate compute and network outages for various cloud providers such as AWS, Azure, GCP, OpenStack/Converged Cloud, and VMware vSphere, as well as pod disruptions for any Kubernetes cluster.

The API, parameterization, and implementation are as homogeneous as possible across the different cloud providers, so that the effort on your side is minimal. As a Gardener user, you benefit from an additional garden module that leverages the generic modules, but exposes their functionality in the most simple, homogeneous, and secure way (no need to specify cloud provider credentials, cluster credentials, or filters explicitly; retrieves credentials and stores them in memory only).

Installation

The name of the package is chaosgarden and it was developed and tested with Python 3.9+. It is published on PyPI, so that you can comfortably install it via Python’s package installer pip (you may want to create a virtual environment before installing it):

pip install chaosgarden

ℹ️ If you want to use the VMware vSphere module, please note the remarks in requirements.txt for vSphere. Those are not contained in the published PyPI package.

The package can be used directly from Python scripts and supports this usage scenario with additional convenience that helps launch actions and probes in the background (more on actions and probes later), so that you can also compose complex scenarios with ease.

If this technology is new to you, you will probably prefer the chaostoolkit CLI in combination with experiment files, so we need to install the CLI next:

pip install chaostoolkit

Please verify that it was installed properly by running:

chaos --help

Usage

ℹ️ We assume you are using Gardener and run Gardener-managed shoot clusters. You can also use the generic cloud provider and Kubernetes chaosgarden modules, but configuration and secrets will then differ. Please see the module docs for details.

A Simple Experiment

The most important command is the run command, but before we can use it, we need to compile an experiment file first. Let’s start with a simple one, invoking only a read-only 📖 action from chaosgarden that lists cloud provider machines and networks (depends on cloud provider) for the “first” zone of one of your shoot clusters.

Let’s assume your project is called my-project and your shoot is called my-shoot. Then we need to create the following experiment:

{
  "title": "assess-filters-impact",
  "description": "assess-filters-impact",
  "method": [
    {
      "type": "action",
      "name": "assess-filters-impact",
      "provider": {
        "type": "python",
        "module": "chaosgarden.garden.actions",
        "func": "assess_cloud_provider_filters_impact",
        "arguments": {
          "zone": 0
        }
      }
    }
  ],
  "configuration": {
    "garden_project": "my-project",
    "garden_shoot": "my-shoot"
  }
}

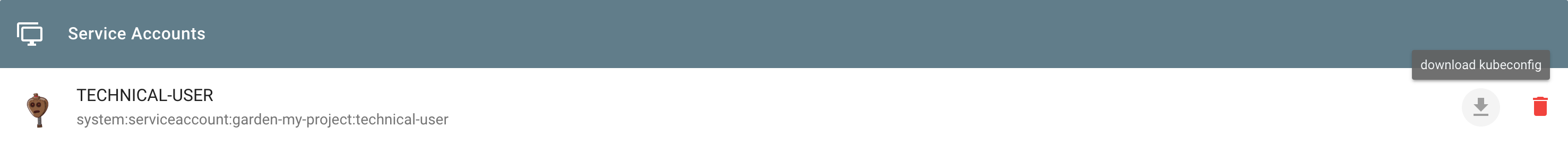

We are not there yet and need to do one more thing before we can run it: We need to “target” the Gardener landscape, i.e. the Gardener API server where you have created your shoot cluster (not to be confused with your shoot cluster API server). If you do not know what this is or how to download the Gardener API server kubeconfig, please follow these instructions. You can either download your personal credentials or project credentials (see creation of a serviceaccount) to interact with Gardener. For now (fastest and most convenient way, but generally not recommended), let’s use your personal credentials, but if you later plan to automate your experiments, please use proper project credentials (a serviceaccount is not bound to your person, but to the project, and can be restricted using RBAC roles and role bindings, which is why we recommend this for production).

To download your personal credentials, open the Gardener Dashboard and click on your avatar in the upper right corner of the page. Click “My Account”, then look for the “Access” pane, then “Kubeconfig”, then press the “Download” button and save the kubeconfig to disk. Run the following command next:

export KUBECONFIG=path/to/kubeconfig

We are now set and you can run your first experiment:

chaos run path/to/experiment

You should see output like this (depends on cloud provider):

[INFO] Validating the experiment's syntax

[INFO] Installing signal handlers to terminate all active background threads on involuntary signals (note that SIGKILL cannot be handled).

[INFO] Experiment looks valid

[INFO] Running experiment: assess-filters-impact

[INFO] Steady-state strategy: default

[INFO] Rollbacks strategy: default

[INFO] No steady state hypothesis defined. That's ok, just exploring.

[INFO] Playing your experiment's method now...

[INFO] Action: assess-filters-impact

[INFO] Validating client credentials and listing probably impacted instances and/or networks with the given arguments zone='world-1a' and filters={'instances': [{'Name': 'tag-key', 'Values': ['kubernetes.io/cluster/shoot--my-project--my-shoot']}], 'vpcs': [{'Name': 'tag-key', 'Values': ['kubernetes.io/cluster/shoot--my-project--my-shoot']}]}:

[INFO] 1 instance(s) would be impacted:

[INFO] - i-aabbccddeeff0000

[INFO] 1 VPC(s) would be impacted:

[INFO] - vpc-aabbccddeeff0000

[INFO] Let's rollback...

[INFO] No declared rollbacks, let's move on.

[INFO] Experiment ended with status: completed

🎉 Congratulations! You successfully ran your first chaosgarden experiment.

A Destructive Experiment

Now let’s break 🪓 your cluster. Be advised that this experiment will be destructive in the sense that we will temporarily network-partition all nodes in one availability zone (machine termination or restart is available with chaosgarden as well). That means these nodes and their pods won’t be able to “talk” to other nodes, pods, and services. Also, the API server will become unreachable for them and the API server will report them as unreachable (confusingly shown as NotReady when you run kubectl get nodes and Unknown in the status Ready condition when you run kubectl get nodes --output yaml).

Being unreachable will trigger service endpoint and load balancer de-registration (when the node’s grace period lapses) as well as eventually pod eviction and machine replacement (which will continue to fail under test). We won’t run the experiment long enough for all of these effects to materialize, but the longer you run it, the more will happen, up to temporarily giving up/going into “back-off” for the affected worker pool in that zone. You will also see that the Kubernetes cluster autoscaler will try to create a new machine almost immediately, if pods are pending for the affected zone (which will initially fail under test, but may succeed later, which again depends on the runtime of the experiment and whether or not the cluster autoscaler goes into “back-off” or not).

But for now, all of this doesn’t matter as we want to start “small”. You can later read up more on the various settings and effects in our best practices guide on high availability.

Please create a new experiment file, this time with this content:

{
  "title": "run-network-failure-simulation",
  "description": "run-network-failure-simulation",
  "method": [
    {
      "type": "action",
      "name": "run-network-failure-simulation",
      "provider": {
        "type": "python",
        "module": "chaosgarden.garden.actions",
        "func": "run_cloud_provider_network_failure_simulation",
        "arguments": {
          "mode": "total",
          "zone": 0,
          "duration": 60
        }
      }
    }
  ],
  "rollbacks": [
    {
      "type": "action",
      "name": "rollback-network-failure-simulation",
      "provider": {
        "type": "python",
        "module": "chaosgarden.garden.actions",
        "func": "rollback_cloud_provider_network_failure_simulation",
        "arguments": {
          "mode": "total",
          "zone": 0
        }
      }
    }
  ],
  "configuration": {
    "garden_project": {
      "type": "env",
      "key": "GARDEN_PROJECT"
    },
    "garden_shoot": {
      "type": "env",
      "key": "GARDEN_SHOOT"
    }
  }
}

ℹ️ There is an even more destructive action that terminates or alternatively restarts machines in a given zone 🔥 (immediately or delayed with some randomness/chaos for maximum inconvenience for the nodes and pods). You can find links to all these examples at the end of this tutorial.

This experiment is very similar, but this time we will break 🪓 your cluster - for 60s. If that’s too short to even see a node or pod transition from Ready to NotReady (actually Unknown), then increase the duration. Depending on the workload that your cluster runs, you may already see effects of the network partitioning, because it is effective immediately. It’s just that Kubernetes cannot know immediately and rather assumes that something is failing only after the node’s grace period lapses, but the actual workload is impacted immediately.

Most notably, this experiment also has a rollbacks section, which is invoked even if you abort the experiment or it fails unexpectedly, but only if you run the CLI with the option --rollback-strategy always, which we will do soon. Any chaosgarden action that can undo its activity will do so implicitly when the duration lapses, but it is a best practice to always configure a rollbacks section in case something unexpected happens. Should you panic and just want to run the rollbacks section, you can remove all other actions and the CLI will execute the rollbacks section immediately.
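The "remove all other actions" step can be scripted. The following is a minimal sketch that takes an experiment as a Python dictionary and returns a copy with an empty method section, so that only the rollbacks remain. The helper name rollback_only and the file names in the usage note are hypothetical; it assumes the CLI accepts an empty method list, as the paragraph above suggests.

```python
import copy
import json


def rollback_only(experiment: dict) -> dict:
    """Return a copy of the experiment that keeps only the rollbacks.

    The method key stays present but empty; the rollbacks and
    configuration sections are left untouched.
    """
    stripped = copy.deepcopy(experiment)
    stripped["method"] = []  # no actions left to run before the rollbacks
    return stripped


if __name__ == "__main__":
    # Shortened stand-in for the experiment file shown above.
    experiment = {
        "title": "run-network-failure-simulation",
        "description": "run-network-failure-simulation",
        "method": [{"type": "action", "name": "run-network-failure-simulation"}],
        "rollbacks": [{"type": "action", "name": "rollback-network-failure-simulation"}],
    }
    print(json.dumps(rollback_only(experiment), indent=2))
```

In practice you would load your real experiment with json.load, write the result to a second file (for example rollback-only.json, a made-up name), and run that file with chaos run --rollback-strategy always.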

One other thing is different in the second experiment as well. We now read the name of the project and the shoot from the environment, i.e. a configuration section can automatically expand environment variables. Also useful to know (not shown here): chaostoolkit supports variable substitution too, so that you have to define variables only once. Please note that you can also add a secrets section, which can expand environment variables in the same way. For instance, instead of targeting the Gardener API server via $KUBECONFIG, which is supported by our chaosgarden package natively, you can also explicitly refer to it in a secrets section (for brevity reasons not shown here either).
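To make the environment expansion more tangible, here is a small sketch of what resolving such a configuration section amounts to. It is not chaostoolkit's own code; the function resolve_configuration is a hypothetical illustration that only handles the {"type": "env", "key": ...} form used in the experiment above.

```python
import os


def resolve_configuration(configuration: dict, environ=os.environ) -> dict:
    """Replace {"type": "env", "key": NAME} entries with the value of NAME."""
    resolved = {}
    for name, value in configuration.items():
        if isinstance(value, dict) and value.get("type") == "env":
            key = value["key"]
            if key not in environ:
                raise KeyError(f"environment variable {key} is not set")
            resolved[name] = environ[key]
        else:
            # Plain values are passed through unchanged.
            resolved[name] = value
    return resolved


if __name__ == "__main__":
    configuration = {
        "garden_project": {"type": "env", "key": "GARDEN_PROJECT"},
        "garden_shoot": {"type": "env", "key": "GARDEN_SHOOT"},
    }
    fake_environment = {"GARDEN_PROJECT": "my-project", "GARDEN_SHOOT": "my-shoot"}
    print(resolve_configuration(configuration, fake_environment))
```

Passing the environment as a parameter keeps the sketch easy to try out without touching your real shell variables.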

Let’s now run your second experiment (please watch your nodes and pods in parallel, e.g. by running watch kubectl get nodes,pods --output wide in another terminal):

export GARDEN_PROJECT=my-project

export GARDEN_SHOOT=my-shoot

chaos run --rollback-strategy always path/to/experiment

The output of the run command will be similar to the one above, but longer. Depending on the cloud provider, it will mention either the machines or the networks that were network-partitioned, and it should revert everything back to normal at the end.

Normally, you would not only run actions in the method section, but also probes as part of a steady state hypothesis. Such steady state hypothesis probes are run before and after the actions to validate that the “system” was in a healthy state before and gets back to a healthy state after the actions ran, hence show that the “system” is in a steady state when not under test. Eventually, you will write your own probes that don’t even have to be part of a steady state hypothesis. We at Gardener run multi-zone (multiple zones at once) and rolling-zone (strike each zone once) outages with continuous custom probes all within the method section to validate our KPIs continuously under test (e.g. how long do the individual fail-overs take/how long is the actual outage). The most complex scenarios are even run via Python scripts as all actions and probes can also be invoked directly (which is what the CLI does).
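As a rough sketch of how a steady state hypothesis could be attached to an experiment, the snippet below adds a steady-state-hypothesis section with one probe to an experiment dictionary. The module my_probes and its function all_nodes_ready are hypothetical placeholders for a probe you would write yourself; they are not part of chaosgarden.

```python
import copy
import json

# Hypothetical probe: "my_probes.all_nodes_ready" stands for your own
# Python function that returns True when the cluster looks healthy.
NODES_READY_PROBE = {
    "type": "probe",
    "name": "all-nodes-ready",
    "tolerance": True,  # the probe result must equal this value
    "provider": {
        "type": "python",
        "module": "my_probes",
        "func": "all_nodes_ready",
    },
}


def add_steady_state_hypothesis(experiment: dict, title: str, probes: list) -> dict:
    """Return a copy of the experiment with a steady state hypothesis added."""
    extended = copy.deepcopy(experiment)
    extended["steady-state-hypothesis"] = {"title": title, "probes": probes}
    return extended


if __name__ == "__main__":
    experiment = {"title": "run-network-failure-simulation", "method": []}
    extended = add_steady_state_hypothesis(
        experiment, "cluster-is-healthy", [NODES_READY_PROBE]
    )
    print(json.dumps(extended, indent=2))
```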

High Availability

Developing a highly available workload that can tolerate a zone outage is no trivial task. You can find more information on how to achieve this goal in our best practices guide on high availability.

Thank you for your interest in Gardener chaos engineering and making your workload more resilient.

Further Reading

Here are some links for further reading:

- Examples: Experiments, Scripts

- Gardener Chaos Engineering: GitHub, PyPI, Module Docs for Gardener Users

- Chaos Toolkit Core: Home Page, Installation, Concepts, GitHub

2.3 - Control Plane

Failure tolerance types node and zone. Possible mitigations for zone or node outages

Highly Available Shoot Control Plane

The Shoot resource offers a way to request a highly available control plane.

Failure Tolerance Types

A highly available shoot control plane can be set up with a failure tolerance of either zone or node.

Node Failure Tolerance

The failure tolerance of a node will have the following characteristics:

- Control plane components will be spread across different nodes within a single availability zone. There will not be more than one replica per node for each control plane component which has more than one replica.

- Worker pool should have a minimum of 3 nodes.

- A multi-node etcd (quorum size of 3) will be provisioned, offering zero-downtime capabilities with each member in a different node within a single availability zone.

Zone Failure Tolerance

The failure tolerance of a zone will have the following characteristics:

- Control plane components will be spread across different availability zones. There will be at least one replica per zone for each control plane component which has more than one replica.

- Gardener scheduler will automatically select a seed which has a minimum of 3 zones to host the shoot control plane.

- A multi-node etcd (quorum size of 3) will be provisioned, offering zero-downtime capabilities with each member in a different zone.
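Both failure tolerance types provision a three-member etcd. Assuming etcd's usual majority rule (a write needs more than half of the members), the arithmetic below sketches why three members survive the loss of one node or zone, but not of two. The function names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members may fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for members in (1, 3):
        print(
            f"{members} member(s): majority={quorum(members)}, "
            f"tolerated failures={tolerated_failures(members)}"
        )
```

A single-member etcd tolerates no failure at all, while three members spread over three nodes or zones keep a majority when one of them is lost.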

Shoot Spec

To request a highly available shoot control plane, Gardener provides the following configuration in the shoot spec:

apiVersion: core.gardener.cloud/v1beta1
kind: Shoot
spec:
  controlPlane:
    highAvailability:
      failureTolerance:
        type: <node | zone>

Allowed Transitions

If you already have a shoot cluster with a non-HA control plane, then the following upgrades are possible:

- Upgrade of non-HA shoot control plane to HA shoot control plane with node failure tolerance.

- Upgrade of non-HA shoot control plane to HA shoot control plane with zone failure tolerance. However, it is essential that the seed which is currently hosting the shoot control plane should be multi-zonal. If it is not, then the request to upgrade will be rejected.

Note: There will be a small downtime during the upgrade, especially for etcd, which will transition from a single node etcd cluster to a multi-node etcd cluster.

Disallowed Transitions

If you already have a shoot cluster with an HA control plane, then the following transitions are not possible:

- Upgrade of HA shoot control plane from node failure tolerance to zone failure tolerance is currently not supported, mainly because already existing volumes are bound to the zone they were created in originally.

- Downgrade of HA shoot control plane with zone failure tolerance to node failure tolerance is currently not supported, mainly because of the same reason as above, that already existing volumes are bound to the respective zones they were created in originally.

- Downgrade of HA shoot control plane with either node or zone failure tolerance, to a non-HA shoot control plane is currently not supported, mainly because etcd-druid does not currently support scaling down of a multi-node etcd cluster to a single-node etcd cluster.
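The allowed and disallowed transitions above can be summarized in a small lookup. This is only an illustrative sketch of the rules as described in this section, not Gardener's actual validation code; the function transition_allowed and its parameters are hypothetical, and None stands for a non-HA control plane.

```python
from typing import Optional

# (current, target) pairs that the section above lists as possible upgrades.
ALLOWED_TRANSITIONS = {(None, "node"), (None, "zone")}


def transition_allowed(
    current: Optional[str],
    target: Optional[str],
    seed_is_multi_zonal: bool = False,
) -> bool:
    """Check a failure tolerance change against the rules described above."""
    if current == target:
        return True  # nothing changes
    if (current, target) not in ALLOWED_TRANSITIONS:
        return False  # node <-> zone and any downgrade to non-HA
    if target == "zone":
        # The seed currently hosting the control plane must be multi-zonal.
        return seed_is_multi_zonal
    return True


if __name__ == "__main__":
    print(transition_allowed(None, "node"))
    print(transition_allowed(None, "zone", seed_is_multi_zonal=False))
    print(transition_allowed("node", "zone", seed_is_multi_zonal=True))
```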

Zone Outage Situation

Implementing highly available software that can tolerate even a zone outage unscathed is no trivial task. You may find our HA Best Practices helpful to get closer to that goal. In this document, we collected many options and settings for you that Gardener also uses internally to provide a highly available service.

During a zone outage, you may be forced to change your cluster setup on short notice in order to compensate for failures and shortages resulting from the outage.

For instance, if the shoot cluster has worker nodes across three zones where one zone goes down, the computing power from these nodes is also gone during that time.

Changing the worker pool (shoot.spec.provider.workers[]) and infrastructure (shoot.spec.provider.infrastructureConfig) configuration can eliminate this imbalance, so that there are enough machines in the healthy availability zones to cope with the requests of your applications.
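As a back-of-the-envelope aid for such a change, the sketch below estimates how many machines each healthy zone would need to carry the same total number of machines. It is a deliberately simple assumption (evenly spread, identical machines) and ignores quotas, pod scheduling constraints, and autoscaler limits; the function name is hypothetical.

```python
import math


def machines_per_healthy_zone(total_machines: int, zones: int, failed_zones: int) -> int:
    """Machines each remaining zone needs to keep the total capacity."""
    healthy_zones = zones - failed_zones
    if healthy_zones <= 0:
        raise ValueError("no healthy zone left")
    # Round up so that the remaining zones cover at least the original total.
    return math.ceil(total_machines / healthy_zones)


if __name__ == "__main__":
    # Nine machines over three zones (three per zone), one zone down.
    print(machines_per_healthy_zone(total_machines=9, zones=3, failed_zones=1))
```

For nine machines spread over three zones, losing one zone means each of the two healthy zones would need five machines instead of three to keep the original capacity.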

Gardener relies on a sophisticated reconciliation flow with several dependencies for which various flow steps wait for the readiness of prior ones.

During a zone outage, this can block the entire flow, e.g., because all three etcd replicas can never be ready when a zone is down, and required changes mentioned above can never be accomplished.

For this, a special one-off annotation shoot.gardener.cloud/skip-readiness helps to skip any readiness checks in the flow.

The shoot.gardener.cloud/skip-readiness annotation serves as a last resort if reconciliation is stuck because of important changes during an AZ outage. Use it with caution, only in exceptional cases and after a case-by-case evaluation with your Gardener landscape administrator. If used together with other operations like Kubernetes version upgrades or credential rotation, the annotation may lead to a severe outage of your shoot control plane.

3 - Networking

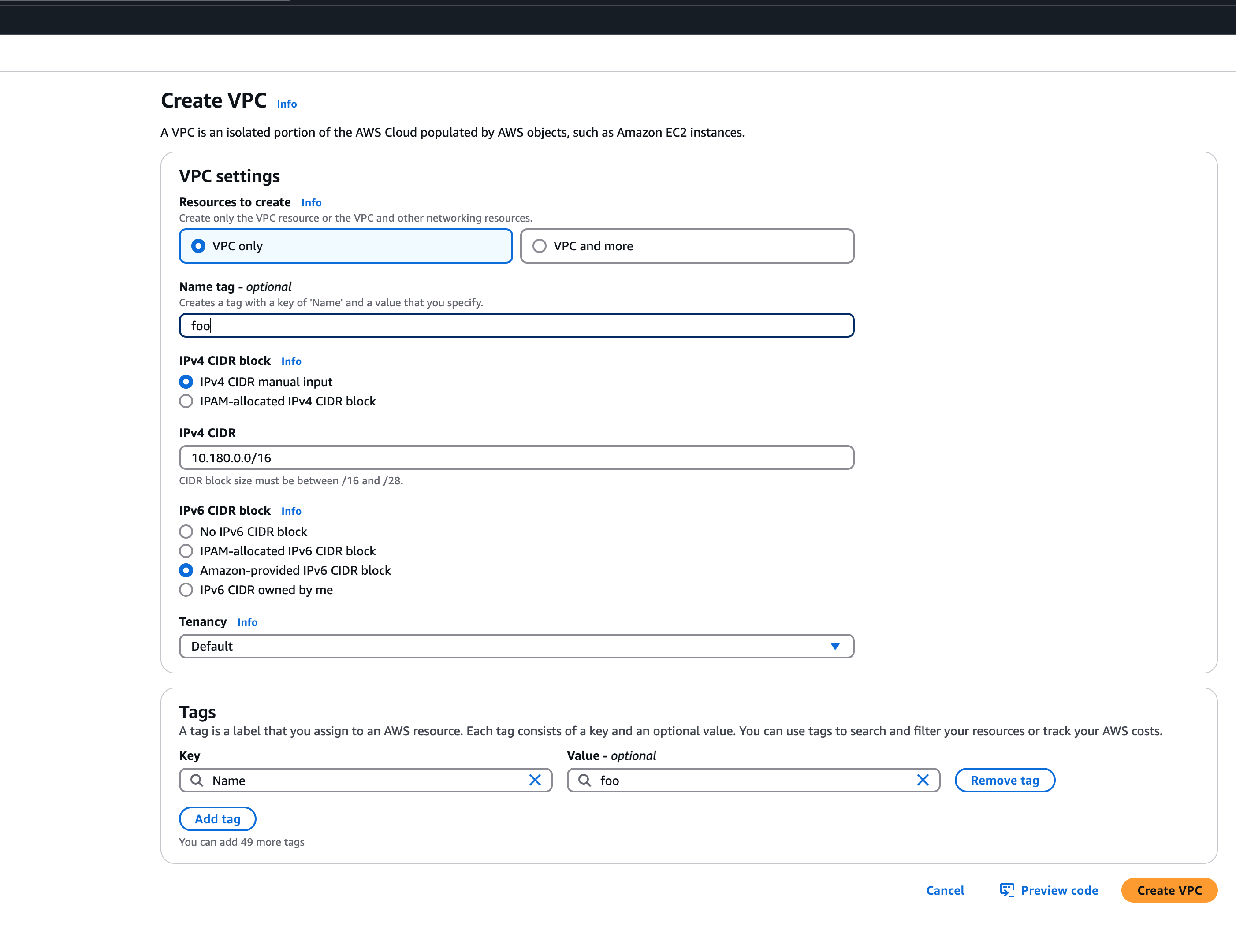

3.1 - Enable IPv4/IPv6 (dual-stack) Ingress on AWS

Using IPv4/IPv6 (dual-stack) Ingress in an IPv4 single-stack cluster

Motivation

IPv6 adoption is continuously growing, already overtaking IPv4 in certain regions, e.g. India, or scenarios, e.g. mobile. Even though most IPv6 installations deploy means to reach IPv4, it might still be beneficial to expose services natively via IPv4 and IPv6 instead of just relying on IPv4.

Disadvantages of full IPv4/IPv6 (dual-stack) Deployments

Enabling full IPv4/IPv6 (dual-stack) support in a Kubernetes cluster is a major endeavor. It requires a lot of changes and restarts of all pods so that all pods get addresses for both IP families. A side-effect of dual-stack networking is that failures may be hidden, as network traffic may take the other protocol to reach the target. For this reason, and also because it reduces operational complexity, service teams might lean towards staying in a single-stack environment as much as possible. Luckily, this is possible with Gardener and IPv4/IPv6 (dual-stack) ingress on AWS.

Simplifying IPv4/IPv6 (dual-stack) Ingress with Protocol Translation on AWS

Fortunately, the network load balancer on AWS supports automatic protocol translation, i.e. it can expose both IPv4 and IPv6 endpoints while communicating with the backends over just one protocol. Client IP address preservation can be achieved by using proxy protocol.

This approach enables users to expose IPv4 workload to IPv6-only clients without having to change the workload/service. Without requiring invasive changes, it allows a fairly simple first step into the IPv6 world for services just requiring ingress (incoming) communication.

Necessary Shoot Cluster Configuration Changes for IPv4/IPv6 (dual-stack) Ingress

To be able to utilize IPv4/IPv6 (dual-stack) Ingress in an IPv4 shoot cluster, the cluster needs to meet two preconditions:

- dualStack.enabled needs to be set to true to configure VPC/subnet for IPv6 and add a routing rule for IPv6. (This does not add IPv6 addresses to Kubernetes nodes.)

- loadBalancerController.enabled needs to be set to true as well to use the load balancer controller, which supports dual-stack ingress.

apiVersion: core.gardener.cloud/v1beta1

kind: Shoot

...

spec:

provider:

type: aws

infrastructureConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: InfrastructureConfig

dualStack:

enabled: true

controlPlaneConfig: