Other Components

1 - Dependency Watchdog

Dependency Watchdog

Overview

Dependency Watchdog actively looks out for disruption and recovery of critical services. If there is a disruption, it prevents cascading failure by conservatively scaling down dependent configured resources. If a critical service has just recovered, it expedites the recovery of dependent services/pods.

Avoiding cascading failure is handled by the Prober component, and expediting recovery of dependent services/pods is handled by the Weeder component. These are deployed separately as individual pods.

Current Limitation & Future Scope

In the current offering the Prober is tailored to handle one such use case, kube-apiserver connectivity. However, the usage of the prober can be extended to solve similar needs for other scenarios where the components involved might be different.

Start using or developing the Dependency Watchdog

See our documentation in the /docs repository. Please find the index here.

Feedback and Support

We always look forward to active community engagement.

Please report bugs or suggestions on how we can enhance dependency-watchdog to address additional recovery scenarios on GitHub issues.

1.1 - Concepts

1.1.1 - Prober

Prober

Overview

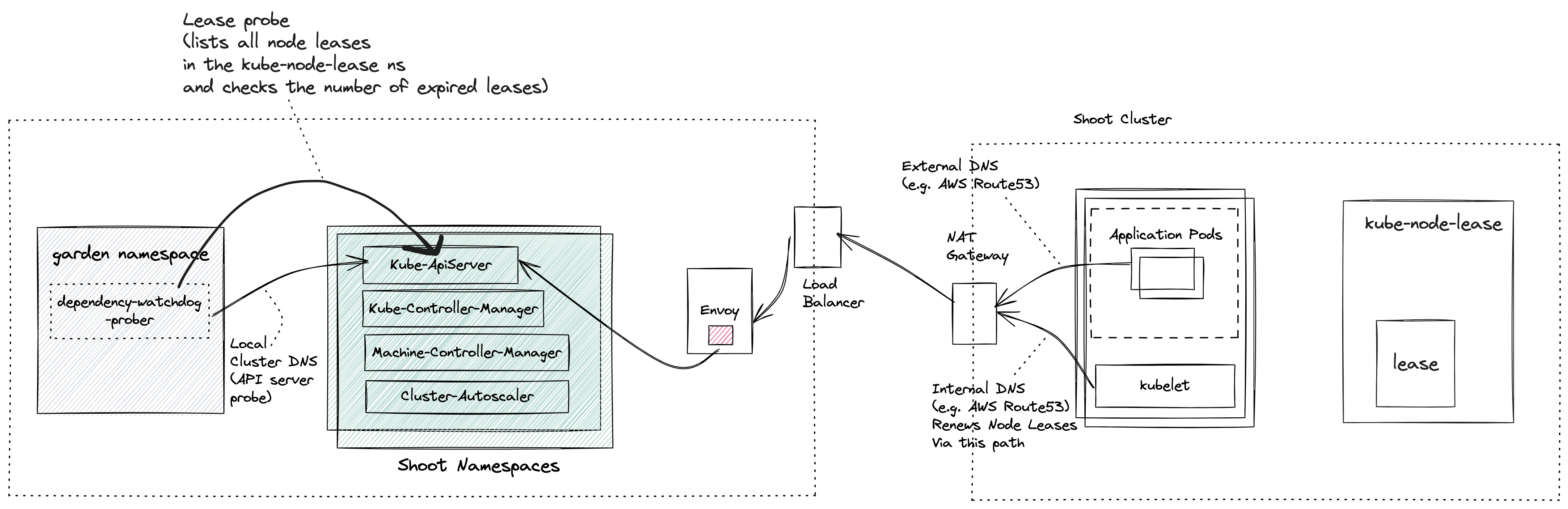

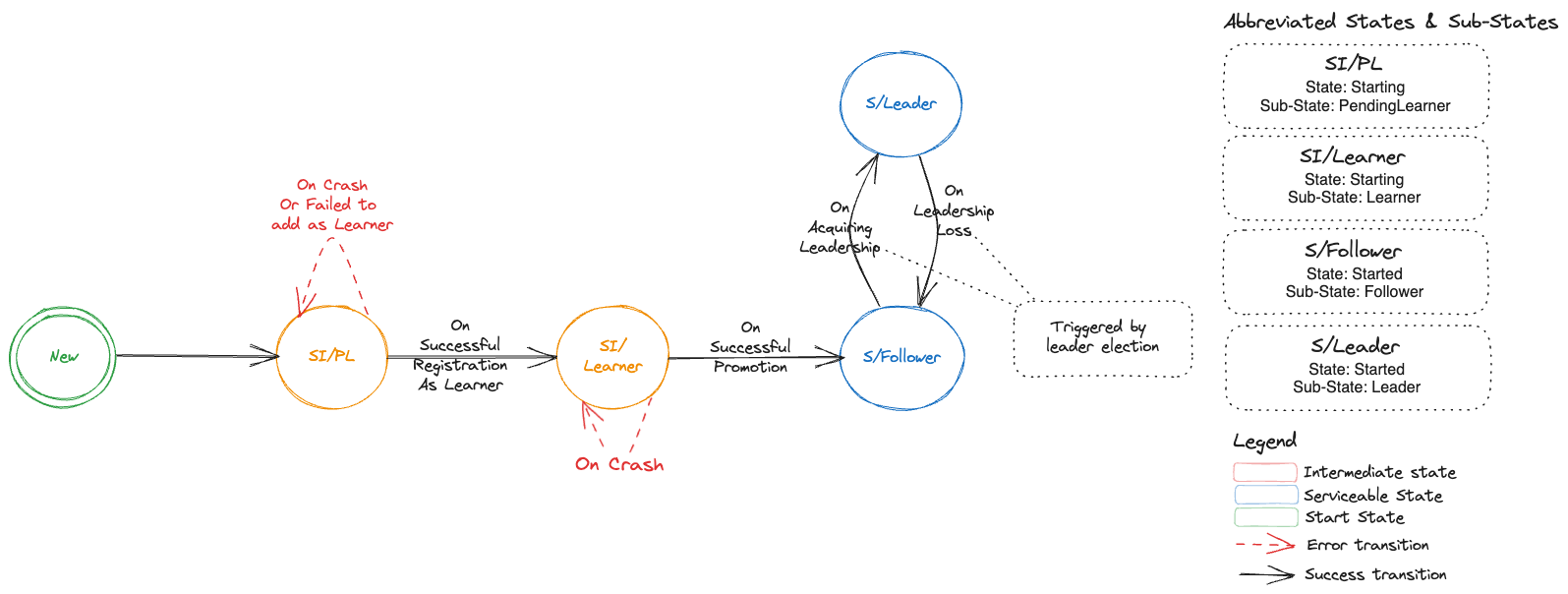

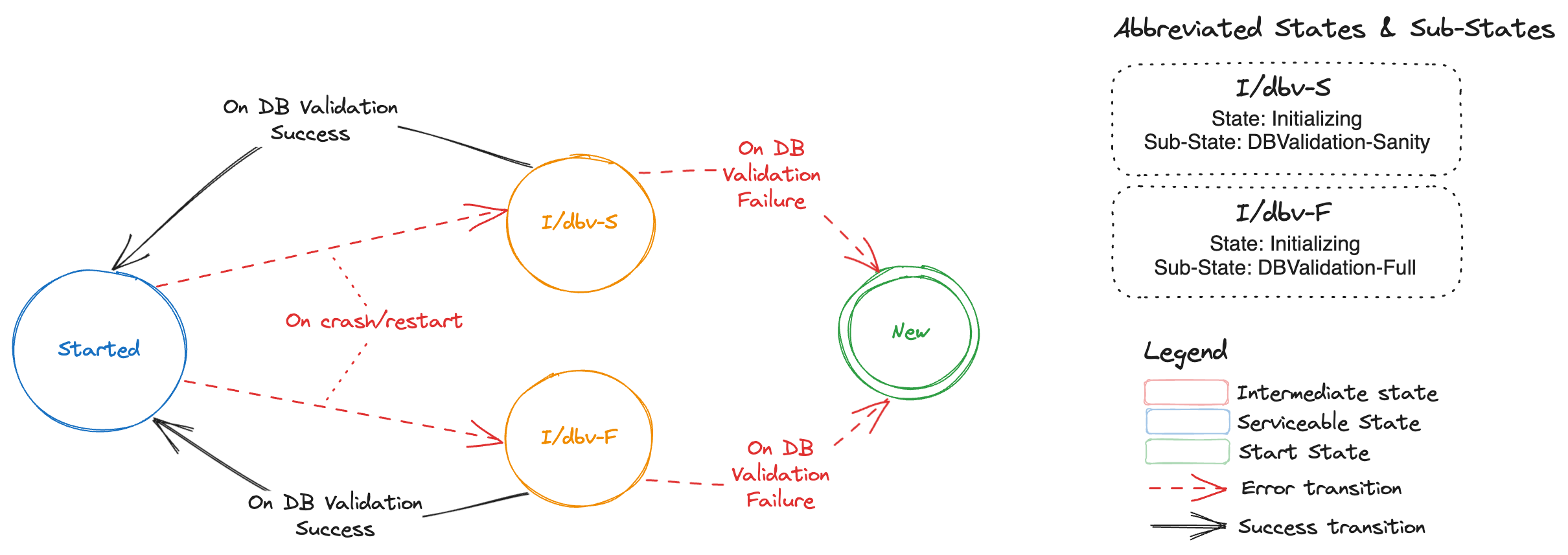

Prober starts asynchronous and periodic probes for every shoot cluster. The first probe is the api-server probe which checks the reachability of the API Server from the control plane. The second probe is the lease probe which is done after the api server probe is successful and checks if the number of expired node leases is below a certain threshold.

If the lease probe fails, it will scale down the dependent kubernetes resources. Once the connectivity to kube-apiserver is reestablished and the number of expired node leases is within the accepted threshold, the prober will then proactively scale up the dependent kubernetes resources it had scaled down earlier. The failure threshold fraction for the lease probe and the dependent kubernetes resources are defined in the configuration that is passed to the prober.

Origin

In a shoot cluster (a.k.a. data plane) each node runs a kubelet which periodically renews its lease. Leases serve as heartbeats informing Kube Controller Manager that the node is alive. The connectivity between the kubelet and the Kube ApiServer can break for different reasons and not recover in time.

As an example, consider a large shoot cluster with several hundred nodes. There is an issue with a NAT gateway on the shoot cluster which prevents the kubelet on any node in the shoot cluster from reaching its control plane Kube ApiServer. As a consequence, Kube Controller Manager transitions the nodes of this shoot cluster to the Unknown state.

Machine Controller Manager, which also runs in the shoot control plane, reacts to any changes to the Node status and then takes action to recover backing VMs/machine(s). It waits for a grace period and then begins to replace the unhealthy machine(s) with new ones.

This replacement of healthy machines due to broken connectivity between the worker nodes and the control plane Kube ApiServer results in undesired downtimes for customer workloads that were running on these otherwise healthy nodes. It is therefore required that there be an actor which detects the connectivity loss between the kubelet and the shoot cluster’s Kube ApiServer and proactively scales down components in the shoot control namespace which could otherwise worsen the availability of nodes in the shoot cluster.

Dependency Watchdog Prober in Gardener

Prober is a central component which is deployed in the garden namespace in the seed cluster. Control plane components for a shoot are deployed in a dedicated shoot namespace for the shoot within the seed cluster.

NOTE: If you are not familiar with Gardener components such as seed and shoot, please see the appendix for links.

Prober periodically probes Kube ApiServer via two separate probes:

- API Server Probe: Checks reachability of the Kube ApiServer via the local cluster DNS name, which resolves to the ClusterIP of the Kube ApiServer.

- Lease Probe: Checks for number of expired leases to be within the specified threshold. The threshold defines the limit after which DWD can say that the kubelets are not able to reach the API server.

Behind the scenes

For all active shoot clusters (which have not been hibernated or deleted or moved to another seed via control-plane-migration), prober will schedule a probe to run periodically. During each run of a probe it will do the following:

- Checks if the Kube ApiServer is reachable via local cluster DNS. This should always succeed and will fail only when the Kube ApiServer has gone down. If the Kube ApiServer is down then there can be no further damage to the existing shoot cluster (barring new requests to the Kube Api Server).

- Only if the probe is able to reach the Kube ApiServer via local cluster DNS, will it attempt to check the number of expired node leases in the shoot. The node lease renewal is done by the Kubelet, and so we can say that the lease probe is checking if the kubelet is able to reach the API server. If the number of expired node leases reaches the threshold, then the probe fails.

- If and when a lease probe fails, then it will initiate a scale-down operation for dependent resources as defined in the prober configuration. While scaling down a resource, prober will annotate the deployment of the resource with dependency-watchdog.gardener.cloud/meltdown-protection-active. This annotation can be checked to ensure not to scale up these resources during a regular shoot maintenance or an out-of-band shoot reconciliation.

- In subsequent runs it will keep performing the lease probe. If it is successful, then it will start the scale-up operation for dependent resources as defined in the configuration. It shall also remove the dependency-watchdog.gardener.cloud/meltdown-protection-active annotation from the deployment metadata of all scaled up resources.
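The order of checks in a single probe run, as described in the steps above, can be sketched in Go. This is only an illustration and not the DWD implementation: the `action` type, its constants and `decideAction` are hypothetical names introduced here.

```go
package main

import "fmt"

// action is a hypothetical name for what a single probe run decides to do.
type action string

const (
	actionNone      action = "none"       // nothing is scaled in this run
	actionScaleDown action = "scale-down" // lease probe failed
	actionScaleUp   action = "scale-up"   // lease probe succeeded
)

// decideAction mirrors the order of checks described in the text:
// the lease probe result is only considered when the API server probe succeeded.
func decideAction(apiServerReachable, leaseProbeOK bool) action {
	if !apiServerReachable {
		// Kube ApiServer is down: skip the lease probe and retry in the next run.
		return actionNone
	}
	if !leaseProbeOK {
		return actionScaleDown
	}
	return actionScaleUp
}

func main() {
	fmt.Println(decideAction(false, false)) // API server probe failed
	fmt.Println(decideAction(true, false))  // lease probe failed
	fmt.Println(decideAction(true, true))   // both probes succeeded
}
```

Keeping the decision separate from the actual scaling makes the sketch easy to test without a cluster.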

Manual Intervention/Override of DWD behavior: In case you don’t want DWD to act on a resource during a meltdown, you can annotate the deployment of that resource with the dependency-watchdog.gardener.cloud/ignore-scaling annotation. This will ensure that prober will not act on such a resource. When this ignore-scaling annotation is set by an operator, the dependency-watchdog.gardener.cloud/meltdown-protection-active annotation shall be removed to avoid any ambiguity.

Prober lifecycle

A reconciler is registered to listen to all events for Cluster resource.

When a Reconciler receives a request for a Cluster change, it will query the extension kube-api server to get the Cluster resource.

In the following cases it will either remove an existing probe for this cluster or skip creating a new probe:

- Cluster is marked for deletion.

- Hibernation has been enabled for the cluster.

- There is an ongoing seed migration for this cluster.

- If a new cluster is created with no workers.

- If an update is made to the cluster by removing all workers (in other words making it worker-less).

If none of the above conditions are true and there is no existing probe for this cluster then a new probe will be created, registered and started.
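The conditions listed above can be expressed as a single predicate. The following Go sketch assumes a simplified, hypothetical `clusterState` type; the real reconciler derives this information from the Cluster resource.

```go
package main

import "fmt"

// clusterState is a hypothetical, simplified view of a Gardener Cluster resource.
type clusterState struct {
	MarkedForDeletion  bool
	HibernationEnabled bool
	SeedMigration      bool
	WorkerCount        int
}

// needsProbe returns true only when none of the skip conditions apply.
// When it returns false, an existing probe is removed and no new probe is created.
func needsProbe(c clusterState) bool {
	switch {
	case c.MarkedForDeletion, c.HibernationEnabled, c.SeedMigration:
		return false
	case c.WorkerCount == 0:
		// Covers both a new worker-less cluster and a cluster whose workers were removed.
		return false
	}
	return true
}

func main() {
	fmt.Println(needsProbe(clusterState{WorkerCount: 3}))
	fmt.Println(needsProbe(clusterState{WorkerCount: 3, HibernationEnabled: true}))
	fmt.Println(needsProbe(clusterState{WorkerCount: 0}))
}
```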

Probe failure identification

DWD probe can either be a success or it could return an error. If the API server probe fails, the lease probe is not done and the probes will be retried. If the error is a TooManyRequests error due to requests to the Kube-API-Server being throttled, then the probes are retried after a backOff of backOffDurationForThrottledRequests.

If the lease probe fails, then the error could be due to failure in listing the leases. In this case, no scaling operations are performed. If the error in listing the leases is a TooManyRequests error due to requests to the Kube-API-Server being throttled, then the probes are retried after a backOff of backOffDurationForThrottledRequests.
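A minimal Go sketch of the retry decision follows. It assumes a hypothetical sentinel error `errTooManyRequests` in place of a real HTTP 429 response (client code would typically detect this with `IsTooManyRequests` from `k8s.io/apimachinery/pkg/api/errors`), and it assumes that non-throttled failures are simply retried in the next regular run.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errTooManyRequests is a hypothetical stand-in for a throttled (HTTP 429) response.
var errTooManyRequests = errors.New("too many requests")

// nextProbeDelay returns how long to wait before the next probe run.
// Throttled requests use the dedicated back-off; everything else uses the probe interval.
func nextProbeDelay(err error, probeInterval, throttleBackOff time.Duration) time.Duration {
	if errors.Is(err, errTooManyRequests) {
		return throttleBackOff
	}
	return probeInterval
}

func main() {
	probeInterval := 10 * time.Second
	throttleBackOff := 30 * time.Second // assumed example value

	throttled := fmt.Errorf("listing leases: %w", errTooManyRequests)
	fmt.Println(nextProbeDelay(throttled, probeInterval, throttleBackOff))
	fmt.Println(nextProbeDelay(nil, probeInterval, throttleBackOff))
}
```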

If there is no error in listing the leases, then the Lease probe fails if the number of expired leases reaches the threshold fraction specified in the configuration. A lease is considered expired in the following scenario:-

time.Now() >= lease.Spec.RenewTime + (p.config.KCMNodeMonitorGraceDuration.Duration * expiryBufferFraction)

Here, lease.Spec.RenewTime is the time when the current holder of a lease last updated the lease. config is the probe config generated from the configuration, and KCMNodeMonitorGraceDuration is the amount of time which KCM allows a running Node to be unresponsive before marking it unhealthy (See ref). expiryBufferFraction is a hard coded value of 0.75. Using this fraction allows the prober to intervene before KCM marks a node as unknown, while still allowing the kubelet sufficient retries to renew the node lease (Kubelet renews the lease every 10s, See ref).
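The expiry rule and the threshold check can be sketched in Go as below. The function names, the 40s grace duration and the handling of an empty lease list are assumptions made for illustration; only the formula and the 0.75 fraction come from the text.

```go
package main

import (
	"fmt"
	"time"
)

// expiryBufferFraction is the hard coded value mentioned in the text.
const expiryBufferFraction = 0.75

// isLeaseExpired applies the formula above to a single lease:
// now >= renewTime + grace*expiryBufferFraction.
func isLeaseExpired(now, renewTime time.Time, kcmNodeMonitorGraceDuration time.Duration) bool {
	buffer := time.Duration(float64(kcmNodeMonitorGraceDuration) * expiryBufferFraction)
	return !now.Before(renewTime.Add(buffer))
}

// leaseProbeFails reports whether the share of expired leases reaches the failure fraction.
func leaseProbeFails(expired, total int, nodeLeaseFailureFraction float64) bool {
	if total == 0 {
		return false // assumption: with no leases there is nothing to judge
	}
	return float64(expired) >= nodeLeaseFailureFraction*float64(total)
}

func main() {
	now := time.Now()
	grace := 40 * time.Second // assumed example value, buffer becomes 30s

	fmt.Println(isLeaseExpired(now, now.Add(-20*time.Second), grace)) // renewed 20s ago
	fmt.Println(isLeaseExpired(now, now.Add(-35*time.Second), grace)) // renewed 35s ago
	fmt.Println(leaseProbeFails(7, 10, 0.6))
	fmt.Println(leaseProbeFails(5, 10, 0.6))
}
```

With a 40s grace duration, a lease renewed 20s ago is still valid while one renewed 35s ago counts as expired, which leaves the kubelet about three 10s renewal attempts before the prober intervenes.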

Appendix

1.1.2 - Weeder

Weeder

Overview

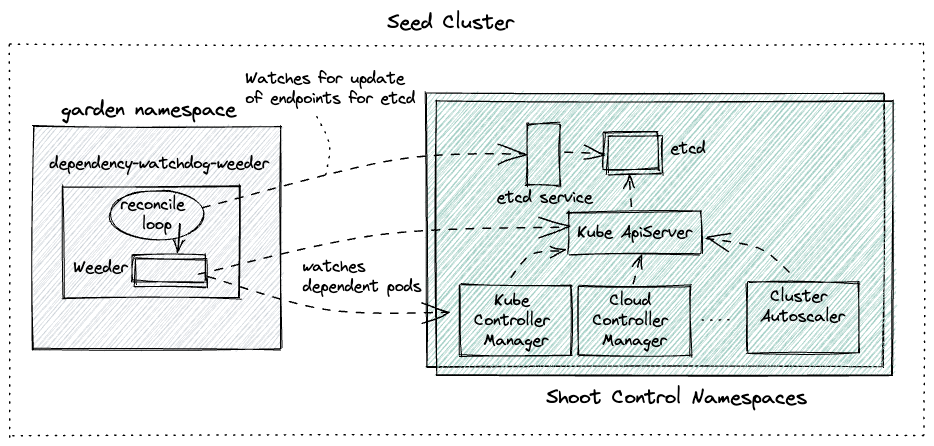

Weeder watches for an update to service endpoints and on receiving such an event it will create a time-bound watch for all configured dependent pods that need to be actively recovered in case they have not yet recovered from CrashLoopBackOff state. In a nutshell it accelerates recovery of pods when an upstream service recovers.

Intervention in the automatic recovery of dependent pods is required because Kubernetes restarts the containers of a pod with an exponential backoff when the pod is in CrashLoopBackOff state. This backoff could become quite large if the service stays down for long. The presence of weeder prevents that, as it will restart the pod.

Prerequisites

Before we understand how Weeder works, we need to be familiar with kubernetes services & endpoints.

NOTE: If a kubernetes service is created with selectors then kubernetes will create corresponding endpoint resource which will have the same name as that of the service. In weeder implementation service and endpoint name is used interchangeably.

Config

Weeder can be configured via command line arguments and a weeder configuration. See configure weeder.

Internals

Weeder keeps a watch on the events for the endpoints specified in the config. For every endpoints resource a list of podSelectors can be specified. It creates a weeder object per endpoints resource when it receives a satisfactory Create or Update event. Then for every podSelector it creates a goroutine. This goroutine keeps a watch on the pods with labels as per the podSelector and kills any pod which turns into CrashLoopBackOff. Each weeder lives for the watchDuration interval, which has a default value of 5 mins if not explicitly set.
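The goroutine-per-podSelector pattern with a time-bound watch can be sketched in Go as follows. This is a simplified illustration: `podEvent`, `watchSelector` and `runWeeder` are hypothetical names, pod events arrive on plain channels instead of a Kubernetes watch, and the sketch only records the pods it would delete.

```go
package main

import (
	"context"
	"fmt"
	"sync"
	"time"
)

// podEvent is a hypothetical, simplified pod update.
type podEvent struct {
	Name             string
	CrashLoopBackOff bool
}

// watchSelector consumes pod events for one podSelector until the context expires
// or the stream ends, and returns the names of the pods it would delete.
func watchSelector(ctx context.Context, events <-chan podEvent) []string {
	var deleted []string
	for {
		select {
		case <-ctx.Done():
			return deleted
		case ev, ok := <-events:
			if !ok {
				return deleted
			}
			if ev.CrashLoopBackOff {
				deleted = append(deleted, ev.Name) // a real weeder would delete the pod here
			}
		}
	}
}

// runWeeder starts one goroutine per selector stream, bounded by watchDuration.
func runWeeder(watchDuration time.Duration, streams []<-chan podEvent) [][]string {
	ctx, cancel := context.WithTimeout(context.Background(), watchDuration)
	defer cancel()

	results := make([][]string, len(streams))
	var wg sync.WaitGroup
	for i, s := range streams {
		wg.Add(1)
		go func(i int, s <-chan podEvent) {
			defer wg.Done()
			results[i] = watchSelector(ctx, s)
		}(i, s)
	}
	wg.Wait()
	return results
}

func main() {
	events := make(chan podEvent, 2)
	events <- podEvent{Name: "kube-apiserver-0", CrashLoopBackOff: true}
	events <- podEvent{Name: "kube-apiserver-1", CrashLoopBackOff: false}
	close(events)

	fmt.Println(runWeeder(2*time.Second, []<-chan podEvent{events}))
}
```

Sharing one context with a timeout across all goroutines is what bounds the lifetime of the whole weeder to watchDuration in this sketch.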

To understand the actions taken by the weeder let's use the following diagram as a reference.

Let us also assume the following configuration for the weeder:

    watchDuration: 2m0s
    servicesAndDependantSelectors:
      etcd-main-client: # name of the service/endpoint for etcd statefulset that weeder will receive events for.
        podSelectors: # all pods matching the label selector are direct dependencies for etcd service
          - matchExpressions:
              - key: gardener.cloud/role
                operator: In
                values:
                  - controlplane
              - key: role
                operator: In
                values:
                  - apiserver
      kube-apiserver: # name of the service/endpoint for kube-api-server pods that weeder will receive events for.
        podSelectors: # all pods matching the label selector are direct dependencies for kube-api-server service
          - matchExpressions:
              - key: gardener.cloud/role
                operator: In
                values:
                  - controlplane
              - key: role
                operator: NotIn
                values:
                  - main
                  - apiserver

Only for the sake of demonstration let's pick the first service -> dependent pods tuple (etcd-main-client as the service endpoint).

- Assume that there are 3 replicas for etcd statefulset.

- Time here is just for showing the series of events
  - t=0 -> all etcd pods go down
  - t=10 -> kube-api-server pods transition to CrashLoopBackOff
  - t=100 -> all etcd pods recover together
  - t=101 -> Weeder sees Update event for etcd-main-client endpoints resource
  - t=102 -> go routine created to keep watch on kube-api-server pods
  - t=103 -> Since kube-api-server pods are still in CrashLoopBackOff, weeder deletes the pods to accelerate the recovery.
  - t=104 -> new kube-api-server pod created by replica-set controller in kube-controller-manager

Points to Note

- Weeder only responds to Update events where a notReady endpoints resource turns Ready. That's why there was no weeder action at time t=10 in the example above.
  - notReady -> no backing pod is Ready
  - Ready -> at least one backing pod is Ready

- Weeder doesn’t respond to Delete events.

- Weeder will always wait for the entire watchDuration. If the dependent pods transition to CrashLoopBackOff after the watch duration, or if they do not recover even after repeated deletion, then weeder will exit. Quality of service offered via a weeder is only Best-Effort.
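The notReady -> Ready rule from the first point can be sketched as a small Go predicate. The function names are hypothetical and the endpoints resource is reduced to a count of ready backing pods.

```go
package main

import "fmt"

// isReady follows the definition in the text: at least one backing pod is Ready.
func isReady(readyAddresses int) bool {
	return readyAddresses > 0
}

// shouldStartWeeder reports whether an Update event is a notReady -> Ready transition.
func shouldStartWeeder(oldReadyAddresses, newReadyAddresses int) bool {
	return !isReady(oldReadyAddresses) && isReady(newReadyAddresses)
}

func main() {
	fmt.Println(shouldStartWeeder(3, 0)) // t=0: etcd pods go down, Ready -> notReady
	fmt.Println(shouldStartWeeder(0, 3)) // t=101: etcd pods recover, notReady -> Ready
	fmt.Println(shouldStartWeeder(2, 3)) // already Ready, one more pod becomes Ready
}
```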

1.2 - Deployment

1.2.1 - Configure

Configure Dependency Watchdog Components

Prober

Dependency watchdog prober command takes command-line-flags which are meant to fine-tune the prober. In addition a ConfigMap is also mounted to the container which provides tuning knobs for all the probes that the prober starts.

Command line arguments

Prober can be configured via the following flags:

| Flag Name | Type | Required | Default Value | Description |

|---|---|---|---|---|

| kube-api-burst | int | No | 10 | Burst to use while talking with kubernetes API server. The number must be >= 0. If it is 0 then a default value of 10 will be used |

| kube-api-qps | float | No | 5.0 | Maximum QPS (queries per second) allowed when talking with kubernetes API server. The number must be >= 0. If it is 0 then a default value of 5.0 will be used |

| concurrent-reconciles | int | No | 1 | Maximum number of concurrent reconciles |

| config-file | string | Yes | NA | Path of the config file containing the configuration to be used for all probes |

| metrics-bind-addr | string | No | “:9643” | The TCP address that the controller should bind to for serving prometheus metrics |

| health-bind-addr | string | No | “:9644” | The TCP address that the controller should bind to for serving health probes |

| enable-leader-election | bool | No | false | In case prober deployment has more than 1 replica for high availability, then it will be set up in an active-passive mode. Out of many replicas one will become the leader and the rest will be passive followers waiting to acquire leadership in case the leader dies. |

| leader-election-namespace | string | No | “garden” | Namespace in which leader election resource will be created. It should be the same namespace where DWD pods are deployed |

| leader-elect-lease-duration | time.Duration | No | 15s | The duration that non-leader candidates will wait after observing a leadership renewal until attempting to acquire leadership of a led but unrenewed leader slot. This is effectively the maximum duration that a leader can be stopped before it is replaced by another candidate. This is only applicable if leader election is enabled. |

| leader-elect-renew-deadline | time.Duration | No | 10s | The interval between attempts by the acting master to renew a leadership slot before it stops leading. This must be less than or equal to the lease duration. This is only applicable if leader election is enabled. |

| leader-elect-retry-period | time.Duration | No | 2s | The duration the clients should wait between attempting acquisition and renewal of a leadership. This is only applicable if leader election is enabled. |

You can view an example kubernetes prober deployment YAML to see how these command line args are configured.

Prober Configuration

A probe configuration is mounted as ConfigMap to the container. The path to the config file is configured via config-file command line argument as mentioned above. Prober will start one probe per Shoot control plane hosted within the Seed cluster. Each such probe will run asynchronously and will periodically connect to the Kube ApiServer of the Shoot. Configuration below will influence each such probe.

You can view an example YAML configuration provided as data in a ConfigMap here.

| Name | Type | Required | Default Value | Description |

|---|---|---|---|---|

| kubeConfigSecretName | string | Yes | NA | Name of the kubernetes Secret which has the encoded KubeConfig required to connect to the Shoot control plane Kube ApiServer via an internal domain. This typically uses the local cluster DNS. |

| probeInterval | metav1.Duration | No | 10s | Interval with which each probe will run. |

| initialDelay | metav1.Duration | No | 30s | Initial delay for the probe to become active. Only applicable when the probe is created for the first time. |

| probeTimeout | metav1.Duration | No | 30s | In each run of the probe it will attempt to connect to the Shoot Kube ApiServer. probeTimeout defines the timeout after which a single run of the probe will fail. |

| backoffJitterFactor | float64 | No | 0.2 | Jitter with which a probe is run. |

| dependentResourceInfos | []prober.DependentResourceInfo | Yes | NA | Detailed below. |

| kcmNodeMonitorGraceDuration | metav1.Duration | Yes | NA | It is the node-monitor-grace-period set in the kcm flags. Used to determine whether a node lease can be considered expired. |

| nodeLeaseFailureFraction | float64 | No | 0.6 | Fraction used to determine the maximum number of leases that can be expired for a lease probe to succeed. |
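How the required fields and default values from the table could be applied is sketched below in Go. The `probeConfig` struct is a hypothetical, simplified mirror of the table that uses plain `time.Duration` values and omits dependentResourceInfos; treating a zero value as "not set" and the secret name used in `main` are assumptions made for the example.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// probeConfig is a hypothetical, simplified mirror of the configuration table.
type probeConfig struct {
	KubeConfigSecretName        string
	ProbeInterval               time.Duration
	InitialDelay                time.Duration
	ProbeTimeout                time.Duration
	BackoffJitterFactor         float64
	KCMNodeMonitorGraceDuration time.Duration
	NodeLeaseFailureFraction    float64
}

// applyDefaults validates required fields and fills in the documented defaults.
// Assumption: a zero value means the field was not set.
func applyDefaults(c probeConfig) (probeConfig, error) {
	if c.KubeConfigSecretName == "" {
		return c, errors.New("kubeConfigSecretName is required")
	}
	if c.KCMNodeMonitorGraceDuration == 0 {
		return c, errors.New("kcmNodeMonitorGraceDuration is required")
	}
	if c.ProbeInterval == 0 {
		c.ProbeInterval = 10 * time.Second
	}
	if c.InitialDelay == 0 {
		c.InitialDelay = 30 * time.Second
	}
	if c.ProbeTimeout == 0 {
		c.ProbeTimeout = 30 * time.Second
	}
	if c.BackoffJitterFactor == 0 {
		c.BackoffJitterFactor = 0.2
	}
	if c.NodeLeaseFailureFraction == 0 {
		c.NodeLeaseFailureFraction = 0.6
	}
	return c, nil
}

func main() {
	cfg, err := applyDefaults(probeConfig{
		KubeConfigSecretName:        "example-kubeconfig-secret", // hypothetical name
		KCMNodeMonitorGraceDuration: 40 * time.Second,            // assumed example value
	})
	fmt.Println(cfg.ProbeInterval, cfg.ProbeTimeout, cfg.NodeLeaseFailureFraction, err)
}
```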

DependentResourceInfo

If a lease probe fails, then it scales down the dependent resources defined by this property. Similarly, if the lease probe is now successful, then it scales up the dependent resources defined by this property.

Each dependent resource info has the following properties:

| Name | Type | Required | Default Value | Description |

|---|---|---|---|---|

| ref | autoscalingv1.CrossVersionObjectReference | Yes | NA | It is a collection of ApiVersion, Kind and Name for a kubernetes resource thus serving as an identifier. |

| optional | bool | Yes | NA | It is possible that a dependent resource is optional for a Shoot control plane. This property enables a probe to determine the correct behavior in case it is unable to find the resource identified via ref. |

| scaleUp | prober.ScaleInfo | No | | Captures the configuration to scale up this resource. Detailed below. |

| scaleDown | prober.ScaleInfo | No | | Captures the configuration to scale down this resource. Detailed below. |

NOTE: Since each dependent resource is a target for scale up/down, it is mandatory that the resource reference points to a kubernetes resource which has a scale subresource.

ScaleInfo

How to scale a DependentResourceInfo is captured in ScaleInfo. It has the following properties:

| Name | Type | Required | Default Value | Description |

|---|---|---|---|---|

| level | int | Yes | NA | Detailed below. |

| initialDelay | metav1.Duration | No | 0s (No initial delay) | Once a decision is taken to scale a resource then via this property a delay can be induced before triggering the scale of the dependent resource. |

| timeout | metav1.Duration | No | 30s | Defines the timeout for the scale operation to finish for a dependent resource. |

Determining target replicas

Prober cannot assume any target replicas during a scale-up operation for the following reasons:

- Kubernetes resources could be set to provide high availability and the number of replicas could vary from one shoot control plane to the other. In gardener the number of replicas of pods in shoot namespace are controlled by the shoot control plane configuration.

- If Horizontal Pod Autoscaler has been configured for a kubernetes dependent resource then it could potentially change the spec.replicas for a deployment/statefulset.

Given the above constraints let's look at how prober determines the target replicas during scale-down or scale-up operations.

Scale-Up: Primary responsibility of a probe while performing a scale-up is to restore the replicas of a kubernetes dependent resource prior to scale-down. In order to do that it updates the following for each dependent resource that requires a scale-up:

- spec.replicas: Checks if dependency-watchdog.gardener.cloud/replicas is set. If it is, then it will take the value stored against this key as the target replicas. To be a valid value it should always be greater than 0.

- If dependency-watchdog.gardener.cloud/replicas annotation is not present then it falls back to the hard coded default value for scale-up which is set to 1.

- Removes the annotation dependency-watchdog.gardener.cloud/replicas if it exists.

Scale-Down: To scale down a dependent kubernetes resource it does the following:

- Adds an annotation dependency-watchdog.gardener.cloud/replicas and sets its value to the current value of spec.replicas.

- Updates spec.replicas to 0.
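The replica bookkeeping for scale-down and scale-up can be sketched in Go as follows. The `scalable` type is a hypothetical stand-in for a Deployment or StatefulSet, and skipping a scale-down when replicas are already 0 is an assumption added so that the stored value is not overwritten.

```go
package main

import (
	"fmt"
	"strconv"
)

const replicasAnnotation = "dependency-watchdog.gardener.cloud/replicas"

// scalable is a hypothetical, simplified stand-in for a scalable resource.
type scalable struct {
	Annotations map[string]string
	Replicas    int32
}

// scaleDown remembers the current replicas in the annotation and sets replicas to 0.
func scaleDown(r *scalable) {
	if r.Replicas == 0 {
		return // assumption: already scaled down, keep the stored value untouched
	}
	if r.Annotations == nil {
		r.Annotations = map[string]string{}
	}
	r.Annotations[replicasAnnotation] = strconv.Itoa(int(r.Replicas))
	r.Replicas = 0
}

// scaleUp restores replicas from the annotation, falls back to 1 when the
// annotation is missing or invalid, and removes the annotation afterwards.
func scaleUp(r *scalable) {
	target := int32(1)
	if v, ok := r.Annotations[replicasAnnotation]; ok {
		if n, err := strconv.Atoi(v); err == nil && n > 0 {
			target = int32(n)
		}
	}
	r.Replicas = target
	delete(r.Annotations, replicasAnnotation)
}

func main() {
	kcm := &scalable{Replicas: 3}
	scaleDown(kcm)
	fmt.Println(kcm.Replicas, kcm.Annotations[replicasAnnotation])
	scaleUp(kcm)
	fmt.Println(kcm.Replicas, len(kcm.Annotations))
}
```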

Level

Each dependent resource that should be scaled up or down is associated with a level. Levels are processed in ascending order, starting with 0, which has the highest priority. Consider the following configuration:

    dependentResourceInfos:
      - ref:
          kind: "Deployment"
          name: "kube-controller-manager"
          apiVersion: "apps/v1"
        scaleUp:
          level: 1
        scaleDown:
          level: 0
      - ref:
          kind: "Deployment"
          name: "machine-controller-manager"
          apiVersion: "apps/v1"
        scaleUp:
          level: 1
        scaleDown:
          level: 1
      - ref:
          kind: "Deployment"
          name: "cluster-autoscaler"
          apiVersion: "apps/v1"
        scaleUp:
          level: 0
        scaleDown:
          level: 2

Let us order the dependent resources by their respective levels for both scale-up and scale-down. We get the following order:

Scale Up Operation

Order of scale up will be:

- cluster-autoscaler

- kube-controller-manager and machine-controller-manager will be scaled up concurrently after cluster-autoscaler has been scaled up.

Scale Down Operation

Order of scale down will be:

1. kube-controller-manager

2. machine-controller-manager after (1) has been scaled down.

3. cluster-autoscaler after (2) has been scaled down.
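The ordering derived above can be reproduced with a short Go sketch that groups resources by level and walks the levels in ascending order. The `resource` type and `orderByLevel` are hypothetical names; resources that share a level end up in the same group and could be scaled concurrently.

```go
package main

import (
	"fmt"
	"sort"
)

// resource is a hypothetical, simplified dependent resource with its two levels.
type resource struct {
	Name           string
	ScaleUpLevel   int
	ScaleDownLevel int
}

// orderByLevel groups resource names by level and returns the groups in ascending level order.
func orderByLevel(resources []resource, level func(resource) int) [][]string {
	byLevel := map[int][]string{}
	for _, r := range resources {
		l := level(r)
		byLevel[l] = append(byLevel[l], r.Name)
	}
	levels := make([]int, 0, len(byLevel))
	for l := range byLevel {
		levels = append(levels, l)
	}
	sort.Ints(levels)

	ordered := make([][]string, 0, len(levels))
	for _, l := range levels {
		ordered = append(ordered, byLevel[l])
	}
	return ordered
}

func main() {
	resources := []resource{
		{"kube-controller-manager", 1, 0},
		{"machine-controller-manager", 1, 1},
		{"cluster-autoscaler", 0, 2},
	}
	// prints [[cluster-autoscaler] [kube-controller-manager machine-controller-manager]]
	fmt.Println(orderByLevel(resources, func(r resource) int { return r.ScaleUpLevel }))
	// prints [[kube-controller-manager] [machine-controller-manager] [cluster-autoscaler]]
	fmt.Println(orderByLevel(resources, func(r resource) int { return r.ScaleDownLevel }))
}
```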

Disable/Ignore Scaling

A probe can be configured to ignore scaling of configured dependent kubernetes resources.

To do that one must set dependency-watchdog.gardener.cloud/ignore-scaling annotation to true on the scalable resource for which scaling should be ignored.
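A check for this annotation could look like the following Go sketch. The helper names are hypothetical, and comparing against the literal string "true" is an assumption based on the sentence above.

```go
package main

import "fmt"

const ignoreScalingAnnotation = "dependency-watchdog.gardener.cloud/ignore-scaling"

// ignoreScaling reports whether the annotation is set to "true" on a resource.
func ignoreScaling(annotations map[string]string) bool {
	return annotations[ignoreScalingAnnotation] == "true"
}

// filterScalable returns the names of resources that may be scaled, preserving input order.
func filterScalable(names []string, annotations map[string]map[string]string) []string {
	var scalable []string
	for _, name := range names {
		if !ignoreScaling(annotations[name]) {
			scalable = append(scalable, name)
		}
	}
	return scalable
}

func main() {
	names := []string{"kube-controller-manager", "machine-controller-manager", "cluster-autoscaler"}
	annotations := map[string]map[string]string{
		"machine-controller-manager": {ignoreScalingAnnotation: "true"},
	}
	fmt.Println(filterScalable(names, annotations))
}
```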

Weeder

Dependency watchdog weeder command also (just like the prober command) takes command-line-flags which are meant to fine-tune the weeder. In addition a ConfigMap is also mounted to the container which helps in defining the dependency of pods on endpoints.

Command Line Arguments

Weeder can be configured with the same flags as the prober, described under the command-line-arguments section. You can find an example weeder deployment YAML to see how these command line args are configured.

Weeder Configuration

Weeder configuration is mounted as ConfigMap to the container. The path to the config file is configured via config-file command line argument as mentioned above. Weeder will start one go routine per podSelector per endpoint on an endpoint event as described in weeder internal concepts.

You can view the example YAML configuration provided as data in a ConfigMap here.

| Name | Type | Required | Default Value | Description |

|---|---|---|---|---|

| watchDuration | *metav1.Duration | No | 5m0s | The time duration for which watch is kept on dependent pods to see if any of them turns to CrashLoopBackOff |

| servicesAndDependantSelectors | map[string]DependantSelectors | Yes | NA | Endpoint name and its corresponding dependent pods. More info below. |

DependantSelectors

If the service recovers from downtime, then weeder starts to watch for CrashLoopBackOff pods. These pods are identified by info stored in this property.

| Name | Type | Required | Default Value | Description |

|---|---|---|---|---|

| podSelectors | []*metav1.LabelSelector | Yes | NA | This is a list of Label selector |

1.2.2 - Monitor

Monitoring

Work In Progress

We will be introducing metrics for Dependency-Watchdog-Prober and Dependency-Watchdog-Weeder. These metrics will be pushed to prometheus. Once that is completed we will provide details on all the metrics that will be supported here.

1.3 - Contribution

How to contribute?

Contributions are always welcome!

In order to contribute, ensure that you have the development environment set up and that you familiarize yourself with the required steps to build, verify-quality and test.

Setting up development environment

Installing Go

Minimum Golang version required: 1.18.

On MacOS run:

brew install go

For other OS, follow the installation instructions.

Installing Git

Git is used as version control for dependency-watchdog. On MacOS run:

brew install git

If you do not have git installed already then please follow the installation instructions.

Installing Docker

In order to test dependency-watchdog containers you will need a local kubernetes setup. Easiest way is to first install Docker. This becomes a pre-requisite to setting up either a vanilla KIND/minikube cluster or a local Gardener cluster.

On MacOS run:

brew install --cask docker

For other OS, follow the installation instructions.

Installing Kubectl

To interact with the local Kubernetes cluster you will need kubectl. On MacOS run:

brew install kubernetes-cli

For other OS, follow the installation instructions.

Get the sources

Clone the repository from Github:

git clone https://github.com/gardener/dependency-watchdog.git

Using Makefile

For every change, it is recommended to run the following make targets.

# build the code changes

> make build

# ensure that all required checks pass

> make verify # this will check formatting, linting and will run unit tests

# if you do not wish to run tests then you can use the following make target.

> make check

All tests should be run and the test coverage should ideally not reduce. Please ensure that you have read testing guidelines.

Before raising a pull request, ensure that you add a license header to every new file that you introduce. To add license headers you can run this make target:

> make add-license-headers

# This will add license headers to any file which does not already have it.

NOTE: Also have a look at the Makefile as it has other targets that are not mentioned here.

Raising a Pull Request

To raise a pull request do the following:

- Create a fork of dependency-watchdog

- Add dependency-watchdog as upstream remote via

git remote add upstream https://github.com/gardener/dependency-watchdog

- It is recommended that you create a git branch and push all your changes for the pull-request.

- Ensure that while you work on your pull-request, you continue to rebase the changes from upstream to your branch. To do that execute the following command:

git pull --rebase upstream master

- We prefer clean commits. If you have multiple commits in the pull-request, then squash the commits to a single commit. You can do this via the interactive git rebase command. For example, if your PR branch is ahead of remote origin HEAD by 5 commits then you can execute the following command and pick the first commit and squash the remaining commits.

git rebase -i HEAD~5 #actual number from the head will depend upon how many commits your branch is ahead of remote origin master

1.4 - Dwd Using Local Garden

Dependency Watchdog with Local Garden Cluster

Setting up Local Garden cluster

A convenient way to test local dependency-watchdog changes is to use a local garden cluster. To set up a local garden cluster you can follow the setup-guide.

Dependency Watchdog resources

As part of the local garden installation, a local seed will be available.

Dependency Watchdog resources created in the seed

Namespaced resources

In the garden namespace of the seed cluster, following resources will be created:

| Resource (GVK) | Name |

|---|---|

| {apiVersion: v1, Kind: ServiceAccount} | dependency-watchdog-prober |

| {apiVersion: v1, Kind: ServiceAccount} | dependency-watchdog-weeder |

| {apiVersion: apps/v1, Kind: Deployment} | dependency-watchdog-prober |

| {apiVersion: apps/v1, Kind: Deployment} | dependency-watchdog-weeder |

| {apiVersion: v1, Kind: ConfigMap} | dependency-watchdog-prober-* |

| {apiVersion: v1, Kind: ConfigMap} | dependency-watchdog-weeder-* |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: Role} | gardener.cloud:dependency-watchdog-prober:role |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: Role} | gardener.cloud:dependency-watchdog-weeder:role |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: RoleBinding} | gardener.cloud:dependency-watchdog-prober:role-binding |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: RoleBinding} | gardener.cloud:dependency-watchdog-weeder:role-binding |

| {apiVersion: resources.gardener.cloud/v1alpha1, Kind: ManagedResource} | dependency-watchdog-prober |

| {apiVersion: resources.gardener.cloud/v1alpha1, Kind: ManagedResource} | dependency-watchdog-weeder |

| {apiVersion: v1, Kind: Secret} | managedresource-dependency-watchdog-weeder |

| {apiVersion: v1, Kind: Secret} | managedresource-dependency-watchdog-prober |

Cluster resources

| Resource (GVK) | Name |

|---|---|

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: ClusterRole} | gardener.cloud:dependency-watchdog-prober:cluster-role |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: ClusterRole} | gardener.cloud:dependency-watchdog-weeder:cluster-role |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: ClusterRoleBinding} | gardener.cloud:dependency-watchdog-prober:cluster-role-binding |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: ClusterRoleBinding} | gardener.cloud:dependency-watchdog-weeder:cluster-role-binding |

Dependency Watchdog resources created in Shoot control namespace

| Resource (GVK) | Name |

|---|---|

| {apiVersion: v1, Kind: Secret} | dependency-watchdog-prober |

| {apiVersion: resources.gardener.cloud/v1alpha1, Kind: ManagedResource} | shoot-core-dependency-watchdog |

Dependency Watchdog resources created in the kube-node-lease namespace of the shoot

| Resource (GVK) | Name |

|---|---|

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: Role} | gardener.cloud:target:dependency-watchdog |

| {apiVersion: rbac.authorization.k8s.io/v1, Kind: RoleBinding} | gardener.cloud:target:dependency-watchdog |

These will be created by the GRM and will have a managed resource named shoot-core-dependency-watchdog in the shoot namespace in the seed.

Update Gardener with custom Dependency Watchdog Docker images

Build, Tag and Push docker images

To build dependency watchdog docker images run the following make target:

> make docker-build

Local gardener hosts a docker registry which can be accessed at localhost:5001. To enable local gardener to access the custom docker images you need to tag and push these images to the embedded docker registry. To do that execute the following commands:

> docker images

# Get the IMAGE ID of the dependency watchdog images that were built using docker-build make target.

> docker tag <IMAGE-ID> localhost:5001/europe-docker.pkg.dev/gardener-project/public/gardener/dependency-watchdog-prober:<TAGNAME>

> docker push localhost:5001/europe-docker.pkg.dev/gardener-project/public/gardener/dependency-watchdog-prober:<TAGNAME>

Update ManagedResource

Garden resource manager will revert back any changes that are done to the kubernetes deployment for dependency watchdog. This is quite useful in live landscapes where only tested and qualified images are used for all gardener managed components. Any change therefore is automatically reverted.

However, during development and testing you will need to use custom docker images. To prevent garden resource manager from reverting the changes done to the kubernetes deployment for dependency watchdog components you must update the respective managed resources first.

# List the managed resources

> kubectl get mr -n garden | grep dependency

# Sample response

dependency-watchdog-weeder seed True True False 26h

dependency-watchdog-prober seed True True False 26h

# Let's assume that you are currently testing prober and would like to use a custom docker image

> kubectl edit mr dependency-watchdog-prober -n garden

# This will open the resource YAML for editing. Add the annotation resources.gardener.cloud/ignore=true

# Reference: https://github.com/gardener/gardener/blob/master/docs/concepts/resource-manager.md

# Save the YAML file.

When you are done with your testing then you can again edit the ManagedResource and remove the annotation. Garden resource manager will revert back to the image with which gardener was initially built and started.

Update Kubernetes Deployment

Find and update the kubernetes deployment for dependency watchdog.

> kubectl get deploy -n garden | grep dependency

# Sample response

dependency-watchdog-weeder 1/1 1 1 26h

dependency-watchdog-prober 1/1 1 1 26h

# Let's assume that you are currently testing prober and would like to use a custom docker image

> kubectl edit deploy dependency-watchdog-prober -n garden

# This will open the resource YAML for editing. Change the image or any other changes and save.

1.5 - Testing

Testing Strategy and Developer Guideline

Intent of this document is to introduce you (the developer) to the following:

- Category of tests that exists.

- Libraries that are used to write tests.

- Best practices to write tests that are correct, stable, fast and maintainable.

- How to run each category of tests.

For any new contribution, tests are a strict requirement. The Boy Scout Rule is followed: if you touch code for which either no tests exist or coverage is insufficient, then it is expected that you will add relevant tests.

Tools Used for Writing Tests

The following tools are used to write all the tests (unit + envtest + vanilla kind cluster tests). It is preferred not to introduce any additional tools / test frameworks for writing tests:

Gomega

We use gomega as our matcher or assertion library. Refer to Gomega’s official documentation for details regarding its installation and application in tests.

Testing Package from Standard Library

We use the Testing package provided by the standard library in golang for writing all our tests. Refer to its official documentation to learn how to write tests using Testing package. You can also refer to this example.

Writing Tests

Common for All Kinds

- For naming the individual tests (`TestXxx` and `testXxx` methods) and helper methods, make sure that the name describes the implementation of the method. For example, `testScalingWhenMandatoryResourceNotFound` tests the behaviour of the `scaler` when a mandatory resource (KCM deployment) is not present.

- Maintain proper logging in tests. Use the `t.Log()` method to add appropriate messages wherever necessary to describe the flow of the test. See this for examples.

- Make use of the `testdata` directory for storing arbitrary sample data needed by tests (YAML manifests, etc.). See this package for examples.

  - From https://pkg.go.dev/cmd/go/internal/test:

    The go tool will ignore a directory named “testdata”, making it available to hold ancillary data needed by the tests.

Table-driven tests

We need a tabular structure in two cases:

- When we have multiple tests which require the same kind of setup: In this case we have a `TestXxxSuite` method which will do the setup and run all the tests. We have a slice of `test` struct which holds all the tests (typically a `title` and `run` method). We use a `for` loop to run all the tests one by one. See this for examples.

- When we have the same code path and multiple possible values to check: In this case we have the arguments and expectations in a struct. We iterate through the slice of all such structs, passing the arguments to appropriate methods and checking if the expectation is met. See this for examples.
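The second case (same code path, multiple values) can be sketched as follows. This is a minimal illustration and not code from the repository: `expiredFraction`, `testCase` and `runTable` are hypothetical names, and the loop collects failures in a slice only so that the sketch runs as a standalone program. In a real `_test.go` file the loop body would typically sit inside `t.Run(tc.title, func(t *testing.T) { ... })` and use gomega assertions.

```go
package main

import "fmt"

// expiredFraction is a hypothetical function under test: it returns the
// fraction of expired leases, or 0 when there are no leases at all.
func expiredFraction(expired, total int) float64 {
	if total == 0 {
		return 0
	}
	return float64(expired) / float64(total)
}

// testCase holds the arguments and the expectation for one row of the table.
type testCase struct {
	title    string
	expired  int
	total    int
	expected float64
}

// runTable iterates over the table and returns the titles of failing rows.
func runTable(cases []testCase) []string {
	var failed []string
	for _, tc := range cases {
		if got := expiredFraction(tc.expired, tc.total); got != tc.expected {
			failed = append(failed, tc.title)
		}
	}
	return failed
}

func main() {
	cases := []testCase{
		{title: "no leases", expired: 0, total: 0, expected: 0},
		{title: "half expired", expired: 2, total: 4, expected: 0.5},
		{title: "all expired", expired: 3, total: 3, expected: 1},
	}
	fmt.Println("failed cases:", runTable(cases))
}
```

Adding a new scenario then only requires a new row in the table; the code that exercises the function stays untouched.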

Env Tests

Env tests in Dependency Watchdog use the sigs.k8s.io/controller-runtime/pkg/envtest package. It sets up a temporary control plane (etcd + kube-apiserver) and runs the test against it. The code to set up and teardown the environment can be checked out here.

These are the points to be followed while writing tests that use envtest setup:

- All tests should be divided into two top level partitions:

  - tests with common environment (`testXxxCommonEnvTests`)

  - tests which need a dedicated environment for each one (`testXxxDedicatedEnvTests`)

  They should be contained within the `TestXxxSuite` method. See this for examples. If all tests are of one kind then this is not needed.

- Create a method named `setUpXxxTest` for performing setup tasks before all/each test. It should either return a method or have a separate method to perform teardown tasks. See this for examples.

- The tests run by the suite can be table-driven as well.

- Use the `envtest` setup when there is a need of an environment close to an actual setup. Eg: start controllers against a real Kubernetes control plane to catch bugs that can only happen when talking to a real API server.
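The setup/teardown convention described in the points above could look roughly like this. It is only a sketch of the pattern: `fakeEnv` stands in for a real envtest environment, and `setUpScalerTest`, `runWithCommonEnv` and `runWithDedicatedEnv` are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// fakeEnv stands in for an envtest environment; it is purely illustrative.
type fakeEnv struct {
	running bool
}

// setUpScalerTest is a hypothetical setUpXxxTest helper. It starts the
// environment and returns it together with a function that tears it down.
func setUpScalerTest() (*fakeEnv, func()) {
	env := &fakeEnv{running: true}
	teardown := func() { env.running = false }
	return env, teardown
}

// runWithCommonEnv shares one environment across all tests and returns
// the number of environments that were started.
func runWithCommonEnv(tests []func(*fakeEnv)) int {
	env, teardown := setUpScalerTest()
	defer teardown()
	for _, test := range tests {
		test(env)
	}
	return 1
}

// runWithDedicatedEnv gives every test its own environment and returns
// the number of environments that were started.
func runWithDedicatedEnv(tests []func(*fakeEnv)) int {
	started := 0
	for _, test := range tests {
		env, teardown := setUpScalerTest()
		started++
		test(env)
		teardown()
	}
	return started
}

func main() {
	tests := []func(*fakeEnv){
		func(e *fakeEnv) { fmt.Println("test 1, env running:", e.running) },
		func(e *fakeEnv) { fmt.Println("test 2, env running:", e.running) },
	}
	fmt.Println("common environments started:", runWithCommonEnv(tests))
	fmt.Println("dedicated environments started:", runWithDedicatedEnv(tests))
}
```

Returning the teardown function from the setup helper keeps the two steps paired, so a suite cannot start an environment without also having the means to stop it.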

NOTE: It is currently not possible to bring up more than one envtest environment. See issue#1363. We enforce serial execution of test suites, each of which uses a different envtest environment. See hack/test.sh.

Vanilla Kind Cluster Tests

There are some tests where we need a vanilla kind cluster setup, for eg:- The scaler.go code in the prober package uses the scale subresource to scale the deployments mentioned in the prober config. But the envtest setup does not support the scale subresource as of now. So we need this setup to test if the deployments are scaled as per the config or not.

You can check out the code for this setup here. You can add utility methods for different kubernetes and custom resources in there.

These are the points to be followed while writing tests that use Vanilla Kind Cluster setup:

- Use this setup only if there is a need of an actual Kubernetes cluster (api server + control plane + etcd) to write the tests, because this is slower than your normal `envTest` setup.

- Create `setUpXxxTest` similar to the one in `envTest`. Follow the same structural pattern used in `envTest` for writing these tests. See this for examples.

Run Tests

To run unit tests, use the following Makefile target

make test

To run KIND cluster based tests, use the following Makefile target

make kind-tests # these tests will be slower as it brings up a vanilla KIND cluster

To view coverage after running the tests, run :

go tool cover -html=cover.out

Flaky tests

If you see that a test is flaky, you can use the make stress target, which internally uses the stress tool:

make stress test-package=<test-package> test-func=<test-func> tool-params="<tool-params>"

An example invocation:

make stress test-package=./internal/util test-func=TestRetryUntilPredicateWithBackgroundContext tool-params="-p 10"

The make target will do the following:

- It will create a test binary for the package specified via `test-package` at `/tmp/pkg-stress.test` directory.

- It will run `stress` tool passing the `tool-params` and targets the function `test-func`.

2 - etcd-druid

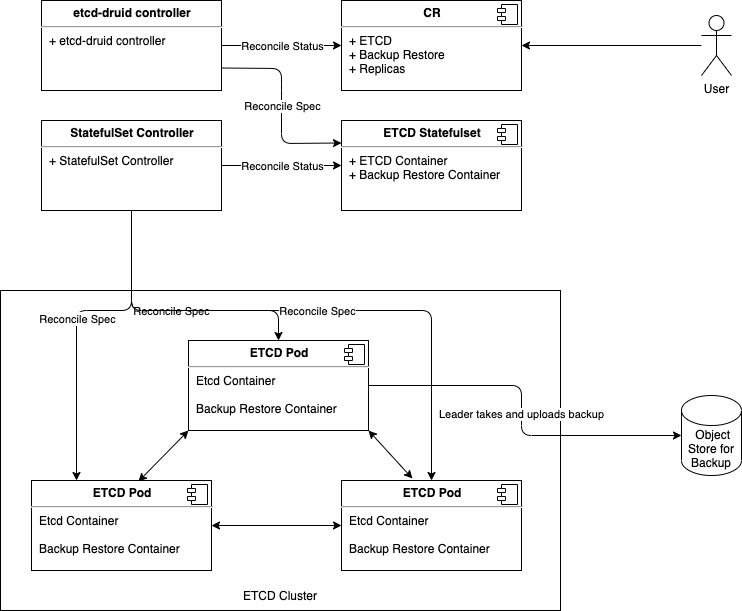

etcd-druid is an etcd operator which makes it easy to configure, provision, reconcile, monitor and delete etcd clusters. It enables management of etcd clusters through declarative Kubernetes API model.

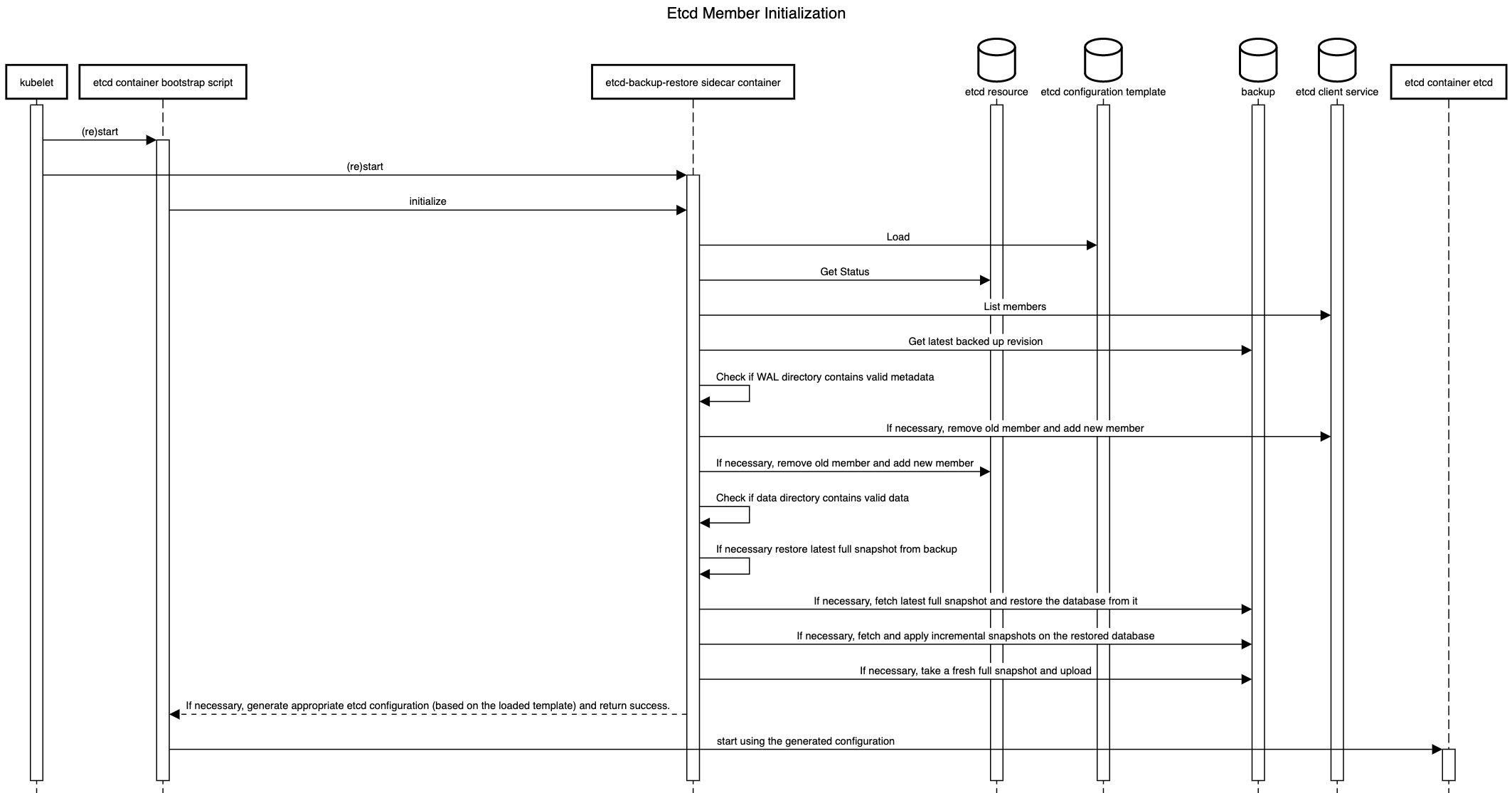

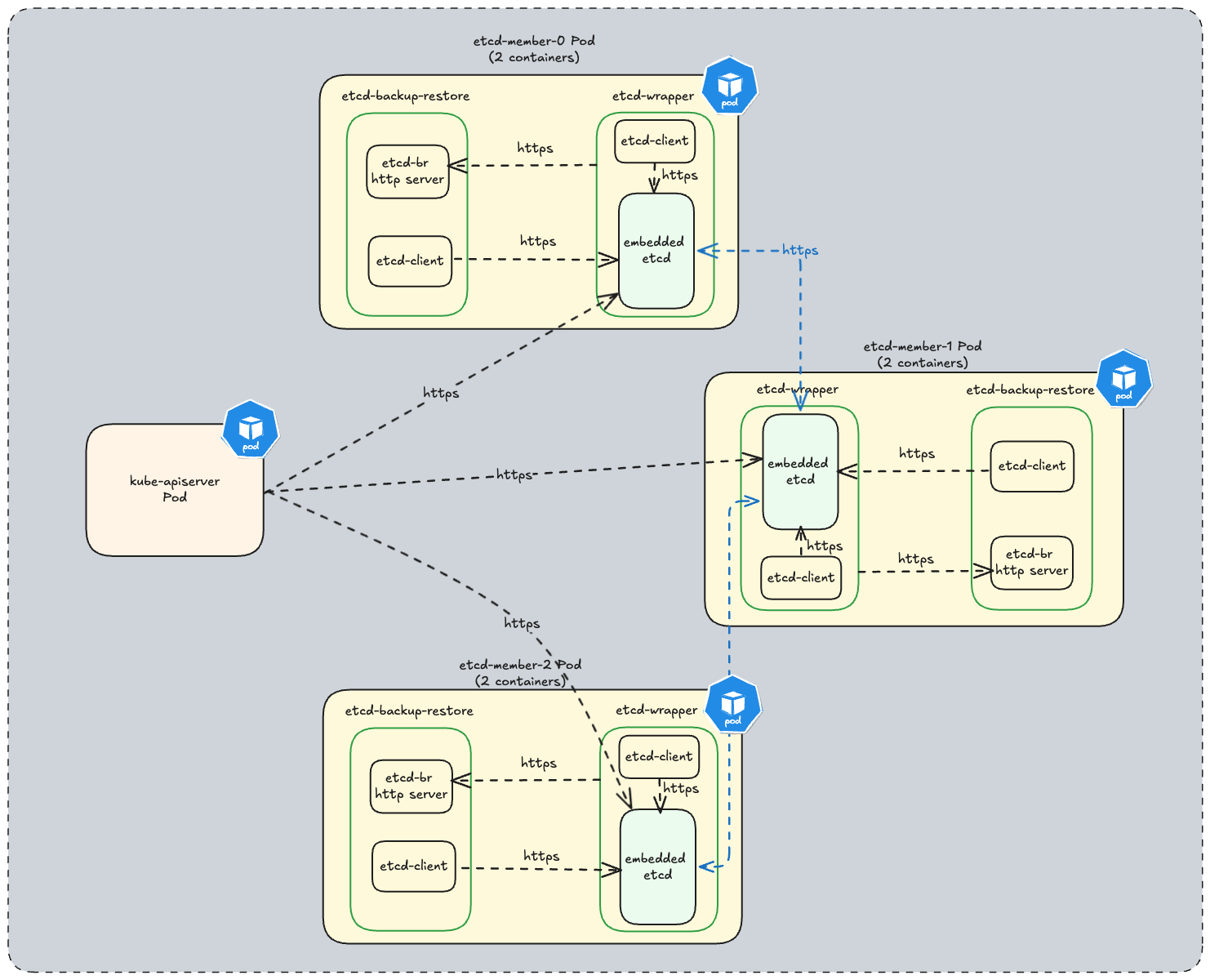

In every etcd cluster managed by etcd-druid, each etcd member is a two container Pod which consists of:

- etcd-wrapper which manages the lifecycle (validation & initialization) of an etcd.

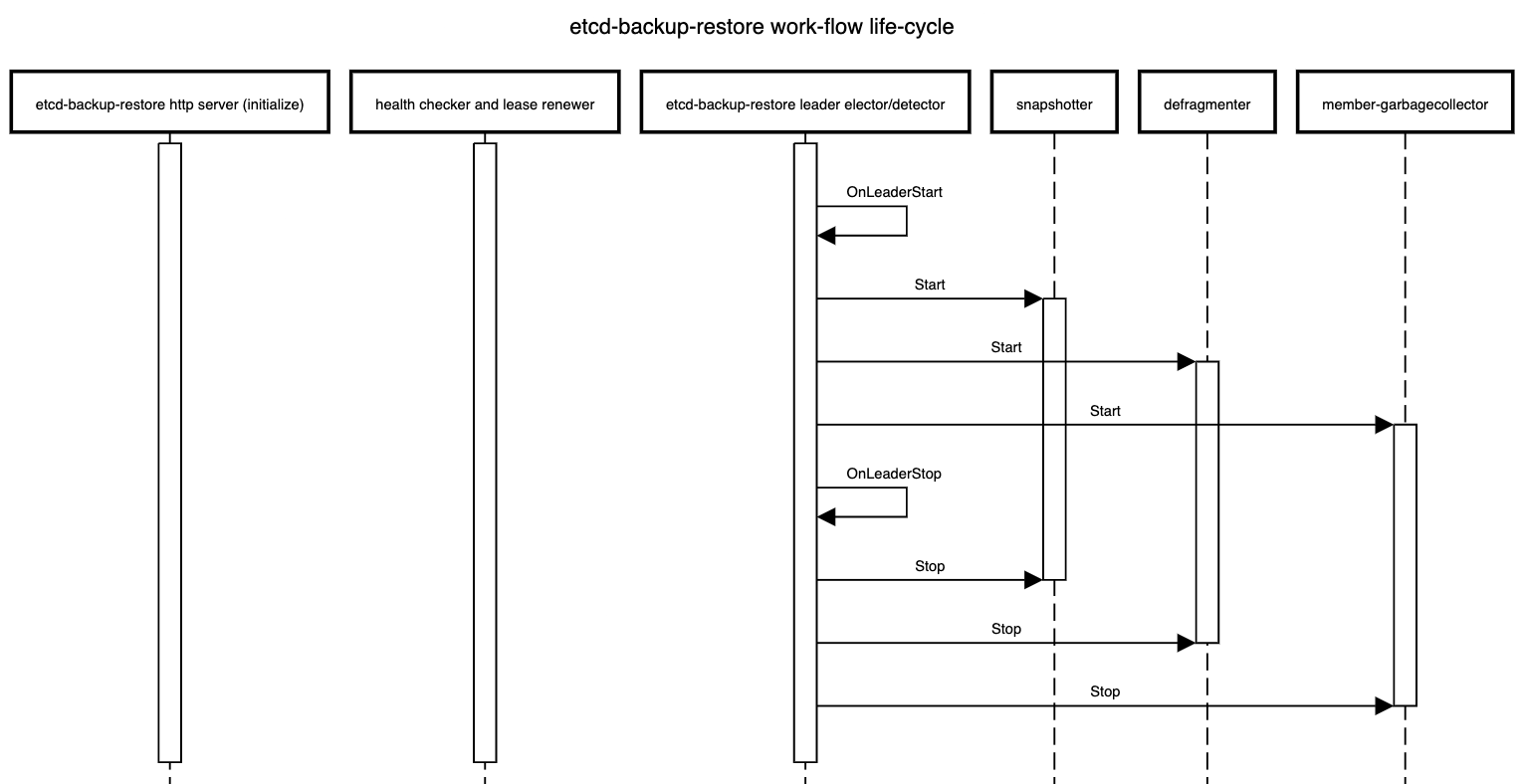

- etcd-backup-restore sidecar which currently provides the following capabilities (the list is not comprehensive):

- etcd DB validation.

- Scheduled etcd DB defragmentation.

- Backup - etcd DB snapshots are taken regularly and backed in an object store if one is configured.

- Restoration - In case of a DB corruption for a single-member cluster it helps in restoring from latest set of snapshots (full & delta).

- Member control operations.

etcd-druid additionally provides the following capabilities:

- Facilitates declarative scale-out of etcd clusters.

- Provides protection against accidental deletion/mutation of resources provisioned as part of an etcd cluster.

- Offers an asynchronous and threshold based capability to process backed up snapshots to:

- Potentially minimize the recovery time by leveraging restoration from backups followed by etcd’s compaction and defragmentation.

- Indirectly assert integrity of the backed up snapshots.

- Allows seamless copy of backups between any two object store buckets.

Start using or developing etcd-druid locally

If you are looking to try out druid then you can use a Kind cluster based setup.

https://github.com/user-attachments/assets/cfe0d891-f709-4d7f-b975-4300c6de67e4

For detailed documentation, see our docs.

Contributions

If you wish to contribute then please see our contributor guidelines.

Feedback and Support

We always look forward to active community engagement. Please report bugs or suggestions on how we can enhance etcd-druid on GitHub Issues.

License

Released under the Apache-2.0 license.

2.1 - 01 Multi Node Etcd Clusters

DEP-01: Multi-node etcd cluster instances via etcd-druid

This document proposes an approach (along with some alternatives) to support provisioning and management of multi-node etcd cluster instances via etcd-druid and etcd-backup-restore.

Goal

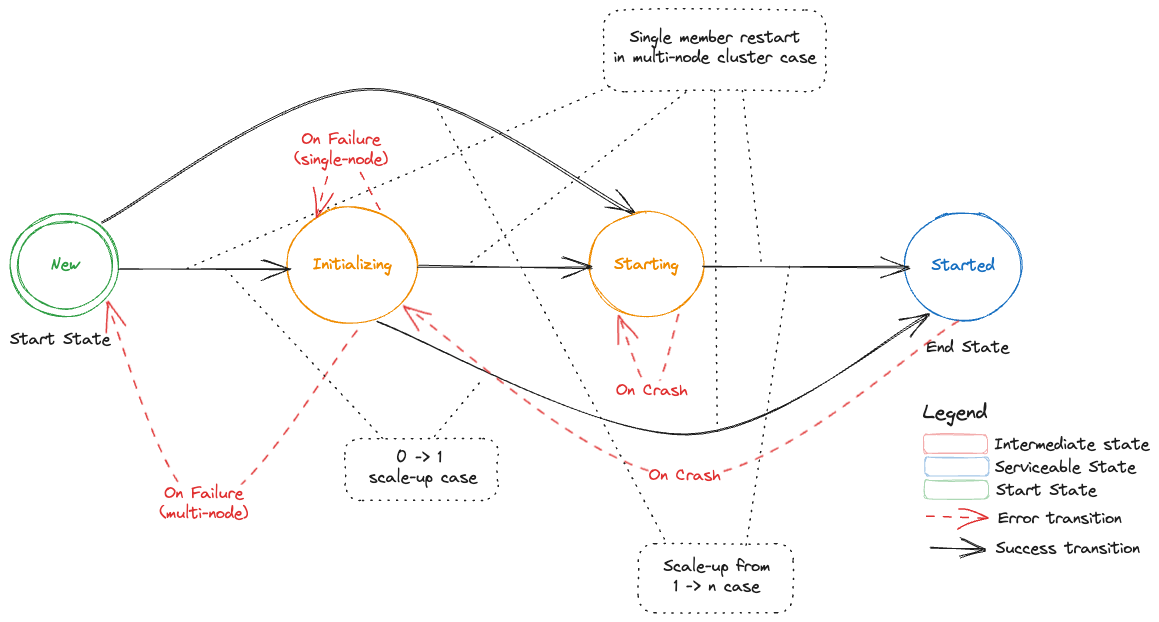

- Enhance etcd-druid and etcd-backup-restore to support provisioning and management of multi-node etcd cluster instances within a single Kubernetes cluster.

- The etcd CRD interface should be simple to use. It should preferably work with just setting the `spec.replicas` field to the desired value and should not require any more configuration in the CRD than currently required for the single-node etcd instances. The `spec.replicas` field is part of the `scale` sub-resource implementation in `Etcd` CRD.

- The single-node and multi-node scenarios must be automatically identified and managed by `etcd-druid` and `etcd-backup-restore`.

- The etcd clusters (single-node or multi-node) managed by `etcd-druid` and `etcd-backup-restore` must automatically recover from failures (even quorum loss) and disaster (e.g. etcd member persistence/data loss) as much as possible.

- It must be possible to dynamically scale an etcd cluster horizontally (even between single-node and multi-node scenarios) by simply scaling the `Etcd` scale sub-resource.

- It must be possible to (optionally) schedule the individual members of an etcd cluster on different nodes or even infrastructure availability zones (within the hosting Kubernetes cluster).

Though this proposal tries to cover most aspects related to single-node and multi-node etcd clusters, there are some more points that are not goals for this document but are still in the scope of etcd-druid, etcd-backup-restore and/or gardener. In such cases, a high-level description of how they can be addressed in the future is given at the end of the document.

Background and Motivation

Single-node etcd cluster

At present, etcd-druid supports only single-node etcd cluster instances.

The advantages of this approach are given below.

- The problem domain is smaller. There are no leader election and quorum related issues to be handled. It is simpler to setup and manage a single-node etcd cluster.

- Single-node etcd cluster instances have less request latency than multi-node etcd clusters because there is no requirement to replicate the changes to the other members before committing the changes.

- `etcd-druid` provisions etcd cluster instances as pods (actually as `statefulsets`) in a Kubernetes cluster and Kubernetes is quick (<20s) to restart container/pods if they go down.

- Also, `etcd-druid` is currently only used by gardener to provision etcd clusters to act as back-ends for Kubernetes control-planes and Kubernetes control-plane components (`kube-apiserver`, `kubelet`, `kube-controller-manager`, `kube-scheduler` etc.) can tolerate etcd going down and recover when it comes back up.

- Single-node etcd clusters incur less cost (CPU, memory and storage).

- It is easy to cut-off client requests if backups fail by using `readinessProbe` on the `etcd-backup-restore` healthz endpoint to minimize the gap between the latest revision and the backup revision.

The disadvantages of using single-node etcd clusters are given below.

- The database verification step by `etcd-backup-restore` can introduce additional delays whenever etcd container/pod restarts (in total ~20-25s). This can be much longer if a database restoration is required, especially if there are incremental snapshots that need to be replayed (this can be mitigated by compacting the incremental snapshots in the background).

- Kubernetes control-plane components can go into `CrashLoopBackOff` if etcd is down for some time. This is mitigated by the dependency-watchdog. But Kubernetes control-plane components require a lot of resources and create a lot of load on the etcd cluster and the apiserver when they come out of `CrashLoopBackOff`, especially in medium or large sized clusters (> 20 nodes).

- Maintenance operations such as updates to etcd (and updates to `etcd-druid` or `etcd-backup-restore`), rolling updates to the nodes of the underlying Kubernetes cluster and vertical scaling of etcd pods are disruptive because they cause etcd pods to be restarted. The vertical scaling of etcd pods is somewhat mitigated during scale down by doing it only during the target clusters’ maintenance window. But scale up is still disruptive.

- We currently use some form of elastic storage (via `persistentvolumeclaims`) for storing etcd data, which has some upper bounds on the I/O latency and throughput. This can potentially be a problem for large clusters (> 220 nodes). Also, some cloud providers (e.g. Azure) take a long time to attach/detach volumes to and from machines, which increases the downtime of the Kubernetes components that depend on etcd. It is difficult to use ephemeral/local storage (to achieve better latency/throughput as well as to circumvent volume attachment/detachment) for single-node etcd cluster instances.

Multi-node etcd-cluster

The advantages of introducing support for multi-node etcd clusters via etcd-druid are below.

- Multi-node etcd cluster is highly-available. It can tolerate disruption to individual etcd pods as long as the quorum is not lost (i.e. more than half the etcd member pods are healthy and ready).

- Maintenance operations such as updates to etcd (and updates to `etcd-druid` or `etcd-backup-restore`), rolling updates to the nodes of the underlying Kubernetes cluster and vertical scaling of etcd pods can be done non-disruptively by respecting `poddisruptionbudgets` for the various multi-node etcd cluster instances hosted on that cluster.

- Kubernetes control-plane components do not see any etcd cluster downtime unless quorum is lost (which is expected to be a lot less frequent than the current frequency of etcd container/pod restarts).

- We can consider using ephemeral/local storage for multi-node etcd cluster instances because individual member restarts can afford to take time to restore from backup before (re)joining the etcd cluster because the remaining members serve the requests in the meantime.

- High-availability across availability zones is also possible by specifying (anti)affinity for the etcd pods (possibly via `kupid`).

Some disadvantages of using multi-node etcd clusters due to which it might still be desirable, in some cases, to continue to use single-node etcd cluster instances in the gardener context are given below.

- Multi-node etcd cluster instances are more complex to manage. The problem domain is larger, including the following.

  - Leader election

  - Quorum loss

  - Managing rolling changes

  - Backups to be taken from only the leading member.

  - More complex to cut off client requests if backups fail, in order to keep the gap between the latest revision and the backup revision under control.

- Multi-node etcd cluster instances incur more cost (CPU, memory and storage).

Dynamic multi-node etcd cluster

Though it is not part of this proposal, it is conceivable to convert a single-node etcd cluster into a multi-node etcd cluster temporarily to perform some disruptive operation (etcd, etcd-backup-restore or etcd-druid updates, etcd cluster vertical scaling and perhaps even node rollout) and convert it back to a single-node etcd cluster once the disruptive operation has been completed. This will necessarily still involve some downtime because scaling from a single-node etcd cluster to a three-node etcd cluster will involve etcd pod restarts. However, it is still probable that it can be managed with a shorter downtime than we see at present for single-node etcd clusters (on the other hand, converting a three-node etcd cluster to a five-node etcd cluster can be non-disruptive).

This is definitely not to argue in favour of such a dynamic approach in all cases (eventually, if/when dynamic multi-node etcd clusters are supported). On the contrary, it makes sense to make use of static (fixed in size) multi-node etcd clusters for production scenarios because of the high-availability.

Prior Art

ETCD Operator from CoreOS

This project is no longer actively developed or maintained. The project exists here for historical reference. If you are interested in the future of the project and taking over stewardship, please contact etcd-dev@googlegroups.com.

etcdadm from kubernetes-sigs

etcdadm is a command-line tool for operating an etcd cluster. It makes it easy to create a new cluster, add a member to, or remove a member from an existing cluster. Its user experience is inspired by kubeadm.

It is a tool more tailored for manual command-line based management of etcd clusters with no APIs. It also makes no assumptions about the underlying platform on which the etcd clusters are provisioned and hence, doesn’t leverage any capabilities of Kubernetes.

Etcd Cluster Operator from Improbable-Engineering

Etcd Cluster Operator is an Operator for automating the creation and management of etcd inside of Kubernetes. It provides a custom resource definition (CRD) based API to define etcd clusters with Kubernetes resources, and enable management with native Kubernetes tooling.

Out of all the alternatives listed here, this one seems to be the only possible viable alternative. Parts of its design/implementations are similar to some of the approaches mentioned in this proposal. However, we still don’t propose to use it as -

- The project is still in early phase and is not mature enough to be consumed as is in productive scenarios of ours.

- The restoration part is completely different, which makes it difficult to adopt as-is and requires a lot of re-work with the current restoration semantics with etcd-backup-restore, making the usage counter-productive.

General Approach to ETCD Cluster Management

Bootstrapping

There are three ways to bootstrap an etcd cluster which are static, etcd discovery and DNS discovery. Out of these, the static way is the simplest (and probably faster to bootstrap the cluster) and has the least external dependencies. Hence, it is preferred in this proposal. But it requires that the initial (during bootstrapping) etcd cluster size (number of members) is already known before bootstrapping and that all of the members are already addressable (DNS,IP,TLS etc.). Such information needs to be passed to the individual members during startup using the following static configuration.

- ETCD_INITIAL_CLUSTER

  - The list of peer URLs including all the members. This must be the same as the advertised peer URLs configuration. This can also be passed as `initial-cluster` flag to etcd.

- ETCD_INITIAL_CLUSTER_STATE

  - This should be set to `new` while bootstrapping an etcd cluster.

- ETCD_INITIAL_CLUSTER_TOKEN

  - This is a token to distinguish the etcd cluster from any other etcd cluster in the same network.

Assumptions

- ETCD_INITIAL_CLUSTER can use DNS instead of IP addresses. We need to verify this by deleting a pod (as against scaling down the statefulset) to ensure that the pod IP changes and see if the recreated pod (by the statefulset controller) re-joins the cluster automatically.

- DNS for the individual members is known or computable. This is true in the case of etcd-druid setting up an etcd cluster using a single statefulset. But it may not necessarily be true in other cases (multiple statefulsets per etcd cluster, deployments instead of statefulsets, or an etcd cluster with members distributed across more than one Kubernetes cluster).

Adding a new member to an etcd cluster

A new member can be added to an existing etcd cluster instance using the following steps.

- If the latest backup snapshot exists, restore the member’s etcd data to the latest backup snapshot. This can reduce the load on the leader to bring the new member up to date when it joins the cluster.

- If the latest backup snapshot doesn’t exist or if the latest backup snapshot is not accessible (please see backup failure) and if the cluster itself is quorate, then the new member can be started with an empty data directory. But this will be suboptimal because the new member will fetch all the data from the leading member to get up-to-date.

- The cluster is informed that a new member is being added using the `MemberAdd` API including information like the member name and its advertised peer URLs.

- The new etcd member is then started with `ETCD_INITIAL_CLUSTER_STATE=existing` apart from other required configuration.

This proposal recommends this approach.
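The recommended steps can be sketched as a small decision function. This is an illustration under stated assumptions, not the etcd-backup-restore implementation: `clusterAPI` is a hypothetical abstraction over the etcd cluster API (only the `MemberAdd` name comes from the text above), `recordingCluster` is a stand-in used to run the sketch, and returning an error when there is neither an accessible backup nor a quorate cluster is an assumption, since the proposal does not spell out that case here.

```go
package main

import (
	"errors"
	"fmt"
)

// clusterAPI is a hypothetical abstraction over the etcd cluster API.
type clusterAPI interface {
	MemberAdd(name string, peerURLs []string) error
}

// recordingCluster is an illustrative stand-in that records added members.
type recordingCluster struct{ added []string }

func (c *recordingCluster) MemberAdd(name string, _ []string) error {
	c.added = append(c.added, name)
	return nil
}

// addMember returns the ordered steps taken to add a new member.
func addMember(c clusterAPI, name string, peerURLs []string, backupAvailable, quorate bool) ([]string, error) {
	var steps []string
	switch {
	case backupAvailable:
		// Restoring first reduces the load on the leader.
		steps = append(steps, "restore data from the latest backup snapshot")
	case quorate:
		// Suboptimal: the member fetches all data from the leader.
		steps = append(steps, "start with an empty data directory")
	default:
		// Assumption: treated as an error in this sketch.
		return nil, errors.New("no accessible backup and cluster is not quorate")
	}
	if err := c.MemberAdd(name, peerURLs); err != nil {
		return nil, fmt.Errorf("MemberAdd failed: %w", err)
	}
	steps = append(steps, "MemberAdd", "start etcd with ETCD_INITIAL_CLUSTER_STATE=existing")
	return steps, nil
}

func main() {
	cluster := &recordingCluster{}
	steps, err := addMember(cluster, "etcd-main-3", []string{"https://etcd-main-3.etcd-main-peer:2380"}, true, true)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	for i, s := range steps {
		fmt.Printf("%d. %s\n", i+1, s)
	}
}
```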

Note

- If there are incremental snapshots (taken by `etcd-backup-restore`), they cannot be applied because that requires the member to be started in isolation without joining the cluster, which is not possible. This is acceptable if the number of incremental snapshots is kept relatively small. This adds one more reason to increase the priority of the issue of incremental snapshot compaction.

- There is a time window, between the `MemberAdd` call and the new member joining the cluster and getting up to date, where the cluster is vulnerable to leader elections which could be disruptive.

Alternative

With v3.4, the new raft learner approach can be used to mitigate some of the possible disruptions mentioned above.

Then the steps will be as follows.

- If the latest backup snapshot exists, restore the member’s etcd data to the latest backup snapshot. This can reduce the load on the leader to bring the new member up to date when it joins the cluster.

- The cluster is informed that a new member is being added using the `MemberAddAsLearner` API including information like the member name and its advertised peer URLs.

- The new etcd member is then started with `ETCD_INITIAL_CLUSTER_STATE=existing` apart from other required configuration.

- Once the new member (learner) is up to date, it can be promoted to a full voting member by using the `MemberPromote` API.

This approach is new and involves more steps and is not recommended in this proposal. It can be considered in future enhancements.

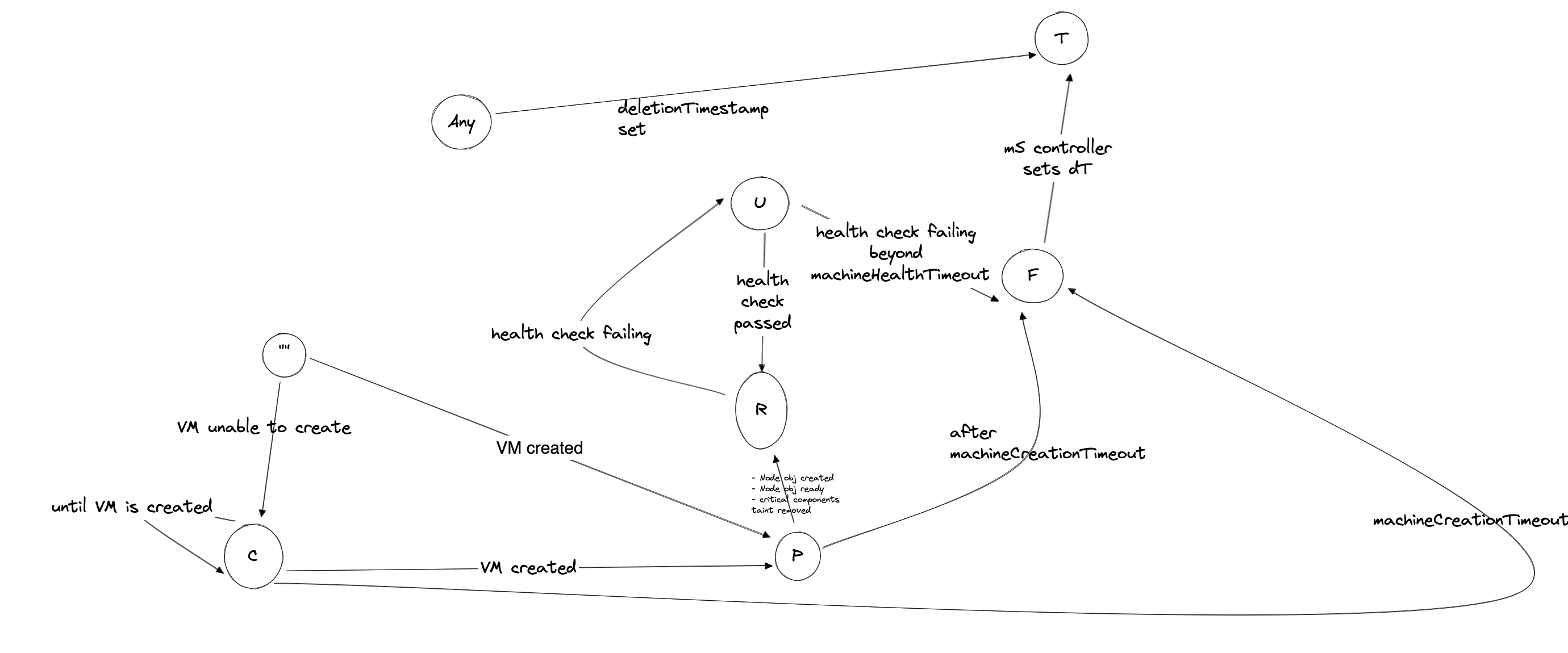

Managing Failures

A multi-node etcd cluster may face failures of different kinds during its life-cycle. The actions that need to be taken to manage these failures depend on the failure mode.

Removing an existing member from an etcd cluster

If a member of an etcd cluster becomes unhealthy, it must be explicitly removed from the etcd cluster, as soon as possible.

This can be done by using the MemberRemove API.

This ensures that only healthy members participate as voting members.

A member of an etcd cluster may be removed not just for managing failures but also for other reasons such as -

- The etcd cluster is being scaled down. I.e. the cluster size is being reduced

- An existing member is being replaced by a new one for some reason (e.g. upgrades)

If the majority of the members of the etcd cluster are healthy and the member that is unhealthy/being removed happens to be the leader at that moment then the etcd cluster will automatically elect a new leader. But if only a minority of the etcd members are healthy after removing the member then the cluster will no longer be quorate and will stop accepting write requests. Such an etcd cluster needs to be recovered via some kind of disaster-recovery.
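The quorum condition can be made concrete with a little arithmetic: a cluster of n voting members needs a majority of n/2 + 1 (integer division) healthy members to stay quorate. The helper names below are illustrative only.

```go
package main

import "fmt"

// quorum returns the minimum number of healthy voting members that a
// cluster with the given number of members needs to accept writes.
func quorum(members int) int {
	return members/2 + 1
}

// faultTolerance returns how many members can fail before quorum is lost.
func faultTolerance(members int) int {
	return members - quorum(members)
}

// isQuorate reports whether the healthy members still form a majority.
func isQuorate(members, healthy int) bool {
	return healthy >= quorum(members)
}

func main() {
	for _, n := range []int{1, 3, 4, 5} {
		fmt.Printf("members=%d quorum=%d tolerated failures=%d\n",
			n, quorum(n), faultTolerance(n))
	}
	fmt.Println("3 members, 1 healthy, quorate:", isQuorate(3, 1))
}
```

A four-member cluster tolerates no more failures than a three-member one, which is one way to see why odd cluster sizes are preferred.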

Restarting an existing member of an etcd cluster

If the existing member of an etcd cluster restarts and retains an uncorrupted data directory after the restart, then it can simply re-join the cluster as an existing member without any API calls or configuration changes. This is because the relevant metadata (including member ID and cluster ID) are maintained in the write ahead logs. However, if it doesn’t retain an uncorrupted data directory after the restart, then it must first be removed and added as a new member.

Recovering an etcd cluster from failure of majority of members

If a majority of members of an etcd cluster fail but if they retain their uncorrupted data directory then they can be simply restarted and they will re-form the existing etcd cluster when they come up. However, if they do not retain their uncorrupted data directory, then the etcd cluster must be recovered from latest snapshot in the backup. This is very similar to bootstrapping with the additional initial step of restoring the latest snapshot in each of the members. However, the same limitation about incremental snapshots, as in the case of adding a new member, applies here. But unlike in the case of adding a new member, not applying incremental snapshots is not acceptable in the case of etcd cluster recovery. Hence, if incremental snapshots are required to be applied, the etcd cluster must be recovered in the following steps.

- Restore a new single-member cluster using the latest snapshot.

- Apply incremental snapshots on the single-member cluster.

- Take a full snapshot which can now be used while adding the remaining members.

- Add new members using the latest snapshot created in the step above.

Kubernetes Context

- Users will provision an etcd cluster in a Kubernetes cluster by creating an etcd CRD resource instance.

- A multi-node etcd cluster is indicated if the `spec.replicas` field is set to any value greater than 1. The etcd-druid will add validation to ensure that the `spec.replicas` value is an odd number according to the requirements of etcd.

- The etcd-druid controller will provision a statefulset with the etcd main container and the etcd-backup-restore sidecar container. It will pass on the `spec.replicas` field from the etcd resource to the statefulset. It will also supply the right pre-computed configuration to both the containers.

- The statefulset controller will create the pods based on the pod template in the statefulset spec and these individual pods will be the members that form the etcd cluster.

This approach makes it possible to satisfy the assumption that the DNS for the individual members of the etcd cluster must be known/computable.

This can be achieved by using a headless service (along with the statefulset) for each etcd cluster instance.

Then we can address individual pods/etcd members via the predictable DNS name of <statefulset_name>-{0|1|2|3|…|n}.<headless_service_name> from within the Kubernetes namespace (or from outside the Kubernetes namespace by appending .<namespace>.svc.<cluster_domain> suffix).

The etcd-druid controller can compute the above configurations automatically based on the spec.replicas in the etcd resource.
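As a rough illustration of that computation, the sketch below derives an `ETCD_INITIAL_CLUSTER` value from the statefulset name, the headless service name and the replica count. It is not the etcd-druid implementation. The function name, the `https` scheme, the use of the pod name as the etcd member name, and the example names `etcd-main` and `etcd-main-peer` are assumptions; 2380 is etcd's default peer port.

```go
package main

import (
	"fmt"
	"strings"
)

// initialCluster builds a comma-separated list of name=peerURL entries,
// one per statefulset pod, using the predictable pod DNS names.
func initialCluster(stsName, svcName string, replicas, peerPort int) (string, error) {
	if replicas < 1 {
		return "", fmt.Errorf("replicas must be at least 1, got %d", replicas)
	}
	// Mirrors the proposed validation: multi-node clusters use odd sizes.
	if replicas > 1 && replicas%2 == 0 {
		return "", fmt.Errorf("replicas must be odd for a multi-node cluster, got %d", replicas)
	}
	entries := make([]string, 0, replicas)
	for i := 0; i < replicas; i++ {
		member := fmt.Sprintf("%s-%d", stsName, i)
		entries = append(entries,
			fmt.Sprintf("%s=https://%s.%s:%d", member, member, svcName, peerPort))
	}
	return strings.Join(entries, ","), nil
}

func main() {
	value, err := initialCluster("etcd-main", "etcd-main-peer", 3, 2380)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("ETCD_INITIAL_CLUSTER=" + value)
}
```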

This proposal recommends this approach.

Alternative

One statefulset is used for each member (instead of one statefulset for all members). While this approach gives a flexibility to have different pod specifications for the individual members, it makes managing the individual members (e.g. rolling updates) more complicated. Hence, this approach is not recommended.

ETCD Configuration

As mentioned in the general approach section, there are differences in the configuration that needs to be passed to individual members of an etcd cluster in different scenarios such as bootstrapping, adding a new member, removing a member, restarting an existing member etc. Managing such differences in configuration for individual pods of a statefulset is tricky in the recommended approach of using a single statefulset to manage all the member pods of an etcd cluster. This is because statefulset uses the same pod template for all its pods.

The recommendation is for etcd-druid to provision the base configuration template in a ConfigMap which is passed to all the pods via the pod template in the StatefulSet.

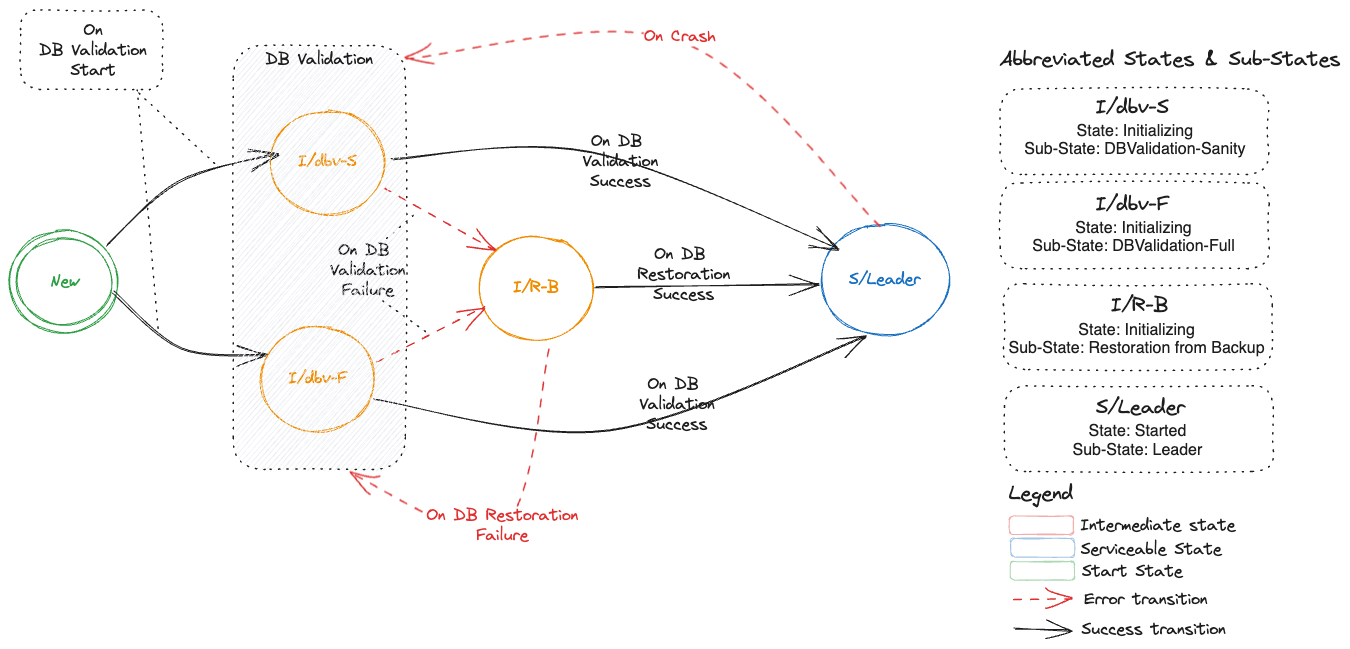

The initialization flow of etcd-backup-restore (which is invoked every time the etcd container is (re)started) is then enhanced to generate the customized etcd configuration for the corresponding member pod (in a shared volume between etcd and the backup-restore containers) based on the supplied template configuration.

This will require that etcd-backup-restore will have to have a mechanism to detect which scenario listed above applies during any given member container/pod restart.
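One possible shape for this flow is sketched below using Go's `text/template`: a shared base template is rendered into a member-specific configuration. This is only a sketch. The template contents, the `memberConfig` type and the `clusterState` helper are hypothetical, and the scenario detection is reduced to a single boolean; the keys shown (`name`, `initial-cluster`, `initial-cluster-state`, `initial-advertise-peer-urls`) are standard etcd configuration file keys.

```go
package main

import (
	"bytes"
	"fmt"
	"text/template"
)

// baseConfig is a hypothetical base template shared by all member pods.
const baseConfig = `name: {{.Name}}
initial-cluster: {{.InitialCluster}}
initial-cluster-state: {{.State}}
initial-advertise-peer-urls: {{.PeerURL}}
`

// memberConfig carries the member-specific values filled into the template.
type memberConfig struct {
	Name           string
	InitialCluster string
	State          string
	PeerURL        string
}

// clusterState maps the detected scenario to the initial cluster state:
// "new" while bootstrapping, "existing" when joining a running cluster.
func clusterState(bootstrapping bool) string {
	if bootstrapping {
		return "new"
	}
	return "existing"
}

// render produces the customized configuration for one member.
func render(cfg memberConfig) (string, error) {
	tmpl, err := template.New("etcd").Parse(baseConfig)
	if err != nil {
		return "", err
	}
	var buf bytes.Buffer
	if err := tmpl.Execute(&buf, cfg); err != nil {
		return "", err
	}
	return buf.String(), nil
}

func main() {
	out, err := render(memberConfig{
		Name:           "etcd-main-1",
		InitialCluster: "etcd-main-0=https://etcd-main-0.etcd-main-peer:2380,etcd-main-1=https://etcd-main-1.etcd-main-peer:2380",
		State:          clusterState(false),
		PeerURL:        "https://etcd-main-1.etcd-main-peer:2380",
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(out)
}
```

Keeping the template identical for all pods fits the single-statefulset approach, since only the rendering step, which runs inside each pod, needs member-specific input.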

Alternative

As mentioned above, one statefulset is used for each member of the etcd cluster.

Then different configuration (generated directly by etcd-druid) can be passed in the pod templates of the different statefulsets.

Though this approach is advantageous in the context of managing the different configuration, it is not recommended in this proposal because it makes the rest of the management (e.g. rolling updates) more complicated.

Data Persistence

The type of persistence used to store etcd data (including the member ID and cluster ID) has an impact on the steps that are needed to be taken when the member pods or containers (minority of them or majority) need to be recovered.

Persistent

Like the single-node case, persistentvolumes can be used to persist ETCD data for all the member pods. The individual member pods then get their own persistentvolumes.

The advantage is that individual members retain their member ID across pod restarts and even pod deletion/recreation across Kubernetes nodes.

This means that member pods that crash (or are unhealthy) can be restarted automatically (by configuring livenessProbe) and they will re-join the etcd cluster using their existing member ID without any need for explicit etcd cluster management.

The disadvantages of this approach are as follows.

- The number of persistentvolumes increases linearly with the cluster size which is a cost-related concern.

- Network-mounted persistentvolumes might eventually become a performance bottleneck under heavy load for a latency-sensitive component like ETCD.

- Volume attach/detach issues when associated with etcd cluster instances cause downtimes to the target shoot clusters that are backed by those etcd cluster instances.

Ephemeral

The ephemeral volumes use-case is considered as an optimization and may be planned as a follow-up action.

Disk

Ephemeral persistence can be achieved in Kubernetes by using either emptyDir volumes or local persistentvolumes to persist ETCD data.

The advantages of this approach are as follows.

- Potentially faster disk I/O.

- The number of persistent volumes does not increase linearly with the cluster size (at least not technically).

- Issues related to volume attachment/detachment can be avoided.

The main disadvantage of using ephemeral persistence is that the individual members may retain their identity and data across container restarts but not across pod deletion/recreation across Kubernetes nodes. If the data is lost then on restart of the member pod, the older member (represented by the container) has to be removed and a new member has to be added.

Using emptyDir ephemeral persistence has the disadvantage that the volume doesn’t have its own identity.

So, even if the member pod is recreated and scheduled on the same node as before, it will not retain its identity because the persisted data is lost.

But it has the advantage that scheduling of pods is unencumbered especially during pod recreation as they are free to be scheduled anywhere.

Using local persistentvolumes has the advantage that the volume has its own identity and hence, a recreated member pod will retain its identity if scheduled on the same node.

But it has the disadvantage of tying down the member pod to a node which is a problem if the node becomes unhealthy requiring etcd druid to take additional actions (such as deleting the local persistent volume).

Based on these constraints, if ephemeral persistence is opted for, it is recommended to use emptyDir ephemeral persistence.

In-memory

In-memory ephemeral persistence can be achieved in Kubernetes by using emptyDir with medium: Memory.

In this case, a tmpfs (RAM-backed file-system) volume will be used.

In addition to the advantages of ephemeral persistence, this approach can achieve the fastest possible disk I/O.

Similarly, in addition to the disadvantages of ephemeral persistence, in-memory persistence has the following additional disadvantages.

- More memory required for the individual member pods.

- Individual members do not retain their data and identity even across container restarts, let alone across pod restarts/deletion/recreation across Kubernetes nodes. I.e. every time an etcd container restarts, the old member (represented by the container) has to be removed and a new member has to be added.
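As a rough illustration of the two ephemeral variants discussed above, the following sketch builds the `emptyDir` volume entry of a pod spec as a plain Python dictionary. The helper name `etcd_data_volume` and the volume name are hypothetical; `medium: Memory` and `sizeLimit` are regular `emptyDir` fields in Kubernetes.

```python
from typing import Optional


def etcd_data_volume(name: str, in_memory: bool, size_limit: Optional[str] = None) -> dict:
    """Build an emptyDir volume entry for a pod spec (hypothetical helper)."""
    empty_dir: dict = {}
    if in_memory:
        # medium: Memory makes Kubernetes back the volume with tmpfs (RAM).
        empty_dir["medium"] = "Memory"
    if size_limit is not None:
        # Optional cap; for tmpfs this also bounds the memory used by the volume.
        empty_dir["sizeLimit"] = size_limit
    return {"name": name, "emptyDir": empty_dir}


if __name__ == "__main__":
    print(etcd_data_volume("etcd-data", in_memory=False))
    print(etcd_data_volume("etcd-data", in_memory=True, size_limit="2Gi"))
```

Setting a size limit for the in-memory variant is a design choice assumed here, since data written to a tmpfs volume counts against the memory of the member pod.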

How to detect if valid metadata exists in an etcd member

Since a member is much more likely to lack valid metadata in the WAL files in the ephemeral persistence scenario, one option is to pass the information that ephemeral persistence is being used to the etcd-backup-restore sidecar (say, via command-line flags or environment variables).

But in principle, it might be better to determine this from the WAL files directly so that the possibility of corrupted WAL files also gets handled correctly. To do this, the wal package has some functions that might be useful.

Recommendation

Using the wal package to verify whether valid metadata exists might be performance intensive. So, the performance impact needs to be measured. If the performance impact is acceptable (both in terms of resource usage and time), it is recommended to use this way to verify if the member contains valid metadata. Otherwise, alternatives such as a simple check that the WAL folder exists, coupled with the static information about the use of persistent or ephemeral storage, might be considered.
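The simpler fallback mentioned above (a check that the WAL folder exists, combined with static knowledge of the storage type) could look roughly like the sketch below. It assumes the usual etcd data directory layout with WAL files under `member/wal`; the function names and the three result labels are hypothetical and only illustrate how the two pieces of information might be combined. It does not detect corrupted WAL files.

```python
from pathlib import Path


def has_wal_files(data_dir: str) -> bool:
    """Return True if <data_dir>/member/wal exists and holds at least one .wal file."""
    wal_dir = Path(data_dir) / "member" / "wal"
    return wal_dir.is_dir() and any(wal_dir.glob("*.wal"))


def classify_member_metadata(data_dir: str, ephemeral_storage: bool) -> str:
    """Combine the WAL folder check with the statically known storage type.

    Result labels (hypothetical):
      "present"           - WAL files exist, so the member probably has metadata
      "absent-expected"   - no WAL files on ephemeral storage (treat as a new member)
      "absent-unexpected" - no WAL files on persistent storage (worth investigating)
    """
    if has_wal_files(data_dir):
        return "present"
    return "absent-expected" if ephemeral_storage else "absent-unexpected"
```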

How to detect if valid data exists in an etcd member

The initialization sequence in etcd-backup-restore already includes database verification.

This would suffice to determine if the member has valid data.

Recommendation

Though ephemeral persistence has performance and logistics advantages, it is recommended to start with persistent data for the member pods. In addition to the reasons and concerns listed above, there is also the additional concern that in case of backup failure, the risk of additional data loss is a bit higher if ephemeral persistence is used (simultaneous quorum loss is sufficient) when compared to persistent storage (simultaneous quorum loss with majority persistence loss is needed). The risk might still be acceptable but the idea is to gain experience about how frequently member containers/pods get restarted/recreated, how frequently leader election happens among members of an etcd cluster and how frequently etcd clusters lose quorum. Based on this experience, we can move towards using ephemeral (perhaps even in-memory) persistence for the member pods.

Separating peer and client traffic

The current single-node ETCD cluster implementation in etcd-druid and etcd-backup-restore uses a single service object to act as the entry point for the client traffic.

There is no separation or distinction between the client and peer traffic because there is not much benefit to be had by making that distinction.

In the multi-node ETCD cluster scenario, it makes sense to distinguish between and separate the peer and client traffic.

This can be done by using two services.

- peer

  - To be used for peer communication. This could be a headless service.

- client

  - To be used for client communication. This could be a normal ClusterIP service like it is in the single-node case.

The main advantage of this approach is that it makes it possible (if needed) to allow only peer to peer communication while blocking client communication. Such a thing might be required during some phases of some maintenance tasks (manual or automated).
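A minimal sketch of the two services, written as Python dictionaries that mirror the Service manifests, is shown below. The service names, the label set and the helper name are hypothetical; ports 2379 (client) and 2380 (peer) are the etcd defaults, and `clusterIP: None` is what makes a service headless.

```python
def etcd_services(name: str, pod_labels: dict) -> tuple:
    """Return (peer_service, client_service) manifests for an etcd cluster (illustrative only)."""
    peer = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{name}-peer"},
        "spec": {
            "clusterIP": "None",  # headless: DNS resolves directly to the member pods
            "selector": dict(pod_labels),
            "ports": [{"name": "peer", "port": 2380, "targetPort": 2380}],
        },
    }
    client = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{name}-client"},
        "spec": {
            "type": "ClusterIP",  # normal virtual-IP service, as in the single-node case
            "selector": dict(pod_labels),
            "ports": [{"name": "client", "port": 2379, "targetPort": 2379}],
        },
    }
    return peer, client


if __name__ == "__main__":
    peer_svc, client_svc = etcd_services("etcd-main", {"instance": "etcd-main"})
    print(peer_svc["metadata"]["name"], client_svc["metadata"]["name"])
```

Because each service carries its own copy of the selector, one of them can later be changed without affecting the other.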

Cutting off client requests

At present, in the single-node ETCD instances, etcd-druid configures the readinessProbe of the etcd main container to probe the healthz endpoint of the etcd-backup-restore sidecar which considers the status of the latest backup upload in addition to the regular checks about etcd and the sidecar being up and healthy. This has the effect of setting the etcd main container (and hence the etcd pod) as not ready if the latest backup upload failed. This results in the endpoints controller removing the pod IP address from the endpoints list for the service which eventually cuts off ingress traffic coming into the etcd pod via the etcd client service. The rationale for this is to fail early when the backup upload fails rather than continuing to serve requests while the gap between the last backup and the current data increases, which might lead to an unacceptably large amount of data loss if disaster strikes.

This approach will not work in the multi-node scenario because we need the individual member pods to be able to talk to each other to maintain the cluster quorum when backup upload fails but need to cut off only client ingress traffic.

It is recommended to separate the backup health condition tracking from taking appropriate remedial actions.

With that, the backup health condition tracking is now separated to the BackupReady condition in the Etcd resource status and the cutting off of client traffic (which could now be done for more reasons than failed backups) can be achieved in a different way described below.

Manipulating Client Service podSelector

The client traffic can be cut off by updating (manually or automatically by some component) the podSelector of the client service to add an additional label (say, unhealthy or disabled) such that the podSelector no longer matches the member pods created by the statefulset.

This will result in the client ingress traffic being cut off.

The peer service is left unmodified so that peer communication is always possible.
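The effect of the selector change can be sketched as follows. The helper names and the `disabled: "true"` label are hypothetical (the text above only suggests a label such as unhealthy or disabled); the matching rule is the standard equality-based one, where every key/value pair in the selector must be present in the pod labels.

```python
import copy


def selector_matches(selector: dict, pod_labels: dict) -> bool:
    """Equality-based matching: every selector entry must be present in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


def cut_off_client_traffic(service: dict, key: str = "disabled", value: str = "true") -> dict:
    """Return a copy of the service whose selector carries an extra label no member pod has."""
    updated = copy.deepcopy(service)
    updated["spec"]["selector"][key] = value
    return updated


if __name__ == "__main__":
    member_pod_labels = {"instance": "etcd-main", "name": "etcd"}
    client_service = {"spec": {"selector": {"instance": "etcd-main"}}}
    peer_service = {"spec": {"selector": {"instance": "etcd-main"}}}

    blocked = cut_off_client_traffic(client_service)
    print(selector_matches(blocked["spec"]["selector"], member_pod_labels))       # client: no match
    print(selector_matches(peer_service["spec"]["selector"], member_pod_labels))  # peer: still matches
```

Restoring client traffic would then amount to removing the extra label from the selector again.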

Health Check

The etcd main container and the etcd-backup-restore sidecar containers will be configured with livenessProbe and readinessProbe which will indicate the health of the containers and effectively the corresponding ETCD cluster member pod.

Backup Failure

As described above, using readinessProbe failures based on latest backup failure is not viable in the multi-node ETCD scenario.

Though cutting off traffic by manipulating client service podSelector is workable, it may not be desirable.

It is recommended that on backup failure, the leading etcd-backup-restore sidecar (the one that is responsible for taking backups at that point in time, as explained in the backup section below) updates the BackupReady condition in the Etcd status and raises a high priority alert to the landscape operators but does not cut off the client traffic.

The reasoning behind this decision to not cut off the client traffic on backup failure is to allow the Kubernetes cluster’s control plane (which relies on the ETCD cluster) to keep functioning as long as possible and to avoid bringing down the control-plane due to a missed backup.

The risk of this approach is that with a cascaded sequence of failures (on top of the backup failure), there is a chance of more data loss than the frequency of backup would otherwise indicate.

To be precise, the risk of such an additional data loss manifests only when backup failure as well as a special case of quorum loss (majority of the members are not ready) happen in such a way that the ETCD cluster needs to be re-bootstrapped from the backup. As described here, re-bootstrapping the ETCD cluster requires restoration from the latest backup only when a majority of members no longer have uncorrupted data persistence.

If persistent storage is used, this will happen only when backup failure as well as a majority of the disks/volumes backing the ETCD cluster members fail simultaneously. This would indeed be rare and might be an acceptable risk.

If ephemeral storage is used (especially, in-memory), the data loss will happen if a majority of the ETCD cluster members become NotReady (requiring a pod restart) at the same time as the backup failure.

This may not be as rare as majority members’ disk/volume failure.

The risk can be somewhat mitigated, at least for planned maintenance operations, by postponing potentially disruptive maintenance operations (vertical scaling, rolling updates, evictions due to node roll-outs) when the BackupReady condition is false.

But in practice (when ephemeral storage is used), the current proposal suggests restoring from the latest full backup even when a minority of ETCD members (even a single pod) restart, both to speed up the process of the new member catching up to the latest revision and to avoid load on the leading member, which needs to supply the data to bring the new member up-to-date. But as described here, in case of a minority member failure while using ephemeral storage, it is possible to restart the new member with empty data and let it fetch all the data from the leading member (only if backup is not accessible). Though this is suboptimal, it is workable given the constraints and conditions. With this, the risk of additional data loss in the case of ephemeral storage arises only if backup failure and quorum loss happen together. While this is still less rare than the risk of additional data loss in case of persistent storage, the risk might be tolerable, provided the risk of quorum loss is not too high. This needs to be monitored/evaluated before opting for ephemeral storage.
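The condition under which additional data loss can occur can be written down as a small sketch. The function names are hypothetical; the logic only restates the reasoning above: restoration from backup is needed when a majority of members have lost their data, and the additional loss materializes only if the backup is unhealthy at that same time.

```python
def quorum(cluster_size: int) -> int:
    """Smallest number of members that forms a majority."""
    return cluster_size // 2 + 1


def needs_restore_from_backup(cluster_size: int, members_with_valid_data: int) -> bool:
    """True when a majority of members no longer hold uncorrupted data."""
    if not 0 <= members_with_valid_data <= cluster_size:
        raise ValueError("members_with_valid_data must be between 0 and cluster_size")
    members_without_data = cluster_size - members_with_valid_data
    return members_without_data >= quorum(cluster_size)


def risks_additional_data_loss(cluster_size: int, members_with_valid_data: int, backup_ready: bool) -> bool:
    """Additional data loss needs both a failed backup and a restore-from-backup situation."""
    return (not backup_ready) and needs_restore_from_backup(cluster_size, members_with_valid_data)


if __name__ == "__main__":
    # Three-member cluster: losing one member's data is recoverable from the peers.
    print(needs_restore_from_backup(3, 2))
    # Losing two members' data while the backup is failing is the risky case.
    print(risks_additional_data_loss(3, 1, backup_ready=False))
```

With persistent storage, `members_with_valid_data` drops only on disk/volume failure; with ephemeral (especially in-memory) storage, a pod restart is enough, which is why the same condition is reached more easily.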

Given these constraints, it is better to dynamically avoid/postpone some potentially disruptive operations when BackupReady condition is false.

This has the effect of allowing n/2 members to be evicted when the backups are healthy and completely disabling evictions when backups are not healthy.

- Skip/postpone potentially disruptive maintenance operations (listed below) when the BackupReady condition is false.

  - Vertical scaling.

  - Rolling updates. Basically, any updates to the StatefulSet spec which includes vertical scaling.

- Dynamically toggle the minAvailable field of the PodDisruptionBudget between n/2 + 1 and n (where n is the ETCD desired cluster size) whenever the BackupReady condition toggles between true and false.
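The toggling of minAvailable can be illustrated with a short sketch. The function names are hypothetical, and n/2 is taken to mean integer division, which is an assumption consistent with a quorum of n/2 + 1.

```python
def pdb_min_available(cluster_size: int, backup_ready: bool) -> int:
    """minAvailable for the PodDisruptionBudget, depending on the BackupReady condition."""
    if backup_ready:
        return cluster_size // 2 + 1  # keep quorum; the remaining members may be evicted
    return cluster_size               # backups unhealthy: disable evictions completely


def allowed_evictions(cluster_size: int, backup_ready: bool) -> int:
    """Number of member pods that may be voluntarily evicted at the same time."""
    return cluster_size - pdb_min_available(cluster_size, backup_ready)


if __name__ == "__main__":
    for n in (3, 5):
        for ready in (True, False):
            print(n, ready, pdb_min_available(n, ready), allowed_evictions(n, ready))
```

For a three-member cluster this gives minAvailable 2 (one eviction allowed) while backups are healthy and minAvailable 3 (no evictions) while they are not.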

This will mean that etcd-backup-restore becomes Kubernetes-aware. But there might be reasons for making etcd-backup-restore Kubernetes-aware anyway (e.g. to update the etcd resource status with latest full snapshot details).

This enhancement should keep etcd-backup-restore backward compatible.

I.e. it should be possible to keep using etcd-backup-restore in a Kubernetes-unaware mode, as before this proposal.

This is possible either by auto-detecting the existence of kubeconfig or by an explicit command-line flag (such as --enable-client-service-updates which can be defaulted to false for backward compatibility).
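Both options can be sketched side by side. This is only an illustration in Python (not the actual etcd-backup-restore command line): the flag name is the example used above, and the auto-detection rule (a `KUBECONFIG` environment variable or the standard in-cluster service account token path) is an assumption about what "existence of kubeconfig" could mean.

```python
import argparse
import os

# Standard path at which Kubernetes mounts the service account token into a pod.
SERVICE_ACCOUNT_TOKEN = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_parser() -> argparse.ArgumentParser:
    """Option 1: an explicit opt-in flag that defaults to false for backward compatibility."""
    parser = argparse.ArgumentParser(prog="backup-restore-sketch")
    parser.add_argument(
        "--enable-client-service-updates",
        action="store_true",
        default=False,
        help="allow the sidecar to talk to the Kubernetes API (off by default)",
    )
    return parser


def kubeconfig_available(env, path_exists=os.path.exists) -> bool:
    """Option 2: auto-detect whether Kubernetes credentials are available."""
    return bool(env.get("KUBECONFIG")) or path_exists(SERVICE_ACCOUNT_TOKEN)


if __name__ == "__main__":
    args = build_parser().parse_args()
    print("explicit flag:", args.enable_client_service_updates)
    print("auto-detected:", kubeconfig_available(os.environ))
```

An explicit flag keeps the old behaviour unless the operator opts in, whereas auto-detection changes behaviour as soon as credentials happen to be mounted.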

Alternative

The alternative is for etcd-druid to implement the above functionality.

But etcd-druid is centrally deployed in the host Kubernetes cluster and cannot scale well horizontally.

So, it can potentially be a bottleneck if it is involved in regular health check mechanism for all the etcd clusters it manages.

Also, the recommended approach above is more robust because it can work even if etcd-druid is down when the backup upload of a particular etcd cluster fails.

Status

It is desirable (for the etcd-druid and landscape administrators/operators) to maintain/expose status of the etcd cluster instances in the status sub-resource of the Etcd CRD.

The proposed structure for maintaining the status is as shown in the example below.