Machine Controller Manager

Declarative way of managing machines for Kubernetes cluster

machine-controller-manager

Note

One can add support for a new cloud provider by following Adding support for new provider.

Overview

Machine Controller Manager aka MCM is a group of cooperative controllers that manage the lifecycle of the worker machines. It is inspired by the design of Kube Controller Manager in which various sub controllers manage their respective Kubernetes Clients. MCM gives you the following benefits:

- seamlessly manage machines/nodes with a declarative API (of course, across different cloud providers)

- integrate generically with the cluster autoscaler

- plugin with tools such as the node-problem-detector

- transport the immutability design principle to machine/nodes

- implement e.g. rolling upgrades of machines/nodes

MCM supports following providers. These provider code is maintained externally (out-of-tree), and the links for the same are linked below:

It can easily be extended to support other cloud providers as well.

Example of managing machine:

kubectl create/get/delete machine vm1

Key terminologies

Nodes/Machines/VMs are different terminologies used to represent similar things. We use these terms in the following way

- VM: A virtual machine running on any cloud provider. It could also refer to a physical machine (PM) in case of a bare metal setup.

- Node: Native kubernetes node objects. The objects you get to see when you do a “kubectl get nodes”. Although nodes can be either physical/virtual machines, for the purposes of our discussions it refers to a VM.

- Machine: A VM that is provisioned/managed by the Machine Controller Manager.

Design of Machine Controller Manager

The design of the Machine Controller Manager is influenced by the Kube Controller Manager, where-in multiple sub-controllers are used to manage the Kubernetes clients.

Design Principles

It’s designed to run in the master plane of a Kubernetes cluster. It follows the best principles and practices of writing controllers, including, but not limited to:

- Reusing code from kube-controller-manager

- leader election to allow HA deployments of the controller

workqueues and multiple thread-workersSharedInformers that limit to minimum network calls, de-serialization and provide helpful create/update/delete events for resources- rate-limiting to allow back-off in case of network outages and general instability of other cluster components

- sending events to respected resources for easy debugging and overview

- Prometheus metrics, health and (optional) profiling endpoints

Objects of Machine Controller Manager

Machine Controller Manager reconciles a set of Custom Resources namely MachineDeployment, MachineSet and Machines which are managed & monitored by their controllers MachineDeployment Controller, MachineSet Controller, Machine Controller respectively along with another cooperative controller called the Safety Controller.

Machine Controller Manager makes use of 4 CRD objects and 1 Kubernetes secret object to manage machines. They are as follows:

| Custom ResourceObject | Description |

|---|

MachineClass | A MachineClass represents a template that contains cloud provider specific details used to create machines. |

Machine | A Machine represents a VM which is backed by the cloud provider. |

MachineSet | A MachineSet ensures that the specified number of Machine replicas are running at a given point of time. |

MachineDeployment | A MachineDeployment provides a declarative update for MachineSet and Machines. |

Secret | A Secret here is a Kubernetes secret that stores cloudconfig (initialization scripts used to create VMs) and cloud specific credentials. |

See here for CRD API Documentation

Components of Machine Controller Manager

| Controller | Description |

|---|

| MachineDeployment controller | Machine Deployment controller reconciles the MachineDeployment objects and manages the lifecycle of MachineSet objects. MachineDeployment consumes provider specific MachineClass in its spec.template.spec which is the template of the VM spec that would be spawned on the cloud by MCM. |

| MachineSet controller | MachineSet controller reconciles the MachineSet objects and manages the lifecycle of Machine objects. |

| Safety controller | There is a Safety Controller responsible for handling the unidentified or unknown behaviours from the cloud providers. Safety Controller:- freezes the MachineDeployment controller and MachineSet controller if the number of

Machine objects goes beyond a certain threshold on top of Spec.replicas. It can be configured by the flag --safety-up or --safety-down and also --machine-safety-overshooting-period. - freezes the functionality of the MCM if either of the

target-apiserver or the control-apiserver is not reachable. - unfreezes the MCM automatically once situation is resolved to normal. A

freeze label is applied on MachineDeployment/MachineSet to enforce the freeze condition.

|

Along with the above Custom Controllers and Resources, MCM requires the MachineClass to use K8s Secret that stores cloudconfig (initialization scripts used to create VMs) and cloud specific credentials. All these controllers work in an co-operative manner. They form a parent-child relationship with MachineDeployment Controller being the grandparent, MachineSet Controller being the parent, and Machine Controller being the child.

Development

To start using or developing the Machine Controller Manager, see the documentation in the /docs repository.

FAQ

An FAQ is available here.

cluster-api Implementation

1 - Documents

1.1 - Apis

Specification

ProviderSpec Schema

Machine

Machine is the representation of a physical or virtual machine.

| Field | Type | Description |

|---|

apiVersion | string | machine.sapcloud.io/v1alpha1 |

kind | string | Machine |

metadata | Kubernetes meta/v1.ObjectMeta | ObjectMeta for machine object Refer to the Kubernetes API documentation for the fields of the

metadata field. |

spec | MachineSpec | Spec contains the specification of the machine

class | ClassSpec | (Optional) Class contains the machineclass attributes of a machine | providerID | string | (Optional) ProviderID represents the provider’s unique ID given to a machine | nodeTemplate | NodeTemplateSpec | (Optional) NodeTemplateSpec describes the data a node should have when created from a template | MachineConfiguration | MachineConfiguration | (Members of MachineConfiguration are embedded into this type.) (Optional)Configuration for the machine-controller. |

|

status | MachineStatus | Status contains fields depicting the status |

MachineClass

MachineClass can be used to templatize and re-use provider configuration

across multiple Machines / MachineSets / MachineDeployments.

| Field | Type | Description |

|---|

apiVersion | string | machine.sapcloud.io/v1alpha1 |

kind | string | MachineClass |

metadata | Kubernetes meta/v1.ObjectMeta | (Optional)

Refer to the Kubernetes API documentation for the fields of the

metadata field. |

nodeTemplate | NodeTemplate | (Optional) NodeTemplate contains subfields to track all node resources and other node info required to scale nodegroup from zero |

credentialsSecretRef | Kubernetes core/v1.SecretReference | CredentialsSecretRef can optionally store the credentials (in this case the SecretRef does not need to store them).

This might be useful if multiple machine classes with the same credentials but different user-datas are used. |

providerSpec | k8s.io/apimachinery/pkg/runtime.RawExtension | Provider-specific configuration to use during node creation. |

provider | string | Provider is the combination of name and location of cloud-specific drivers. |

secretRef | Kubernetes core/v1.SecretReference | SecretRef stores the necessary secrets such as credentials or userdata. |

MachineDeployment

MachineDeployment enables declarative updates for machines and MachineSets.

| Field | Type | Description |

|---|

apiVersion | string | machine.sapcloud.io/v1alpha1 |

kind | string | MachineDeployment |

metadata | Kubernetes meta/v1.ObjectMeta | (Optional) Standard object metadata. Refer to the Kubernetes API documentation for the fields of the

metadata field. |

spec | MachineDeploymentSpec | (Optional) Specification of the desired behavior of the MachineDeployment.

replicas | int32 | (Optional) Number of desired machines. This is a pointer to distinguish between explicit

zero and not specified. Defaults to 0. | selector | Kubernetes meta/v1.LabelSelector | (Optional) Label selector for machines. Existing MachineSets whose machines are

selected by this will be the ones affected by this MachineDeployment. | template | MachineTemplateSpec | Template describes the machines that will be created. | strategy | MachineDeploymentStrategy | (Optional) The MachineDeployment strategy to use to replace existing machines with new ones. | minReadySeconds | int32 | (Optional) Minimum number of seconds for which a newly created machine should be ready

without any of its container crashing, for it to be considered available.

Defaults to 0 (machine will be considered available as soon as it is ready) | revisionHistoryLimit | *int32 | (Optional) The number of old MachineSets to retain to allow rollback.

This is a pointer to distinguish between explicit zero and not specified. | paused | bool | (Optional) Indicates that the MachineDeployment is paused and will not be processed by the

MachineDeployment controller. | rollbackTo | RollbackConfig | (Optional) DEPRECATED.

The config this MachineDeployment is rolling back to. Will be cleared after rollback is done. | progressDeadlineSeconds | *int32 | (Optional) The maximum time in seconds for a MachineDeployment to make progress before it

is considered to be failed. The MachineDeployment controller will continue to

process failed MachineDeployments and a condition with a ProgressDeadlineExceeded

reason will be surfaced in the MachineDeployment status. Note that progress will

not be estimated during the time a MachineDeployment is paused. This is not set

by default, which is treated as infinite deadline. |

|

status | MachineDeploymentStatus | (Optional) Most recently observed status of the MachineDeployment. |

MachineSet

MachineSet TODO

ClassSpec

(Appears on:

MachineSetSpec,

MachineSpec)

ClassSpec is the class specification of machine

| Field | Type | Description |

|---|

apiGroup | string | API group to which it belongs |

kind | string | Kind for machine class |

name | string | Name of machine class |

ConditionStatus

(string alias)

(Appears on:

MachineDeploymentCondition,

MachineSetCondition)

ConditionStatus are valid condition statuses

CurrentStatus

(Appears on:

MachineStatus)

CurrentStatus contains information about the current status of Machine.

InPlaceUpdateMachineDeployment

(Appears on:

MachineDeploymentStrategy)

InPlaceUpdateMachineDeployment specifies the spec to control the desired behavior of inplace update.

| Field | Type | Description |

|---|

UpdateConfiguration | UpdateConfiguration | (Members of UpdateConfiguration are embedded into this type.) |

orchestrationType | OrchestrationType | OrchestrationType specifies the orchestration type for the inplace update. |

LastOperation

(Appears on:

MachineSetStatus,

MachineStatus,

MachineSummary)

LastOperation suggests the last operation performed on the object

| Field | Type | Description |

|---|

description | string | Description of the current operation |

errorCode | string | (Optional) ErrorCode of the current operation if any |

lastUpdateTime | Kubernetes meta/v1.Time | Last update time of current operation |

state | MachineState | State of operation |

type | MachineOperationType | Type of operation |

MachineConfiguration

(Appears on:

MachineSpec)

MachineConfiguration describes the configurations useful for the machine-controller.

| Field | Type | Description |

|---|

drainTimeout | Kubernetes meta/v1.Duration | (Optional) MachineDraintimeout is the timeout after which machine is forcefully deleted. |

healthTimeout | Kubernetes meta/v1.Duration | (Optional) MachineHealthTimeout is the timeout after which machine is declared unhealhty/failed. |

creationTimeout | Kubernetes meta/v1.Duration | (Optional) MachineCreationTimeout is the timeout after which machinie creation is declared failed. |

inPlaceUpdateTimeout | Kubernetes meta/v1.Duration | (Optional) MachineInPlaceUpdateTimeout is the timeout after which in-place update is declared failed. |

disableHealthTimeout | *bool | (Optional) DisableHealthTimeout if set to true, health timeout will be ignored. Leading to machine never being declared failed. |

maxEvictRetries | *int32 | (Optional) MaxEvictRetries is the number of retries that will be attempted while draining the node. |

nodeConditions | *string | (Optional) NodeConditions are the set of conditions if set to true for MachineHealthTimeOut, machine will be declared failed. |

MachineDeploymentCondition

(Appears on:

MachineDeploymentStatus)

MachineDeploymentCondition describes the state of a MachineDeployment at a certain point.

| Field | Type | Description |

|---|

type | MachineDeploymentConditionType | Type of MachineDeployment condition. |

status | ConditionStatus | Status of the condition, one of True, False, Unknown. |

lastUpdateTime | Kubernetes meta/v1.Time | The last time this condition was updated. |

lastTransitionTime | Kubernetes meta/v1.Time | Last time the condition transitioned from one status to another. |

reason | string | The reason for the condition’s last transition. |

message | string | A human readable message indicating details about the transition. |

MachineDeploymentConditionType

(string alias)

(Appears on:

MachineDeploymentCondition)

MachineDeploymentConditionType are valid conditions of MachineDeployments

MachineDeploymentSpec

(Appears on:

MachineDeployment)

MachineDeploymentSpec is the specification of the desired behavior of the MachineDeployment.

| Field | Type | Description |

|---|

replicas | int32 | (Optional) Number of desired machines. This is a pointer to distinguish between explicit

zero and not specified. Defaults to 0. |

selector | Kubernetes meta/v1.LabelSelector | (Optional) Label selector for machines. Existing MachineSets whose machines are

selected by this will be the ones affected by this MachineDeployment. |

template | MachineTemplateSpec | Template describes the machines that will be created. |

strategy | MachineDeploymentStrategy | (Optional) The MachineDeployment strategy to use to replace existing machines with new ones. |

minReadySeconds | int32 | (Optional) Minimum number of seconds for which a newly created machine should be ready

without any of its container crashing, for it to be considered available.

Defaults to 0 (machine will be considered available as soon as it is ready) |

revisionHistoryLimit | *int32 | (Optional) The number of old MachineSets to retain to allow rollback.

This is a pointer to distinguish between explicit zero and not specified. |

paused | bool | (Optional) Indicates that the MachineDeployment is paused and will not be processed by the

MachineDeployment controller. |

rollbackTo | RollbackConfig | (Optional) DEPRECATED.

The config this MachineDeployment is rolling back to. Will be cleared after rollback is done. |

progressDeadlineSeconds | *int32 | (Optional) The maximum time in seconds for a MachineDeployment to make progress before it

is considered to be failed. The MachineDeployment controller will continue to

process failed MachineDeployments and a condition with a ProgressDeadlineExceeded

reason will be surfaced in the MachineDeployment status. Note that progress will

not be estimated during the time a MachineDeployment is paused. This is not set

by default, which is treated as infinite deadline. |

MachineDeploymentStatus

(Appears on:

MachineDeployment)

MachineDeploymentStatus is the most recently observed status of the MachineDeployment.

| Field | Type | Description |

|---|

observedGeneration | int64 | (Optional) The generation observed by the MachineDeployment controller. |

replicas | int32 | (Optional) Total number of non-terminated machines targeted by this MachineDeployment (their labels match the selector). |

updatedReplicas | int32 | (Optional) Total number of non-terminated machines targeted by this MachineDeployment that have the desired template spec. |

readyReplicas | int32 | (Optional) Total number of ready machines targeted by this MachineDeployment. |

availableReplicas | int32 | (Optional) Total number of available machines (ready for at least minReadySeconds) targeted by this MachineDeployment. |

unavailableReplicas | int32 | (Optional) Total number of unavailable machines targeted by this MachineDeployment. This is the total number of

machines that are still required for the MachineDeployment to have 100% available capacity. They may

either be machines that are running but not yet available or machines that still have not been created. |

conditions | []MachineDeploymentCondition | Represents the latest available observations of a MachineDeployment’s current state. |

collisionCount | *int32 | (Optional) Count of hash collisions for the MachineDeployment. The MachineDeployment controller uses this

field as a collision avoidance mechanism when it needs to create the name for the

newest MachineSet. |

failedMachines | []*github.com/gardener/machine-controller-manager/pkg/apis/machine/v1alpha1.MachineSummary | (Optional) FailedMachines has summary of machines on which lastOperation Failed |

MachineDeploymentStrategy

(Appears on:

MachineDeploymentSpec)

MachineDeploymentStrategy describes how to replace existing machines with new ones.

| Field | Type | Description |

|---|

type | MachineDeploymentStrategyType | (Optional) Type of MachineDeployment. Can be “Recreate” or “RollingUpdate”. Default is RollingUpdate. |

rollingUpdate | RollingUpdateMachineDeployment | (Optional) Rolling update config params. Present only if MachineDeploymentStrategyType = RollingUpdate.TODO: Update this to follow our convention for oneOf, whatever we decide it

to be. |

inPlaceUpdate | InPlaceUpdateMachineDeployment | (Optional) InPlaceUpdate update config params. Present only if MachineDeploymentStrategyType =

InPlaceUpdate. |

MachineDeploymentStrategyType

(string alias)

(Appears on:

MachineDeploymentStrategy)

MachineDeploymentStrategyType are valid strategy types for rolling MachineDeployments

MachineOperationType

(string alias)

(Appears on:

LastOperation)

MachineOperationType is a label for the operation performed on a machine object.

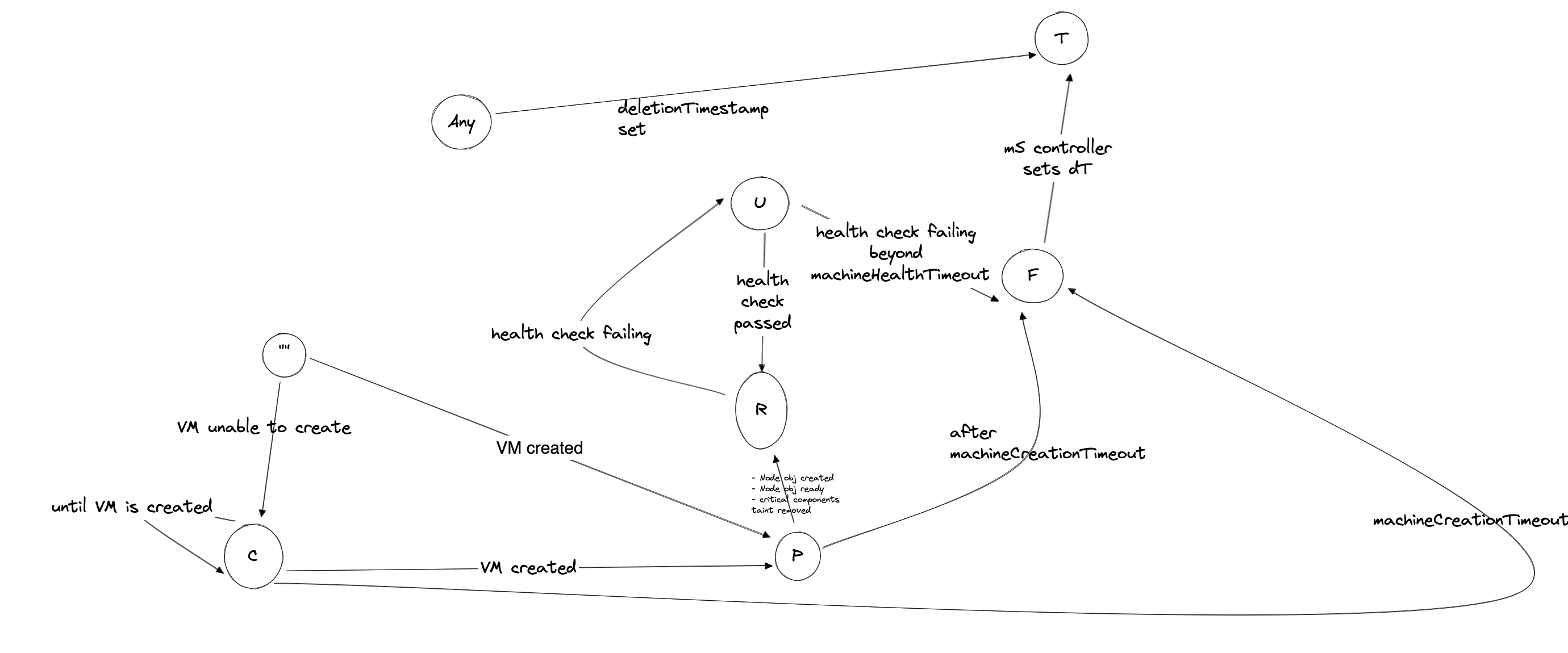

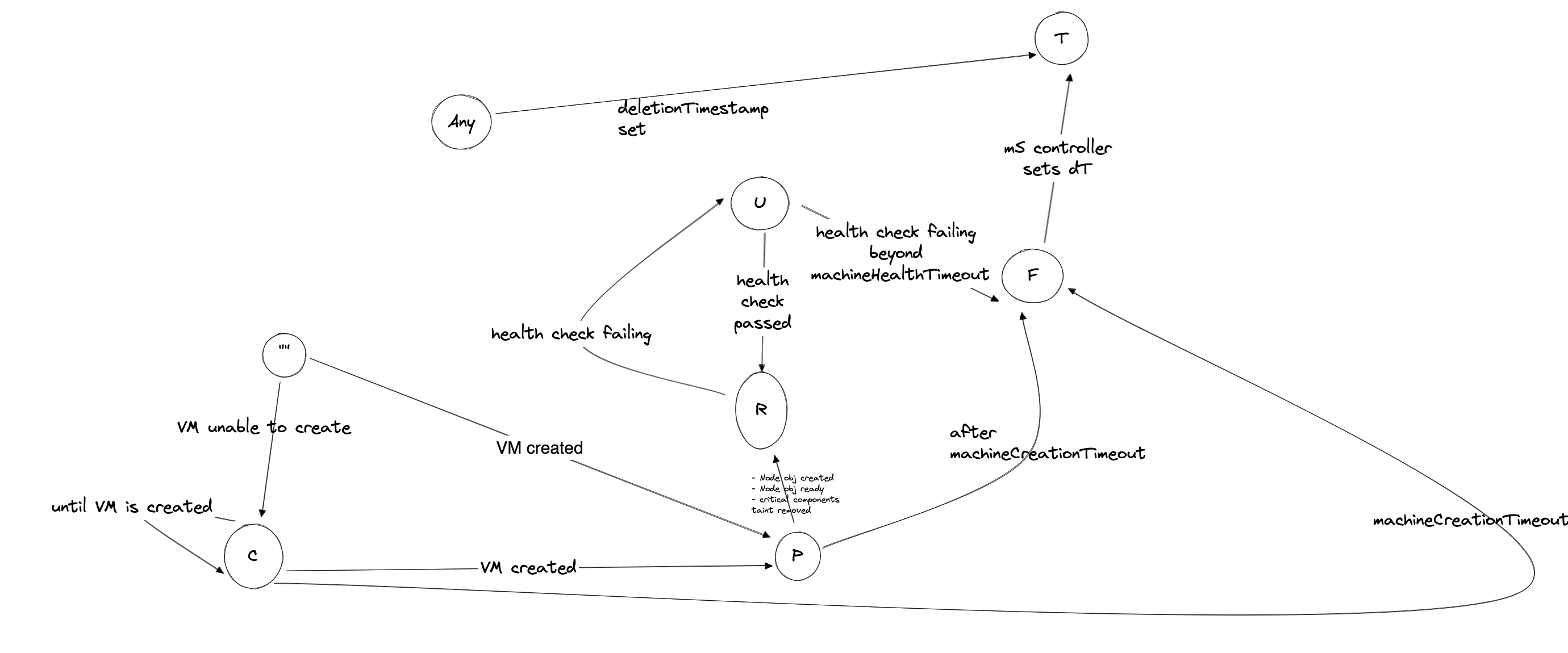

MachinePhase

(string alias)

(Appears on:

CurrentStatus)

MachinePhase is a label for the condition of a machine at the current time.

MachineSetCondition

(Appears on:

MachineSetStatus)

MachineSetCondition describes the state of a machine set at a certain point.

| Field | Type | Description |

|---|

type | MachineSetConditionType | Type of machine set condition. |

status | ConditionStatus | Status of the condition, one of True, False, Unknown. |

lastTransitionTime | Kubernetes meta/v1.Time | (Optional) The last time the condition transitioned from one status to another. |

reason | string | (Optional) The reason for the condition’s last transition. |

message | string | (Optional) A human readable message indicating details about the transition. |

MachineSetConditionType

(string alias)

(Appears on:

MachineSetCondition)

MachineSetConditionType is the condition on machineset object

MachineSetSpec

(Appears on:

MachineSet)

MachineSetSpec is the specification of a MachineSet.

MachineSetStatus

(Appears on:

MachineSet)

MachineSetStatus holds the most recently observed status of MachineSet.

| Field | Type | Description |

|---|

replicas | int32 | Replicas is the number of actual replicas. |

fullyLabeledReplicas | int32 | (Optional) The number of pods that have labels matching the labels of the pod template of the replicaset. |

readyReplicas | int32 | (Optional) The number of ready replicas for this replica set. |

availableReplicas | int32 | (Optional) The number of available replicas (ready for at least minReadySeconds) for this replica set. |

observedGeneration | int64 | (Optional) ObservedGeneration is the most recent generation observed by the controller. |

machineSetCondition | []MachineSetCondition | (Optional) Represents the latest available observations of a replica set’s current state. |

lastOperation | LastOperation | LastOperation performed |

failedMachines | []github.com/gardener/machine-controller-manager/pkg/apis/machine/v1alpha1.MachineSummary | (Optional) FailedMachines has summary of machines on which lastOperation Failed |

MachineSpec

(Appears on:

Machine,

MachineTemplateSpec)

MachineSpec is the specification of a Machine.

| Field | Type | Description |

|---|

class | ClassSpec | (Optional) Class contains the machineclass attributes of a machine |

providerID | string | (Optional) ProviderID represents the provider’s unique ID given to a machine |

nodeTemplate | NodeTemplateSpec | (Optional) NodeTemplateSpec describes the data a node should have when created from a template |

MachineConfiguration | MachineConfiguration | (Members of MachineConfiguration are embedded into this type.) (Optional)Configuration for the machine-controller. |

MachineState

(string alias)

(Appears on:

LastOperation)

MachineState is a current state of the operation.

MachineStatus

(Appears on:

Machine)

MachineStatus holds the most recently observed status of Machine.

| Field | Type | Description |

|---|

conditions | []Kubernetes core/v1.NodeCondition | Conditions of this machine, same as node |

lastOperation | LastOperation | Last operation refers to the status of the last operation performed |

currentStatus | CurrentStatus | Current status of the machine object |

lastKnownState | string | (Optional) LastKnownState can store details of the last known state of the VM by the plugins.

It can be used by future operation calls to determine current infrastucture state |

MachineSummary

MachineSummary store the summary of machine.

| Field | Type | Description |

|---|

name | string | Name of the machine object |

providerID | string | ProviderID represents the provider’s unique ID given to a machine |

lastOperation | LastOperation | Last operation refers to the status of the last operation performed |

ownerRef | string | OwnerRef |

MachineTemplateSpec

(Appears on:

MachineDeploymentSpec,

MachineSetSpec)

MachineTemplateSpec describes the data a machine should have when created from a template

NodeTemplate

(Appears on:

MachineClass)

NodeTemplate contains subfields to track all node resources and other node info required to scale nodegroup from zero

| Field | Type | Description |

|---|

capacity | Kubernetes core/v1.ResourceList | Capacity contains subfields to track all node resources required to scale nodegroup from zero |

instanceType | string | Instance type of the node belonging to nodeGroup |

region | string | Region of the expected node belonging to nodeGroup |

zone | string | Zone of the expected node belonging to nodeGroup |

architecture | *string | (Optional) CPU Architecture of the node belonging to nodeGroup |

NodeTemplateSpec

(Appears on:

MachineSpec)

NodeTemplateSpec describes the data a node should have when created from a template

| Field | Type | Description |

|---|

metadata | Kubernetes meta/v1.ObjectMeta | (Optional)

Refer to the Kubernetes API documentation for the fields of the

metadata field. |

spec | Kubernetes core/v1.NodeSpec | (Optional) NodeSpec describes the attributes that a node is created with.

podCIDR | string | (Optional) PodCIDR represents the pod IP range assigned to the node. | podCIDRs | []string | (Optional) podCIDRs represents the IP ranges assigned to the node for usage by Pods on that node. If this

field is specified, the 0th entry must match the podCIDR field. It may contain at most 1 value for

each of IPv4 and IPv6. | providerID | string | (Optional) ID of the node assigned by the cloud provider in the format: :// | unschedulable | bool | (Optional) Unschedulable controls node schedulability of new pods. By default, node is schedulable.

More info: https://kubernetes.io/docs/concepts/nodes/node/#manual-node-administration | taints | []Kubernetes core/v1.Taint | (Optional) If specified, the node’s taints. | configSource | Kubernetes core/v1.NodeConfigSource | (Optional) Deprecated: Previously used to specify the source of the node’s configuration for the DynamicKubeletConfig feature. This feature is removed. | externalID | string | (Optional) Deprecated. Not all kubelets will set this field. Remove field after 1.13.

see: https://issues.k8s.io/61966 |

|

OrchestrationType

(string alias)

(Appears on:

InPlaceUpdateMachineDeployment)

OrchestrationType specifies the orchestration type for the inplace update.

RollbackConfig

(Appears on:

MachineDeploymentSpec)

RollbackConfig is the config to rollback a MachineDeployment

| Field | Type | Description |

|---|

revision | int64 | (Optional) The revision to rollback to. If set to 0, rollback to the last revision. |

RollingUpdateMachineDeployment

(Appears on:

MachineDeploymentStrategy)

RollingUpdateMachineDeployment is the spec to control the desired behavior of rolling update.

| Field | Type | Description |

|---|

UpdateConfiguration | UpdateConfiguration | (Members of UpdateConfiguration are embedded into this type.) |

UpdateConfiguration

(Appears on:

InPlaceUpdateMachineDeployment,

RollingUpdateMachineDeployment)

UpdateConfiguration specifies the udpate configuration for the deployment strategy.

| Field | Type | Description |

|---|

maxUnavailable | k8s.io/apimachinery/pkg/util/intstr.IntOrString | (Optional) The maximum number of machines that can be unavailable during the update.

Value can be an absolute number (ex: 5) or a percentage of desired machines (ex: 10%).

Absolute number is calculated from percentage by rounding down.

This can not be 0 if MaxSurge is 0.

Example: when this is set to 30%, the old machine set can be scaled down to 70% of desired machines

immediately when the update starts. Once new machines are ready, old machine set

can be scaled down further, followed by scaling up the new machine set, ensuring

that the total number of machines available at all times during the update is at

least 70% of desired machines. |

maxSurge | k8s.io/apimachinery/pkg/util/intstr.IntOrString | (Optional) The maximum number of machines that can be scheduled above the desired number of

machines.

Value can be an absolute number (ex: 5) or a percentage of desired machines (ex: 10%).

This can not be 0 if MaxUnavailable is 0.

Absolute number is calculated from percentage by rounding up.

Example: when this is set to 30%, the new machine set can be scaled up immediately when

the update starts, such that the total number of old and new machines does not exceed

130% of desired machines. Once old machines have been killed,

new machine set can be scaled up further, ensuring that total number of machines running

at any time during the update is utmost 130% of desired machines. |

Generated with gen-crd-api-reference-docs

2 - Proposals

2.1 - Excess Reserve Capacity

Excess Reserve Capacity

Goal

Currently, autoscaler optimizes the number of machines for a given application-workload. Along with effective resource utilization, this feature brings concern where, many times, when new application instances are created - they don’t find space in existing cluster. This leads the cluster-autoscaler to create new machines via MachineDeployment, which can take from 3-4 minutes to ~10 minutes, for the machine to really come-up and join the cluster. In turn, application-instances have to wait till new machines join the cluster.

One of the promising solutions to this issue is Excess Reserve Capacity. Idea is to keep a certain number of machines or percent of resources[cpu/memory] always available, so that new workload, in general, can be scheduled immediately unless huge spike in the workload. Also, the user should be given enough flexibility to choose how many resources or how many machines should be kept alive and non-utilized as this affects the Cost directly.

Note

- We decided to go with Approach-4 which is based on low priority pods. Please find more details here: https://github.com/gardener/gardener/issues/254

- Approach-3 looks more promising in long term, we may decide to adopt that in future based on developments/contributions in autoscaler-community.

Possible Approaches

Following are the possible approaches, we could think of so far.

Approach 1: Enhance Machine-controller-manager to also entertain the excess machines

Machine-controller-manager currently takes care of the machines in the shoot cluster starting from creation-deletion-health check to efficient rolling-update of the machines. From the architecture point of view, MachineSet makes sure that X number of machines are always running and healthy. MachineDeployment controller smartly uses this facility to perform rolling-updates.

We can expand the scope of MachineDeployment controller to maintain excess number of machines by introducing new parallel independent controller named MachineTaint controller. This will result in MCM to include Machine, MachineSet, MachineDeployment, MachineSafety, MachineTaint controllers. MachineTaint controller does not need to introduce any new CRD - analogy fits where taint-controller also resides into kube-controller-manager.

Only Job of MachineTaint controller will be:

- List all the Machines under each MachineDeployment.

- Maintain taints of noSchedule and noExecute on

X latest MachineObjects. - There should be an event-based informer mechanism where MachineTaintController gets to know about any Update/Delete/Create event of MachineObjects - in turn, maintains the noSchedule and noExecute taints on all the latest machines.

- Why latest machines?

- Whenever autoscaler decides to add new machines - essentially ScaleUp event - taints from the older machines are removed and newer machines get the taints. This way X number of Machines immediately becomes free for new pods to be scheduled.

- While ScaleDown event, autoscaler specifically mentions which machines should be deleted, and that should not bring any concerns. Though we will have to put proper label/annotation defined by autoscaler on taintedMachines, so that autoscaler does not consider the taintedMachines for deletion while scale-down.

* Annotation on tainted node:

"cluster-autoscaler.kubernetes.io/scale-down-disabled": "true"

Implementation Details:

- Expect new optional field ExcessReplicas in

MachineDeployment.Spec. MachineDeployment controller now adds both Spec.Replicas and Spec.ExcessReplicas[if provided], and considers that as a standard desiredReplicas.

- Current working of MCM will not be affected if ExcessReplicas field is kept nil. - MachineController currently reads the NodeObject and sets the MachineConditions in MachineObject. Machine-controller will now also read the taints/labels from the MachineObject - and maintains it on the NodeObject.

We expect cluster-autoscaler to intelligently make use of the provided feature from MCM.

- CA gets the input of min:max:excess from Gardener. CA continues to set the

MachineDeployment.Spec.Replicas as usual based on the application-workload. - In addition, CA also sets the

MachieDeployment.Spec.ExcessReplicas . - Corner-case:

* CA should decrement the excessReplicas field accordingly when desiredReplicas+excessReplicas on MachineDeployment goes beyond max.

Approach 2: Enhance Cluster-autoscaler by simulating fake pods in it

- There was already an attempt by community to support this feature.

Approach 3: Enhance cluster-autoscaler to support pluggable scaling-events

- Forked version of cluster-autoscaler could be improved to plug-in the algorithm for excess-reserve capacity.

- Needs further discussion around upstream support.

- Create golang channel to separate the algorithms to trigger scaling (hard-coded in cluster-autoscaler, currently) from the algorithms about how to to achieve the scaling (already pluggable in cluster-autoscaler). This kind of separation can help us introduce/plug-in new algorithms (such as based node resource utilisation) without affecting existing code-base too much while almost completely re-using the code-base for the actual scaling.

- Also this approach is not specific to our fork of cluster-autoscaler. It can be made upstream eventually as well.

Approach 4: Make intelligent use of Low-priority pods

- Refer to: pod-priority-preemption

- TL; DR:

- High priority pods can preempt the low-priority pods which are already scheduled.

- Pre-create bunch[equivivalent of X shoot-control-planes] of low-priority pods with priority of zero, then start creating the workload pods with better priority which will reschedule the low-priority pods or otherwise keep them in pending state if the limit for max-machines has reached.

- This is still alpha feature.

2.2 - GRPC Based Implementation of Cloud Providers

GRPC based implementation of Cloud Providers - WIP

Goal:

Currently the Cloud Providers’ (CP) functionalities ( Create(), Delete(), List() ) are part of the Machine Controller Manager’s (MCM)repository. Because of this, adding support for new CPs into MCM requires merging code into MCM which may not be required for core functionalities of MCM itself. Also, for various reasons it may not be feasible for all CPs to merge their code with MCM which is an Open Source project.

Because of these reasons, it was decided that the CP’s code will be moved out in separate repositories so that they can be maintained separately by the respective teams. Idea is to make MCM act as a GRPC server, and CPs as GRPC clients. The CP can register themselves with the MCM using a GRPC service exposed by the MCM. Details of this approach is discussed below.

How it works:

MCM acts as GRPC server and listens on a pre-defined port 5000. It implements below GRPC services. Details of each of these services are mentioned in next section.

Register()GetMachineClass()GetSecret()

GRPC services exposed by MCM:

Register()

rpc Register(stream DriverSide) returns (stream MCMside) {}

The CP GRPC client calls this service to register itself with the MCM. The CP passes the kind and the APIVersion which it implements, and MCM maintains an internal map for all the registered clients. A GRPC stream is returned in response which is kept open througout the life of both the processes. MCM uses this stream to communicate with the client for machine operations: Create(), Delete() or List().

The CP client is responsible for reading the incoming messages continuously, and based on the operationType parameter embedded in the message, it is supposed to take the required action. This part is already handled in the package grpc/infraclient.

To add a new CP client, import the package, and implement the ExternalDriverProvider interface:

type ExternalDriverProvider interface {

Create(machineclass *MachineClassMeta, credentials, machineID, machineName string) (string, string, error)

Delete(machineclass *MachineClassMeta, credentials, machineID string) error

List(machineclass *MachineClassMeta, credentials, machineID string) (map[string]string, error)

}

GetMachineClass()

rpc GetMachineClass(MachineClassMeta) returns (MachineClass) {}

As part of the message from MCM for various machine operations, the name of the machine class is sent instead of the full machine class spec. The CP client is expected to use this GRPC service to get the full spec of the machine class. This optionally enables the client to cache the machine class spec, and make the call only if the machine calass spec is not already cached.

GetSecret()

rpc GetSecret(SecretMeta) returns (Secret) {}

As part of the message from MCM for various machine operations, the Cloud Config (CC) and CP credentials are not sent. The CP client is expected to use this GRPC service to get the secret which has CC and CP’s credentials from MCM. This enables the client to cache the CC and credentials, and to make the call only if the data is not already cached.

How to add a new Cloud Provider’s support

Import the package grpc/infraclient and grpc/infrapb from MCM (currently in MCM’s “grpc-driver” branch)

- Implement the interface

ExternalDriverProviderCreate(): Creates a new machineDelete(): Deletes a machineList(): Lists machines

- Use the interface

MachineClassDataProviderGetMachineClass(): Makes the call to MCM to get machine class specGetSecret(): Makes the call to MCM to get secret containing Cloud Config and CP’s credentials

Example implementation:

Refer GRPC based implementation for AWS client:

https://github.com/ggaurav10/aws-driver-grpc

2.3 - Hotupdate Instances

Motivation

MCM Issue#750 There is a requirement to provide a way for consumers to add tags which can be hot-updated onto VMs. This requirement can be generalized to also offer a convenient way to specify tags which can be applied to VMs, NICs, Devices etc.

MCM Issue#635 which in turn points to MCM-Provider-AWS Issue#36 - The issue hints at other fields like enable/disable source/destination checks for NAT instances which needs to be hot-updated on network interfaces.

In GCP provider - instance.ServiceAccounts can be updated without the need to roll-over the instance. See

Boundary Condition

All tags that are added via means other than MachineClass.ProviderSpec should be preserved as-is. Only updates done to tags in MachineClass.ProviderSpec should be applied to the infra resources (VM/NIC/Disk).

What is available today?

WorkerPool configuration inside shootYaml provides a way to set labels. As per the definition these labels will be applied on Node resources. Currently these labels are also passed to the VMs as tags. There is no distinction made between Node labels and VM tags.

MachineClass has a field which holds provider specific configuration and one such configuration is tags. Gardener provider extensions updates the tags in MachineClass.

Let us look at an example of MachineClass.ProviderSpec in AWS:

providerSpec:

ami: ami-02fe00c0afb75bbd3

tags:

#[section-1] pool lables added by gardener extension

#########################################################

kubernetes.io/arch: amd64

networking.gardener.cloud/node-local-dns-enabled: "true"

node.kubernetes.io/role: node

worker.garden.sapcloud.io/group: worker-ser234

worker.gardener.cloud/cri-name: containerd

worker.gardener.cloud/pool: worker-ser234

worker.gardener.cloud/system-components: "true"

#[section-2] Tags defined in the gardener-extension-provider-aws

###########################################################

kubernetes.io/cluster/cluster-full-name: "1"

kubernetes.io/role/node: "1"

#[section-3]

###########################################################

user-defined-key1: user-defined-val1

user-defined-key2: user-defined-val2

Refer src for tags defined in section-1.

Refer src for tags defined in section-2.

Tags in section-3 are defined by the user.

Out of the above three tag categories, MCM depends section-2 tags (mandatory-tags) for its orphan collection and Driver’s DeleteMachineand GetMachineStatus to work.

ProviderSpec.Tags are transported to the provider specific resources as follows:

| Provider | Resources Tags are set on | Code Reference | Comment |

|---|

| AWS | Instance(VM), Volume, Network-Interface | aws-VM-Vol-NIC | No distinction is made between tags set on VM, NIC or Volume |

| Azure | Instance(VM), Network-Interface | azure-VM-parameters & azureNIC-Parameters | |

| GCP | Instance(VM), 1 tag: name (denoting the name of the worker) is added to Disk | gcp-VM & gcp-Disk | In GCP key-value pairs are called labels while network tags have only keys |

| AliCloud | Instance(VM) | aliCloud-VM | |

What are the problems with the current approach?

There are a few shortcomings in the way tags/labels are handled:

- Tags can only be set at the time a machine is created.

- There is no distinction made amongst tags/labels that are added to VM’s, disks or network interfaces. As stated above for AWS same set of tags are added to all. There is a limit defined on the number of tags/labels that can be associated to the devices (disks, VMs, NICs etc). Example: In AWS a max of 50 user created tags are allowed. Similar restrictions are applied on different resources across providers. Therefore adding all tags to all devices even if the subset of tags are not meant for that resource exhausts the total allowed tags/labels for that resource.

- The only placeholder in shoot yaml as mentioned above is meant to only hold labels that should be applied on primarily on the Node objects. So while you could use the node labels for extended resources, using it also for tags is not clean.

- There is no provision in the shoot YAML today to add tags only to a subset of resources.

MachineClass Update and its impact

When Worker.ProviderConfig is changed then a worker-hash is computed which includes the raw ProviderConfig. This hash value is then used as a suffix when constructing the name for a MachineClass. See aws-extension-provider as an example. A change in the name of the MachineClass will then in-turn trigger a rolling update of machines. Since tags are provider specific and therefore will be part of ProviderConfig, any update to them will result in a rolling-update of machines.

Proposal

Shoot YAML changes

Provider specific configuration is set via providerConfig section for each worker pool.

Example worker provider config (current):

providerConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: WorkerConfig

volume:

iops: 10000

dataVolumes:

- name: kubelet-dir

snapshotID: snap-13234

iamInstanceProfile: # (specify either ARN or name)

name: my-profile

arn: my-instance-profile-arn

It is proposed that an additional field be added for tags under providerConfig. Proposed changed YAML:

providerConfig:

apiVersion: aws.provider.extensions.gardener.cloud/v1alpha1

kind: WorkerConfig

volume:

iops: 10000

dataVolumes:

- name: kubelet-dir

snapshotID: snap-13234

iamInstanceProfile: # (specify either ARN or name)

name: my-profile

arn: my-instance-profile-arn

tags:

vm:

key1: val1

key2: val2

..

# for GCP network tags are just keys (there is no value associated to them).

# What is shown below will work for AWS provider.

network:

key3: val3

key4: val4

Under tags clear distinction is made between tags for VMs, Disks, network interface etc. Each provider has a different allowed-set of characters that it accepts as key names, has different limits on the tags that can be set on a resource (disk, NIC, VM etc.) and also has a different format (GCP network tags are only keys).

TODO:

Check if worker.labels are getting added as tags on infra resources. We should continue to support it and double check that these should only be added to VMs and not to other resources.

Should we support users adding VM tags as node labels?

Provider specific WorkerConfig API changes

Taking AWS provider extension as an example to show the changes.

WorkerConfig will now have the following changes:

- A new field for tags will be introduced.

- Additional metadata for struct fields will now be added via

struct tags.

type WorkerConfig struct {

metav1.TypeMeta

Volume *Volume

// .. all fields are not mentioned here.

// Tags are a collection of tags to be set on provider resources (e.g. VMs, Disks, Network Interfaces etc.)

Tags *Tags `hotupdatable:true`

}

// Tags is a placeholder for all tags that can be set/updated on VMs, Disks and Network Interfaces.

type Tags struct {

// VM tags set on the VM instances.

VM map[string]string

// Network tags set on the network interfaces.

Network map[string]string

// Disk tags set on the volumes/disks.

Disk map[string]string

}

There is a need to distinguish fields within ProviderSpec (which is then mapped to the above WorkerConfig) which can be updated without the need to change the hash suffix for MachineClass and thus trigger a rolling update on machines.

To achieve that we propose to use struct tag hotupdatable whose value indicates if the field can be updated without the need to do a rolling update. To ensure backward compatibility, all fields which do not have this tag or have hotupdatable set to false will be considered as immutable and will require a rolling update to take affect.

Gardener provider extension changes

Taking AWS provider extension as an example. Following changes should be made to all gardener provider extensions

AWS Gardener Extension generates machine config using worker pool configuration. As part of that it also computes the workerPoolHash which is then used to create the name of the MachineClass.

Currently WorkerPoolHash function uses the entire providerConfig to compute the hash. Proposal is to do the following:

- Remove the code from function

WorkerPoolHash. - Add another function to compute hash using all immutable fields in the provider config struct and then pass that to

worker.WorkerPoolHash as additionalData.

The above will ensure that tags and any other field in WorkerConfig which is marked with updatable:true is not considered for hash computation and will therefore not contribute to changing the name of MachineClass object thus preventing a rolling update.

WorkerConfig and therefore the contained tags will be set as ProviderSpec in MachineClass.

If only fields which have updatable:true are changed then it should result in update/patch of MachineClass and not creation.

Driver interface changes

Driver interface which is a facade to provider specific API implementations will have one additional method.

type Driver interface {

// .. existing methods are not mentioned here for brevity.

UpdateMachine(context.Context, *UpdateMachineRequest) error

}

// UpdateMachineRequest is the request to update machine tags.

type UpdateMachineRequest struct {

ProviderID string

LastAppliedProviderSpec raw.Extension

MachineClass *v1alpha1.MachineClass

Secret *corev1.Secret

}

If any machine-controller-manager-provider-<providername> has not implemented UpdateMachine then updates of tags on Instances/NICs/Disks will not be done. An error message will be logged instead.

Machine Class reconciliation

Current MachineClass reconciliation does not reconcile MachineClass resource updates but it only enqueues associated machines. The reason is that it is assumed that anything that is changed in a MachineClass will result in a creation of a new MachineClass with a different name. This will result in a rolling update of all machines using the MachineClass as a template.

However, it is possible that there is data that all machines in a MachineSet share which do not require a rolling update (e.g. tags), therefore there is a need to reconcile the MachineClass as well.

Reconciliation Changes

In order to ensure that machines get updated eventually with changes to the hot-updatable fields defined in the MachineClass.ProviderConfig as raw.Extension.

We should only fix MCM Issue#751 in the MachineClass reconciliation and let it enqueue the machines as it does today. We additionally propose the following two things:

Introduce a new annotation last-applied-providerspec on every machine resource. This will capture the last successfully applied MachineClass.ProviderSpec on this instance.

Enhance the machine reconciliation to include code to hot-update machine.

In machine-reconciliation there are currently two flows triggerDeletionFlow and triggerCreationFlow. When a machine gets enqueued due to changes in MachineClass then in this method following changes needs to be introduced:

Check if the machine has last-applied-providerspec annotation.

Case 1.1

If the annotation is not present then there can be just 2 possibilities:

It is a fresh/new machine and no backing resources (VM/NIC/Disk) exist yet. The current flow checks if the providerID is empty and Status.CurrenStatus.Phase is empty then it enters into the triggerCreationFlow.

It is an existing machine which does not yet have this annotation. In this case call Driver.UpdateMachine. If the driver returns no error then add last-applied-providerspec annotation with the value of MachineClass.ProviderSpec to this machine.

Case 1.2

If the annotation is present then compare the last applied provider-spec with the current provider-spec. If there are changes (check their hash values) then call Driver.UpdateMachine. If the driver returns no error then add last-applied-providerspec annotation with the value of MachineClass.ProviderSpec to this machine.

NOTE: It is assumed that if there are changes to the fields which are not marked as hotupdatable then it will result in the change of name for MachineClass resulting in a rolling update of machines. If the name has not changed + machine is enqueued + there is a change in machine-class then it will be change to a hotupdatable fields in the spec.

Trigger update flow can be done after reconcileMachineHealth and syncMachineNodeTemplates in machine-reconciliation.

There are 2 edge cases that needs attention and special handling:

Premise: It is identified that there is an update done to one or more hotupdatable fields in the MachineClass.ProviderSpec.

Edge-Case-1

In the machine reconciliation, an update-machine-flow is triggered which in-turn calls Driver.UpdateMachine. Consider the case where the hot update needs to be done to all VM, NIC and Disk resources. The driver returns an error which indicates a partial-failure. As we have mentioned above only when Driver.UpdateMachine returns no error will last-applied-providerspec be updated. In case of partial failure the annotation will not be updated. This event will be re-queued for a re-attempt. However consider a case where before the item is re-queued, another update is done to MachineClass reverting back the changes to the original spec.

| At T1 | At T2 (T2 > T1) | At T3 (T3> T2) |

|---|

last-applied-providerspec=S1

MachineClass.ProviderSpec = S1 | last-applied-providerspec=S1

MachineClass.ProviderSpec = S2

Another update to MachineClass.ProviderConfig = S3 is enqueue (S3 == S1) | last-applied-providerspec=S1

Driver.UpdateMachine for S1-S2 update - returns partial failure

Machine-Key is requeued |

At T4 (T4> T3) when a machine is reconciled then it checks that last-applied-providerspec is S1 and current MachineClass.ProviderSpec = S3 and since S3 is same as S1, no update is done. At T2 Driver.UpdateMachine was called to update the machine with S2 but it partially failed. So now you will have resources which are partially updated with S2 and no further updates will be attempted.

Edge-Case-2

The above situation can also happen when Driver.UpdateMachine is in the process of updating resources. It has hot-updated lets say 1 resource. But now MCM crashes. By the time it comes up another update to MachineClass.ProviderSpec is done essentially reverting back the previous change (same case as above). In this case reconciliation loop never got a chance to get any response from the driver.

To handle the above edge cases there are 2 options:

Option #1

Introduce a new annotation inflight-providerspec-hash . The value of this annotation will be the hash value of the MachineClass.ProviderSpec that is in the process of getting applied on this machine. The machine will be updated with this annotation just before calling Driver.UpdateMachine (in the trigger-update-machine-flow). If the driver returns no error then (in a single update):

last-applied-providerspec will be updated

inflight-providerspec-hash annotation will be removed.

Option #2 - Preferred

Leverage Machine.Status.LastOperation with Type set to MachineOperationUpdate and State set to MachineStateProcessing This status will be updated just before calling Driver.UpdateMachine.

Semantically LastOperation captures the details of the operation post-operation and not pre-operation. So this solution would be a divergence from the norm.

2.4 - Initialize Machine

Post-Create Initialization of Machine Instance

Background

Today the driver.Driver facade represents the boundary between the the machine-controller and its various provider specific implementations.

We have abstract operations for creation/deletion and listing of machines (actually compute instances) but we do not correctly handle post-creation initialization logic. Nor do we provide an abstract operation to represent the hot update of an instance after creation.

We have found this to be necessary for several use cases. Today in the MCM AWS Provider, we already misuse driver.GetMachineStatus which is supposed to be a read-only operation obtaining the status of an instance.

Each AWS EC2 instance performs source/destination checks by default.

For EC2 NAT instances

these should be disabled. This is done by issuing

a ModifyInstanceAttribute request with the SourceDestCheck set to false. The MCM AWS Provider, decodes the AWSProviderSpec, reads providerSpec.SrcAndDstChecksEnabled and correspondingly issues the call to modify the already launched instance. However, this should be done as an action after creating the instance and should not be part of the VM status retrieval.

Similarly, there is a pending PR to add the Ipv6AddessCount and Ipv6PrefixCount to enable the assignment of an ipv6 address and an ipv6 prefix to instances. This requires constructing and issuing an AssignIpv6Addresses request after the EC2 instance is available.

We have other uses-cases such as MCM Issue#750 where there is a requirement to provide a way for consumers to add tags which can be hot-updated onto instances. This requirement can be generalized to also offer a convenient way to specify tags which can be applied to VMs, NICs, Devices etc.

We have a need for “machine-instance-not-ready” taint as described in MCM#740 which should only get removed once the post creation updates are finished.

Objectives

We will split the fulfilment of this overall need into 2 stages of implementation.

Stage-A: Support post-VM creation initialization logic of the instance suing a proposed Driver.InitializeMachine by permitting provider implementors to add initialization logic after VM creation, return with special new error code codes.Initialization for initialization errors and correspondingly support a new machine operation stage InstanceInitialization which will be updated in the machine LastOperation. The triggerCreationFlow - a reconciliation sub-flow of the MCM responsible for orchestrating instance creation and updating machine status will be changed to support this behaviour.

Stage-B: Introduction of Driver.UpdateMachine and enhancing the MCM, MCM providers and gardener extension providers to support hot update of instances through Driver.UpdateMachine. The MCM triggerUpdationFlow - a reconciliation sub-flow of the MCM which is supposed to be responsible for orchestrating instance update - but currently not used, will be updated to invoke the provider Driver.UpdateMachine on hot-updates to to the Machine object

Stage-A Proposal

Current MCM triggerCreationFlow

Today, reconcileClusterMachine which is the main routine for the Machine object reconciliation invokes triggerCreationFlow at the end when the machine.Spec.ProviderID is empty or if the machine.Status.CurrentStatus.Phase is empty or in CrashLoopBackOff

%%{ init: {

'themeVariables':

{ 'fontSize': '12px'}

} }%%

flowchart LR

other["..."]

-->chk{"machine ProviderID empty

OR

Phase empty or CrashLoopBackOff ?

"}--yes-->triggerCreationFlow

chk--noo-->LongRetry["return machineutils.LongRetry"]Today, the triggerCreationFlow is illustrated below with some minor details omitted/compressed for brevity

NOTES

- The

lastop below is an abbreviation for machine.Status.LastOperation. This, along with the machine phase is generally updated on the Machine object just before returning from the method. - regarding

phase=CrashLoopBackOff|Failed. the machine phase may either be CrashLoopBackOff or move to Failed if the difference between current time and the machine.CreationTimestamp has exceeded the configured MachineCreationTimeout.

%%{ init: {

'themeVariables':

{ 'fontSize': '12px'}

} }%%

flowchart TD

end1(("end"))

begin((" "))

medretry["return MediumRetry, err"]

shortretry["return ShortRetry, err"]

medretry-->end1

shortretry-->end1

begin-->AddBootstrapTokenToUserData

-->gms["statusResp,statusErr=driver.GetMachineStatus(...)"]

-->chkstatuserr{"Check statusErr"}

chkstatuserr--notFound-->chknodelbl{"Chk Node Label"}

chkstatuserr--else-->createFailed["lastop.Type=Create,lastop.state=Failed,phase=CrashLoopBackOff|Failed"]-->medretry

chkstatuserr--nil-->initnodename["nodeName = statusResp.NodeName"]-->setnodename

chknodelbl--notset-->createmachine["createResp, createErr=driver.CreateMachine(...)"]-->chkCreateErr{"Check createErr"}

chkCreateErr--notnil-->createFailed

chkCreateErr--nil-->getnodename["nodeName = createResp.NodeName"]

-->chkstalenode{"nodeName != machine.Name\n//chk stale node"}

chkstalenode--false-->setnodename["if unset machine.Labels['node']= nodeName"]

-->machinepending["if empty/crashloopbackoff lastop.type=Create,lastop.State=Processing,phase=Pending"]

-->shortretry

chkstalenode--true-->delmachine["driver.DeleteMachine(...)"]

-->permafail["lastop.type=Create,lastop.state=Failed,Phase=Failed"]

-->shortretry

subgraph noteA [" "]

permafail -.- note1(["VM was referring to stale node obj"])

end

style noteA opacity:0

subgraph noteB [" "]

setnodename-.- note2(["Proposal: Introduce Driver.InitializeMachine after this"])

endEnhancement of MCM triggerCreationFlow

Relevant Observations on Current Flow

- Observe that we always perform a call to

Driver.GetMachineStatus and only then conditionally perform a call to Driver.CreateMachine if there was was no machine found. - Observe that after the call to a successful

Driver.CreateMachine, the machine phase is set to Pending, the LastOperation.Type is currently set to Create and the LastOperation.State set to Processing before returning with a ShortRetry. The LastOperation.Description is (unfortunately) set to the fixed message: Creating machine on cloud provider. - Observe that after an erroneous call to

Driver.CreateMachine, the machine phase is set to CrashLoopBackOff or Failed (in case of creation timeout).

The following changes are proposed with a view towards minimal impact on current code and no introduction of a new Machine Phase.

MCM Changes

- We propose introducing a new machine operation

Driver.InitializeMachine with the following signaturetype Driver interface {

// .. existing methods are omitted for brevity.

// InitializeMachine call is responsible for post-create initialization of the provider instance.

InitializeMachine(context.Context, *InitializeMachineRequest) error

}

// InitializeMachineRequest is the initialization request for machine instance initialization

type InitializeMachineRequest struct {

// Machine object whose VM instance should be initialized

Machine *v1alpha1.Machine

// MachineClass backing the machine object

MachineClass *v1alpha1.MachineClass

// Secret backing the machineClass object

Secret *corev1.Secret

}

- We propose introducing a new MC error code

codes.Initialization indicating that the VM Instance was created but there was an error in initialization after VM creation. The implementor of Driver.InitializeMachine can return this error code, indicating that InitializeMachine needs to be called again. The Machine Controller will change the phase to CrashLoopBackOff as usual when encountering a codes.Initialization error. - We will introduce a new machine operation stage

InstanceInitialization. In case of an codes.Initialization error- the

machine.Status.LastOperation.Description will be set to InstanceInitialization, machine.Status.LastOperation.ErrorCode will be set to codes.Initialization- the

LastOperation.Type will be set to Create - the

LastOperation.State set to Failed before returning with a ShortRetry

- The semantics of

Driver.GetMachineStatus will be changed. If the instance associated with machine exists, but the instance was not initialized as expected, the provider implementations of GetMachineStatus should return an error: status.Error(codes.Initialization). - If

Driver.GetMachineStatus returned an error encapsulating codes.Initialization then Driver.InitializeMachine will be invoked again in the triggerCreationFlow. - As according to the usual logic, the main machine controller reconciliation loop will now re-invoke the

triggerCreationFlow again if the machine phase is CrashLoopBackOff.

Illustration

AWS Provider Changes

Driver.InitializeMachine

The implementation for the AWS Provider will look something like:

- After the VM instance is available, check

providerSpec.SrcAndDstChecksEnabled, construct ModifyInstanceAttributeInput and call ModifyInstanceAttribute. In case of an error return codes.Initialization instead of the current codes.Internal - Check

providerSpec.NetworkInterfaces and if Ipv6PrefixCount is not nil, then construct AssignIpv6AddressesInput and call AssignIpv6Addresses. In case of an error return codes.Initialization. Don’t use the generic codes.Internal

The existing Ipv6 PR will need modifications.

Driver.GetMachineStatus

- If

providerSpec.SrcAndDstChecksEnabled is false, check ec2.Instance.SourceDestCheck. If it does not match then return status.Error(codes.Initialization) - Check

providerSpec.NetworkInterfaces and if Ipv6PrefixCount is not nil, check ec2.Instance.NetworkInterfaces and check if InstanceNetworkInterface.Ipv6Addresses has a non-nil slice. If this is not the case then return status.Error(codes.Initialization)

Instance Not Ready Taint

- Due to the fact that creation flow for machines will now be enhanced to correctly support post-creation startup logic, we should not scheduled workload until this startup logic is complete. Even without this feature we have a need for such a taint as described in MCM#740

- We propose a new taint

node.machine.sapcloud.io/instance-not-ready which will be added as a node startup taint in gardener core KubeletConfiguration.RegisterWithTaints - The will will then removed by MCM in health check reconciliation, once the machine becomes fully ready. (when moving to

Running phase) - We will add this taint as part of

--ignore-taint in CA - We will introduce a disclaimer / prerequisite in the MCM FAQ, to add this taint as part of kubelet config under

--register-with-taints, otherwise workload could get scheduled , before machine beomes Running

Stage-B Proposal

Enhancement of Driver Interface for Hot Updation

Kindly refer to the Hot-Update Instances design which provides elaborate detail.

3 - To-Do

3.1 - Outline

Machine Controller Manager

CORE – ./machine-controller-manager(provider independent)

Out of tree : Machine controller (provider specific)

MCM is a set controllers:

Questions and refactoring Suggestions

Refactoring

| Statement | FilePath | Status |

|---|

ConcurrentNodeSyncs” bad name - nothing to do with node syncs actually.

If its value is ’10’ then it will start 10 goroutines (workers) per resource type (machine, machinist, machinedeployment, provider-specific-class, node - study the different resource types. | cmd/machine-controller-manager/app/options/options.go | pending |

| LeaderElectionConfiguration is very similar to the one present in “client-go/tools/leaderelection/leaderelection.go” - can we simply used the one in client-go instead of defining again? | pkg/options/types.go - MachineControllerManagerConfiguration | pending |

| Have all userAgents as constant. Right now there is just one. | cmd/app/controllermanager.go | pending |

| Shouldn’t run function be defined on MCMServer struct itself? | cmd/app/controllermanager.go | pending |

| clientcmd.BuildConfigFromFlags fallsback to inClusterConfig which will surely not work as that is not the target. Should it not check and exit early? | cmd/app/controllermanager.go - run Function | pending |

A more direct way to create an in cluster config is using k8s.io/client-go/rest -> rest.InClusterConfig instead of using clientcmd.BuildConfigFromFlags passing empty arguments and depending upon the implementation to fallback to creating a inClusterConfig. If they change the implementation that you get affected. | cmd/app/controllermanager.go - run Function | pending |

| Introduce a method on MCMServer which gets a target KubeConfig and controlKubeConfig or alternatively which creates respective clients. | cmd/app/controllermanager.go - run Function | pending |

| Why can’t we use Kubernetes.NewConfigOrDie also for kubeClientControl? | cmd/app/controllermanager.go - run Function | pending |

| I do not see any benefit of client builders actually. All you need to do is pass in a config and then directly use client-go functions to create a client. | cmd/app/controllermanager.go - run Function | pending |

| Function: getAvailableResources - rename this to getApiServerResources | cmd/app/controllermanager.go | pending |

| Move the method which waits for API server to up and ready to a separate method which returns a discoveryClient when the API server is ready. | cmd/app/controllermanager.go - getAvailableResources function | pending |

| Many methods in client-go used are now deprecated. Switch to the ones that are now recommended to be used instead. | cmd/app/controllermanager.go - startControllers | pending |

| This method needs a general overhaul | cmd/app/controllermanager.go - startControllers | pending |

| If the design is influenced/copied from KCM then its very different. There are different controller structs defined for deployment, replicaset etc which makes the code much more clearer. You can see “kubernetes/cmd/kube-controller-manager/apps.go” and then follow the trail from there. - agreed needs to be changed in future (if time permits) | pkg/controller/controller.go | pending |

| I am not sure why “MachineSetControlInterface”, “RevisionControlInterface”, “MachineControlInterface”, “FakeMachineControl” are defined in this file? | pkg/controller/controller_util.go | pending |

IsMachineActive - combine the first 2 conditions into one with OR. | pkg/controller/controller_util.go | pending |

| Minor change - correct the comment, first word should always be the method name. Currently none of the comments have correct names. | pkg/controller/controller_util.go | pending |

| There are too many deep copies made. What is the need to make another deep copy in this method? You are not really changing anything here. | pkg/controller/deployment.go - updateMachineDeploymentFinalizers | pending |

| Why can’t these validations be done as part of a validating webhook? | pkg/controller/machineset.go - reconcileClusterMachineSet | pending |

Small change to the following if condition. else if is not required a simple else is sufficient. Code1 | | |

| pkg/controller/machineset.go - reconcileClusterMachineSet | pending | |

Why call these inactiveMachines, these are live and running and therefore active. | pkg/controller/machineset.go - terminateMachines | pending |

Clarification

| Statement | FilePath | Status |

|---|

| Why are there 2 versions - internal and external versions? | General | pending |

Safety controller freezes MCM controllers in the following cases:

* Num replicas go beyond a threshold (above the defined replicas)

* Target API service is not reachable

There seems to be an overlap between DWD and MCM Safety controller. In the meltdown scenario why is MCM being added to DWD, you could have used Safety controller for that. | General | pending |

| All machine resources are v1alpha1 - should we not promote it to beta. V1alpha1 has a different semantic and does not give any confidence to the consumers. | cmd/app/controllermanager.go | pending |

Shouldn’t controller manager use context.Context instead of creating a stop channel? - Check if signals (os.Interrupt and SIGTERM are handled properly. Do not see code where this is handled currently.) | cmd/app/controllermanager.go | pending |

| What is the rationale behind a timeout of 10s? If the API server is not up, should this not just block as it can anyways not do anything. Also, if there is an error returned then you exit the MCM which does not make much sense actually as it will be started again and you will again do the poll for the API server to come back up. Forcing an exit of MCM will not have any impact on the reachability of the API server in anyway so why exit? | cmd/app/controllermanager.go - getAvailableResources | pending |

There is a very weird check - availableResources[machineGVR] || availableResources[machineSetGVR] || availableResources[machineDeploymentGVR]

Shouldn’t this be conjunction instead of disjunction?

* What happens if you do not find one or all of these resources?

Currently an error log is printed and nothing else is done. MCM can be used outside gardener context where consumers can directly create MachineClass and Machine and not create MachineSet / Maching Deployment. There is no distinction made between context (gardener or outside-gardener).

| cmd/app/controllermanager.go - StartControllers | pending |

| Instead of having an empty select {} to block forever, isn’t it better to wait on the stop channel? | cmd/app/controllermanager.go - StartControllers | pending |

| Do we need provider specific queues and syncs and listers | pkg/controller/controller.go | pending |

| Why are resource types prefixed with “Cluster”? - not sure , check PR | pkg/controller/controller.go | pending |

| When will forgetAfterSuccess be false and why? - as per the current code this is never the case. - Himanshu will check | cmd/app/controllermanager.go - createWorker | pending |

What is the use of “ExpectationsInterface” and “UIDTrackingContExpectations”?

* All expectations related code should be in its own file “expectations.go” and not in this file. | pkg/controller/controller_util.go | pending |

| Why do we not use lister but directly use the controlMachingClient to get the deployment? Is it because you want to avoid any potential delays caused by update of the local cache held by the informer and accessed by the lister? What is the load on API server due to this? | pkg/controller/deployment.go - reconcileClusterMachineDeployment | pending |

| Why is this conversion needed? code2 | pkg/controller/deployment.go - reconcileClusterMachineDeployment | pending |

A deep copy of machineDeployment is already passed and within the function another deepCopy is made. Any reason for it? | pkg/controller/deployment.go - addMachineDeploymentFinalizers | pending |

What is an Status.ObservedGeneration?

*Read more about generations and observedGeneration at:

https://github.com/kubernetes/community/blob/master/contributors/devel/sig-architecture/api-conventions.md#metadata

https://alenkacz.medium.com/kubernetes-operator-best-practices-implementing-observedgeneration-250728868792

Ideally the update to the ObservedGeneration should only be made after successful reconciliation and not before. I see that this is just copied from deployment_controller.go as is | pkg/controller/deployment.go - reconcileClusterMachineDeployment | pending |

Why and when will a MachineDeployment be marked as frozen and when will it be un-frozen? | pkg/controller/deployment.go - reconcileClusterMachineDeployment | pending |

| Shoudn’t the validation of the machine deployment be done during the creation via a validating webhook instead of allowing it to be stored in etcd and then failing the validation during sync? I saw the checks and these can be done via validation webhook. | pkg/controller/deployment.go - reconcileClusterMachineDeployment | pending |

| RollbackTo has been marked as deprecated. What is the replacement? code3 | pkg/controller/deployment.go - reconcileClusterMachineDeployment | pending |

What is the max machineSet deletions that you could process in a single run? The reason for asking this question is that for every machineSetDeletion a new goroutine spawned.

* Is the Delete call a synchrounous call? Which means it blocks till the machineset deletion is triggered which then also deletes the machines (due to cascade-delete and blockOwnerDeletion= true)? | pkg/controller/deployment.go - terminateMachineSets | pending |

If there are validation errors or error when creating label selector then a nil is returned. In the worker reconcile loop if the return value is nil then it will remove it from the queue (forget + done). What is the way to see any errors? Typically when we describe a resource the errors are displayed. Will these be displayed when we discribe a MachineDeployment? | pkg/controller/deployment.go - reconcileClusterMachineSet | pending |

If an error is returned by updateMachineSetStatus and it is IsNotFound error then returning an error will again queue the MachineSet. Is this desired as IsNotFound indicates the MachineSet has been deleted and is no longer there? | pkg/controller/deployment.go - reconcileClusterMachineSet | pending |

is machineControl.DeleteMachine a synchronous operation which will wait till the machine has been deleted? Also where is the DeletionTimestamp set on the Machine? Will it be automatically done by the API server? | pkg/controller/deployment.go - prepareMachineForDeletion | pending |

Bugs/Enhancements

| Statement + TODO | FilePath | Status |

|---|

| This defines QPS and Burst for its requests to the KAPI. Check if it would make sense to explicitly define a FlowSchema and PriorityLevelConfiguration to ensure that the requests from this controller are given a well-defined preference. What is the rational behind deciding these values? | pkg/options/types.go - MachineControllerManagerConfiguration | pending |

| In function “validateMachineSpec” fldPath func parameter is never used. | pkg/apis/machine/validation/machine.go | pending |

| If there is an update failure then this method recursively calls itself without any sort of delays which could lead to a LOT of load on the API server. (opened: https://github.com/gardener/machine-controller-manager/issues/686) | pkg/controller/deployment.go - updateMachineDeploymentFinalizers | pending |

We are updating filteredMachines by invoking syncMachinesNodeTemplates, syncMachinesConfig and syncMachinesClassKind but we do not create any deepCopy here. Everywhere else the general principle is when you mutate always make a deepCopy and then mutate the copy instead of the original as a lister is used and that changes the cached copy.

Fix: SatisfiedExpectations check has been commented and there is a TODO there to fix it. Is there a PR for this? | pkg/controller/machineset.go - reconcileClusterMachineSet | pending |

Code references

1.1 code1

if machineSet.DeletionTimestamp == nil {

// manageReplicas is the core machineSet method where scale up/down occurs

// It is not called when deletion timestamp is set

manageReplicasErr = c.manageReplicas(ctx, filteredMachines, machineSet)

} else if machineSet.DeletionTimestamp != nil {

//FIX: change this to simple else without the if

1.2 code2

defer dc.enqueueMachineDeploymentAfter(deployment, 10*time.Minute)